Insurers betting big on AI: Accenture (AI News)
New research from Accenture has found that insurance executives plan to increase investment in AI during 2026 despite a widening skills gap in insurance organisations. Accenture's Pulse of Change survey of 3,650 C-suite leaders across 20 industries and 20 countries found that 90% of the 218 senior insurance executives polled intend to spend more on AI over […]

The post Insurers betting big on AI: Accenture appeared first on AI News.

New research from Accenture has discovered insurance executives are planning on increased investment into AI during 2026 despite a widening skills gap in insurance organisations. Surveying 3,650 C-suite leaders over 20 industries and 20 countries, the Pulse of Change poll revealed 90% of the 218 senior insurance executives intend to spend more on AI over

The post Insurers betting big on AI: Accenture appeared first on AI News. Read More

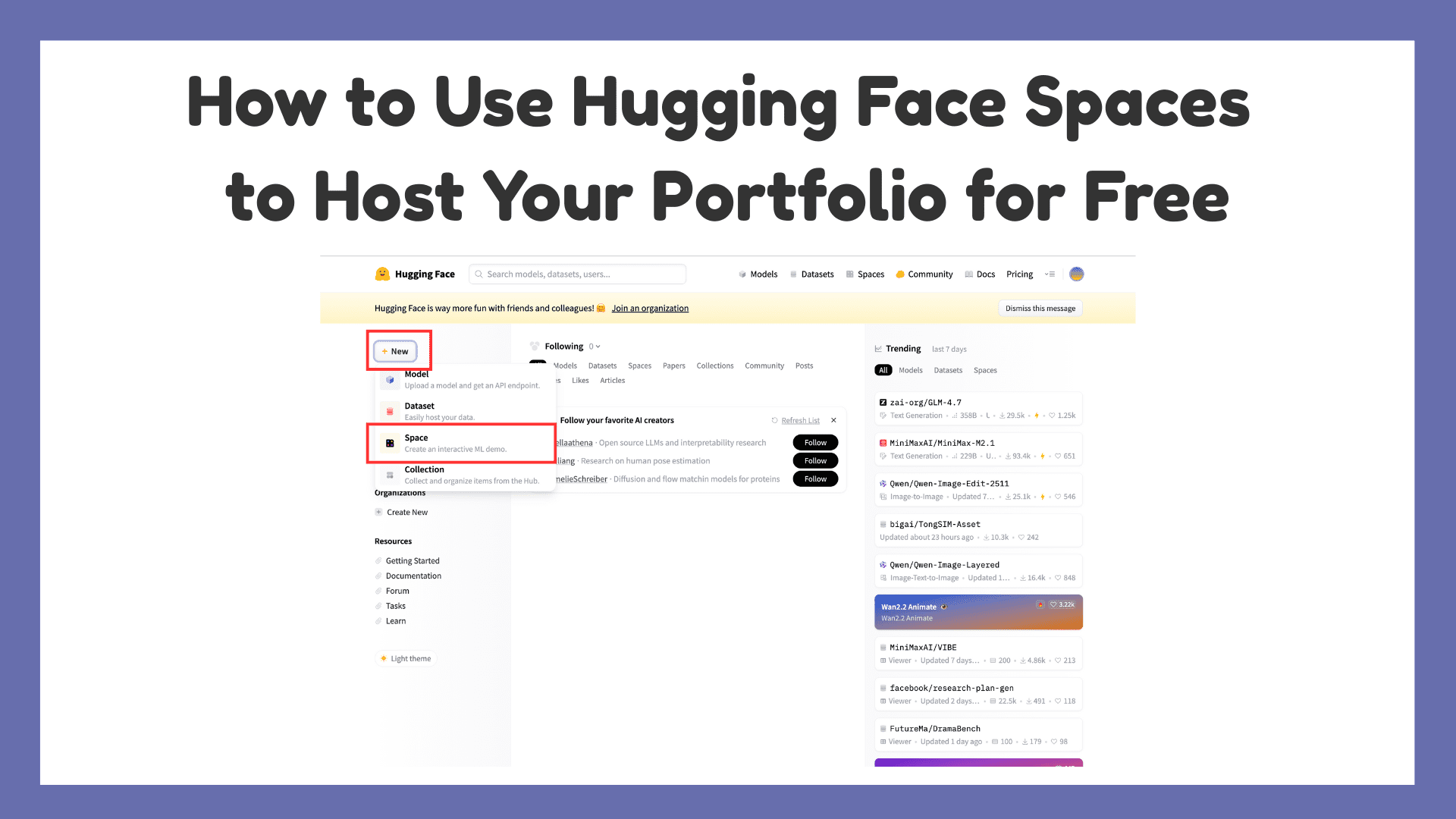

How to Use Hugging Face Spaces to Host Your Portfolio for Free (KDnuggets)
Hugging Face Spaces is a free way to host a portfolio with live demos, and this article walks through setting one up step by step.

RoPE, Clearly Explained (Towards Data Science)
Going beyond the math to build intuition.

The post RoPE, Clearly Explained appeared first on Towards Data Science.
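
The blurb promises intuition rather than formulas. As a rough illustration, and not the article's own code, here is a minimal NumPy sketch of rotary position embeddings (RoPE). It assumes the common formulation in which each dimension pair (2i, 2i+1) is rotated by an angle of position × base^(-2i/d), with base = 10000; some libraries pair the dimensions differently (for example, first half against second half). The function name `rope` is hypothetical.

```python
import numpy as np


def rope(x: np.ndarray, positions: np.ndarray, base: float = 10000.0) -> np.ndarray:
    """Hypothetical helper: apply rotary position embeddings to x of shape (seq_len, dim).

    Assumes the interleaved-pair convention: dims (0,1), (2,3), ... are each
    rotated by angle position * base**(-2i/dim). dim must be even.
    """
    seq_len, dim = x.shape
    if dim % 2:
        raise ValueError("dim must be even")
    # One rotation frequency per dimension pair: theta_i = base^(-2i/dim)
    inv_freq = base ** (-np.arange(0, dim, 2) / dim)   # shape (dim/2,)
    angles = np.outer(positions, inv_freq)             # shape (seq_len, dim/2)
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]             # the two halves of each pair
    out = np.empty_like(x, dtype=float)
    # Standard 2D rotation applied to every (even, odd) pair
    out[:, 0::2] = x_even * cos - x_odd * sin
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out


# Usage: the attention score depends only on the offset between positions
rng = np.random.default_rng(0)
q, k = rng.normal(size=(1, 8)), rng.normal(size=(1, 8))
score_a = (rope(q, np.array([5])) @ rope(k, np.array([2])).T).item()
score_b = (rope(q, np.array([13])) @ rope(k, np.array([10])).T).item()
print(np.isclose(score_a, score_b))  # same offset (3), same score: True
```

Because each pair is rotated by an angle proportional to its position, the dot product of a rotated query at position m and a rotated key at position n depends only on m - n, and the rotation leaves vector norms unchanged.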

7 Scikit-learn Tricks for Hyperparameter Tuning (KDnuggets)
Ready to learn these 7 Scikit-learn tricks that will take your machine learning models’ hyperparameter tuning skills to the next level?
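
The blurb does not list the seven tricks, so the following is only a hedged sketch of three widely used techniques, not necessarily the article's: tuning a `Pipeline` so preprocessing is refit inside each cross-validation fold, sampling the regularization strength on a log scale with `RandomizedSearchCV` and SciPy's `loguniform`, and fixing seeds so splits and samples are reproducible. The dataset (scikit-learn's bundled breast cancer data), the model, and the search range are illustrative assumptions.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RandomizedSearchCV, StratifiedKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Illustrative dataset bundled with scikit-learn (no download needed)
X, y = load_breast_cancer(return_X_y=True)

# Putting the scaler inside the pipeline means each CV fold fits it
# only on that fold's training split, avoiding leakage.
pipe = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=5000)),
])

# "step__param" names reach into pipeline steps; C is sampled log-uniformly
# (assumed range 1e-3 to 1e2) instead of from a hand-picked grid.
param_distributions = {"clf__C": loguniform(1e-3, 1e2)}

search = RandomizedSearchCV(
    pipe,
    param_distributions=param_distributions,
    n_iter=20,  # 20 sampled candidates instead of an exhaustive grid
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    scoring="roc_auc",
    random_state=0,  # reproducible parameter sampling
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Random search over a log-scaled distribution usually covers several orders of magnitude with far fewer fits than a dense grid, which is why it is a common first step before any finer-grained tuning.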

Optimizing Vector Search: Why You Should Flatten Structured Data (Towards Data Science)
An analysis of how flattening structured data can boost precision and recall by up to 20%.

The post Optimizing Vector Search: Why You Should Flatten Structured Data appeared first on Towards Data Science.
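
The blurb does not spell out the article's method. One plausible reading, sketched here under that assumption, is to serialize each nested record into labeled `path: value` lines before embedding it, so that field names travel with their values. The helper names `flatten` and `to_embedding_text` and the sample record are hypothetical, and the sketch does not reproduce the article's reported precision and recall gains.

```python
from typing import Any


def flatten(record: Any, prefix: str = "") -> dict[str, str]:
    """Hypothetical helper: flatten nested dicts/lists into {dotted.path: value} pairs."""
    items: dict[str, str] = {}
    if isinstance(record, dict):
        for key, value in record.items():
            path = f"{prefix}.{key}" if prefix else str(key)
            items.update(flatten(value, path))
    elif isinstance(record, list):
        # List elements get an index suffix, e.g. tags[0]
        for i, value in enumerate(record):
            items.update(flatten(value, f"{prefix}[{i}]"))
    else:
        # Leaf value: store as text under its full path
        items[prefix] = str(record)
    return items


def to_embedding_text(record: dict) -> str:
    """Hypothetical helper: one 'path: value' line per field, ready to pass to an embedding model."""
    return "\n".join(f"{path}: {value}" for path, value in flatten(record).items())


# Usage with a made-up product record
product = {
    "name": "Trail Runner",
    "specs": {"weight_g": 280, "drop_mm": 6},
    "tags": ["running", "trail"],
}
print(to_embedding_text(product))
```

Keeping the full path (`specs.weight_g`) next to each value gives the embedding model context that a bare `280` would lack; the resulting text would then go to whatever embedding model the pipeline already uses.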

Beyond the Chatbox: Generative UI, AG-UI, and the Stack Behind Agent-Driven Interfaces (MarkTechPost)
Most AI applications still present the model as a chat box. That interface is simple, but it hides what agents are actually doing, such as planning steps, calling tools, and updating state. Generative UI is about letting the agent drive real interface elements, for example tables, charts, forms, and progress indicators, so the experience feels […]

The post Beyond the Chatbox: Generative UI, AG-UI, and the Stack Behind Agent-Driven Interfaces appeared first on MarkTechPost.

Project Genie: Experimenting with infinite, interactive worlds (Google DeepMind News)
Google AI Ultra subscribers in the U.S. can try out Project Genie, an experimental research prototype that lets you create and explore worlds.

Microsoft has linked recent reports of Windows 11 boot failures after installing the January 2026 updates to previously failed attempts to install the December 2025 security update, which left systems in an “improper state.” […]

If an attacker splits a malicious prompt into discrete chunks, some large language models (LLMs) will get lost in the details and miss the true intent.

A new Android malware campaign is using the Hugging Face platform as a repository for thousands of variations of an APK payload that collects credentials for popular financial and payment services. […]