EU AI Act Risk Assessment Checklist and Template

A structured framework designed to support organizations navigating EU AI Act compliance requirements across all implementation stages.


This checklist provides a structured approach for documenting AI system compliance with the EU AI Act. The template walks you through each phase of the compliance lifecycle, from initial system classification through ongoing post-market monitoring. Organizations will need to customize each section based on their specific AI systems, use cases, and organizational context.

The document includes fillable fields, checkbox tracking systems, and article references to help teams organize their compliance documentation. Expect to invest time adapting the framework to your particular circumstances and consulting with legal counsel on specific regulatory interpretations.

Key Benefits

- ✓ Provides a structured framework for EU AI Act compliance documentation

- ✓ Includes a stage-gated implementation approach covering five lifecycle phases

- ✓ Contains specific EU AI Act article references for each requirement section

- ✓ Offers a checklist format for tracking documentation completion status

- ✓ Includes risk scoring matrices and vulnerable groups impact assessment tools

- ✓ Covers both high-risk AI systems and General-Purpose AI Model (GPAI) requirements

- ✓ Provides 10-year document retention tracking aligned with Article 18 requirements

Who Uses This?

This template is designed for:

- AI system providers and developers working with EU markets

- Compliance officers responsible for AI governance programs

- Risk managers assessing AI system regulatory requirements

- Legal and regulatory affairs teams advising on EU AI Act obligations

- Organizations deploying high-risk AI systems under Annex III categories

Preview

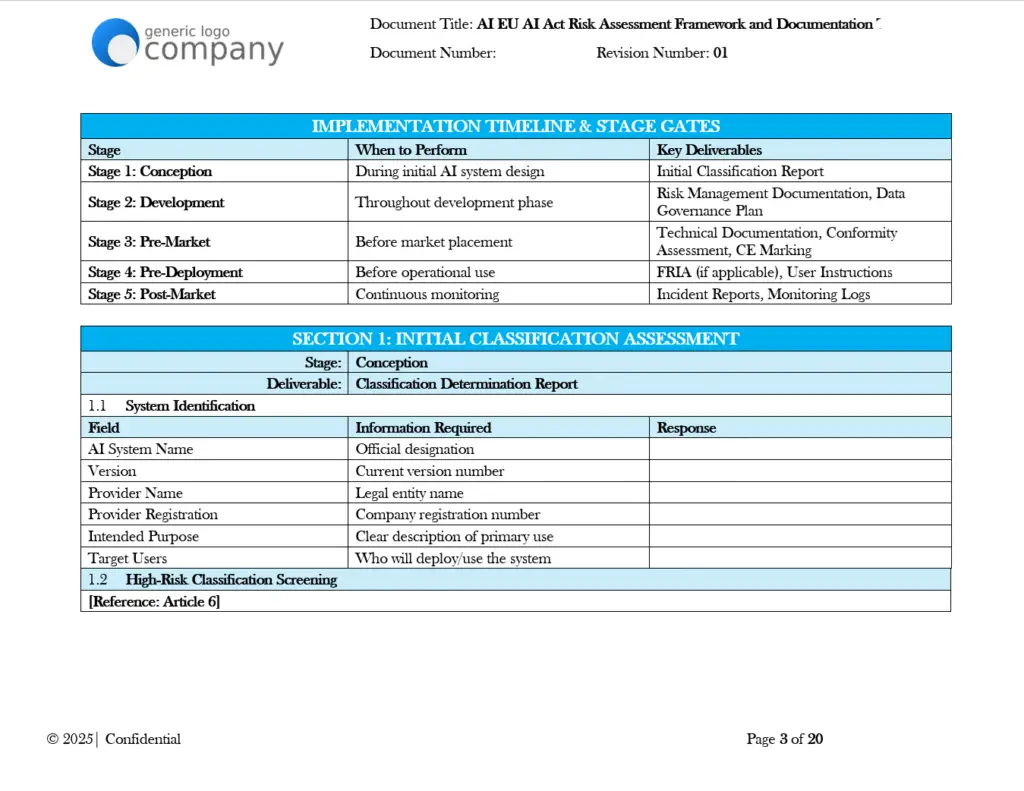

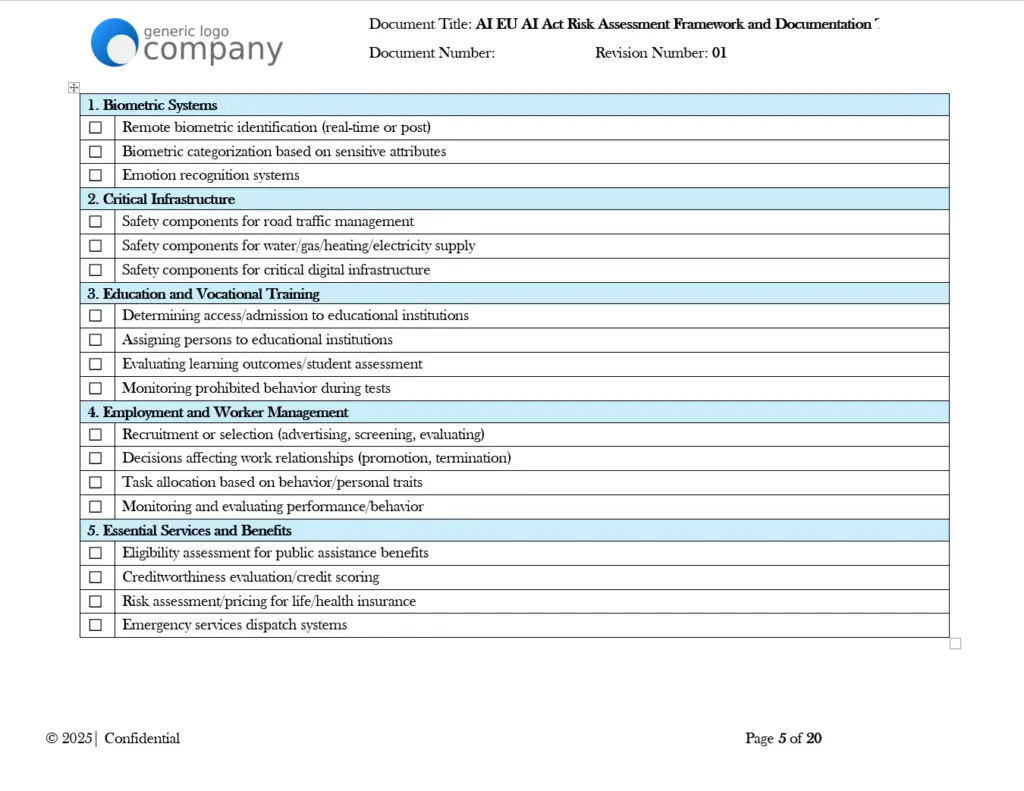

The template includes 10 main sections with fillable checklists, risk matrices, and documentation tracking fields. Key components include high-risk classification screening, Annex III category checks, data governance assessment frameworks, human oversight design requirements, and Fundamental Rights Impact Assessment (FRIA) guidance.

Why This Matters

The EU AI Act establishes legal requirements for AI systems placed on the market or used within the European Union. It entered into force in August 2024, and its obligations apply in stages from 2025 onward. Organizations placing AI systems on the EU market or deploying AI systems within the EU need to assess whether their systems fall under the regulation's high-risk categories and, if so, document compliance with specific technical and organizational requirements.

High-risk AI systems face the most stringent obligations under the regulation. These include systems used in areas such as biometric identification, critical infrastructure, education, employment, essential services, law enforcement, migration, and administration of justice. The Act requires providers of high-risk systems to implement risk management systems, maintain technical documentation, establish data governance practices, and enable human oversight capabilities.

The compliance timeline creates urgency for organizations to begin assessment and documentation efforts. Without a structured approach, teams may struggle to identify which requirements apply to their specific systems and how to demonstrate compliance to regulatory authorities.

Framework Alignment

This template includes references to specific EU AI Act provisions:

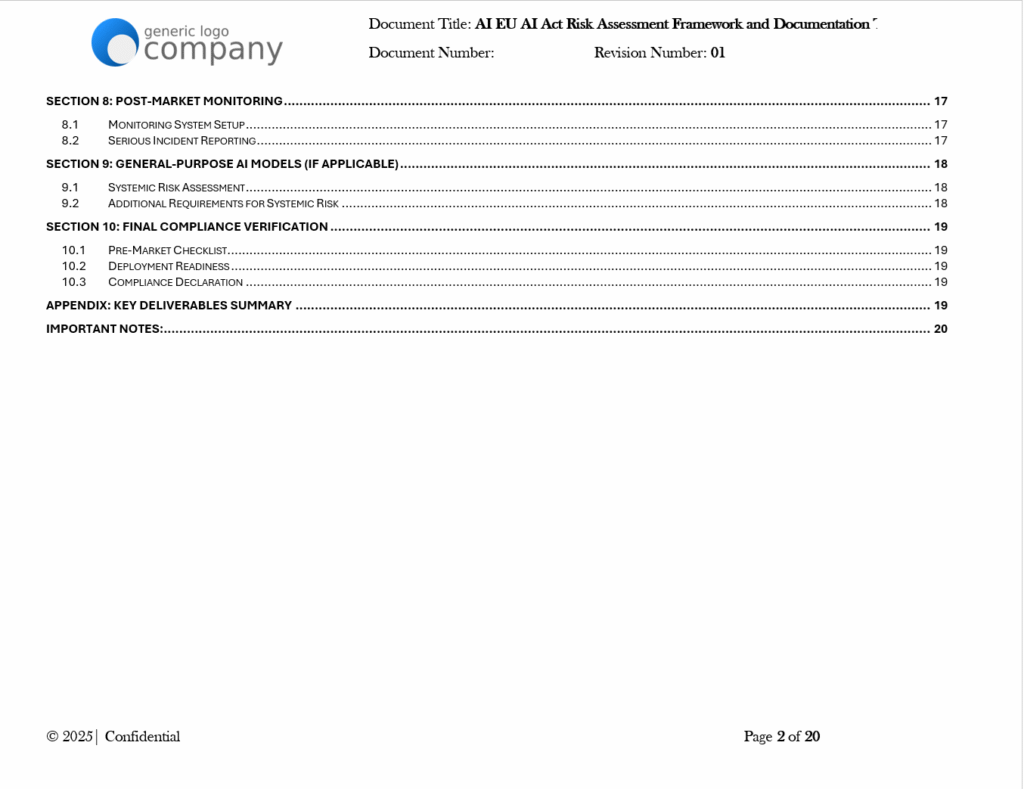

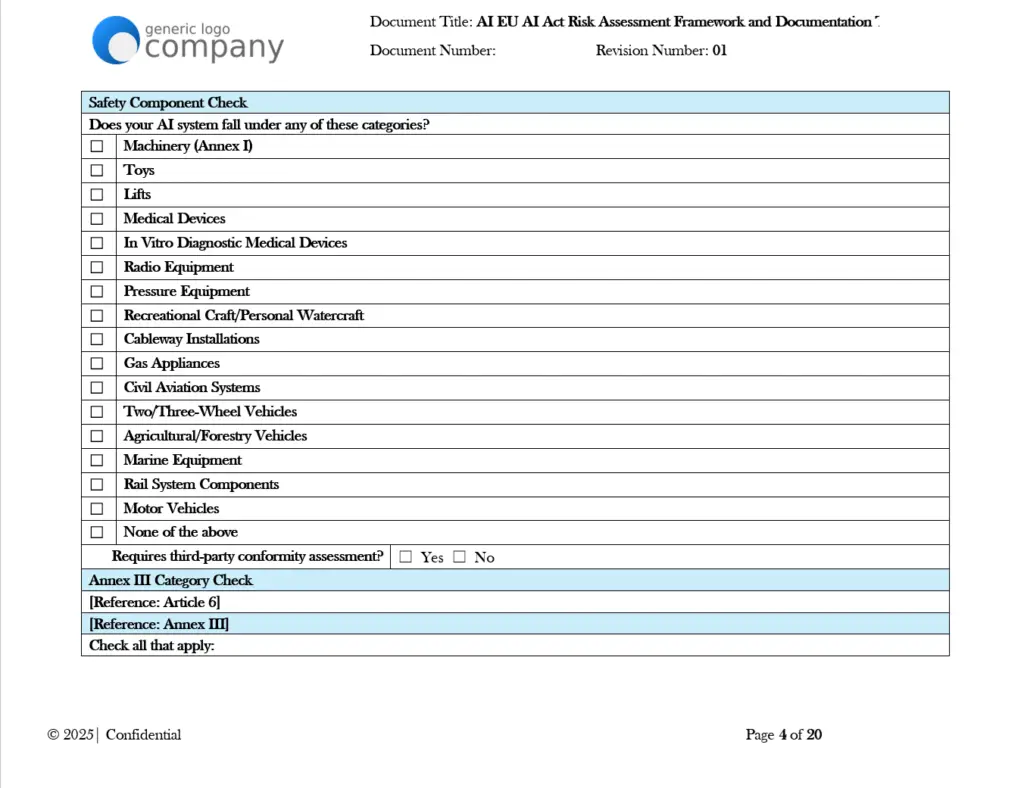

- Article 6: High-risk classification criteria and safety component requirements

- Article 9: Risk management system requirements

- Article 10: Data and data governance obligations

- Article 11 & Annex IV: Technical documentation requirements

- Article 12: Record-keeping and logging requirements

- Article 13 & Article 50: Transparency obligations

- Article 15: Accuracy, robustness, and cybersecurity standards

- Article 17: Quality management system requirements

- Article 18: Documentation retention (10 years)

- Article 27: Fundamental Rights Impact Assessment requirements

- Articles 47-49: EU declaration of conformity, CE marking, and registration

- Articles 51-55: General-Purpose AI Model obligations

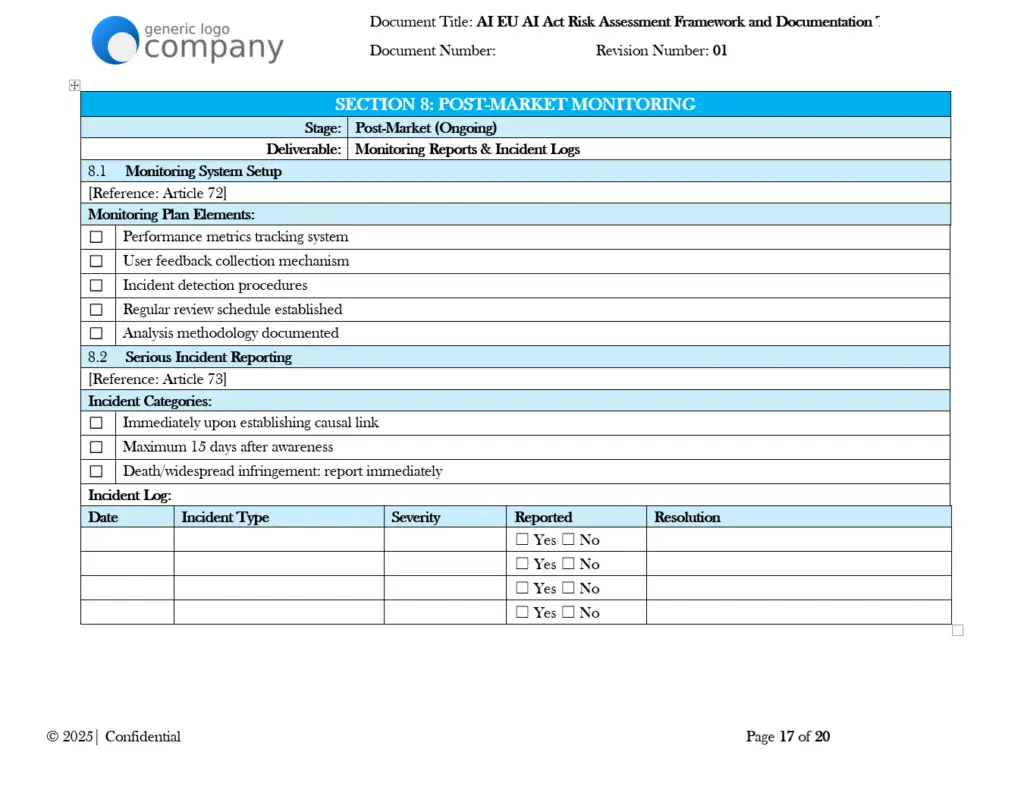

- Articles 72-73: Post-market monitoring and incident reporting

Key Features

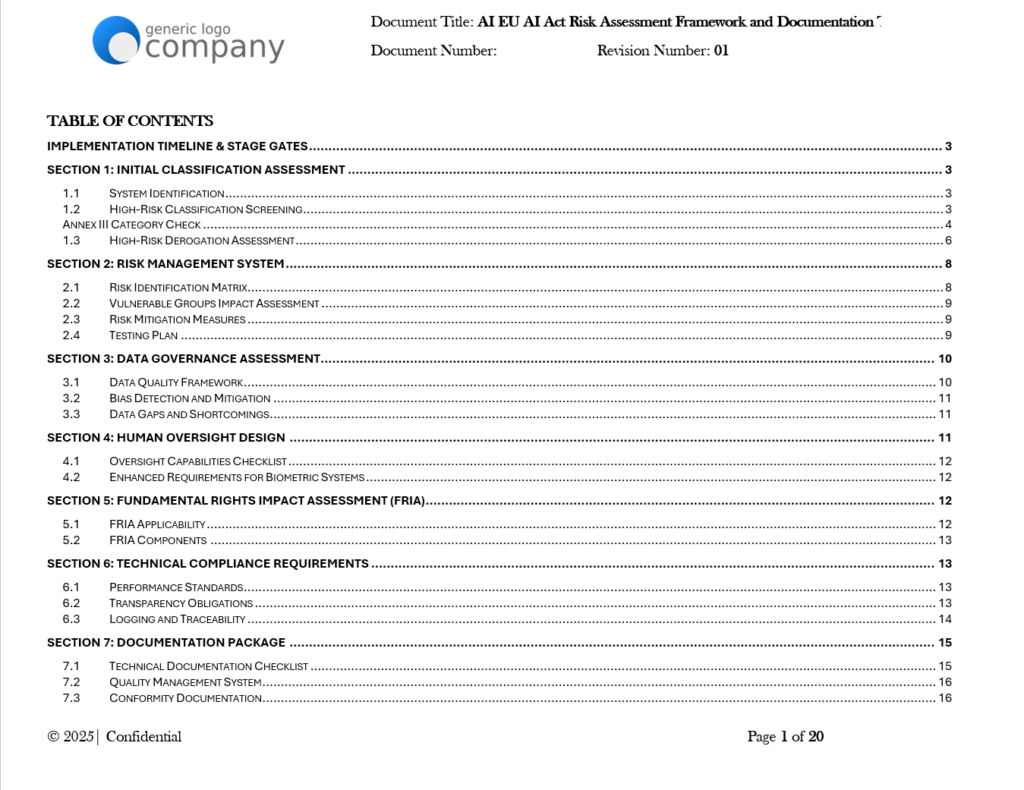

- Implementation Timeline & Stage Gates: Five-phase approach covering Conception, Development, Pre-Market, Pre-Deployment, and Post-Market stages with corresponding deliverables

- Initial Classification Assessment: System identification fields, high-risk classification screening against Annex I safety components and Annex III categories, and Article 6(3) derogation assessment questions

- Risk Management System: Risk identification matrix with probability and impact scoring, vulnerable groups impact assessment, risk mitigation tracking, and testing plan documentation

- Data Governance Assessment: Data quality framework covering collection, origin, preparation, assumptions, and volume; bias detection and mitigation documentation; data gaps identification

- Human Oversight Design: Oversight capabilities checklist covering system understanding, human control measures, and enhanced requirements for biometric systems

- Fundamental Rights Impact Assessment (FRIA): Applicability determination for public bodies and specific use cases, plus documentation of each required component: the deployment process, period of use, affected persons, specific risks, oversight measures, mitigation plans, and complaints mechanisms

- Technical Compliance Requirements: Performance standards tracking for accuracy, robustness, and cybersecurity; transparency obligation checklists; logging and traceability requirements

- Documentation Package: Technical documentation checklist aligned with Annex IV, Quality Management System components, conformity documentation and CE marking tracking

- Post-Market Monitoring: Monitoring system setup elements and serious incident reporting procedures with their reporting deadlines

- General-Purpose AI Models Section: Systemic risk assessment, including a check of cumulative training compute against the 10^25 FLOPs threshold, Commission designation tracking, and additional requirements for models with systemic risk

- Final Compliance Verification: Pre-market checklist, deployment readiness assessment, and compliance declaration form

Comparison Table: Ad-Hoc Approach vs. Structured Template

| Aspect | Ad-Hoc Approach | This Template |
|---|---|---|
| Documentation structure | Scattered across multiple formats and locations | Consolidated checklist with defined sections |
| Article references | Requires manual cross-referencing with regulation text | Built-in article citations for each requirement |
| Progress tracking | Difficult to assess completion status | Checkbox system for tracking documentation status |
| Risk assessment | Informal or inconsistent methodology | Structured risk matrices with scoring framework |
| Stage management | Unclear milestones and deliverables | Five-phase implementation with defined stage gates |
| Retention compliance | May lack systematic tracking | Includes retention period fields (10-year requirement) |

FAQ Section

What format is this template delivered in? Documents are optimized for Microsoft Word to ensure proper formatting and collaborative editing capabilities. The template includes fillable fields and checkboxes that work within standard Word functionality.

Does completing this template ensure EU AI Act compliance? No. This template provides a documentation framework to support compliance efforts, but does not guarantee regulatory compliance. Organizations should seek legal counsel for specific compliance questions and to verify their documentation meets regulatory requirements for their particular circumstances.

Which AI systems does this template cover? The template is primarily designed for high-risk AI systems under the EU AI Act, including those falling under Annex III categories. It also includes a section for General-Purpose AI Models (GPAI). Systems not classified as high-risk may have fewer applicable requirements.

How much customization is required? Substantial customization is needed. The template provides a framework and structure, but all content fields require organization-specific information about your AI systems, risk assessments, technical implementations, and governance processes.

Does this template cover the full EU AI Act? The template focuses on provider obligations for high-risk AI systems and includes GPAI provisions. It does not comprehensively cover all aspects of the regulation, such as prohibited practices, deployer obligations beyond FRIA requirements, or enforcement mechanisms.

How often should this documentation be updated? The template notes that documentation should be updated whenever an AI system undergoes substantial modification, new risks are identified, regulatory guidance changes, or an incident requires corrective action.

Ideal For

- Organizations developing or deploying AI systems for EU markets

- Companies conducting gap assessments against EU AI Act requirements

- Compliance teams building AI governance documentation programs

- Risk management professionals assessing AI system obligations

- Legal teams advising on regulatory documentation requirements

- Consultants supporting clients with EU AI Act compliance projects

- Organizations with systems potentially falling under Annex III high-risk categories

Pricing Strategy Options

Single Template: Contact for pricing based on organizational requirements and customization needs.

Bundle Option: May be combined with additional AI governance templates depending on organizational compliance scope.

Enterprise Option: Available as part of comprehensive governance documentation suites.

Differentiator

This template consolidates EU AI Act compliance documentation requirements into a single structured checklist that follows the regulation’s own organizational logic. Rather than requiring users to interpret the regulation and create their own documentation structures, the template provides pre-built sections mapped to specific articles and annexes. The stage-gated approach helps organizations understand when different compliance activities should occur during the AI system lifecycle, from initial conception through ongoing post-market monitoring. The inclusion of both high-risk system requirements and General-Purpose AI Model provisions in one document supports organizations whose portfolios may include multiple system types requiring different compliance approaches.

Important Disclaimers

- This template is a compliance support tool and does not constitute legal advice

- Organizations should seek qualified legal counsel for specific compliance questions

- Template requires substantial customization for each organization’s circumstances

- Regulatory interpretations may evolve as guidance and enforcement practices develop

- Completing this template does not guarantee regulatory compliance or approval