AI Use Case Risk Assessment Framework and Documentation Template

A structured framework designed to support comprehensive evaluation of AI systems against EU AI Act requirements, NIST AI RMF trustworthiness characteristics, and multi-jurisdictional regulatory considerations.


This template provides a structured approach to documenting and assessing AI use cases before deployment. Organizations can use it to evaluate AI systems against regulatory requirements, document technical architecture decisions, and establish clear approval pathways. The template must be customized to reflect your organization’s specific context, risk tolerance, and operational environment. It offers a starting point that can shorten the internal time otherwise spent building an assessment framework from scratch.

Key Benefits

✓ Provides structured framework for EU AI Act risk classification (Prohibited, High-Risk, Limited Risk, Minimal Risk categories)

✓ Includes NIST AI RMF trustworthiness assessment sections covering seven characteristics of trustworthy AI

✓ Contains quantitative risk scoring methodology with likelihood and severity matrices

✓ Supports multi-jurisdiction regulatory mapping across EU, US federal, and state-level requirements

✓ Includes technical architecture and data governance assessment sections aligned with ISO 42001 Clause 8.1

✓ Provides a documentation framework for the human oversight model per EU AI Act Article 14

✓ Contains approval pathway workflows with role-based review requirements

✓ Includes lifecycle monitoring requirements and action plan templates

Who Uses This?

This template is designed for:

- AI Governance Officers and Program Managers

- Compliance and Risk Management Teams

- IT Security and Data Protection Officers

- Legal and Regulatory Affairs Professionals

- Organizations deploying AI systems in regulated environments

- Companies preparing for EU AI Act compliance requirements

Preview

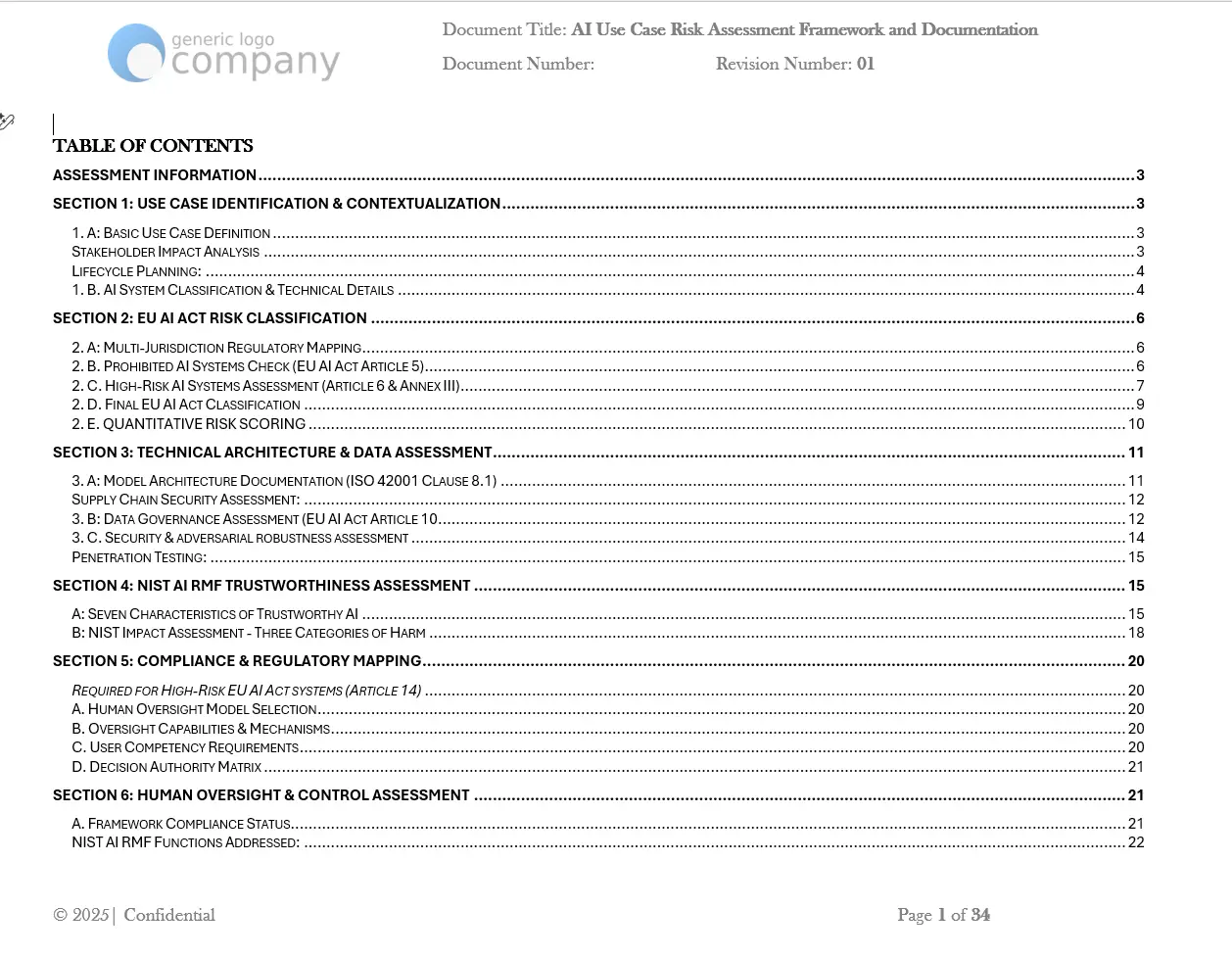

The template includes eight comprehensive sections: Use Case Identification and Contextualization, EU AI Act Risk Classification (including Prohibited Systems Check and Annex III Use Cases), Technical Architecture and Data Assessment, NIST AI RMF Trustworthiness Assessment, Compliance and Regulatory Mapping, Human Oversight and Control Assessment, Risk Determination and Approval Pathway, and Action Plan and Monitoring Requirements. Each section contains structured fields, checkboxes, and documentation requirements.

Why This Matters

Organizations deploying AI systems face an increasingly complex regulatory landscape. The EU AI Act establishes binding requirements for AI systems based on risk classification, with significant penalties for non-compliance. Meanwhile, the NIST AI Risk Management Framework provides voluntary guidance that many US organizations are adopting as a governance standard. Navigating these overlapping requirements while maintaining consistent documentation presents operational challenges for teams without dedicated regulatory expertise.

The gap between understanding regulatory requirements and implementing practical assessment processes often creates compliance risk. Organizations may lack clarity on which AI use cases trigger regulatory obligations, what documentation is necessary, or how to structure approval workflows. Without structured assessment processes, organizations may deploy AI systems that create unintended regulatory exposure or fail to capture important risk factors during evaluation.

This template addresses these challenges by providing a single documentation framework that maps to major regulatory requirements. Rather than building assessment processes from scratch, organizations can adapt this structured approach to their specific context while maintaining alignment with established frameworks.

Framework Alignment

The template references and aligns with the following frameworks explicitly mentioned throughout the document:

- EU AI Act – Article 5 (Prohibited Practices), Article 6 (High-Risk Classification), Article 10 (Data Governance), Article 14 (Human Oversight), Annex I, Annex III

- NIST AI Risk Management Framework (AI RMF) – Seven Characteristics of Trustworthy AI, Three Categories of Harm assessment, NIST AI 100-2 threat modeling approach

- ISO/IEC 42001 – Clause 8.1 (Model Architecture Documentation)

- ISO/IEC 23053 and 23894 – International AI standards (referenced in regulatory mapping)

- GDPR – Data protection requirements (referenced in data governance section)

- US State Regulations – California SB 1001, Colorado SB 21-169, Illinois BIPA (referenced in regulatory mapping)

- US Federal Guidance – FTC AI Guidance, FDA (Healthcare), FINRA (Financial Services), EEOC (Employment) sector-specific requirements

Note: This template references these frameworks to support compliance efforts; it does not replace professional legal review or official framework documentation.

Key Features

The template includes the following documented sections and components:

- Use Case Identification Module – Fields for use case naming, stakeholder impact analysis, lifecycle planning, AI system type classification (ML, Generative AI, Computer Vision, NLP, Expert Systems, RPA), and decision-making role categorization (Fully Automated, Human-Assisted, Human-Supervised, Human-in-the-Loop)

- EU AI Act Classification Workflow – Prohibited systems checklist (8 categories per Article 5), High-Risk assessment pathway (Article 6.1 Safety Component Check, Annex III Use Case mapping across 7 categories), exemption criteria evaluation, and final classification determination

- Quantitative Risk Scoring Matrix – Likelihood scale (1-5) and severity scale (1-5) with risk categories for Technical Failure, Bias/Discrimination, Privacy Breach, Security/Adversarial, Regulatory Non-compliance, Reputational Damage, Financial Loss, and User Harm

- Technical Architecture Documentation – Model specifications (name, version, type, training methodology, parameters), supply chain security assessment checklist, integration architecture fields, and deployment source classification

- Data Governance Assessment – Training data documentation, data quality assessment fields, data drift monitoring parameters, personal data analysis (PII, Special Category, Biometric, Financial), legal basis documentation, and bias assessment results

- Security and Adversarial Robustness Section – Threat modeling framework based on NIST AI 100-2, penetration testing documentation fields

- NIST AI RMF Trustworthiness Assessment – Structured evaluation across the seven trustworthiness characteristics with rating scales and evidence documentation

- NIST Impact Assessment – Three categories of harm evaluation framework

- Human Oversight Documentation – Oversight model selection, capabilities and mechanisms documentation, user competency requirements, decision authority matrix

- Compliance Status Tracking – Framework compliance checklist, NIST AI RMF function mapping, required documentation portfolio

- Risk Determination and Approval Pathway – Risk assessment summary, final classification recommendation, approval pathway with required reviews

- Action Plan and Monitoring – Pre-implementation requirements checklist, lifecycle monitoring requirements, assessment certification
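The likelihood and severity scales in the Quantitative Risk Scoring Matrix can be illustrated with a minimal Python sketch. It assumes the score is the product of likelihood and severity and uses hypothetical priority bands (`PRIORITY_BANDS`); neither is taken from the template, and both should be calibrated to organizational risk tolerance. The class and field names are illustrative only.

```python
from dataclasses import dataclass

# The eight risk categories listed for the scoring matrix.
RISK_CATEGORIES = (
    "Technical Failure",
    "Bias/Discrimination",
    "Privacy Breach",
    "Security/Adversarial",
    "Regulatory Non-compliance",
    "Reputational Damage",
    "Financial Loss",
    "User Harm",
)

# Hypothetical (minimum score, label) bands, checked from highest to lowest.
# These thresholds are an assumption, not values defined by the template.
PRIORITY_BANDS = ((15, "Critical"), (10, "High"), (5, "Medium"), (1, "Low"))


@dataclass(frozen=True)
class RiskRating:
    category: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    severity: int    # 1 (negligible) to 5 (severe)

    def __post_init__(self):
        if self.category not in RISK_CATEGORIES:
            raise ValueError(f"unknown risk category: {self.category!r}")
        for name, value in (("likelihood", self.likelihood),
                            ("severity", self.severity)):
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Assumed scoring rule: likelihood multiplied by severity (range 1-25).
        return self.likelihood * self.severity

    @property
    def priority(self) -> str:
        # The lowest band starts at 1, so every valid score matches a band.
        return next(label for floor, label in PRIORITY_BANDS
                    if self.score >= floor)


if __name__ == "__main__":
    ratings = [
        RiskRating("Privacy Breach", likelihood=4, severity=5),
        RiskRating("Financial Loss", likelihood=2, severity=2),
    ]
    # List the highest-scoring risks first.
    for rating in sorted(ratings, key=lambda r: r.score, reverse=True):
        print(f"{rating.category}: score {rating.score} ({rating.priority})")
```

Keeping the bands in a single tuple means an organization can adjust thresholds in one place without touching the scoring logic.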

Comparison: Building From Scratch vs. Professional Template

| Aspect | Building Assessment From Scratch | This Professional Template |
|---|---|---|
| Framework Coverage | Requires independent research across multiple frameworks | Integrates EU AI Act, NIST AI RMF, ISO 42001, and sector-specific requirements in a single document |
| Risk Classification | Must develop custom classification methodology | Includes EU AI Act four-tier classification workflow with documented criteria |
| Quantitative Scoring | Requires creating scoring methodology | Provides likelihood/severity matrix with 8 risk categories and prioritization guidance |
| Technical Documentation | Must determine required fields | Contains structured fields for model specifications, data governance, and security assessment |
| Regulatory Mapping | Time-intensive cross-referencing | Multi-jurisdiction checklist covering EU, US federal, and state-level requirements |
| Human Oversight | Must research Article 14 requirements | Includes oversight model selection, competency requirements, and decision authority matrix |
| Approval Workflows | Requires process design | Contains structured approval pathways with role-based review requirements |
| Customization | Starting from a blank page | Starting point requiring organizational adaptation |

FAQ

What file format is this template delivered in? The template is optimized for Microsoft Word to ensure proper formatting and collaborative editing. It contains structured tables, checkboxes, and form fields designed for Word compatibility.

Does this template guarantee compliance with the EU AI Act or other regulations? No. This template is designed to support compliance efforts by providing a structured documentation framework. Regulatory compliance depends on organizational implementation, customization to specific contexts, and professional review. Organizations should consult qualified legal and compliance professionals for official compliance determinations.

How much customization is required? Significant customization is required. The template provides structure and framework alignment, but organizations must complete all assessment fields based on their specific AI use cases, adapt risk scoring thresholds to organizational risk tolerance, and integrate approval workflows with existing governance processes.

Which EU AI Act risk categories does this template address? The template includes assessment workflows for all four EU AI Act risk tiers: Prohibited (Article 5), High-Risk (Article 6, Annex III), Limited Risk (transparency obligations), and Minimal Risk. The Prohibited systems checklist includes 8 categories; the High-Risk assessment covers all 7 Annex III use case categories.
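The four-tier workflow can be sketched as a simple decision function in Python. This is an illustration under stated assumptions, not the template's own logic: the order of checks, the `ClassificationInputs` fields, and the choice to return a single tier are all hypothetical, and a real determination requires legal review.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "Prohibited"
    HIGH_RISK = "High-Risk"
    LIMITED_RISK = "Limited Risk"
    MINIMAL_RISK = "Minimal Risk"


@dataclass
class ClassificationInputs:
    # Hypothetical field names summarizing the outcome of each checklist.
    prohibited_practice: bool       # any Article 5 checklist item applies
    safety_component: bool          # Article 6.1 safety component check
    annex_iii_use_case: bool        # maps to an Annex III use case category
    exemption_applies: bool         # outcome of the exemption criteria evaluation
    transparency_obligations: bool  # transparency obligations are triggered


def classify(inputs: ClassificationInputs) -> RiskTier:
    """Return one tier, checking the most restrictive outcome first (assumed order)."""
    if inputs.prohibited_practice:
        return RiskTier.PROHIBITED
    if inputs.safety_component:
        return RiskTier.HIGH_RISK
    # Assumption: the exemption evaluation only offsets an Annex III match.
    if inputs.annex_iii_use_case and not inputs.exemption_applies:
        return RiskTier.HIGH_RISK
    if inputs.transparency_obligations:
        return RiskTier.LIMITED_RISK
    return RiskTier.MINIMAL_RISK


if __name__ == "__main__":
    example = ClassificationInputs(
        prohibited_practice=False,
        safety_component=False,
        annex_iii_use_case=True,
        exemption_applies=False,
        transparency_obligations=True,
    )
    print(classify(example).value)
```

The sketch returns only the most restrictive tier. A system classified as High-Risk may still carry transparency obligations, so a fuller record would keep the individual checklist answers alongside the final tier.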

What NIST AI RMF components are included? The template includes structured assessment sections for the seven characteristics of trustworthy AI and the three categories of harm evaluation framework. It also includes NIST AI RMF function mapping in the compliance status section.

Is the quantitative risk scoring methodology standardized? The template provides a 5×5 likelihood/severity matrix with defined scales. Organizations should calibrate scoring thresholds and risk prioritization levels to their specific risk tolerance and operational context.

Can this template be used for AI systems already in production? Yes. The template can support both pre-deployment assessment and retrospective evaluation of existing AI systems, though organizations should adapt the lifecycle and approval sections accordingly.

Ideal For

This template is designed for:

- AI Governance Teams establishing standardized assessment processes across the organization

- Compliance Officers documenting AI system evaluations for regulatory review

- Risk Managers implementing quantitative AI risk assessment methodologies

- Legal Teams supporting due diligence documentation for AI deployments

- IT Security Professionals documenting technical architecture and security assessments

- Data Protection Officers evaluating data governance and privacy implications of AI systems

- Organizations operating in the EU preparing for EU AI Act requirements

- US Organizations adopting NIST AI RMF as a governance framework

- Regulated Industries (healthcare, financial services, employment) requiring sector-specific AI assessment documentation

- Mid-size to Enterprise Organizations deploying multiple AI systems requiring consistent assessment processes

Pricing Options

Single Template: Contact for pricing based on organizational requirements and customization needs.

Bundle Option: May be combined with additional AI governance templates (Acceptable Use Policy, AI Governance Charter, AI Incident Response) depending on organizational compliance scope.

Enterprise Option: Available as part of comprehensive AI governance documentation suites with volume considerations.

Differentiator

This template consolidates multiple regulatory frameworks into a single assessment workflow rather than requiring separate evaluation processes for each standard. Where organizations typically need to cross-reference EU AI Act articles, NIST AI RMF characteristics, ISO 42001 clauses, and sector-specific requirements independently, this framework integrates these references into structured sections with clear documentation requirements. The inclusion of quantitative risk scoring alongside qualitative classification provides both measurable metrics and regulatory categorization in one assessment process. The template’s eight-section structure moves from use case identification through approval pathways, supporting end-to-end documentation rather than isolated compliance checks.

This template requires customization to organizational context and does not constitute legal advice or compliance certification. Professional review is recommended before regulatory submissions.