NVIDIA Nemotron 3 Nano 30B MoE model is now available in Amazon SageMaker JumpStart | Artificial Intelligence

Today we’re excited to announce that the NVIDIA Nemotron 3 Nano 30B model with 3B active parameters is now generally available in the Amazon SageMaker JumpStart model catalog. You can accelerate innovation and deliver tangible business value with Nemotron 3 Nano on Amazon Web Services (AWS) without having to manage model deployment complexities. You can power your generative AI applications with Nemotron capabilities using the managed deployment capabilities offered by SageMaker JumpStart.


Indian defense sector and government-aligned organizations have been targeted by multiple campaigns that are designed to compromise Windows and Linux environments with remote access trojans capable of stealing sensitive data and ensuring continued access to infected machines. The campaigns are characterized by the use of malware families like Geta RAT, Ares RAT, and DeskRAT, which […]

How to Build an Atomic-Agents RAG Pipeline with Typed Schemas, Dynamic Context Injection, and Agent Chaining | MarkTechPost

In this tutorial, we build an advanced, end-to-end learning pipeline around Atomic-Agents by wiring together typed agent interfaces, structured prompting, and a compact retrieval layer that grounds outputs in real project documentation. Also, we demonstrate how to plan retrieval, retrieve relevant context, inject it dynamically into an answering agent, and run an interactive loop that

The post How to Build an Atomic-Agents RAG Pipeline with Typed Schemas, Dynamic Context Injection, and Agent Chaining appeared first on MarkTechPost.


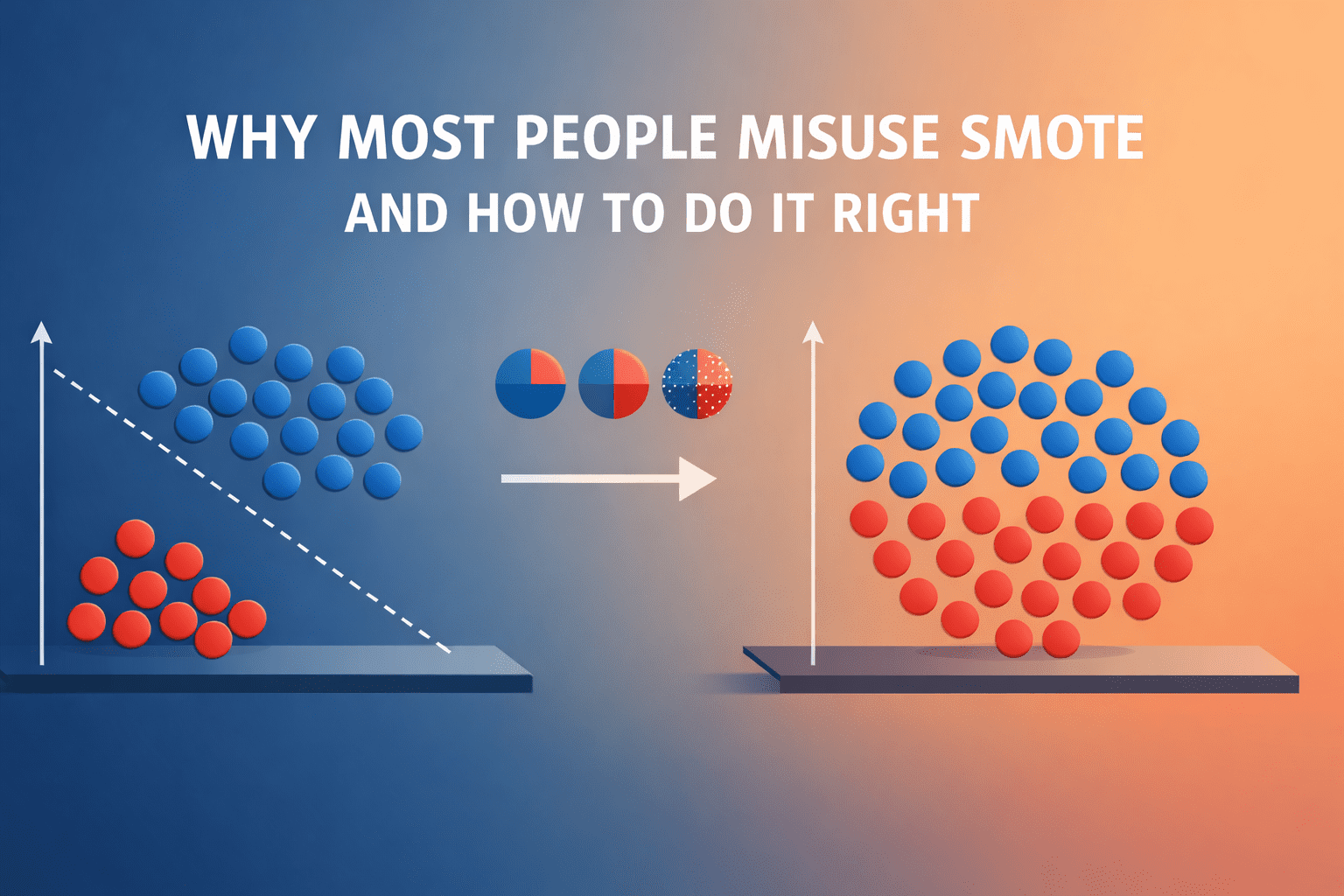

Why Most People Misuse SMOTE, And How to Do It Right | KDnuggets

Key practices for oversampling your data the right way when addressing class imbalance.


Mastering Amazon Bedrock throttling and service availability: A comprehensive guide | Artificial Intelligence

This post shows you how to implement robust error handling strategies that can help improve application reliability and user experience when using Amazon Bedrock. We’ll dive deep into strategies for optimizing application performance when throttling and service availability errors occur. Whether your application is fairly new or a mature AI application, this post offers practical guidelines for handling these errors.


Swann provides Generative AI to millions of IoT Devices using Amazon Bedrock | Artificial Intelligence

This post shows you how to implement intelligent notification filtering using Amazon Bedrock and its gen-AI capabilities. You’ll learn model selection strategies, cost optimization techniques, and architectural patterns for deploying gen-AI at IoT scale, based on Swann Communications’ deployment across millions of devices.


How LinqAlpha assesses investment theses using Devil’s Advocate on Amazon Bedrock | Artificial Intelligence

LinqAlpha is a Boston-based multi-agent AI system built specifically for institutional investors. The system supports and streamlines agentic workflows across company screening, primer generation, stock price catalyst mapping, and now, pressure-testing investment ideas through a new AI agent called Devil’s Advocate. In this post, we share how LinqAlpha uses Amazon Bedrock to build and scale Devil’s Advocate.


Building an AI Agent to Detect and Handle Anomalies in Time-Series Data | Towards Data Science

Combining statistical detection with agentic decision-making

The post Building an AI Agent to Detect and Handle Anomalies in Time-Series Data appeared first on Towards Data Science.


Not All RecSys Problems Are Created Equal | Towards Data Science

How baseline strength, churn, and subjectivity determine complexity

The post Not All RecSys Problems Are Created Equal appeared first on Towards Data Science.


Versioning and Testing Data Solutions: Applying CI and Unit Tests on Interview-style Queries | KDnuggets

Learn how to apply unit testing, version control, and continuous integration to data analysis scripts using Python and GitHub Actions.
