Build an intelligent contract management solution with Amazon Quick Suite and Bedrock AgentCore (Artificial Intelligence)

This blog post demonstrates how to build an intelligent contract management solution using Amazon Quick Suite as your primary contract management solution, augmented with Amazon Bedrock AgentCore for advanced multi-agent capabilities.

3 Ways to Anonymize and Protect User Data in Your ML Pipeline (KDnuggets)

In this article, you will learn three practical ways to protect user data in real-world ML pipelines, with techniques that data scientists can implement directly in their workflows.

Data Science as Engineering: Foundations, Education, and Professional Identity (Towards Data Science)

Recognize data science as an engineering practice and structure education accordingly.

The post Data Science as Engineering: Foundations, Education, and Professional Identity appeared first on Towards Data Science.

Databricks: Enterprise AI adoption shifts to agentic systems (AI News)

According to Databricks, enterprise AI adoption is shifting to agentic systems as organisations embrace intelligent workflows. Generative AI’s first wave promised business transformation but often delivered little more than isolated chatbots and stalled pilot programmes. Technology leaders found themselves managing high expectations with limited operational utility. However, new telemetry from Databricks suggests the market has […]

The post Databricks: Enterprise AI adoption shifts to agentic systems appeared first on AI News.

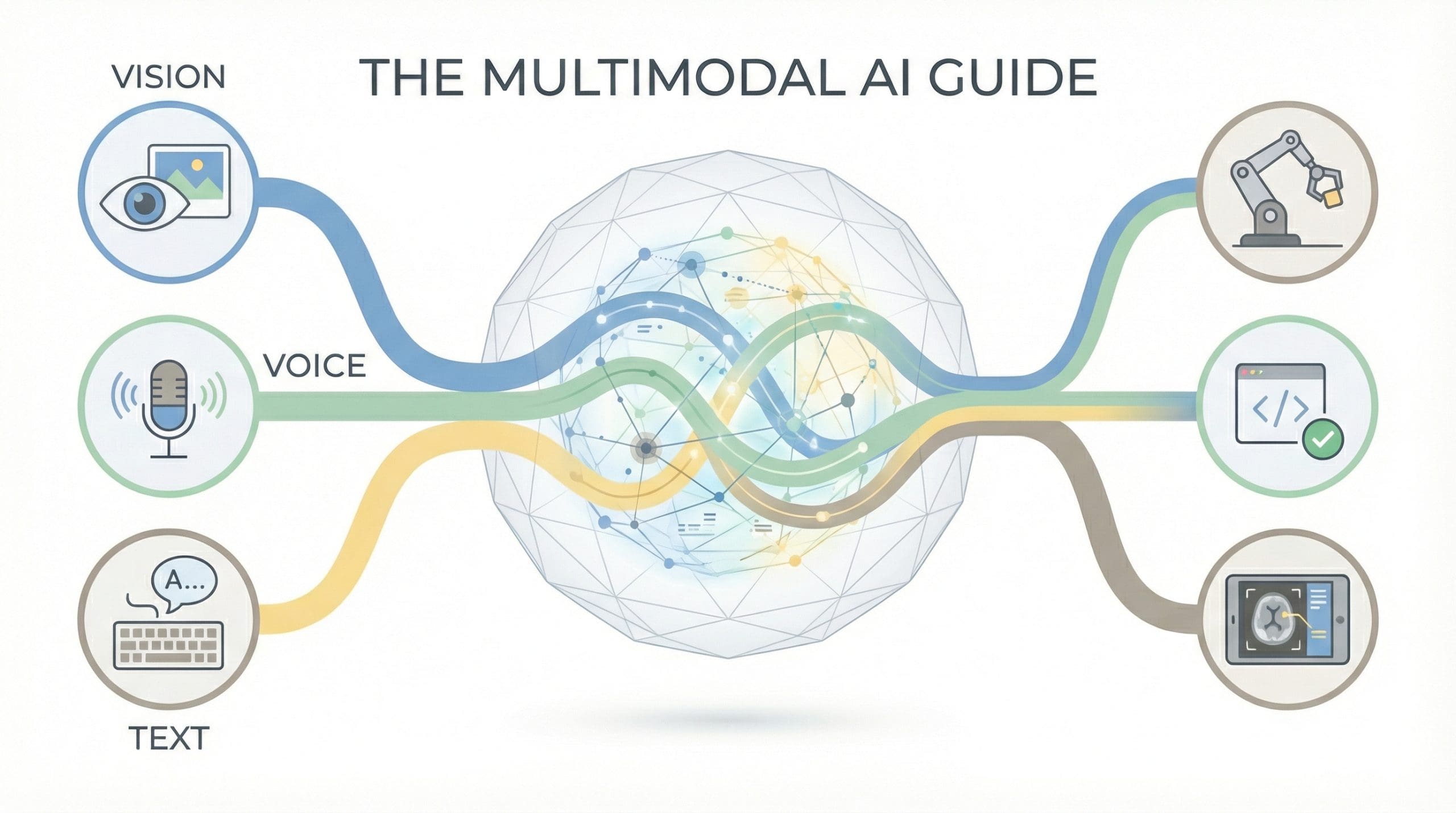

The Multimodal AI Guide: Vision, Voice, Text, and Beyond (KDnuggets)

AI systems now see images, hear speech, and process video, understanding information in its native form.

Build reliable Agentic AI solution with Amazon Bedrock: Learn from Pushpay’s journey on GenAI evaluation (Artificial Intelligence)

In this post, we walk you through Pushpay’s journey in building this solution and explore how Pushpay used Amazon Bedrock to create a custom generative AI evaluation framework for continuous quality assurance and establishing rapid iteration feedback loops on AWS.

A new ransomware strain that entered the scene last year has poorly designed code and an odd “Hebrew” identity that might be a false flag.

OpenAI plans to begin rolling out ads on ChatGPT in the United States for users on the free tier or the $8 Go subscription, but the catch is that the ads could be very expensive for advertisers. […]

Modern ransomware has shifted from encryption to psychological extortion that exploits fear, liability, and exposure. Flare shows how today’s ransomware groups weaponize stolen data and pressure tactics to force payment. […]

While telnet is considered obsolete, the network protocol is still used by hundreds of thousands of legacy systems and IoT devices for remote access.