Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated November 16th, 2025

What is NIST AI RMF Govern?

The GOVERN function is the cornerstone of NIST’s AI Risk Management Framework. It establishes the policies, culture, accountability structures, and processes that enable your organization to manage AI risks systematically.

GOVERN includes 19 subcategories across six categories. Organizations benefit most by implementing this function first, as it sets the tone from executive leadership through technical teams. Without it, AI risk management becomes subjective, inconsistent, and prone to failure. Small organizations typically need two to four months to implement core elements; larger enterprises typically need four to eight months (timelines vary with organizational complexity and existing governance structures).

Why NIST AI RMF GOVERN Matters: A Real Example

The following documented incident illustrates what happens without the NIST AI RMF GOVERN function in place.

In 2014, Amazon began building an AI recruiting tool to automate candidate evaluation. By 2018, the company had scrapped it after discovering systematic bias against women (Reuters, 2018; MIT Technology Review, 2018). The system penalized resumes containing the word “women’s” (as in “women’s chess club captain”) and downgraded graduates of two all-women’s colleges.

This demonstrates specific NIST AI RMF GOVERN failures:

- GOVERN 3.1 not met: No diverse team informed the AI risk decisions during development

- GOVERN 1.5 absent: No ongoing monitoring caught the bias for years

- GOVERN 4.2 missing: Teams didn’t document potential discriminatory impacts before deployment

- GOVERN 2.3 gap: Unclear executive accountability for the discriminatory outcomes

Had Amazon implemented the NIST AI RMF GOVERN function, these subcategories would have required diverse teams in decision-making, documented impact assessments, ongoing monitoring protocols, and clear executive responsibility. The GOVERN function exists specifically to prevent these organizational failures.

Important Context: The NIST AI Risk Management Framework was published in January 2023, roughly five years after Amazon scrapped this tool, so Amazon could not have followed it. The example illustrates the kinds of organizational governance gaps that the NIST AI RMF GOVERN function was designed to address, not a failure to comply with a framework that existed at the time.

Understanding NIST AI RMF GOVERN’s Role

NIST’s AI Risk Management Framework has four core functions: Govern, Map, Measure, and Manage. The NIST AI RMF GOVERN function is the cross-cutting function that enables the other three.

While Map, Measure, and Manage deal with specific AI systems and technical risks, the NIST AI RMF GOVERN function operates at the organizational level. It cultivates a risk management culture, connects AI design to organizational values, addresses the full product lifecycle (including third-party software and data), and establishes accountability.

The NIST AI RMF GOVERN function is voluntary, but it provides the organizational foundation that makes all other AI risk management activities effective.

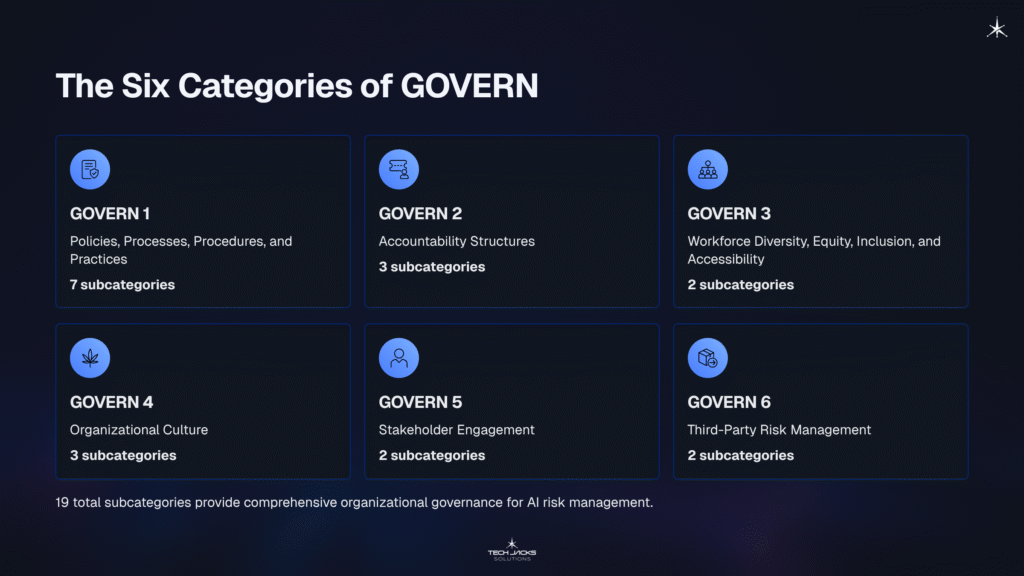

The Six Categories of NIST AI RMF GOVERN

The NIST AI RMF GOVERN function is organized into six categories containing 19 total subcategories:

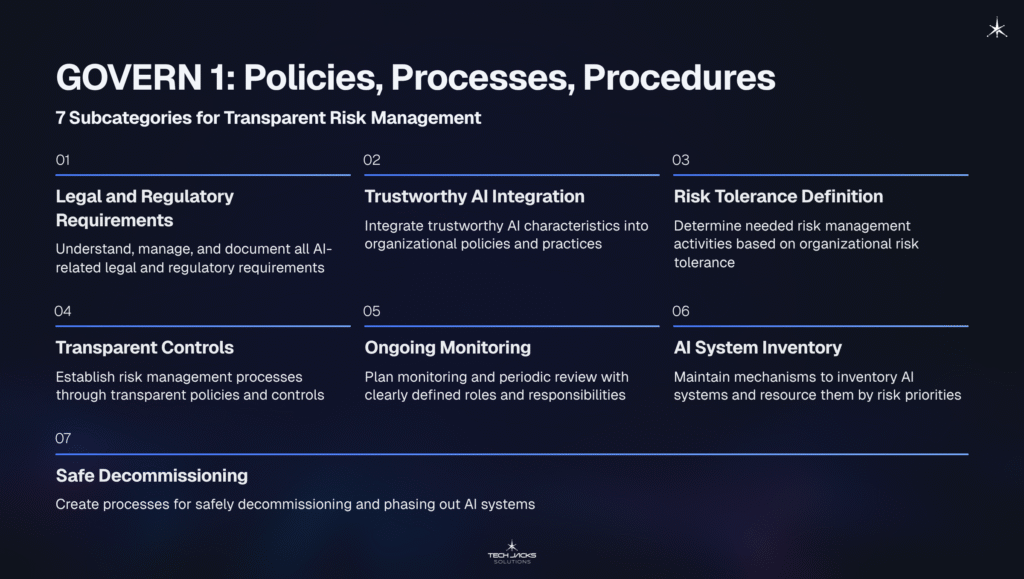

GOVERN 1: Policies, Processes, Procedures, and Practices (7 subcategories)

Ensures transparent, implemented policies for managing AI risks.

GOVERN 1.1: Legal and regulatory requirements involving AI are understood, managed, and documented.

GOVERN 1.2: Trustworthy AI characteristics are integrated into organizational policies, processes, procedures, and practices.

GOVERN 1.3: Processes determine the needed level of risk management activities based on organizational risk tolerance.

GOVERN 1.4: Risk management process and outcomes are established through transparent policies, procedures, and controls.

GOVERN 1.5: Ongoing monitoring and periodic review are planned with clearly defined organizational roles and responsibilities.

GOVERN 1.6: Mechanisms are in place to inventory AI systems and are resourced according to organizational risk priorities.

GOVERN 1.7: Processes exist for safely decommissioning and phasing out AI systems.

GOVERN 2: Accountability Structures (3 subcategories)

Ensures appropriate teams are empowered, responsible, and trained for AI risk management.

GOVERN 2.1: Roles, responsibilities, and lines of communication for AI risks are documented and clear throughout the organization.

GOVERN 2.2: Personnel and partners receive AI risk management training consistent with policies, procedures, and agreements.

GOVERN 2.3: Executive leadership takes responsibility for decisions about AI system risks.

GOVERN 3: Workforce Diversity, Equity, Inclusion, and Accessibility (2 subcategories)

Prioritizes diverse perspectives in AI risk management.

GOVERN 3.1: Decision-making is informed by diverse teams (demographics, disciplines, experience, expertise, backgrounds).

GOVERN 3.2: Policies define roles and responsibilities for human-AI configurations and oversight.

GOVERN 4: Organizational Culture (3 subcategories)

Commits teams to a culture that considers and communicates AI risk.

GOVERN 4.1: Policies foster a critical thinking and safety-first mindset to minimize negative impacts.

GOVERN 4.2: Teams document and communicate risks and potential impacts of AI technology.

GOVERN 4.3: Practices enable AI testing, incident identification, and information sharing.

GOVERN 5: Stakeholder Engagement (2 subcategories)

Ensures robust engagement with relevant AI actors.

GOVERN 5.1: Policies and practices are in place to collect, consider, prioritize, and integrate feedback from external stakeholders regarding AI risks and societal impacts.

GOVERN 5.2: Mechanisms enable teams to regularly incorporate adjudicated feedback into system design and implementation.

GOVERN 6: Third-Party Risk Management (2 subcategories)

Addresses risks from third-party software, data, and supply chain issues.

GOVERN 6.1: Policies address AI risks from third-party entities, including intellectual property infringement risks.

GOVERN 6.2: Contingency processes handle failures or incidents in third-party data or high-risk AI systems.
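The category structure above can be captured in a small lookup table, which is handy for checking the counts or generating a tracking checklist. This is only an illustrative sketch: the names `GOVERN_SUBCATEGORY_COUNTS` and `subcategory_ids` are hypothetical and not part of the NIST framework, and the short category labels are the ones used in this article.

```python
# Illustrative only: these names are hypothetical, not defined by NIST.
# Labels and counts are copied from the category list in this article.
GOVERN_SUBCATEGORY_COUNTS = {
    1: ("Policies, Processes, Procedures, and Practices", 7),
    2: ("Accountability Structures", 3),
    3: ("Workforce Diversity, Equity, Inclusion, and Accessibility", 2),
    4: ("Organizational Culture", 3),
    5: ("Stakeholder Engagement", 2),
    6: ("Third-Party Risk Management", 2),
}


def subcategory_ids() -> list[str]:
    """Return every subcategory identifier in order, e.g. 'GOVERN 1.1'."""
    return [
        f"GOVERN {category}.{index}"
        for category, (_label, count) in GOVERN_SUBCATEGORY_COUNTS.items()
        for index in range(1, count + 1)
    ]


if __name__ == "__main__":
    ids = subcategory_ids()
    print(len(ids))         # 19
    print(ids[0], ids[-1])  # GOVERN 1.1 GOVERN 6.2
```

A list like this could seed a spreadsheet or ticket board where each subcategory gets an owner and a status.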

Most Valuable NIST AI RMF GOVERN Subcategories for Implementation

Focus on these five foundational NIST AI RMF GOVERN subcategories first:

1. GOVERN 2.3 (Executive Leadership Responsibility): Senior leadership must formally declare risk tolerances and delegate power, resources, and authorization. This sets organizational tone for the entire NIST AI RMF GOVERN implementation.

2. GOVERN 1.2 (Trustworthy AI Integration): Integrating trustworthy AI characteristics into policies prevents building harmful systems by design. This directly addresses the type of failure seen in the Amazon recruiting tool example.

3. GOVERN 1.3 (Risk Tolerance Definition): Determines needed risk management activities based on organizational risk tolerance, preventing team disagreements about what matters.

4. GOVERN 2.1 (Roles and Responsibilities): Clear documentation eliminates confusion that leads to critical risks falling through gaps between teams.

5. GOVERN 6.1 (Third-Party Risk Policies): Most organizations rely on third-party AI technologies. Defining these policies early prevents expensive retrofitting.

These five NIST AI RMF GOVERN subcategories provide the foundational clarity that subsequent technical work depends on.

How to Implement NIST AI RMF GOVERN

Implementation of the NIST AI RMF GOVERN function requires ongoing attention as knowledge, organizational culture, and stakeholder expectations evolve.

Start with executive mandate. Senior leadership must formally commit to NIST AI RMF GOVERN implementation and allocate resources. Next, establish foundational policies: document your approach to trustworthy AI, define risk tolerances, and create accountability structures. Small to medium organizations typically need two to four months for this phase; large enterprises typically need four to eight months (timelines vary based on competing priorities, approval cycles, organizational complexity, and existing governance structures).

Then build organizational capacity through training, feedback mechanisms, and AI system inventory. This happens in parallel with policy development and continues indefinitely.

The NIST AI RMF GOVERN function is voluntary. NIST designed it as flexible and non-prescriptive. Organizations select categories and subcategories based on their resources and capabilities.

First Three Weeks: Immediate Steps for NIST AI RMF GOVERN

Days 1-2: AI System Inventory. Document every AI system your organization uses. Include formal machine learning models, third-party AI services, and shadow AI (employees using ChatGPT, Copilot, or similar tools for work). Most organizations discover more AI usage than expected.

Days 3-5: Executive Engagement. Schedule a 90-minute executive meeting (not 30 minutes; you need time for education and discussion). Prepare a one-page brief covering current AI use, potential risks under NIST AI RMF categories, and a request for a formal risk tolerance statement per GOVERN 1.3.

Weeks 2-3: Map Current Accountability. Identify who currently makes AI decisions. Map who approves deployments, monitors performance, and handles incidents. You’ll discover gaps where no one owns critical decisions. This mapping directly supports GOVERN 2.1 (roles and responsibilities).

These first steps establish your baseline for implementing the full NIST AI RMF GOVERN function.
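The inventory and accountability-mapping steps above can share a single record format. The sketch below is one possible shape, not something prescribed by NIST: the class `AISystemRecord`, its field names, the helper `accountability_gaps`, and the sample entries are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AISystemRecord:
    """One row in a hypothetical AI system inventory (supports GOVERN 1.6)."""
    name: str
    kind: str  # e.g. "ml_model", "third_party_service", "shadow_ai"
    deployment_approver: Optional[str] = None
    performance_monitor: Optional[str] = None
    incident_owner: Optional[str] = None


def accountability_gaps(record: AISystemRecord) -> list[str]:
    """Return the accountability roles (GOVERN 2.1) that have no named owner."""
    roles = {
        "deployment_approver": record.deployment_approver,
        "performance_monitor": record.performance_monitor,
        "incident_owner": record.incident_owner,
    }
    return [role for role, person in roles.items() if not person]


if __name__ == "__main__":
    # Sample data is invented for illustration.
    inventory = [
        AISystemRecord(
            "resume-screener",
            "ml_model",
            deployment_approver="VP Talent",
            performance_monitor="ML Platform Team",
        ),
        AISystemRecord("ChatGPT (ad hoc employee use)", "shadow_ai"),
    ]
    for record in inventory:
        print(record.name, accountability_gaps(record))
```

In this sample, the first system is missing only an incident owner, while the shadow AI entry has no owner for any of the three roles, which is the kind of gap the mapping exercise is meant to surface.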

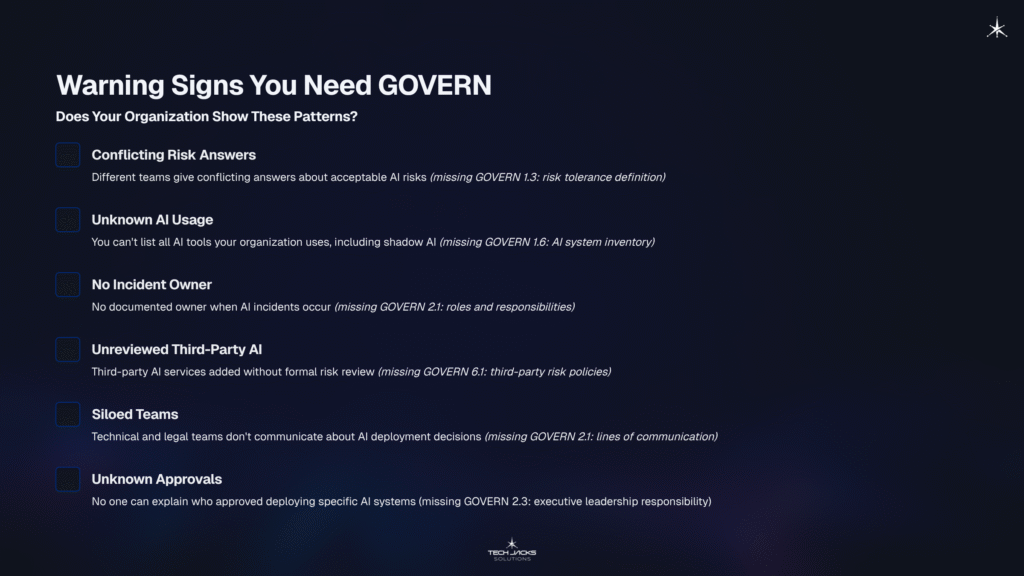

Warning Signs You Need NIST AI RMF GOVERN

Your organization needs the NIST AI RMF GOVERN function if you observe these patterns:

- Different teams give conflicting answers about acceptable AI risks (indicates missing GOVERN 1.3: risk tolerance definition)

- You can’t list all AI tools your organization uses, including shadow AI like employees using ChatGPT (indicates missing GOVERN 1.6: AI system inventory)

- No documented owner when AI incidents occur (indicates missing GOVERN 2.1: roles and responsibilities)

- Third-party AI services added without formal risk review (indicates missing GOVERN 6.1: third-party risk policies)

- Technical and legal teams don’t communicate about AI deployment decisions (indicates missing GOVERN 2.1: lines of communication)

- No one can explain who approved deploying specific AI systems (indicates missing GOVERN 2.3: executive leadership responsibility)

These gaps represent the organizational risks that the NIST AI RMF GOVERN function specifically addresses.
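For a quick self-assessment, the warning signs above can be turned into a lookup from observed symptom to the subcategory it points at. This is a minimal sketch under stated assumptions: the dictionary keys and the function name `subcategories_to_prioritize` are hypothetical labels invented here, while the mappings themselves come from the list above.

```python
# Hypothetical keys; each maps to the subcategory named in the list above.
WARNING_SIGNS = {
    "conflicting_risk_answers": "GOVERN 1.3",
    "incomplete_ai_tool_list": "GOVERN 1.6",
    "no_incident_owner": "GOVERN 2.1",
    "unreviewed_third_party_ai": "GOVERN 6.1",
    "technical_legal_silos": "GOVERN 2.1",
    "unknown_deployment_approver": "GOVERN 2.3",
}


def subcategories_to_prioritize(observed: set[str]) -> list[str]:
    """Map observed warning signs to a de-duplicated, sorted subcategory list."""
    unknown = observed - set(WARNING_SIGNS)
    if unknown:
        raise KeyError(f"Unrecognized warning signs: {sorted(unknown)}")
    return sorted({WARNING_SIGNS[sign] for sign in observed})


if __name__ == "__main__":
    observed = {"no_incident_owner", "technical_legal_silos", "unreviewed_third_party_ai"}
    print(subcategories_to_prioritize(observed))
```

Two of the signs point at GOVERN 2.1, so the result de-duplicates them; the output is a starting list for discussion rather than a scored assessment.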

Frequently Asked Questions

Q: Is NIST AI RMF GOVERN mandatory for organizations using AI?

No. The NIST AI RMF GOVERN function is entirely voluntary. However, specific regulations in your industry may require governance practices that the NIST AI RMF GOVERN function addresses.

Q: How much does it cost to implement NIST AI RMF GOVERN?

Costs vary widely with organization size, existing governance structures, and scope. The NIST AI RMF itself is free. Costs come from the staff time, training, and documentation systems needed to implement GOVERN subcategories.

Q: Can small organizations implement NIST AI RMF GOVERN?

Absolutely. Small organizations can focus on the five most valuable GOVERN subcategories rather than implementing all 19. The NIST AI RMF allows selecting elements that fit your resources.

Q: Do we need to implement NIST AI RMF GOVERN before the other functions?

NIST positions GOVERN as a cross-cutting function that informs Map, Measure, and Manage, so starting with it is the usual recommendation. However, the framework lets organizations perform the functions in any order based on their needs.

Q: What’s the difference between NIST AI RMF GOVERN policies and AI system controls?

The NIST AI RMF GOVERN function operates at the organizational level (accountability, risk tolerance, decision-making). Technical controls on specific AI systems come from the Map, Measure, and Manage functions. GOVERN enables those technical functions to work effectively.

Q: How often should we update our NIST AI RMF GOVERN implementation?

The NIST AI RMF GOVERN function requires continuous attention. Many organizations conduct formal reviews quarterly or annually, with ad-hoc updates as AI technologies evolve or regulations change.

Q: What happens if we skip NIST AI RMF GOVERN?

Organizations that skip the NIST AI RMF GOVERN function often encounter inconsistent risk assessments across teams, unclear accountability during AI incidents, legal and regulatory exposure, and difficulty scaling AI initiatives. The Amazon recruiting tool example illustrates several of these failures.

Article based on NIST AI 100-1 (Artificial Intelligence Risk Management Framework) and supporting documentation from the NIST Trustworthy and Responsible AI Resource Center.