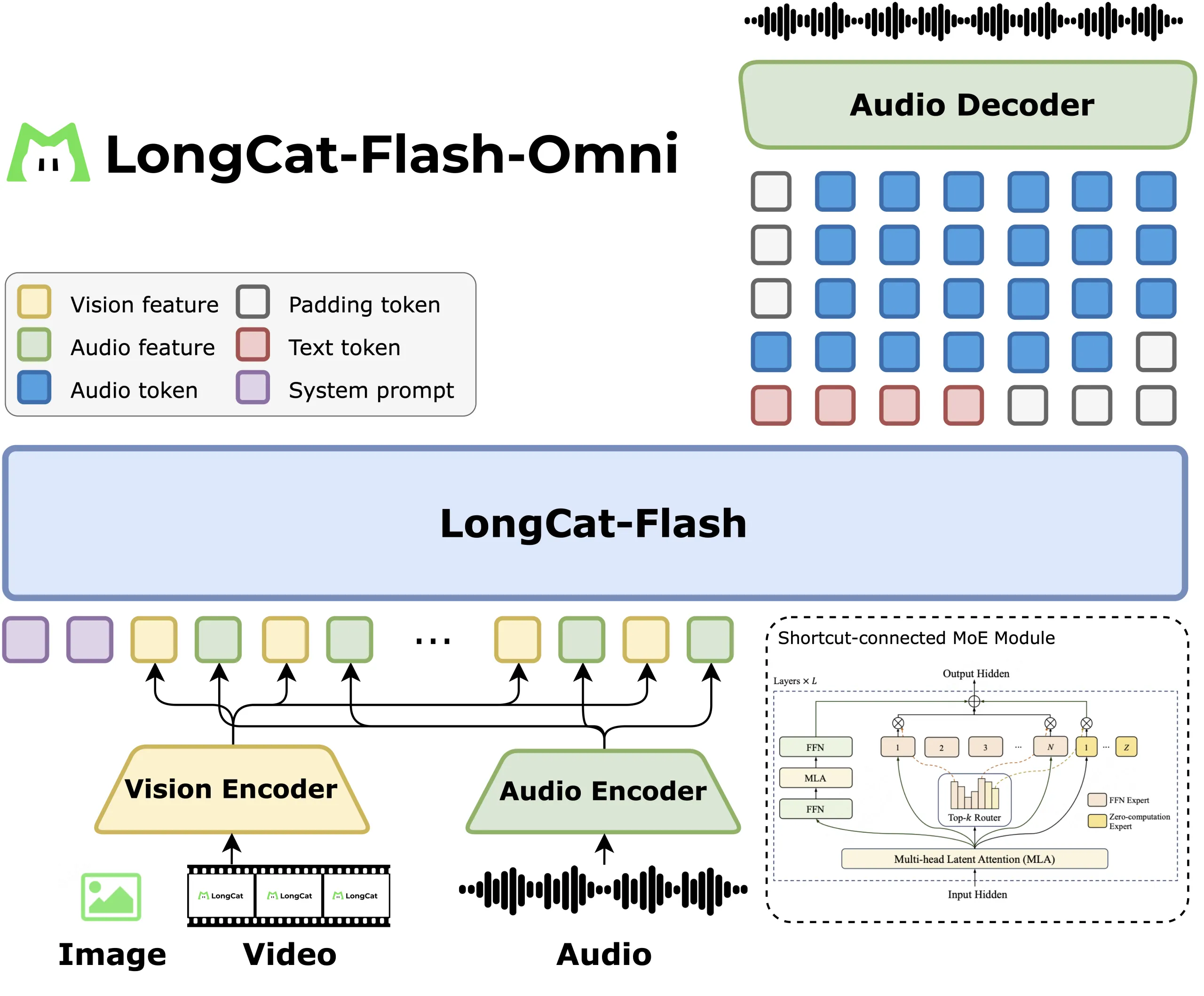

How do you design a single model that can listen, see, read, and respond in real time across text, image, video, and audio without sacrificing efficiency? Meituan’s LongCat team has released LongCat-Flash-Omni, an open-source omni-modal model with 560 billion total parameters, of which about 27 billion are activated per token, built on the

The post LongCat-Flash-Omni: A SOTA Open-Source Omni-Modal Model with 560B Parameters with 27B activated, Excelling at Real-Time Audio-Visual Interaction appeared first on MarkTechPost.