Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated January 20th, 2026


What Is ISO 42001 Clause 9: From AI Operations to Verification

Your AI risk assessments are complete. Risk treatments are implemented. Impact assessments documented. The operational controls from Clause 8 are running. Now comes the harder question: how do you prove any of it actually works?

Clause 9 of ISO/IEC 42001:2023 shifts focus from doing AI governance to verifying AI governance. It’s where organizations move beyond operational execution to systematic evaluation of whether their controls achieve intended outcomes.

Disclaimer: This article provides educational guidance on AI governance principles aligned with ISO standards. It does not reproduce ISO standard text. Organizations should obtain ISO/IEC 42001:2023 directly from ISO or national standards bodies for specific compliance requirements.

📋 EXECUTIVE SUMMARY

- Clause 9 represents the “Check” phase of Plan-Do-Check-Act, following Clause 8’s operational requirements

- Three components: monitoring/measurement, internal audits, and management reviews

- Creates objective evidence that operational controls from Clause 8 perform as intended

- Supports EU AI Act post-market monitoring requirements

- Integrates with existing ISO management systems (27001, 9001)

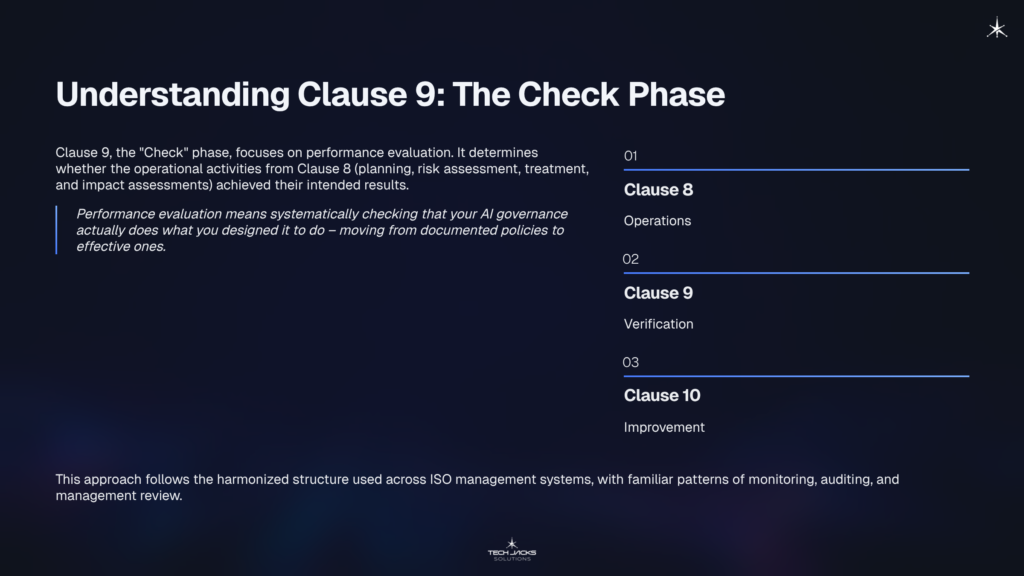

Understanding ISO 42001 Clause 9: The Check Phase

Performance evaluation transforms operational activity into verified outcomes. Clause 8 established operational planning, risk assessment execution, risk treatment implementation, and impact assessments. Clause 9 determines whether those activities achieved their intended results.

Without systematic verification, organizations can’t distinguish between functioning controls and documented controls. They assume risk treatments reduced risk. They believe monitoring detects drift. They trust that implemented controls prevent the harms they were designed to prevent.

Clause 9 of the AI management system standard establishes mandatory requirements (using “shall” language) for proving these assumptions correct (or identifying when they’re not). The standard structures performance evaluation around three interconnected activities that together provide comprehensive oversight of the AI Management System (AIMS).

In plain English: Performance evaluation means systematically checking that your AI governance actually does what you designed it to do. It’s the difference between having policies and having effective policies.

The approach follows the same harmonized structure used across ISO management systems. Organizations familiar with ISO 27001 or ISO 9001 will recognize the pattern of monitoring, auditing, and management review.

How ISO 42001 Clause 9 Works: Three Core Components

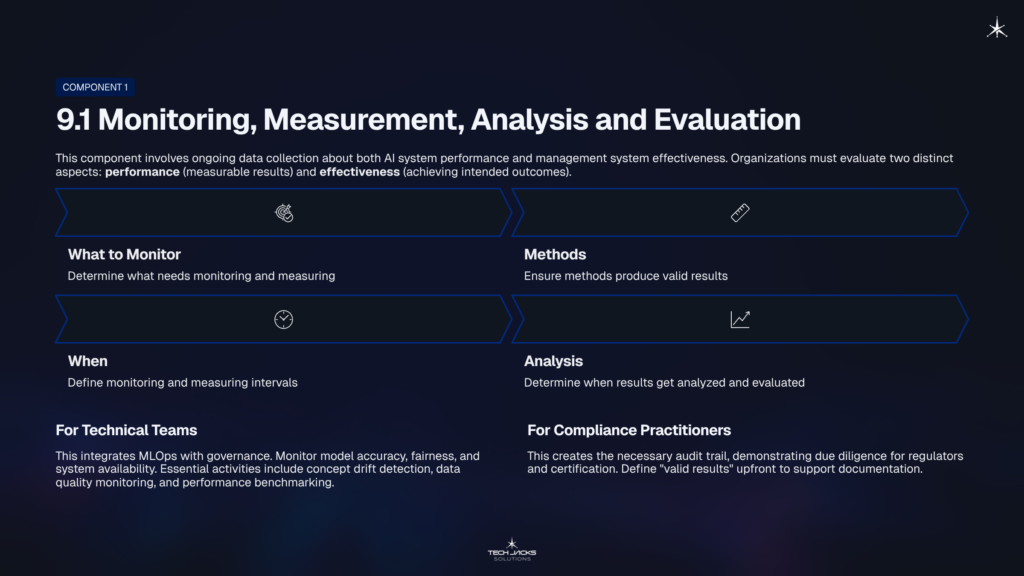

9.1 Monitoring, Measurement, Analysis and Evaluation

This component addresses ongoing data collection about both AI system performance and management system effectiveness. The standard requires organizations to evaluate two distinct aspects: performance (measurable results) and effectiveness (extent to which planned activities achieve intended outcomes).

Organizations must determine four specific elements: (a) what needs monitoring and measuring, (b) methods that ensure valid results, (c) when monitoring and measuring occurs, and (d) when results get analyzed and evaluated.
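To make the four elements concrete, the sketch below records each monitored item with its method, measurement interval, and evaluation interval, then flags gaps. It is a minimal illustration, not a format the standard prescribes: the `MonitoringItem` class, the `validate_plan` and `next_due` helpers, and the example items, owners, and intervals are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class MonitoringItem:
    """One row of a hypothetical Clause 9.1 monitoring plan."""
    what: str                  # (a) what is monitored and measured
    method: str                # (b) method expected to give valid results
    measure_every: timedelta   # (c) when monitoring and measuring occurs
    evaluate_every: timedelta  # (d) when results are analysed and evaluated
    owner: str


def validate_plan(plan: list[MonitoringItem]) -> list[str]:
    """List gaps: an undefined element or a non-positive interval."""
    problems = []
    for item in plan:
        label = item.what.strip() or "<unnamed item>"
        if not item.what.strip() or not item.method.strip():
            problems.append(f"{label}: 'what' or 'method' is undefined")
        if item.measure_every <= timedelta(0) or item.evaluate_every <= timedelta(0):
            problems.append(f"{label}: intervals must be positive")
    return problems


def next_due(item: MonitoringItem, last_measured: date,
             last_evaluated: date) -> tuple[date, date]:
    """Next measurement date and next evaluation date for one plan item."""
    return last_measured + item.measure_every, last_evaluated + item.evaluate_every


plan = [
    MonitoringItem("Credit model accuracy", "AUC on a weekly labelled sample",
                   timedelta(days=7), timedelta(days=30), "ML Ops"),
    MonitoringItem("Human override rate", "overrides / total decisions",
                   timedelta(days=1), timedelta(days=7), "Risk Office"),
]
print(validate_plan(plan))  # []
print(next_due(plan[0], date(2026, 1, 5), date(2026, 1, 1)))
```

Keeping measurement and evaluation intervals as separate fields mirrors elements (c) and (d): data may be collected daily while analysis happens weekly or monthly.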

Documented information must be available as evidence of the results. That evidence serves several purposes: demonstrating system health to stakeholders, identifying performance degradation before it causes harm, and feeding data into improvement processes.

For Technical Teams: Think of this as MLOps meets governance. You’re monitoring model accuracy, fairness metrics, and system availability while simultaneously tracking whether your governance processes function as designed. Concept drift detection, data quality monitoring, and performance benchmarking all contribute to this requirement.
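As one example of drift detection, the Population Stability Index (PSI) compares the distribution of a feature or model score in production against a baseline captured at validation. This is a minimal sketch assuming fixed bin edges chosen by the team; PSI is a common industry technique rather than anything ISO/IEC 42001 requires, and the 0.1 and 0.25 cut-offs in the hypothetical `drift_status` helper are widely used rules of thumb, not normative thresholds.

```python
import math
from bisect import bisect_right


def psi(baseline: list[float], current: list[float], edges: list[float],
        eps: float = 1e-6) -> float:
    """Population Stability Index of `current` relative to `baseline`.

    `edges` are sorted interior bin boundaries, giving len(edges) + 1 bins.
    Empty bins are floored at `eps` so the logarithm stays finite.
    """
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(score: float, warn: float = 0.1, alert: float = 0.25) -> str:
    """Map a PSI score to a status using rule-of-thumb cut-offs (an assumption)."""
    if score >= alert:
        return "alert"
    if score >= warn:
        return "warn"
    return "stable"


baseline = [i / 100 for i in range(100)]          # feature values at validation time
current = [min(v + 0.3, 0.99) for v in baseline]  # same feature, shifted in production
edges = [0.25, 0.5, 0.75]
print(drift_status(psi(baseline, baseline, edges)))  # stable
print(drift_status(psi(baseline, current, edges)))   # alert
```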

For Compliance Practitioners: This requirement creates the audit trail you’ll need. Documented monitoring results demonstrate due diligence to regulators and provide evidence during certification audits. The key is establishing what constitutes “valid results” before you need them.

💡 KEY TAKEAWAY: Monitoring frequency is organization-determined, but the requirement is mandatory. Organizations define “when” based on risk and system criticality. This is typically the highest-effort component of Clause 9 because it requires technical integration into AI operations and regular analysis against established criteria.

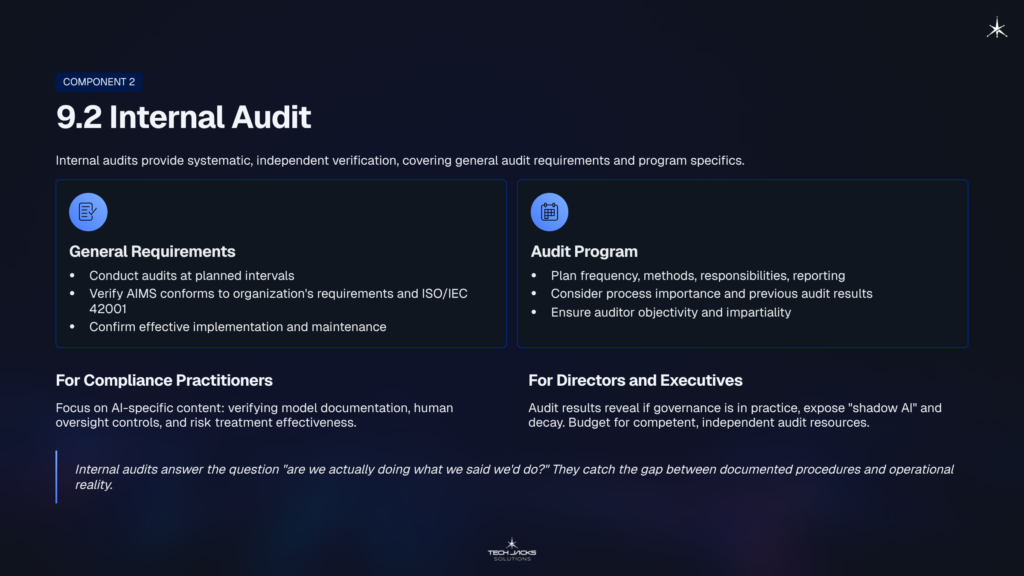

9.2 Internal Audit

Internal audits provide systematic, independent verification. The requirement has two parts: general audit requirements and audit program requirements.

The general component requires organizations to conduct audits at planned intervals. These audits determine whether the AIMS conforms to both the organization’s own requirements and the requirements of ISO/IEC 42001. They also verify that the system is effectively implemented and maintained (not just documented).

The audit program component requires planning that covers frequency, methods, responsibilities, and reporting. The program must take into account the importance of the processes concerned and the results of previous audits, which enables risk-based audit prioritization. Auditors must be selected, and audits conducted, in a way that ensures objectivity and impartiality; in practice, this means no one audits their own work. Audit results must be reported to relevant management.
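A risk-based audit program can be sketched as two small rules: order auditable areas by importance and by previous findings, then assign each area to an auditor who does not belong to the team that runs it. The sketch assumes a hypothetical 1 to 3 importance scale and a simple name-to-team mapping for auditors; `AuditArea`, `prioritise`, and `assign_auditor` are illustrative names, and real programs weigh more factors than team membership when judging impartiality.

```python
from dataclasses import dataclass


@dataclass
class AuditArea:
    name: str
    importance: int      # hypothetical 1 (low) to 3 (high) rating of process importance
    prior_findings: int  # findings raised on this area in previous audits
    owner_team: str      # team responsible for operating the process


def prioritise(areas: list[AuditArea]) -> list[AuditArea]:
    """Most important processes first; ties broken by previous findings."""
    return sorted(areas, key=lambda a: (a.importance, a.prior_findings), reverse=True)


def assign_auditor(area: AuditArea, auditors: dict[str, str]) -> str:
    """Return the first auditor whose home team does not own the area."""
    for name, team in auditors.items():
        if team != area.owner_team:
            return name
    raise ValueError(f"No impartial auditor available for {area.name}")


areas = [
    AuditArea("Model change management", 2, 3, "ML Platform"),
    AuditArea("AI impact assessment", 3, 0, "Risk Office"),
    AuditArea("Supplier AI due diligence", 1, 1, "Procurement"),
]
auditors = {"Priya": "ML Platform", "Tom": "Internal Audit"}  # name -> home team
schedule = [(a.name, assign_auditor(a, auditors)) for a in prioritise(areas)]
print(schedule)
```

Here Priya is skipped for model change management because her team owns that process, so Tom takes it.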

For Compliance Practitioners: This is familiar territory. Internal audit programs follow the same principles as information security audits. The difference is AI-specific content: verifying that model documentation matches actual implementations, that human oversight controls function as designed, and that risk treatments address the risks they’re supposed to address.

For Directors and Executives: Audit results tell you whether governance exists on paper or in practice. They reveal “shadow AI” (systems operating outside governance) and governance decay (controls that stopped being followed). Budget appropriately for competent, independent audit resources.

In plain English: Internal audits answer the question “are we actually doing what we said we’d do?” They catch the gap between documented procedures and operational reality.

9.3 Management Review

Top management must review the AIMS at planned intervals. This requirement ensures executive engagement isn’t optional or delegable.

The review requires specific mandatory inputs: (a) status of actions from previous reviews, (b) changes in external and internal context, (c) changes in stakeholder needs and expectations, (d) performance trends (nonconformities and corrective actions, monitoring results, audit findings), and (e) opportunities for continual improvement.

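The review produces specific outputs: decisions related to continual improvement opportunities and to any changes needed in the AI management system. Documented information must be available as evidence of the review results; recording both the inputs considered and the decisions made is the most practical way to provide it.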
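Because the inputs are mandatory, a review pack can be checked for gaps before the meeting and the record checked for decisions afterwards. The sketch below does both; the (a) to (e) labels follow the input list above, while `missing_inputs`, `record_complete`, and the sample pack contents are hypothetical.

```python
REQUIRED_INPUTS = {
    "a": "Status of actions from previous reviews",
    "b": "Changes in external and internal context",
    "c": "Changes in stakeholder needs and expectations",
    "d": "Performance trends (nonconformities, monitoring and audit results)",
    "e": "Opportunities for continual improvement",
}


def missing_inputs(review_pack: dict[str, str]) -> list[str]:
    """Return the input labels with no recorded content in the review pack."""
    return [key for key in REQUIRED_INPUTS if not review_pack.get(key, "").strip()]


def record_complete(review_pack: dict[str, str], decisions: list[str]) -> bool:
    """True when every input was considered and at least one decision was recorded."""
    return not missing_inputs(review_pack) and len(decisions) > 0


pack = {
    "a": "3 of 4 actions from the last review closed",
    "b": "New regulatory obligations for one high-risk system",
    "d": "Drift alerts on two models; one audit nonconformity open",
    "e": "",
}
print(missing_inputs(pack))  # ['c', 'e']
```

A decision of "no changes needed" still counts as an output, which is why the check only requires that at least one decision is recorded.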

For Directors and Executives: This is your governance checkpoint. Management review prevents “zombie” AI projects (systems that no longer serve business purposes but consume resources) and ensures AI strategy remains aligned with organizational direction. The requirement creates accountability at the highest level.

💡 KEY TAKEAWAY: Management review requires less effort than monitoring or auditing (typically quarterly or annual meetings) but carries significant strategic weight. Top management cannot claim ignorance of AI governance status if they’re conducting proper reviews.

Clause 9 Documentation Requirements

Each sub-clause mandates specific documented information. These are auditable requirements, not recommendations.

| Sub-Clause | Required Documentation |
|---|---|
| 9.1 | Evidence of monitoring and measurement results |
| 9.2 | Evidence of audit program implementation, and audit results and findings |
| 9.3 | Evidence of management review results (inputs considered, decisions made) |

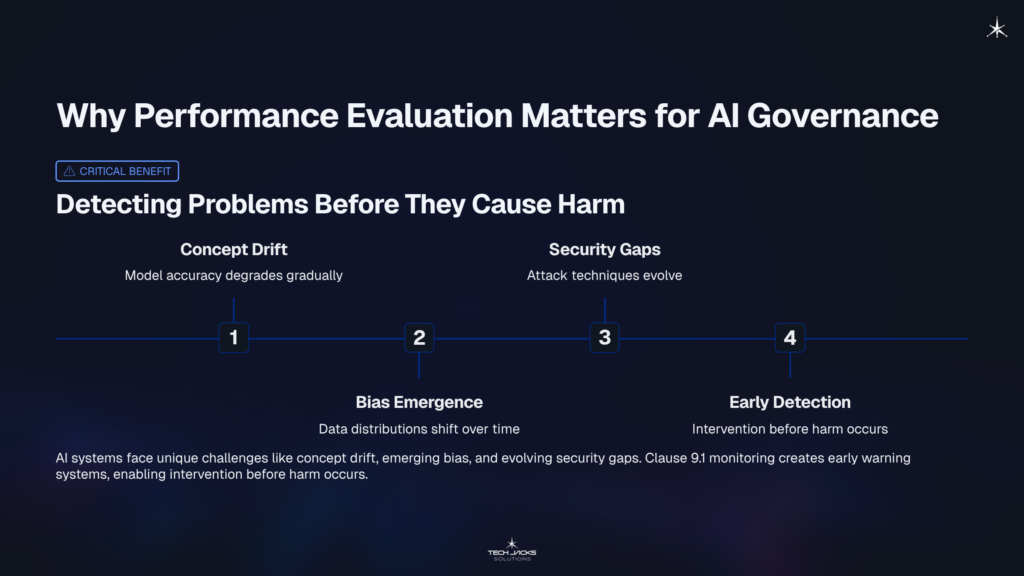

Why Performance Evaluation Matters for AI Governance

Detecting Problems Before They Cause Harm

AI systems don’t fail like traditional software. Model accuracy degrades gradually through concept drift. Bias emerges as data distributions shift. Security vulnerabilities appear as attack techniques evolve.

Clause 9.1 monitoring creates early warning systems for these AI-specific failure modes. Organizations can detect when systems operate outside intended conditions and intervene before harm occurs.
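A simple early-warning rule is to track accuracy over a sliding window of recent labelled predictions and raise an alert when it falls below an agreed floor. This is a minimal sketch assuming ground-truth labels eventually arrive for each prediction; the `RollingAccuracyMonitor` class, the window size, and the floor are hypothetical values an organization would set as part of its monitoring criteria.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Flag when accuracy over the most recent labelled predictions falls below a floor."""

    def __init__(self, window: int, floor: float):
        self.outcomes = deque(maxlen=window)  # True = correct prediction
        self.floor = floor

    def record(self, prediction, actual) -> bool:
        """Store one labelled outcome; return True if the system is outside tolerance."""
        self.outcomes.append(prediction == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence to judge yet
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.floor


monitor = RollingAccuracyMonitor(window=4, floor=0.75)
stream = [(1, 1), (1, 1), (0, 0), (1, 1), (1, 0), (0, 1)]  # (prediction, actual)
alerts = [monitor.record(p, a) for p, a in stream]
print(alerts)  # [False, False, False, False, False, True]
```

Requiring a full window before judging avoids alerting on the first few outcomes, at the cost of a short blind period after deployment.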

Supporting Regulatory Compliance

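The EU AI Act requires providers of high-risk AI systems to carry out post-market monitoring. Clause 9.1 can supply much of the mechanism for meeting that obligation by defining what is monitored, how, and at what intervals, although conformity with ISO/IEC 42001 does not by itself demonstrate compliance with the Act. The documentation requirements also create the audit trails regulators expect during investigations.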

Organizations operating under multiple regulatory frameworks benefit from integrated performance evaluation. One systematic approach addresses overlapping compliance obligations.

Building Stakeholder Confidence

Trust requires evidence. Customers, partners, investors, and regulators increasingly expect organizations to demonstrate responsible AI practices. Documented monitoring results and independent audit findings carry more weight than promises.

Performance evaluation transforms marketing statements into verifiable commitments.

Enabling Continual Improvement

Clause 9 outputs feed directly into Clause 10 (Improvement). Monitoring data, audit findings, and management review decisions provide the evidence base for corrective actions and system enhancements. Without Clause 9, improvement efforts lack objective direction.

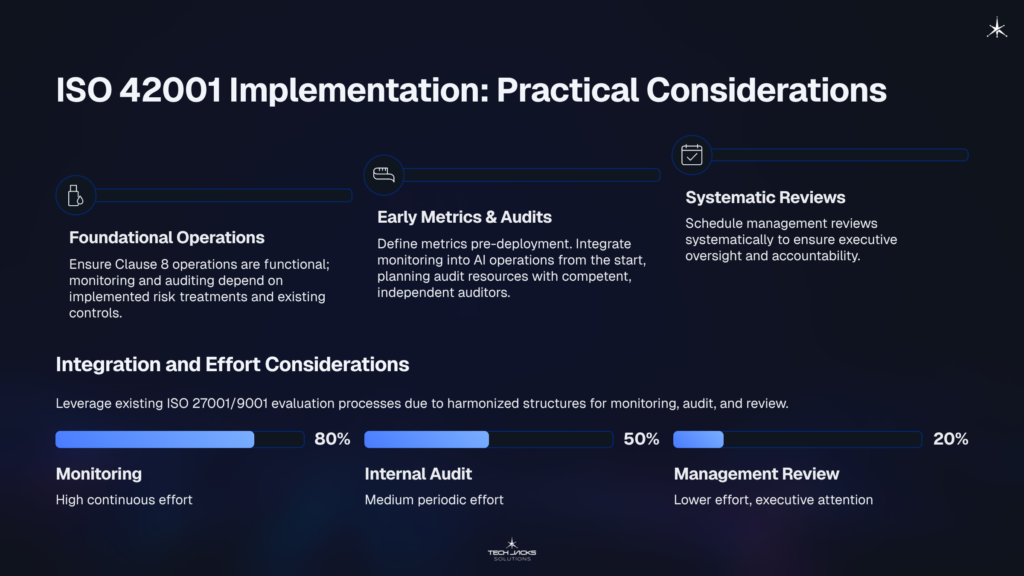

ISO 42001 Implementation: Practical Considerations

Getting Started with Performance Evaluation

Organizations need functioning Clause 8 operations before meaningful performance evaluation. You can’t monitor risk treatments that aren’t implemented. You can’t audit controls that don’t exist. Clause 9 evaluates what Clause 8 establishes.

Define metrics before deployment. Build monitoring into AI operations from the start. Plan audit resources with competent, independent auditors. Schedule management reviews systematically.

Integration and Effort Considerations

Organizations certified to ISO 27001 or ISO 9001 can extend existing evaluation processes. The harmonized structure means monitoring, audit, and review share common foundations.

Effort varies significantly: monitoring requires high continuous effort, internal audit requires medium periodic effort, and management review requires lower effort but demands executive attention.

💡 KEY TAKEAWAY: Don’t underestimate monitoring effort. Organizations that budget only for periodic audits miss the continuous oversight that catches AI problems early.

ISO 42001 Clause 9 FAQ

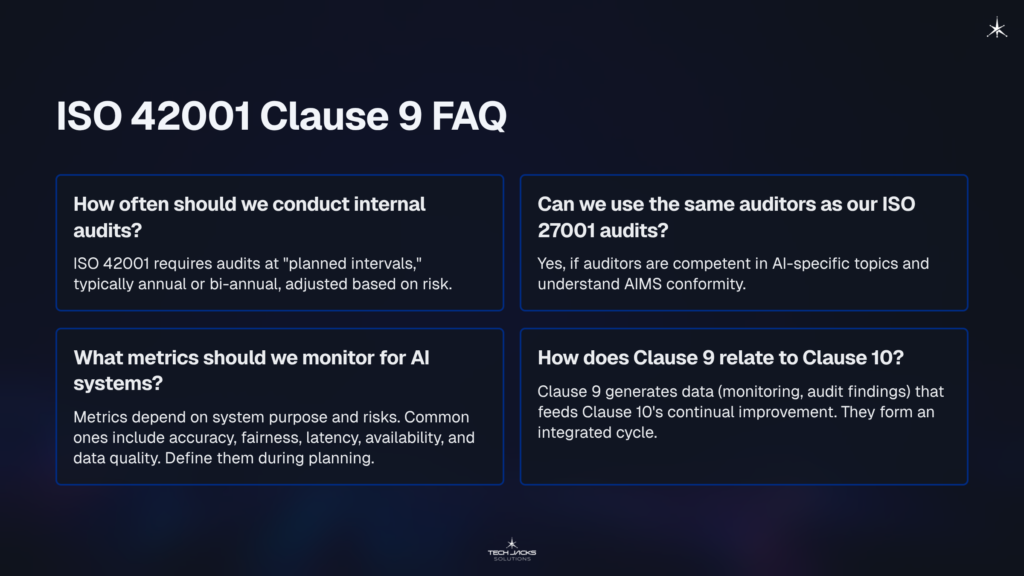

How often should we conduct internal audits?

ISO/IEC 42001 requires audits at “planned intervals” without specifying frequency. Most organizations align with existing audit cycles (annual or semi-annual), adjusting based on risk and previous findings.

Can we use the same auditors as our ISO 27001 audits?

Yes, provided they have competence in AI-specific topics. Auditors need to understand AI systems and governance controls to evaluate AIMS conformity effectively.

What metrics should we monitor for AI systems?

This depends on the system’s purpose and risks. Common metrics include accuracy, fairness measures, latency, availability, and data quality indicators. Define metrics during planning before deployment.
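Fairness measures depend on context, and ISO/IEC 42001 does not prescribe one. As an illustration only, demographic parity difference compares the rate of positive predictions across groups. The sketch assumes binary predictions (1 = positive outcome) and a single group attribute; the function names and sample data are hypothetical.

```python
def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must align")
    by_group: dict[str, list[int]] = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    return {g: sum(p) / len(p) for g, p in by_group.items()}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(preds, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```

A value near 0.0 indicates similar selection rates; what gap counts as acceptable is a risk decision for the organization, and other measures (such as comparing error rates across groups) may fit a given system better.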

How does Clause 9 relate to Clause 10?

Clause 9 generates the data Clause 10 uses. Monitoring results, audit findings, and management review outputs all feed into continual improvement. They work as an integrated cycle.


AI Governance Standards and Resources

Official Standards:

- ISO/IEC 42001:2023 – AI management systems (available through ISO and national standards bodies)

- ISO/IEC 22989:2022 – AI concepts and terminology

- ISO/IEC 23894:2023 – Guidance on AI risk management

Regulatory Documents:

- EU AI Act (Regulation (EU) 2024/1689) – EU regulatory framework for AI

- NIST AI Risk Management Framework (AI RMF 1.0) – US framework for AI risk management

Related Standards:

- ISO/IEC 27001:2022 – Information security management

- ISO 9001:2015 – Quality management systems

Your Next Steps: Implementing Performance Evaluation

This Week:

☐ Inventory current monitoring capabilities for AI systems

☐ Review existing internal audit program for AI coverage

☐ Assess management review agenda for AIMS topics

This Month:

☐ Define metrics for critical AI systems

☐ Identify audit competency gaps

☐ Schedule initial management review
