Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated: 11/25/2025


What Is ISO 42001 Clause 7

Building the Foundation: Resources, Competence, and Communication

Your AI management system might look great on paper. But the reality: you can’t execute without the right people, tools, and processes.

That’s what Clause 7 fixes. It’s about the practical stuff that makes your AI governance actually work.

Disclaimer: This article discusses general principles for AI governance in alignment with ISO standards. Organizations should consult ISO/IEC 42001:2023 directly for specific compliance requirements.

WHAT YOU’LL LEARN:

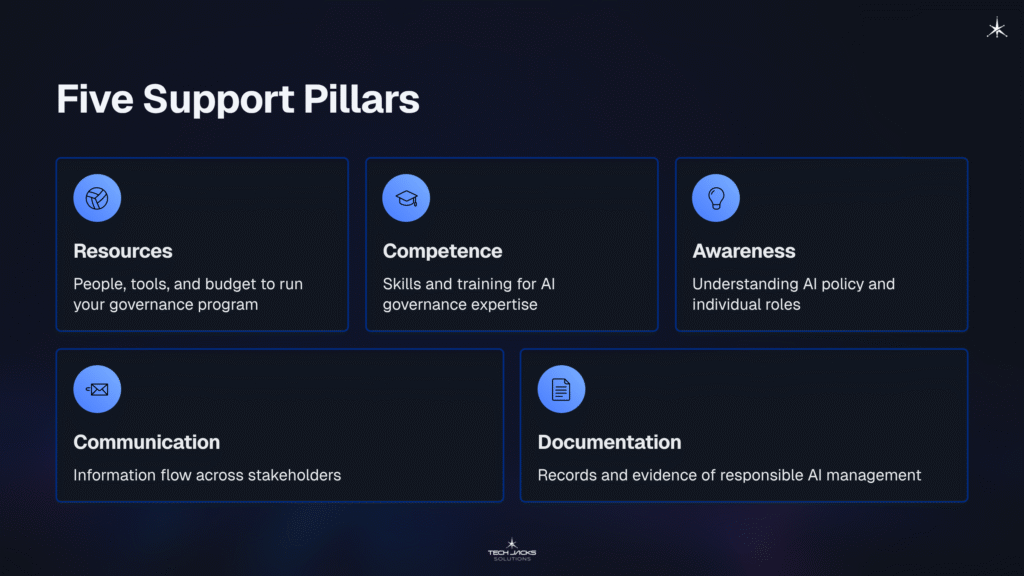

Clause 7 defines five support requirements for AI governance:

- Resources (7.1) – People, tools, budget

- Competence (7.2) – Skills and training

- Awareness (7.3) – Understanding AI policy

- Communication (7.4) – Information flow

- Documented Information (7.5) – Records and evidence

QUICK SUMMARY

• Support infrastructure realistically takes 4-7 months accounting for organizational overhead

• Five areas: resources, competence, awareness, communication, documentation

• You need people who understand both AI technology AND governance

• Documentation proves you’re managing AI responsibly

What Clause 7 Actually Covers

Think of support requirements as your operational foundation. They span five areas: resources, competence, awareness, communication, and documented information.

If you’ve worked with ISO 27001 or ISO 9001, you’ll recognize the pattern. But AI governance needs specialized skills that traditional IT governance doesn’t address.

In plain English: Support means everything your system needs to function. People, training, tools, and processes.

You can’t just assign your existing IT team. AI systems need ongoing monitoring, continuous data management, and expertise that spans technical and ethical domains.

Why This Matters Right Now

Technical Reality: AI systems aren’t like traditional software. Models drift. Data quality affects performance. Algorithmic decisions need oversight. Without proper support infrastructure, your governance becomes a filing cabinet nobody uses.

Regulatory Pressure: The EU AI Act’s Article 11 requires technical documentation for high-risk AI systems, and Article 17 requires providers to maintain a documented quality management system. Regulators will check whether you have people who actually know what they’re doing.

Organizational Chaos: AI projects typically span data science, IT, legal, and business units. Without structured communication, these groups work in silos. Result? Risk assessments that miss critical details.

BOTTOM LINE: Organizations may find that a 4-7 month investment in support infrastructure prevents 18-26 months of fixing governance that doesn’t work.

Five Support Pillars

1. Resources (Clause 7.1)

You need to provide resources for establishing, implementing, and improving your AI management system.

This means three things. Human resources: enough people across AI lifecycle stages. Technological resources: development environments, monitoring tools, infrastructure. Financial resources: ongoing costs for training, audits, improvements.

Translation: Having enough people, money, and tools to run your governance program, not just document it.

The standard’s Annex A.4 covers the controls for AI system resources. You need to identify data resources throughout the AI lifecycle, document your algorithms and models, and account for computing resources from development to production.

Here’s the thing: data scientists tend to understand models better than governance. Compliance professionals tend to understand risk frameworks better than AI technology. Building teams with both skill sets takes deliberate investment and planning.

2. Competence (Clause 7.2)

You must determine necessary competencies for anyone whose work affects AI performance, ensure competence through education or training, and maintain documented evidence.

Annex B.4.6 emphasizes diverse expertise: technical knowledge (AI concepts, machine learning, data science), governance expertise (risk frameworks, audit practices), and legal understanding (data privacy, AI regulations, ethical principles).

Real Deal: You can’t staff AI governance with people who only know tech OR only know compliance. You need both.

Organizations can create competency matrices showing the knowledge and skills each role requires. Training programs close the gaps, and assessments or demonstrated capability verify that the training worked.
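A competency matrix can be as simple as a lookup of required skill levels per role. The sketch below is one hypothetical way to do it, not something the standard prescribes: the role names, the skill names, the 0-3 proficiency scale, and the `competency_gaps` helper are all assumptions made for illustration.

```python
# Required proficiency per role. The roles, skills, and 0-3 scale
# (0 = none, 3 = expert) are illustrative assumptions.
REQUIRED = {
    "data_scientist": {
        "machine_learning": 3,
        "risk_frameworks": 2,
        "ai_regulations": 1,
    },
    "compliance_analyst": {
        "machine_learning": 1,
        "risk_frameworks": 3,
        "ai_regulations": 3,
    },
}


def competency_gaps(role, current):
    """Return {skill: shortfall} for every skill below the required level.

    Skills missing from `current` are treated as level 0.
    """
    required = REQUIRED[role]
    return {
        skill: level - current.get(skill, 0)
        for skill, level in required.items()
        if current.get(skill, 0) < level
    }


# A data scientist with strong ML skills and no governance background.
gaps = competency_gaps(
    "data_scientist",
    {"machine_learning": 3, "risk_frameworks": 0},
)
print(gaps)  # {'risk_frameworks': 2, 'ai_regulations': 1}
```

The output doubles as a training plan input: each entry is a skill to train and how far the person has to go. Keeping the results over time gives you the documented evidence Clause 7.2 asks for.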

3. Awareness (Clause 7.3)

Everyone working under your control must know three things: the AI policy, how they contribute to effectiveness, and what happens if they don’t follow requirements.

Awareness isn’t the same as competence. Competence means having skills. Awareness means understanding why it matters.

Programs typically include onboarding, regular policy updates, and scenario-based training showing what goes wrong. You’re building a culture where everyone understands their role.

A dev team might be great at building models but unaware of how their documentation affects audits. Awareness programs bridge that gap and prevent surprises at audit time.

4. Communication (Clause 7.4)

You need to figure out what to communicate, when, to whom, and how.

Internal stakeholders include technical teams, leadership, and business units. External ones include customers, regulators, and partners. Plans specify regular touchpoints with appropriate timing.

The cadence is yours to set. For example, technical teams might get weekly updates while leadership gets quarterly reviews. Customers get transparency information about AI systems affecting them.

KEY POINT: Get the right information to the right people at the right time in a usable format.
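The what, when, to whom, and how of a communication plan map neatly onto a small data structure. The following is a minimal sketch under stated assumptions: the `CommunicationItem` class, the `due_items` helper, the channel names, and the yearly customer cadence are hypothetical, and the 7-day and 90-day values only approximate the weekly and quarterly examples above.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class CommunicationItem:
    what: str        # content to communicate
    audience: str    # to whom
    channel: str     # how
    every_days: int  # when: cadence in days


# Illustrative plan. Channels and the customer cadence are assumptions.
PLAN = [
    CommunicationItem("Model monitoring update", "technical teams", "team meeting", 7),
    CommunicationItem("Governance review", "leadership", "review deck", 90),
    CommunicationItem("AI transparency notice", "customers", "public web page", 365),
]


def due_items(plan, last_sent, today):
    """Return items never sent, or whose cadence has elapsed since last sent."""
    due = []
    for item in plan:
        sent = last_sent.get(item.what)
        if sent is None or today - sent >= timedelta(days=item.every_days):
            due.append(item)
    return due


last_sent = {
    "Model monitoring update": date(2025, 1, 10),
    "Governance review": date(2024, 10, 1),
}
for item in due_items(PLAN, last_sent, date(2025, 1, 15)):
    print(f"{item.what} -> {item.audience} via {item.channel}")
# Governance review -> leadership via review deck
# AI transparency notice -> customers via public web page
```

Writing the plan down as data rather than prose makes gaps obvious: an audience with no item, or an item with no owner channel, stands out immediately.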

5. Documentation (Clause 7.5)

Your system needs the documented information required by the standard, plus whatever else is necessary for it to be effective.

You need appropriate identification, format, review, and approval processes. How much documentation you need varies with your organization’s size, the complexity of its activities, and the competence of its personnel.

Key docs: AI policy, risk assessment methods, risk treatment plans, competency records, audit reports. Documentation guides people and proves to auditors you’re managing AI responsibly.

For AI systems that work like “black boxes,” documented governance is your primary way of demonstrating control to regulators.
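The identification, format, review, and approval requirements above can be tracked in a simple document register. This is a sketch only: the `ControlledDocument` fields, the `needs_attention` helper, the document IDs, approver titles, and dates are all hypothetical, and a real register would more likely live in a document management system than in a script.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ControlledDocument:
    doc_id: str                 # identification
    title: str
    file_format: str            # format, e.g. "pdf" or "markdown"
    approved_by: Optional[str]  # approval; None means not yet approved
    next_review: date           # review due date


def needs_attention(docs, today):
    """Return {doc_id: reason} for documents that are unapproved or past review."""
    issues = {}
    for doc in docs:
        if doc.approved_by is None:
            issues[doc.doc_id] = "awaiting approval"
        elif doc.next_review < today:
            issues[doc.doc_id] = "review overdue"
    return issues


# Illustrative register entries. IDs, approvers, and dates are made up.
REGISTER = [
    ControlledDocument("AIMS-POL-001", "AI policy", "pdf", "CISO", date(2026, 1, 31)),
    ControlledDocument("AIMS-RA-002", "Risk assessment method", "markdown", None, date(2025, 9, 30)),
    ControlledDocument("AIMS-CR-003", "Competency records", "xlsx", "HR lead", date(2025, 3, 31)),
]

print(needs_attention(REGISTER, date(2025, 6, 1)))
# {'AIMS-RA-002': 'awaiting approval', 'AIMS-CR-003': 'review overdue'}
```

A check like this is worth running before an audit, since unapproved or stale documents are among the easiest findings for an auditor to spot.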

Making It Work

Build Competency: Start with skills gap analysis. Combine technical and governance training. Verify through assessments.

Create Awareness: Connect governance to daily work. Use communications, scenario training, and recognition programs.

Set Up Communication: Define information needs. Create templates and escalation paths.

Hypothetical Implementation Scenarios

Note: The following are hypothetical examples illustrating common patterns.

Hypothetical: Financial Services – Consider a hypothetical financial services organization creating an AI governance team that combines data scientists, risk managers, and compliance professionals. A competency framework might define 10-15 essential competencies. Training programs realistically span 6-10 months once you account for scheduling and learning curves. The communication plan might call for monthly leadership updates and quarterly regulator reports.

Hypothetical: Healthcare Technology – Consider a hypothetical healthcare organization developing diagnostic AI. Its competency requirements emphasize clinical expertise alongside AI knowledge. Documentation practices create audit trails linking updates to clinical performance. Implementation realistically spans 5-8 months given regulatory complexity.

Hypothetical: Manufacturing – Consider a hypothetical manufacturing organization deploying predictive maintenance AI, training maintenance personnel on capabilities and limitations over several months. Communication ensures managers understand model confidence levels.

Getting Ready

Invest in support infrastructure when implementing AI systems that need governance. It is essential when you face regulatory requirements, customer accountability demands, or internal risk management needs.

Already certified to other ISO management systems? Use existing infrastructure. If HR already manages competency records for ISO 27001, or documentation processes already exist for ISO 9001, extend them to cover AI needs.

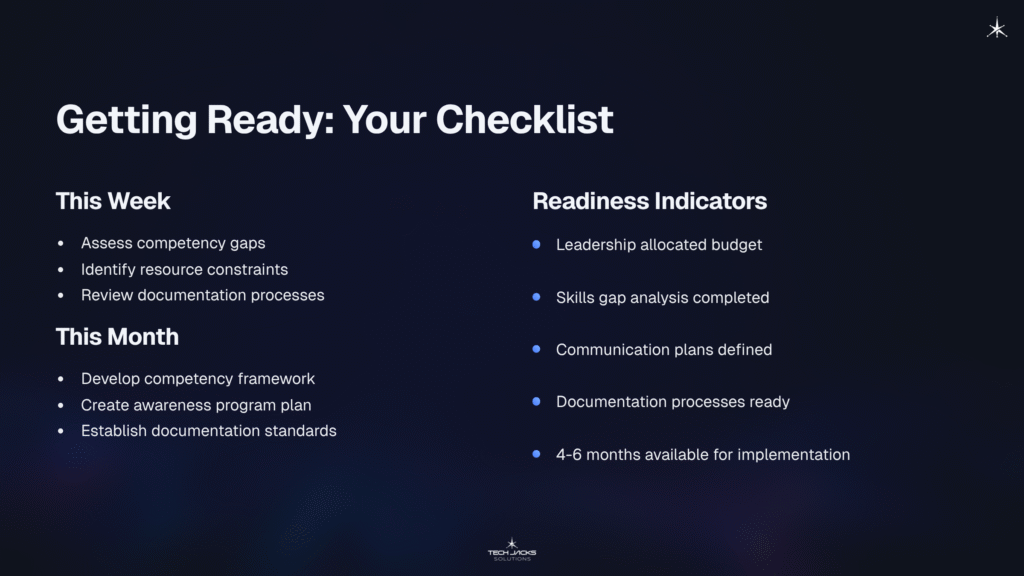

Realistic readiness indicators:

- Leadership allocated budget

- Skills gap analysis completed

- Communication plans defined

- Documentation processes ready

- Realistically 4-7 months available for implementation (accounting for organizational overhead)

Common Questions

How much training do people need?

Data scientists with no governance background realistically need 35-50 hours covering risk management, regulations, and documentation. Compliance professionals need a similar amount of time on AI concepts.

Can we outsource this?

You can engage consultants for specialized expertise. But you must still determine the required competencies and ensure the people doing the work meet them. You’re accountable even with external resources.

Should contractors do awareness training?

Yes, if they do work affecting your system. Clause 7.3 covers “persons doing work under the organization’s control.”

Standards and Resources

Official Standards:

- ISO/IEC 42001:2023 – AI management systems

- ISO/IEC 22989:2022 – AI concepts and terminology

- ISO/IEC 42005:2025 – AI system impact assessment guidance

Regulatory Documents:

- EU AI Act – EU regulatory framework

- NIST AI Risk Management Framework – US framework

Related Standards:

- ISO/IEC 27001:2022 – Information security management

- ISO 31000:2018 – Risk management guidelines

Next Steps

This Week:

☐ Assess competency gaps

☐ Identify resource constraints

☐ Review documentation processes

This Month:

☐ Develop competency framework

☐ Create awareness program plan

☐ Establish documentation standards

Support infrastructure enables everything else. You can’t implement risk assessments, impact assessments, or operational controls without the people, processes, and systems that Clause 7 requires. This is where governance moves from theory to practice.