Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated January 24th, 2026

What Is ISO 42001 Clause 10: Improvement?

The Final Phase of AI Governance That Actually Matters

You’ve built your AI management system. Policies are documented. Risk assessments are complete. Audits have happened. Now what?

This is where most organizations stall. They treat compliance as a destination rather than a process. Clause 10 exists specifically to prevent that stagnation, ensuring your governance evolves alongside your actual AI capabilities.

The improvement requirements represent the “Act” phase of the Plan-Do-Check-Act (PDCA) cycle that ISO uses across management system standards. They are the mechanism that keeps AI governance from becoming shelf documentation that nobody references after certification.

Disclaimer: This article discusses general principles for AI governance in alignment with ISO standards. Organizations should consult ISO/IEC 42001:2023 directly for specific compliance requirements.

📋 EXECUTIVE SUMMARY

- Clause 10 contains two requirements: continual improvement (10.1) and nonconformity management (10.2)

- Organizations must maintain three system attributes: suitability, adequacy, and effectiveness

- Nonconformities require root cause analysis, not just quick fixes

- Corrective actions must be proportional to actual impact

- Critical for surveillance audits and certification maintenance

What Is ISO 42001 Clause 10?

Understanding ISO 42001 Clause 10 starts with its position in the framework. It’s the last of the standard’s requirement clauses (Clauses 4 through 10), and that placement is intentional. You can’t improve a system you haven’t built, monitored, and evaluated.

The clause divides into two sub-requirements. Clause 10.1 addresses continual improvement at the strategic level. Clause 10.2 handles tactical response to specific failures (called “nonconformities” in ISO terminology). A nonconformity is any instance where a requirement isn’t met, whether from the standard itself, your own policies, or regulatory obligations.

In plain English: Clause 10 is about fixing what’s broken and making the system better over time. When something goes wrong, you don’t just patch it. You figure out why it happened and prevent it from happening again.

ISO management systems share a harmonized structure across standards like ISO 27001 and ISO 9001. If you’ve worked with other certifications, the improvement requirements will feel familiar. The difference lies in applying these concepts to AI systems, where technology changes faster and failure modes can be harder to predict.

Why Improvement Requirements Matter for AI Governance

Technology Evolution Speed: AI capabilities change faster than traditional IT systems. A governance framework designed for 2024 models may not address 2026 capabilities. The continual improvement requirement forces regular reassessment of whether your policies and controls still fit your technology landscape.

Unpredictable Failure Modes: AI systems can fail in ways that weren’t anticipated during risk assessment. A model might produce biased outputs only under specific conditions outside testing scenarios. The nonconformity process provides structured response when unexpected problems emerge.

Certification Maintenance: ISO 42001 certification isn’t permanent. Certification bodies conduct annual surveillance audits during the three-year certificate cycle, specifically checking whether continual improvement is occurring. Organizations that can’t demonstrate Clause 10 activity risk certification suspension.

💡 KEY TAKEAWAY: Organizations skipping rigorous improvement processes often discover problems during surveillance audits rather than through their own monitoring. Fixing issues reactively under auditor scrutiny costs more (in time, resources, and reputation) than catching them proactively.

How ISO 42001 Clause 10 Works: Core Requirements

Clause 10.1: Continual Improvement

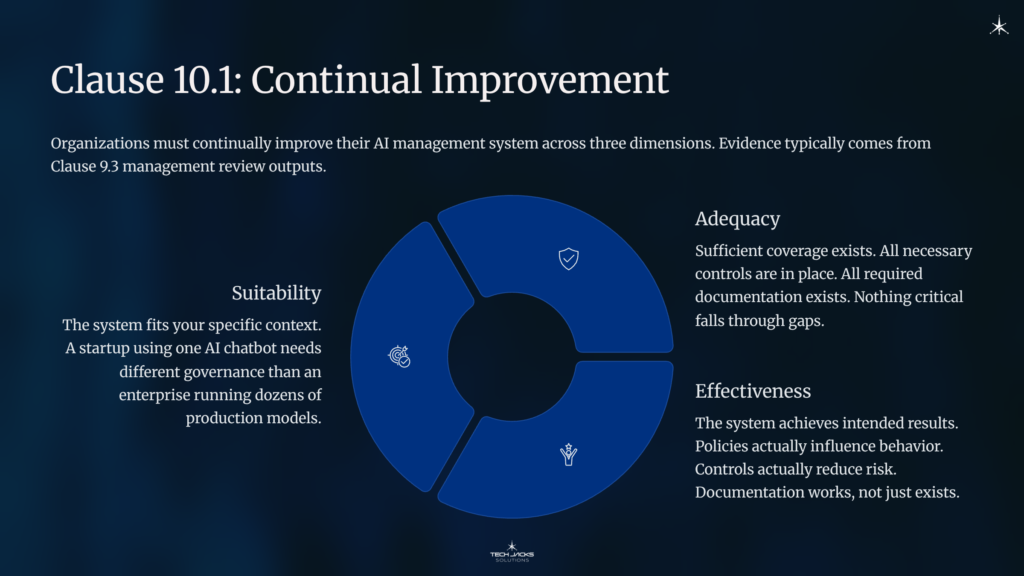

Organizations must continually improve their AI management system across three dimensions. The evidence for this typically comes from Clause 9.3 management review outputs, which must include decisions related to improvement opportunities.

Suitability means the system fits your specific context. A startup using one AI chatbot needs different governance than an enterprise running dozens of production models. What’s suitable changes as organizational context shifts.

Adequacy means sufficient coverage exists. All necessary controls are in place. All required documentation exists. Nothing critical falls through gaps.

Effectiveness means the system achieves intended results. Policies actually influence behavior. Controls actually reduce risk. This isn’t about documentation existing. It’s about documentation working.

Clause 10.2: Nonconformity and Corrective Action

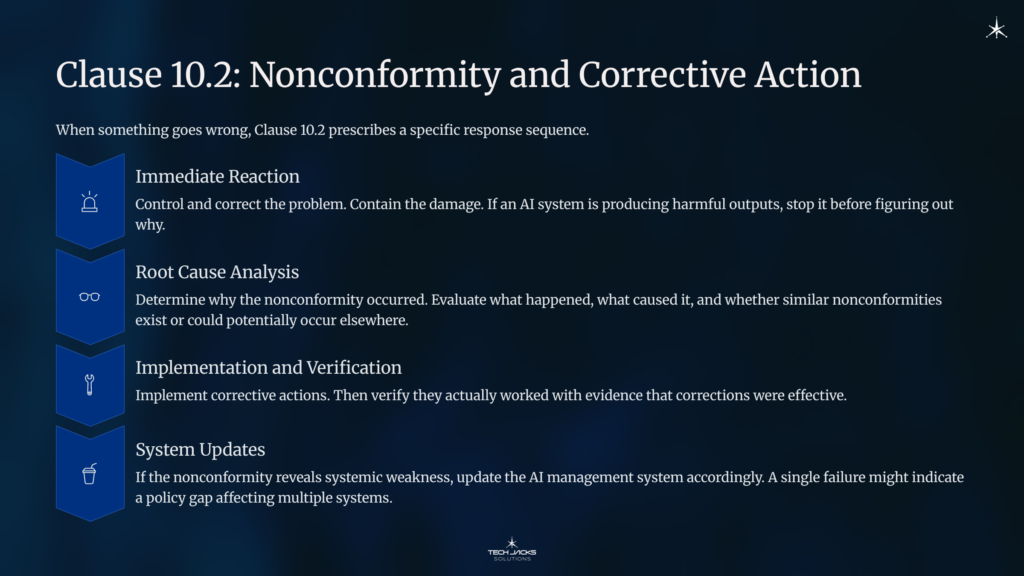

When something goes wrong, Clause 10.2 prescribes a specific response sequence.

Immediate Reaction: Control and correct the problem. Contain the damage. If an AI system is producing harmful outputs, stop it from producing more before you figure out why.

Root Cause Analysis: Quick fixes aren’t enough. Organizations must determine why the nonconformity occurred. The standard requires evaluating three things: what happened, what caused it, and whether similar nonconformities exist or could potentially occur elsewhere. That third element is mandatory, not optional.

Implementation and Verification: Implement corrective actions. Then verify they actually worked. Organizations frequently assume their fixes solve problems without confirming the assumption. The standard requires evidence that corrections were effective.

System Updates: If the nonconformity reveals systemic weakness, update the AI management system accordingly. A single failure might indicate a policy gap, training deficiency, or missing control affecting multiple systems.

What does this look like in practice? A typical nonconformity might involve an AI system producing outputs that violate your documented fairness thresholds. The immediate reaction stops the problematic outputs. Root cause analysis might reveal the training data contained historical bias that wasn’t caught during validation. The corrective action addresses data quality processes. Verification confirms the updated process catches similar issues. And the organization checks whether other AI systems used similar data sources.
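That last check, whether other AI systems draw on the same data, can be sketched in a few lines of Python. The inventory, the system and data source names, and the function `systems_sharing_sources` are all hypothetical and exist only for illustration; a real inventory would come from your own asset or data lineage records.

```python
def systems_sharing_sources(
    inventory: dict[str, set[str]], affected: str
) -> dict[str, set[str]]:
    """Return other systems that use any data source of the affected system.

    `inventory` maps a system name to the set of data sources it uses.
    The result maps each overlapping system to the sources it shares.
    """
    affected_sources = inventory[affected]
    return {
        name: sources & affected_sources
        for name, sources in inventory.items()
        if name != affected and sources & affected_sources
    }


# Hypothetical inventory: every name here is made up for the example.
inventory = {
    "loan-scoring": {"applications-2015-2020", "credit-bureau"},
    "marketing-ranker": {"clickstream"},
    "fraud-model": {"credit-bureau", "transactions"},
}

print(systems_sharing_sources(inventory, "loan-scoring"))
# {'fraud-model': {'credit-bureau'}}
```

An overlap does not prove the second system has the same problem. It only tells you where to look next.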

💡 KEY TAKEAWAY: The standard explicitly requires corrective actions be proportional to effects. Minor administrative errors don’t require the same response as AI safety incidents. Calibrate your response to actual impact.
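One way to make proportionality concrete is to write your criteria down as a simple lookup. The sketch below is an assumption-heavy illustration: the three impact tiers, the approver roles, and the verification deadlines are invented for the example and are not prescribed by the standard. Substitute the criteria your own organization has defined.

```python
from dataclasses import dataclass
from enum import IntEnum


class Impact(IntEnum):
    """Hypothetical impact tiers; the standard does not define these."""
    MINOR = 1     # e.g. an administrative or documentation error
    MODERATE = 2  # e.g. a control that failed without causing harm
    SEVERE = 3    # e.g. an AI safety incident or harmful outputs


@dataclass(frozen=True)
class ResponsePlan:
    approver: str         # who signs off on the corrective action
    days_to_verify: int   # deadline for confirming the fix worked
    suspend_system: bool  # whether to halt the AI system while fixing


def plan_response(impact: Impact) -> ResponsePlan:
    """Map an impact tier to an illustrative, proportional response."""
    if impact is Impact.SEVERE:
        return ResponsePlan("executive sponsor", 7, True)
    if impact is Impact.MODERATE:
        return ResponsePlan("department head", 30, False)
    return ResponsePlan("process owner", 90, False)
```

Calling `plan_response(Impact.MINOR)` returns the lightest plan, while `plan_response(Impact.SEVERE)` escalates approval and suspends the system. The value of writing this down is consistency: two people assessing the same incident should land on the same response.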

ISO 42001 Implementation: Evidence Requirements

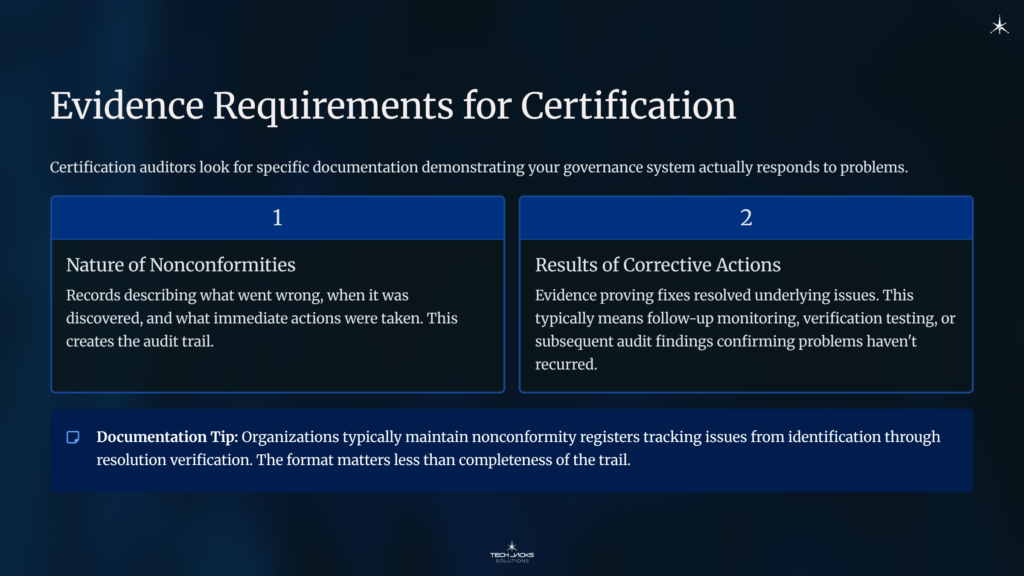

Certification auditors look for specific documentation.

Nature of Nonconformities: Records describing what went wrong, when it was discovered, and what immediate actions were taken. This creates the audit trail demonstrating your governance system actually responds to problems.

Results of Corrective Actions: Evidence proving fixes resolved underlying issues. This typically means follow-up monitoring, verification testing, or subsequent audit findings confirming problems haven’t recurred.

Organizations typically maintain nonconformity registers tracking issues from identification through resolution verification. The format matters less than completeness of the trail.
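As a minimal sketch of what “completeness of the trail” could mean, here is one possible shape for a register entry in Python. The field names and the `missing_evidence` helper are assumptions chosen for illustration, not a format the standard or any auditor mandates. A spreadsheet with the same columns would serve equally well.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Nonconformity:
    """One register entry, from identification to verified resolution."""
    identifier: str
    description: str      # nature of the nonconformity: what went wrong
    discovered_on: date   # when it was discovered
    immediate_actions: list[str] = field(default_factory=list)
    root_cause: Optional[str] = None
    corrective_actions: list[str] = field(default_factory=list)
    verification_evidence: Optional[str] = None  # proof the fix worked

    def missing_evidence(self) -> list[str]:
        """List the parts of the trail that are still empty."""
        gaps = []
        if not self.immediate_actions:
            gaps.append("immediate_actions")
        if self.root_cause is None:
            gaps.append("root_cause")
        if not self.corrective_actions:
            gaps.append("corrective_actions")
        if self.verification_evidence is None:
            gaps.append("verification_evidence")
        return gaps


def open_items(register: list[Nonconformity]) -> list[Nonconformity]:
    """Return entries whose trail is not yet complete."""
    return [nc for nc in register if nc.missing_evidence()]
```

Running `open_items` over the register before a management review or surveillance audit gives a quick list of entries that still lack a root cause or verification evidence.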

Supporting Controls and Integration

Several Annex A controls directly support Clause 10. Control A.2.4 requires periodic AI policy review to ensure continuing suitability, adequacy, and effectiveness, mirroring 10.1’s exact language. Control A.6.2.6 mandates repair capabilities and system monitoring, supporting immediate reaction requirements. Control A.6.2.8 requires event logging, providing evidence for root cause analysis.

These controls connect Clause 10 to broader AI risk management practices. When root cause analysis reveals gaps in risk identification, findings should feed back into Clause 6 risk assessment processes. When corrective actions require new controls, the Statement of Applicability may need updating.

The NIST AI Risk Management Framework emphasizes similar concepts through its “Govern” and “Manage” functions. Organizations using both frameworks will find natural integration points between NIST’s continuous monitoring guidance and ISO 42001’s improvement requirements.

Practical Order of Operations

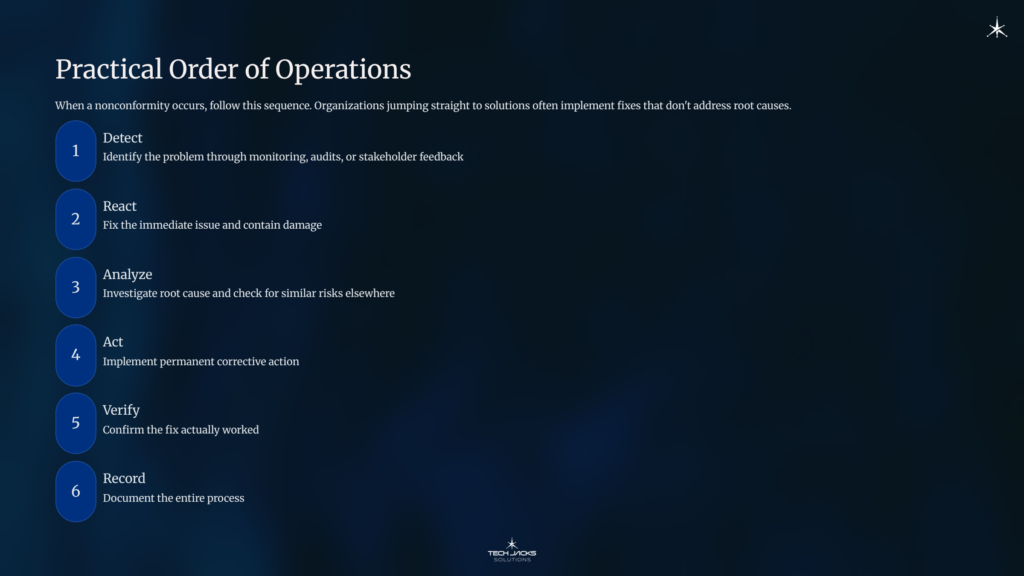

When a nonconformity occurs:

- Detect: Identify the problem through monitoring, audits, or stakeholder feedback

- React: Fix the immediate issue and contain damage

- Analyze: Investigate root cause and check for similar risks elsewhere

- Act: Implement permanent corrective action

- Verify: Confirm the fix actually worked

- Record: Document the entire process

The sequence matters. Organizations jumping straight to solutions often implement fixes that don’t address root causes.
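If you track nonconformities in a tool rather than on paper, the ordering can be enforced instead of merely recommended. The following Python sketch is one hypothetical way to do that; the `Stage` and `NonconformityWorkflow` names are invented for this example, and the stages simply mirror the six steps listed above.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    DETECT = 1
    REACT = 2
    ANALYZE = 3
    ACT = 4
    VERIFY = 5
    RECORD = 6


class NonconformityWorkflow:
    """Tracks one nonconformity and refuses out-of-order steps."""

    def __init__(self) -> None:
        self.completed: list[Stage] = []

    def next_stage(self) -> Optional[Stage]:
        """Return the stage that must happen next, or None when finished."""
        stages = list(Stage)  # Enum iteration follows definition order
        if len(self.completed) < len(stages):
            return stages[len(self.completed)]
        return None

    def complete(self, stage: Stage) -> None:
        """Mark a stage done, but only if it is the expected next one."""
        expected = self.next_stage()
        if stage is not expected:
            wanted = expected.name if expected else "nothing (workflow finished)"
            raise ValueError(
                f"cannot complete {stage.name}; next stage is {wanted}"
            )
        self.completed.append(stage)
```

With this in place, a call such as `complete(Stage.ACT)` made right after detection raises a `ValueError`, which is exactly the jump-to-a-solution shortcut that skips the reaction and root cause steps.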

ISO 42001 Clause 10 FAQ

How does Clause 10 differ from Clause 9?

Clause 9 checks whether your system works. Clause 10 acts on what you learned. Audits generate findings. Improvement requirements ensure those findings drive actual changes.

What triggers a nonconformity investigation?

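Any failure to meet a requirement, whether it comes from the standard, your policies, regulations, or stakeholder expectations you have committed to. Many organizations also choose to investigate near-misses that could have caused failures, even though no requirement was actually missed.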

Who owns nonconformity management?

The standard doesn’t prescribe specific roles. Organizations typically assign investigation responsibility to process owners, with corrective action approval at management level. Document your approach in governance procedures.

Standards and Resources

Official Standards:

- ISO/IEC 42001:2023 – AI management systems standard

- ISO/IEC 22989:2022 – AI concepts and terminology

Related Frameworks:

- NIST AI Risk Management Framework – US framework for AI risk management

- EU AI Act (Regulation 2024/1689) – EU regulatory framework

Your Next Steps

This Week:

- ☐ Review your nonconformity management process

- ☐ Verify corrective action follow-up happens consistently

This Month:

- ☐ Establish or refine your nonconformity register

- ☐ Define proportionality criteria for corrective responses

- ☐ Schedule management review of improvement trends

Improvement isn’t optional. For organizations serious about AI governance, Clause 10 transforms compliance from static achievement into evolving capability. That evolution determines whether your certificate survives its first surveillance audit.