Use Amazon Quick Suite custom action connectors to upload text files to Google Drive using OpenAPI specification (Artificial Intelligence)

In this post, we demonstrate how to build a secure file upload solution by integrating Google Drive with Amazon Quick Suite custom connectors using Amazon API Gateway and AWS Lambda.

AI agents in enterprises: Best practices with Amazon Bedrock AgentCore (Artificial Intelligence)

This post explores nine essential best practices for building enterprise AI agents using Amazon Bedrock AgentCore. Amazon Bedrock AgentCore is an agentic platform that provides the services you need to create, deploy, and manage AI agents at scale. In this post, we cover everything from initial scoping to organizational scaling, with practical guidance that you can apply immediately.

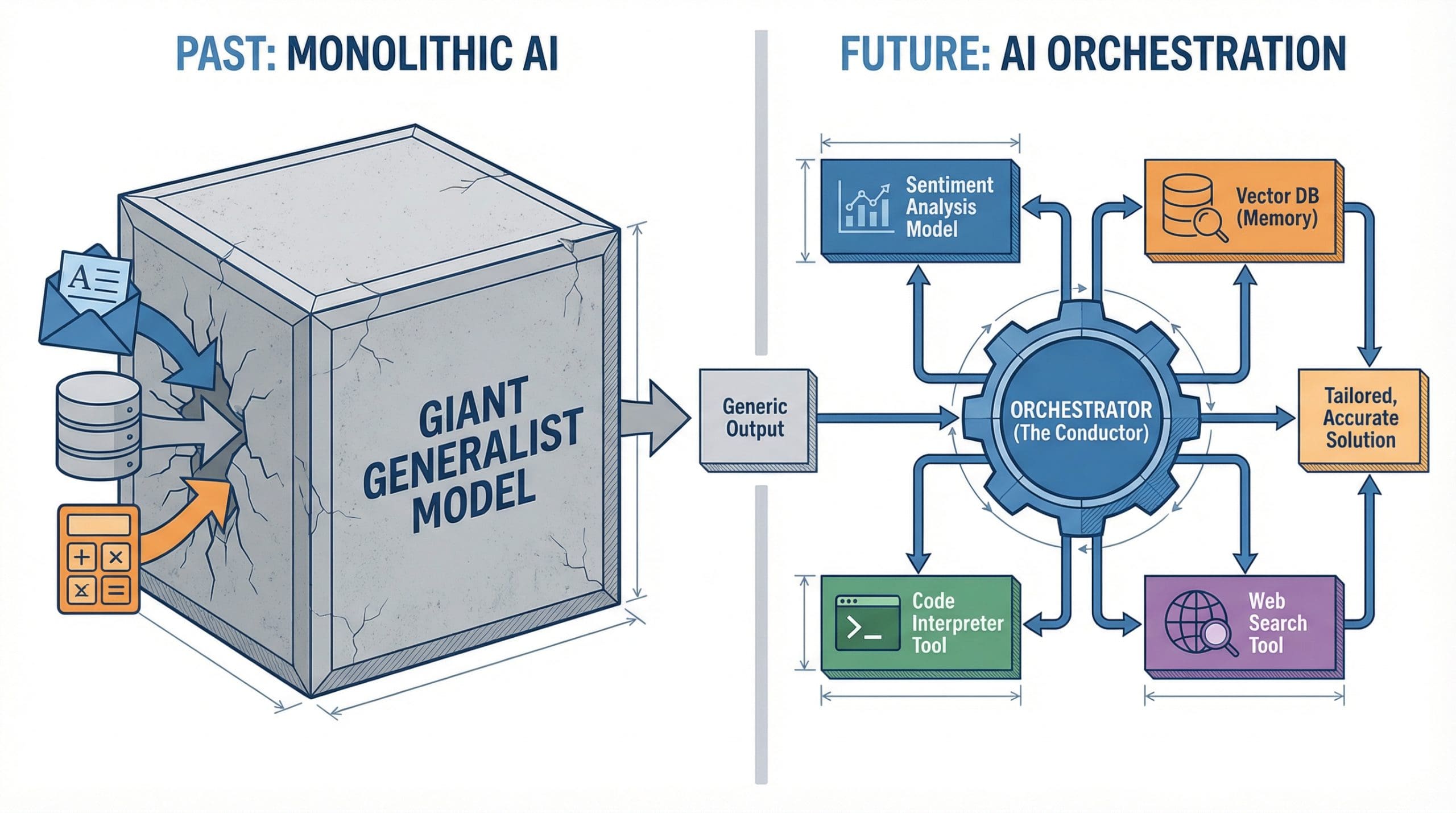

Beyond Giant Models: Why AI Orchestration Is the New Architecture (KDnuggets)

AI orchestration coordinates specialized models and tools into systems greater than the sum of their parts.

Routing in a Sparse Graph: a Distributed Q-Learning Approach (Towards Data Science)

Distributed agents need only decide one move ahead.

The post Routing in a Sparse Graph: a Distributed Q-Learning Approach appeared first on Towards Data Science.

Agentic AI for healthcare data analysis with Amazon SageMaker Data Agent (Artificial Intelligence)

On November 21, 2025, Amazon SageMaker introduced a built-in data agent within Amazon SageMaker Unified Studio that transforms large-scale data analysis. In this post, we demonstrate, through a detailed case study of an epidemiologist conducting clinical cohort analysis, how SageMaker Data Agent can help reduce weeks of data preparation to days and days of analysis development to hours, ultimately accelerating the path from clinical questions to research conclusions.

Step Finance announced that it lost $40 million worth of digital assets after hackers compromised devices belonging to the company’s team of executives. […]

The United Kingdom’s data protection authority launched a formal investigation into X and its Irish subsidiary over reports that the Grok AI assistant was used to generate nonconsensual sexual images. […]

YOLOv2 & YOLO9000 Paper Walkthrough: Better, Faster, Stronger (Towards Data Science)

From YOLOv1 to YOLOv2: prior box, k-means, Darknet-19, passthrough layer, and more

The post YOLOv2 & YOLO9000 Paper Walkthrough: Better, Faster, Stronger appeared first on Towards Data Science.

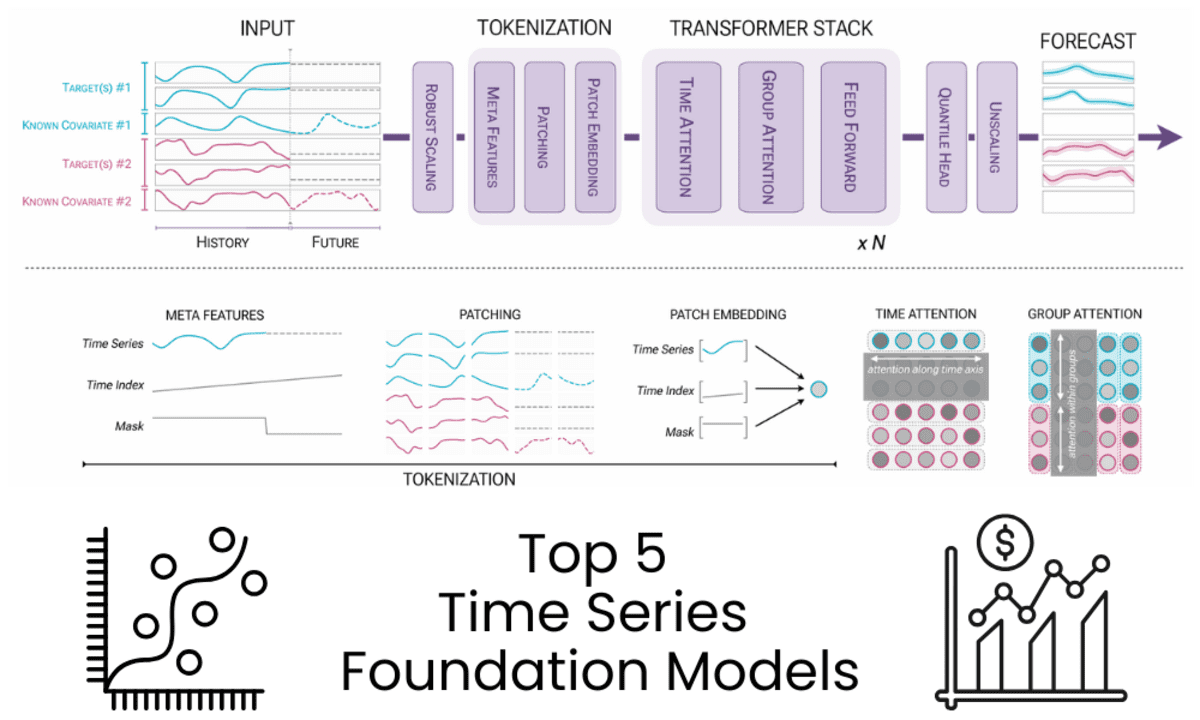

5 Time Series Foundation Models You Are Missing Out On (KDnuggets)

Five widely adopted time series foundation models delivering accurate zero-shot forecasting across industries and time horizons.

Creating a Data Pipeline to Monitor Local Crime Trends (Towards Data Science)

A walkthrough of creating an ETL pipeline to extract local crime data and visualize it in Metabase.

The post Creating a Data Pipeline to Monitor Local Crime Trends appeared first on Towards Data Science.
