PerfDojo: Automated ML Library Generation for Heterogeneous Architectures

cs.AI updates on arXiv.org arXiv:2511.03586v1 Announce Type: cross

Abstract: The increasing complexity of machine learning models and the proliferation of diverse hardware architectures (CPUs, GPUs, accelerators) make achieving optimal performance a significant challenge. Heterogeneity in instruction sets, specialized kernel requirements for different data types and model features (e.g., sparsity, quantization), and architecture-specific optimizations complicate performance tuning. Manual optimization is resource-intensive, while existing automatic approaches often rely on complex hardware-specific heuristics and uninterpretable intermediate representations, hindering performance portability. We introduce PerfLLM, a novel automatic optimization methodology leveraging Large Language Models (LLMs) and Reinforcement Learning (RL). Central to this is PerfDojo, an environment framing optimization as an RL game using a human-readable, mathematically-inspired code representation that guarantees semantic validity through transformations. This allows effective optimization without prior hardware knowledge, facilitating both human analysis and RL agent training. We demonstrate PerfLLM’s ability to achieve significant performance gains across diverse CPU (x86, Arm, RISC-V) and GPU architectures.


When Generative Artificial Intelligence meets Extended Reality: A Systematic Review

cs.AI updates on arXiv.org arXiv:2511.03282v1 Announce Type: cross

Abstract: With the continuous advancement of technology, the application of generative artificial intelligence (AI) in various fields is gradually demonstrating great potential, particularly when combined with Extended Reality (XR), creating unprecedented possibilities. This survey article systematically reviews the applications of generative AI in XR, covering as much relevant literature as possible from 2023 to 2025. The application areas of generative AI in XR and its key technology implementations are summarised through PRISMA screening and analysis of the final 26 articles. The survey highlights existing articles from the last three years related to how XR utilises generative AI, providing insights into current trends and research gaps. We also explore potential opportunities for future research to further empower XR through generative AI, providing guidance and information for future generative XR research.


Efficient Neural Networks with Discrete Cosine Transform Activations

cs.AI updates on arXiv.org arXiv:2511.03531v1 Announce Type: cross

Abstract: In this paper, we extend our previous work on the Expressive Neural Network (ENN), a multilayer perceptron with adaptive activation functions parametrized using the Discrete Cosine Transform (DCT). Building upon previous work that demonstrated the strong expressiveness of ENNs with compact architectures, we now emphasize their efficiency, interpretability and pruning capabilities. The DCT-based parameterization provides a structured and decorrelated representation that reveals the functional role of each neuron and allows direct identification of redundant components. Leveraging this property, we propose an efficient pruning strategy that removes unnecessary DCT coefficients with negligible or no loss in performance. Experimental results across classification and implicit neural representation tasks confirm that ENNs achieve state-of-the-art accuracy while maintaining a low number of parameters. Furthermore, up to 40% of the activation coefficients can be safely pruned, thanks to the orthogonality and bounded nature of the DCT basis. Overall, these findings demonstrate that the ENN framework offers a principled integration of signal processing concepts into neural network design, achieving a balanced trade-off between expressiveness, compactness, and interpretability.


Whisper Leak: a side-channel attack on Large Language Models

cs.AI updates on arXiv.org arXiv:2511.03675v1 Announce Type: cross

Abstract: Large Language Models (LLMs) are increasingly deployed in sensitive domains including healthcare, legal services, and confidential communications, where privacy is paramount. This paper introduces Whisper Leak, a side-channel attack that infers user prompt topics from encrypted LLM traffic by analyzing packet size and timing patterns in streaming responses. Despite TLS encryption protecting content, these metadata patterns leak sufficient information to enable topic classification. We demonstrate the attack across 28 popular LLMs from major providers, achieving near-perfect classification (often >98% AUPRC) and high precision even at extreme class imbalance (10,000:1 noise-to-target ratio). For many models, we achieve 100% precision in identifying sensitive topics like “money laundering” while recovering 5-20% of target conversations. This industry-wide vulnerability poses significant risks for users under network surveillance by ISPs, governments, or local adversaries. We evaluate three mitigation strategies – random padding, token batching, and packet injection – finding that while each reduces attack effectiveness, none provides complete protection. Through responsible disclosure, we have collaborated with providers to implement initial countermeasures. Our findings underscore the need for LLM providers to address metadata leakage as AI systems handle increasingly sensitive information.
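Of the three mitigations the abstract names, random padding is the simplest to illustrate. The following is only a minimal sketch under stated assumptions, not the paper's or any provider's implementation: the framing (a 2-byte length prefix), the `MAX_PAD` bound, and the function names `pad_chunk` and `unpad_chunk` are all hypothetical. It shows how a streamed chunk's on-the-wire size can be decoupled from its token length. As the abstract notes, this kind of measure reduces attack effectiveness but does not provide complete protection.

```python
import secrets
import struct

MAX_PAD = 64  # hypothetical upper bound on random padding bytes per chunk


def pad_chunk(payload: bytes, max_pad: int = MAX_PAD) -> bytes:
    """Frame a streamed chunk as: 2-byte big-endian length + payload + random pad.

    The pad length is drawn uniformly from 0..max_pad, so the frame size no
    longer maps one-to-one onto the payload size. Payloads must be shorter
    than 65536 bytes to fit the 2-byte length prefix.
    """
    pad_len = secrets.randbelow(max_pad + 1)
    header = struct.pack(">H", len(payload))
    return header + payload + secrets.token_bytes(pad_len)


def unpad_chunk(frame: bytes) -> bytes:
    """Recover the original payload by reading the length prefix."""
    (length,) = struct.unpack(">H", frame[:2])
    return frame[2:2 + length]
```

A receiver would call `unpad_chunk` on each frame after decryption. Timing patterns are untouched by this sketch, which is one reason padding alone leaves part of the side channel open.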


From Measurement to Expertise: Empathetic Expert Adapters for Context-Based Empathy in Conversational AI Agents

cs.AI updates on arXiv.org arXiv:2511.03143v1 Announce Type: cross

Abstract: Empathy is a critical factor in fostering positive user experiences in conversational AI. While models can display empathy, it is often generic rather than tailored to specific tasks and contexts. In this work, we introduce a novel framework for developing and evaluating context-specific empathetic large language models (LLMs). We first analyze a real-world conversational dataset consisting of 672 multi-turn conversations across 8 tasks, revealing significant differences in terms of expected and experienced empathy before and after the conversations, respectively. To help minimize this gap, we develop a synthetic multi-turn conversational generation pipeline and steer responses toward our defined empathy patterns based on the context that more closely matches users’ expectations. We then train empathetic expert adapters for context-specific empathy that specialize in varying empathy levels based on the recognized task. Our empirical results demonstrate a significant gap reduction of 72.66% between perceived and desired empathy with scores increasing by an average factor of 2.43 as measured by our metrics and reward models. Additionally, our trained empathetic expert adapters demonstrate superior effectiveness in preserving empathy patterns throughout conversation turns, outperforming system prompts, which tend to dramatically diminish in impact as conversations lengthen.


GraphCliff: Short-Long Range Gating for Subtle Differences but Critical Changes

cs.AI updates on arXiv.org arXiv:2511.03170v1 Announce Type: cross

Abstract: Quantitative structure-activity relationship modeling assumes a smooth relationship between molecular structure and biological activity. However, activity cliffs, defined as pairs of structurally similar compounds with large potency differences, break this continuity. Recent benchmarks targeting activity cliffs have revealed that classical machine learning models with extended connectivity fingerprints outperform graph neural networks. Our analysis shows that graph embeddings fail to adequately separate structurally similar molecules in the embedding space, making it difficult to distinguish between structurally similar but functionally different molecules. Despite this limitation, molecular graph structures are inherently expressive and attractive, as they preserve molecular topology. To preserve the structural representation of molecules as graphs, we propose a new model, GraphCliff, which integrates short- and long-range information through a gating mechanism. Experimental results demonstrate that GraphCliff consistently improves performance on both non-cliff and cliff compounds. Furthermore, layer-wise node embedding analyses reveal reduced over-smoothing and enhanced discriminative power relative to strong baseline graph models.


Beyond Numbers: How to Humanize Your Data & Analysis

Towards Data Science

The scintillating grid optical illusion is a perfect metaphor for how raw data can mislead us, causing us to see false trends. To escape the “data-rich, action-poor” paradox, organizations need data humanization.

This approach focuses on turning abstract metrics (the what) into clear, actionable stories (the why). It requires new roles like “Data Artisans,” a core competency in “Data Storytelling,” and a focus on proving the financial impact (ROI) of these clearer insights.

The post Beyond Numbers: How to Humanize Your Data & Analysis appeared first on Towards Data Science.


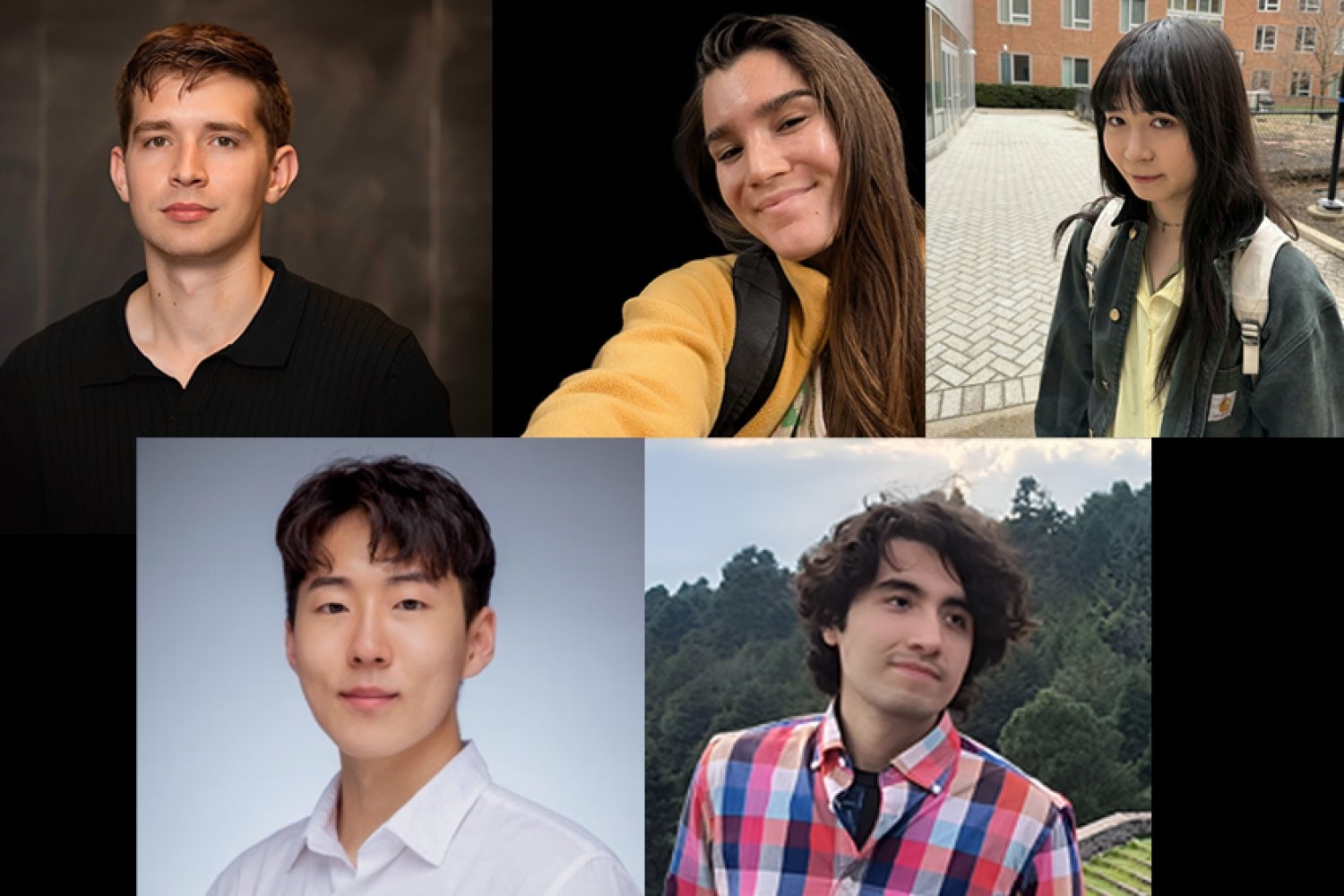

Charting the future of AI, from safer answers to faster thinking

MIT News – Machine learning

MIT PhD students who interned with the MIT-IBM Watson AI Lab Summer Program are pushing AI tools to be more flexible, efficient, and grounded in truth.


TDS Newsletter: The Theory and Practice of Using AI Effectively

Towards Data Science

When we encounter a new technology (say, LLM applications), some of us tend to jump right in, sleeves rolled up, impatient to start tinkering. Others prefer a more cautious approach: reading a few relevant research papers, or browsing through a bunch of blog posts, with the goal of understanding the context in which these tools

The post TDS Newsletter: The Theory and Practice of Using AI Effectively appeared first on Towards Data Science.


Free AI and Data Courses with 365 Data Science - 100% Unlimited Access until Nov 21

KDnuggets

Begin your AI and data journey for free at 365 Data Science.
