AI Hype: Don’t Overestimate the Impact of AI
Towards Data Science

Targeting moonshots instead of trolleys

The post AI Hype: Don’t Overestimate the Impact of AI appeared first on Towards Data Science.

How to Build Agents with GPT-5
Towards Data Science

Learn how to use GPT-5 as a powerful AI Agent on your data.

The post How to Build Agents with GPT-5 appeared first on Towards Data Science.

Meta AI Releases Omnilingual ASR: A Suite of Open-Source Multilingual Speech Recognition Models for 1600+ Languages
MarkTechPost

How do you build a single speech recognition system that can understand thousands of languages, including many that never had working ASR (automatic speech recognition) models before? Meta AI has released Omnilingual ASR, an open-source speech recognition suite that scales to more than 1,600 languages and can be extended to unseen languages with only

The post Meta AI Releases Omnilingual ASR: A Suite of Open-Source Multilingual Speech Recognition Models for 1600+ Languages appeared first on MarkTechPost.

Chinese AI startup Moonshot outperforms GPT-5 and Claude Sonnet 4.5: What you need to know
AI News

A Chinese AI startup, Moonshot, has disrupted expectations in artificial intelligence development after its Kimi K2 Thinking model surpassed OpenAI’s GPT-5 and Anthropic’s Claude Sonnet 4.5 across multiple performance benchmarks, sparking renewed debate about whether America’s AI dominance is being challenged by cost-efficient Chinese innovation. Beijing-based Moonshot AI, valued at US$3.3 billion and backed by

The post Chinese AI startup Moonshot outperforms GPT-5 and Claude Sonnet 4.5: What you need to know appeared first on AI News.

A Coding Implementation to Build and Train Advanced Architectures with Residual Connections, Self-Attention, and Adaptive Optimization Using JAX, Flax, and Optax
MarkTechPost

In this tutorial, we explore how to build and train an advanced neural network using JAX, Flax, and Optax in an efficient and modular way. We begin by designing a deep architecture that integrates residual connections and self-attention mechanisms for expressive feature learning. As we progress, we implement sophisticated optimization strategies with learning rate scheduling,

The post A Coding Implementation to Build and Train Advanced Architectures with Residual Connections, Self-Attention, and Adaptive Optimization Using JAX, Flax, and Optax appeared first on MarkTechPost.

Rethinking Metrics and Diffusion Architecture for 3D Point Cloud Generation
cs.AI updates on arXiv.org

arXiv:2511.05308v2 Announce Type: cross

Abstract: As 3D point clouds become a cornerstone of modern technology, the need for sophisticated generative models and reliable evaluation metrics has grown exponentially. In this work, we first show that some commonly used metrics for evaluating generated point clouds, particularly those based on Chamfer Distance (CD), lack robustness against defects and fail to capture geometric fidelity and local shape consistency when used as quality indicators. We further show that aligning samples prior to distance calculation and replacing CD with Density-Aware Chamfer Distance (DCD) are simple yet essential steps to ensure the consistency and robustness of point cloud generative model evaluation metrics. While existing metrics primarily focus on directly comparing 3D Euclidean coordinates, we present a novel metric, named Surface Normal Concordance (SNC), which approximates surface similarity by comparing estimated point normals. This new metric, when combined with traditional ones, provides a more comprehensive evaluation of the quality of generated samples. Finally, leveraging recent advancements in transformer-based models for point cloud analysis, such as serialized patch attention, we propose a new architecture for generating high-fidelity 3D structures, the Diffusion Point Transformer. We perform extensive experiments and comparisons on the ShapeNet dataset, showing that our model outperforms previous solutions, particularly in terms of the quality of generated point clouds, achieving a new state of the art. Code available at https://github.com/matteo-bastico/DiffusionPointTransformer.
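
For readers unfamiliar with the metric this abstract critiques, the following is a minimal sketch of one common Chamfer Distance convention: the mean squared nearest-neighbor distance from each set to the other, summed over both directions. It is not taken from the paper or its repository. The function name `chamfer_distance` and the toy points are illustrative assumptions, and the paper's exact normalization may differ.

```python
def chamfer_distance(a, b):
    """Symmetric Chamfer Distance between two non-empty point sets.

    Assumed convention (one of several in use): squared Euclidean
    distances, averaged per direction, then summed.
    """
    def sq_dist(p, q):
        # Squared Euclidean distance between two points of equal dimension.
        return sum((pi - qi) ** 2 for pi, qi in zip(p, q))

    def one_sided(src, dst):
        # Mean over src of the squared distance to the nearest point in dst.
        return sum(min(sq_dist(p, q) for q in dst) for p in src) / len(src)

    return one_sided(a, b) + one_sided(b, a)


# Hypothetical toy data: `generated` is `reference` plus one stray point.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
generated = reference + [(5.0, 0.0, 0.0)]

print(chamfer_distance(reference, reference))
print(chamfer_distance(reference, generated))
```

In this toy case the first score is 0 and the second is 16/3 (about 5.33), all of it contributed by the single stray point. The score depends only on nearest-neighbor coordinate distances, which is the property the abstract's proposed alternatives (DCD and SNC) are meant to complement.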

Data Culture Is the Symptom, Not the Solution
Towards Data Science

The hidden reason your data investments fail

The post Data Culture Is the Symptom, Not the Solution appeared first on Towards Data Science.

Moonshot AI Releases Kosong: The LLM Abstraction Layer that Powers Kimi CLI
MarkTechPost

Modern agentic applications rarely talk to a single model or a single tool, so how do you keep that stack maintainable when providers, models, and tools keep changing every few weeks? Moonshot AI’s Kosong targets this problem as an LLM abstraction layer for agent applications. Kosong unifies message structures, asynchronous tool orchestration and pluggable chat

The post Moonshot AI Releases Kosong: The LLM Abstraction Layer that Powers Kimi CLI appeared first on MarkTechPost.

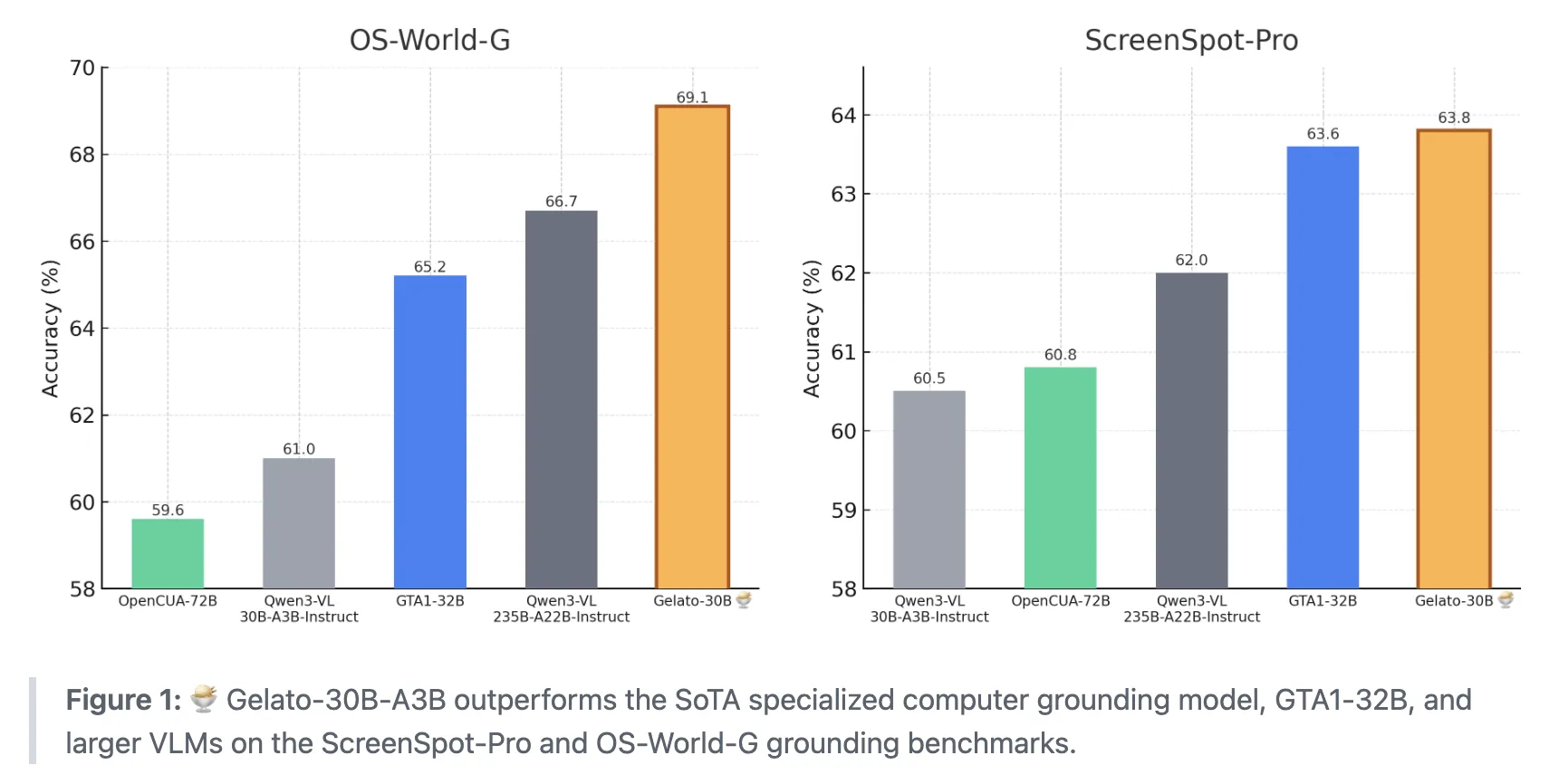

Gelato-30B-A3B: A State-of-the-Art Grounding Model for GUI Computer-Use Tasks, Surpassing Computer Grounding Models like GTA1-32B
MarkTechPost

How do we teach AI agents to reliably find and click the exact on-screen element we mean when we give them a simple instruction? A team of researchers from ML Foundations has introduced Gelato-30B-A3B, a state-of-the-art grounding model for graphical user interfaces that is designed to plug into computer-use agents

The post Gelato-30B-A3B: A State-of-the-Art Grounding Model for GUI Computer-Use Tasks, Surpassing Computer Grounding Models like GTA1-32B appeared first on MarkTechPost.

Fine-tune VLMs for multipage document-to-JSON with SageMaker AI and SWIFT
Artificial Intelligence

In this post, we demonstrate that fine-tuning VLMs provides a powerful and flexible approach to automate and significantly enhance document understanding capabilities. We also demonstrate that using focused fine-tuning allows smaller, multi-modal models to compete effectively with much larger counterparts (98% accuracy with Qwen2.5 VL 3B).
