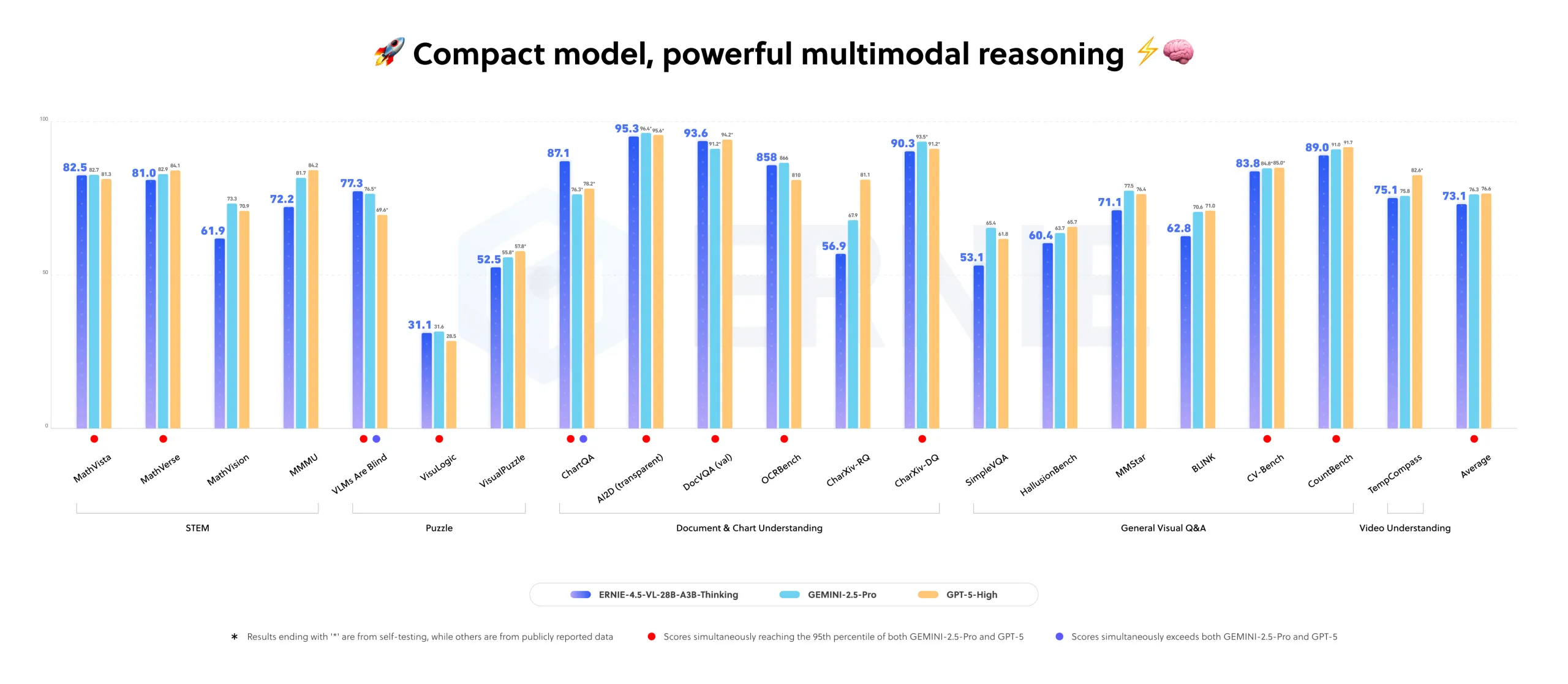

Baidu ERNIE multimodal AI beats GPT and Gemini in benchmarks (AI News)

Baidu’s latest ERNIE model, a super-efficient multimodal AI, is beating GPT and Gemini on key benchmarks and targets enterprise data often ignored by text-focused models. For many businesses, valuable insights are locked in engineering schematics, factory-floor video feeds, medical scans, and logistics dashboards. Baidu’s new model, ERNIE-4.5-VL-28B-A3B-Thinking, is designed to fill this gap. What’s interesting

The post Baidu ERNIE multimodal AI beats GPT and Gemini in benchmarks appeared first on AI News.


The Benefits of an “Everything” Notebook in NotebookLM (KDnuggets)

The goal of an “everything” notebook in NotebookLM is having your entire professional memory instantly accessible and understandable.


Building ReAct Agents with LangGraph: A Beginner’s Guide (MachineLearningMastery.com)

Neuro drives national retail wins with ChatGPT Business (OpenAI News)

Neuro uses ChatGPT Business to scale nationwide with fewer than seventy employees. From drafting contracts to uncovering insights in customer data, the team saves time, cuts costs, and turns ideas into growth.


Procedural Knowledge Improves Agentic LLM Workflows (cs.AI updates on arXiv.org)

arXiv:2511.07568v1 Announce Type: new

Abstract: Large language models (LLMs) often struggle when performing agentic tasks without substantial tool support, prompt engineering, or fine-tuning. Despite research showing that domain-dependent, procedural knowledge can dramatically increase planning efficiency, little work evaluates its potential for improving LLM performance on agentic tasks that may require implicit planning. We formalize, implement, and evaluate an agentic LLM workflow that leverages procedural knowledge in the form of a hierarchical task network (HTN). Empirical results of our implementation show that hand-coded HTNs can dramatically improve LLM performance on agentic tasks, and using HTNs can boost a 20b or 70b parameter LLM to outperform a much larger 120b parameter LLM baseline. Furthermore, LLM-created HTNs improve overall performance, though less so. The results suggest that leveraging expertise–from humans, documents, or LLMs–to curate procedural knowledge will become another important tool for improving LLM workflows.
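For readers unfamiliar with hierarchical task networks, the idea can be sketched in a few lines of Python. This is a toy illustration only, not the paper's implementation: the task names, the `METHODS` table, and the `decompose` helper are all hypothetical, and a real HTN would typically offer several methods per task, guarded by preconditions.

```python
from typing import Dict, List

# Hypothetical toy domain (not from the paper).
# Each compound task maps to one ordered decomposition into subtasks.
METHODS: Dict[str, List[str]] = {
    "make_tea": ["boil_water", "steep_tea"],
    "boil_water": ["fill_kettle", "heat_kettle"],
}

# Primitive tasks cannot be decomposed further; they are executed directly.
PRIMITIVES = {"fill_kettle", "heat_kettle", "steep_tea"}


def decompose(task: str) -> List[str]:
    """Expand a task depth-first into an ordered list of primitive tasks."""
    if task in PRIMITIVES:
        return [task]
    if task not in METHODS:
        raise KeyError(f"no method for task {task!r}")
    plan: List[str] = []
    for subtask in METHODS[task]:
        plan.extend(decompose(subtask))
    return plan


plan = decompose("make_tea")
print(plan)
```

In an agentic workflow along the lines the abstract describes, each primitive step could plausibly be handed to the LLM as a narrow, well-scoped instruction instead of asking the model to plan the whole task implicitly; that mapping is an assumption here, not a detail taken from the paper.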


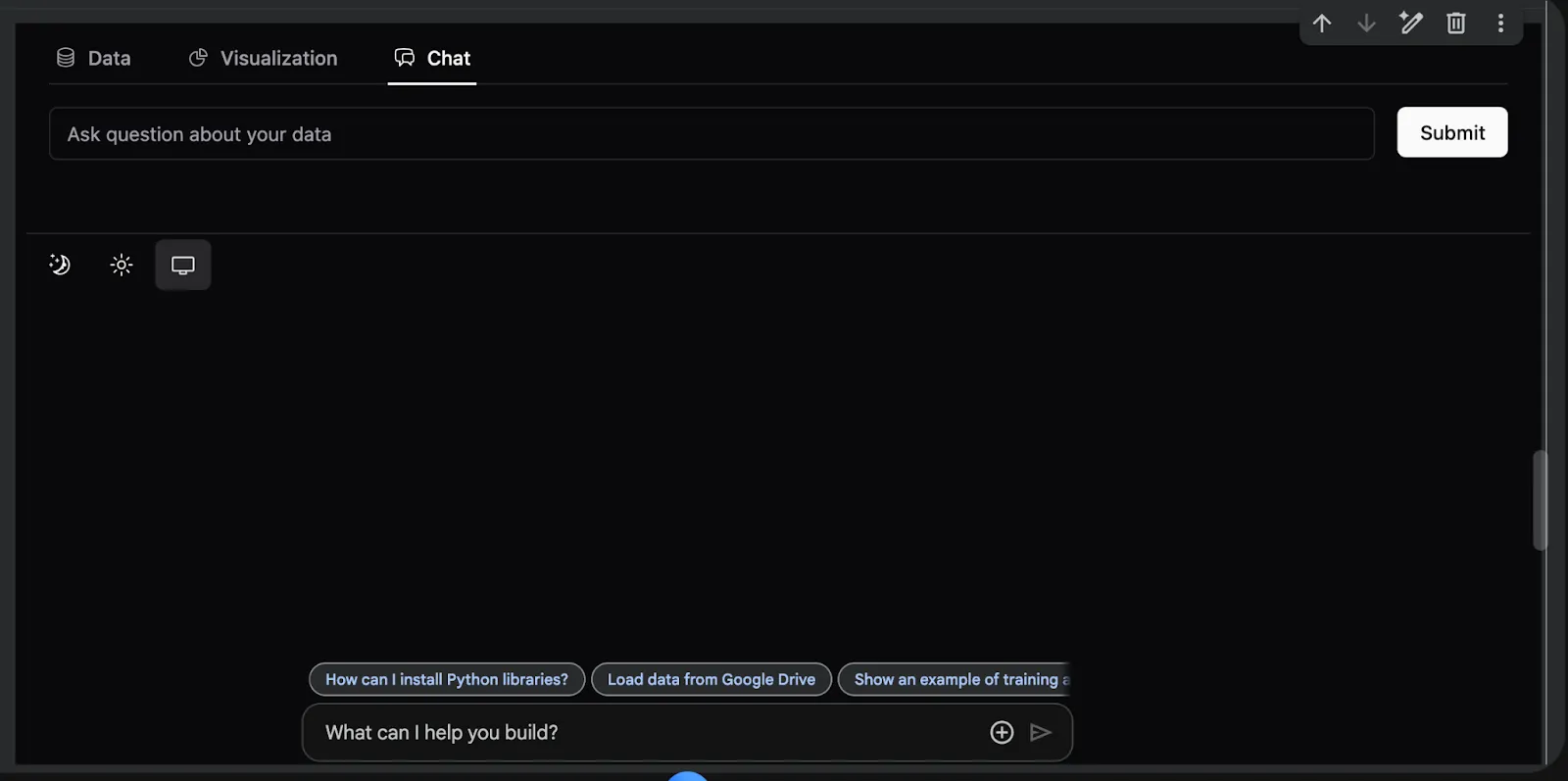

How to Build an End-to-End Interactive Analytics Dashboard Using PyGWalker Features for Insightful Data Exploration (MarkTechPost)

In this tutorial, we explore the advanced capabilities of PyGWalker, a powerful tool for visual data analysis that integrates seamlessly with pandas. We begin by generating a realistic e-commerce dataset enriched with time, demographic, and marketing features to mimic real-world business data. We then prepare multiple analytical views, including daily sales, category performance, and customer

The post How to Build an End-to-End Interactive Analytics Dashboard Using PyGWalker Features for Insightful Data Exploration appeared first on MarkTechPost.


Baidu Releases ERNIE-4.5-VL-28B-A3B-Thinking: An Open-Source and Compact Multimodal Reasoning Model Under the ERNIE-4.5 Family (MarkTechPost)

How can we get large model level multimodal reasoning for documents, charts and videos while running only a 3B class model in production? Baidu has added a new model to the ERNIE-4.5 open source family. ERNIE-4.5-VL-28B-A3B-Thinking is a vision language model that focuses on document, chart and video understanding with a small active parameter budget.

The post Baidu Releases ERNIE-4.5-VL-28B-A3B-Thinking: An Open-Source and Compact Multimodal Reasoning Model Under the ERNIE-4.5 Family appeared first on MarkTechPost.


Beyond Fact Retrieval: Episodic Memory for RAG with Generative Semantic Workspaces (cs.AI updates on arXiv.org)

arXiv:2511.07587v1 Announce Type: new

Abstract: Large Language Models (LLMs) face fundamental challenges in long-context reasoning: many documents exceed their finite context windows, while performance on texts that do fit degrades with sequence length, necessitating their augmentation with external memory frameworks. Current solutions, which have evolved from retrieval using semantic embeddings to more sophisticated structured knowledge-graph representations for improved sense-making and associativity, are tailored for fact-based retrieval and fail to build the space-time-anchored narrative representations required for tracking entities through episodic events. To bridge this gap, we propose the Generative Semantic Workspace (GSW), a neuro-inspired generative memory framework that builds structured, interpretable representations of evolving situations, enabling LLMs to reason over evolving roles, actions, and spatiotemporal contexts. Our framework comprises an Operator, which maps incoming observations to intermediate semantic structures, and a Reconciler, which integrates these into a persistent workspace that enforces temporal, spatial, and logical coherence. On the Episodic Memory Benchmark (EpBench) [huet_episodic_2025], comprising corpora ranging from 100k to 1M tokens in length, GSW outperforms existing RAG-based baselines by up to 20%. Furthermore, GSW is highly efficient, reducing query-time context tokens by 51% compared to the next most token-efficient baseline, reducing inference-time costs considerably. More broadly, GSW offers a concrete blueprint for endowing LLMs with human-like episodic memory, paving the way for more capable agents that can reason over long horizons.


AI-Driven Contribution Evaluation and Conflict Resolution: A Framework & Design for Group Workload Investigation (cs.AI updates on arXiv.org)

arXiv:2511.07667v1 Announce Type: new

Abstract: The equitable assessment of individual contribution in teams remains a persistent challenge, where conflict and disparity in workload can result in unfair performance evaluation, often requiring manual intervention – a costly and challenging process. We survey existing tool features and identify a gap in conflict resolution methods and AI integration. To address this, we propose a framework and implementation design for a novel AI-enhanced tool that assists in dispute investigation. The framework organises heterogeneous artefacts – submissions (code, text, media), communications (chat, email), coordination records (meeting logs, tasks), peer assessments, and contextual information – into three dimensions with nine benchmarks: Contribution, Interaction, and Role. Objective measures are normalised, aggregated per dimension, and paired with inequality measures (Gini index) to surface conflict markers. A Large Language Model (LLM) architecture performs validated and contextual analysis over these measures to generate interpretable and transparent advisory judgments. We argue for feasibility under current statutory and institutional policy, and outline practical analytics (sentimental, task fidelity, word/line count, etc.), bias safeguards, limitations, and practical challenges.
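The normalise, aggregate, and Gini-index step mentioned in the abstract can be illustrated with a small Python sketch. Everything below is an assumption made for illustration: the abstract does not say how measures are normalised or aggregated, so this version converts each measure to per-member shares and averages them, and the measure names and numbers are made up.

```python
from typing import Dict, List


def to_shares(values: List[float]) -> List[float]:
    """Normalise one measure so each member's value is a share of the team total."""
    total = sum(values)
    return [v / total if total else 0.0 for v in values]


def aggregate(measures: Dict[str, List[float]]) -> List[float]:
    """Average the per-measure shares to get one score per team member."""
    shares = [to_shares(v) for v in measures.values()]
    n_members = len(shares[0])
    return [sum(s[i] for s in shares) / len(shares) for i in range(n_members)]


def gini(values: List[float]) -> float:
    """Gini index of non-negative values: 0 means equal, (n - 1) / n is the maximum."""
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0
    ordered = sorted(values)
    # G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n, with i = 1..n over sorted x
    weighted = sum(i * x for i, x in enumerate(ordered, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


# Hypothetical two-person team with two illustrative measures.
team = {"commits": [10.0, 10.0], "words_written": [0.0, 100.0]}
scores = aggregate(team)
print(scores, gini(scores))
```

A higher Gini value on the aggregated scores would be one possible "conflict marker" in the sense the abstract describes; any threshold for flagging a team would need to be chosen separately.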


Google reveals its own version of Apple’s AI cloud (AI News)

Google has rolled out Private AI Compute, a new cloud-based processing system designed to bring the privacy of on-device AI to the cloud. The platform aims to give users faster, more capable AI experiences without compromising data security. It combines Google’s most advanced Gemini models with strict privacy safeguards, reflecting the company’s ongoing effort to

The post Google reveals its own version of Apple’s AI cloud appeared first on AI News.
