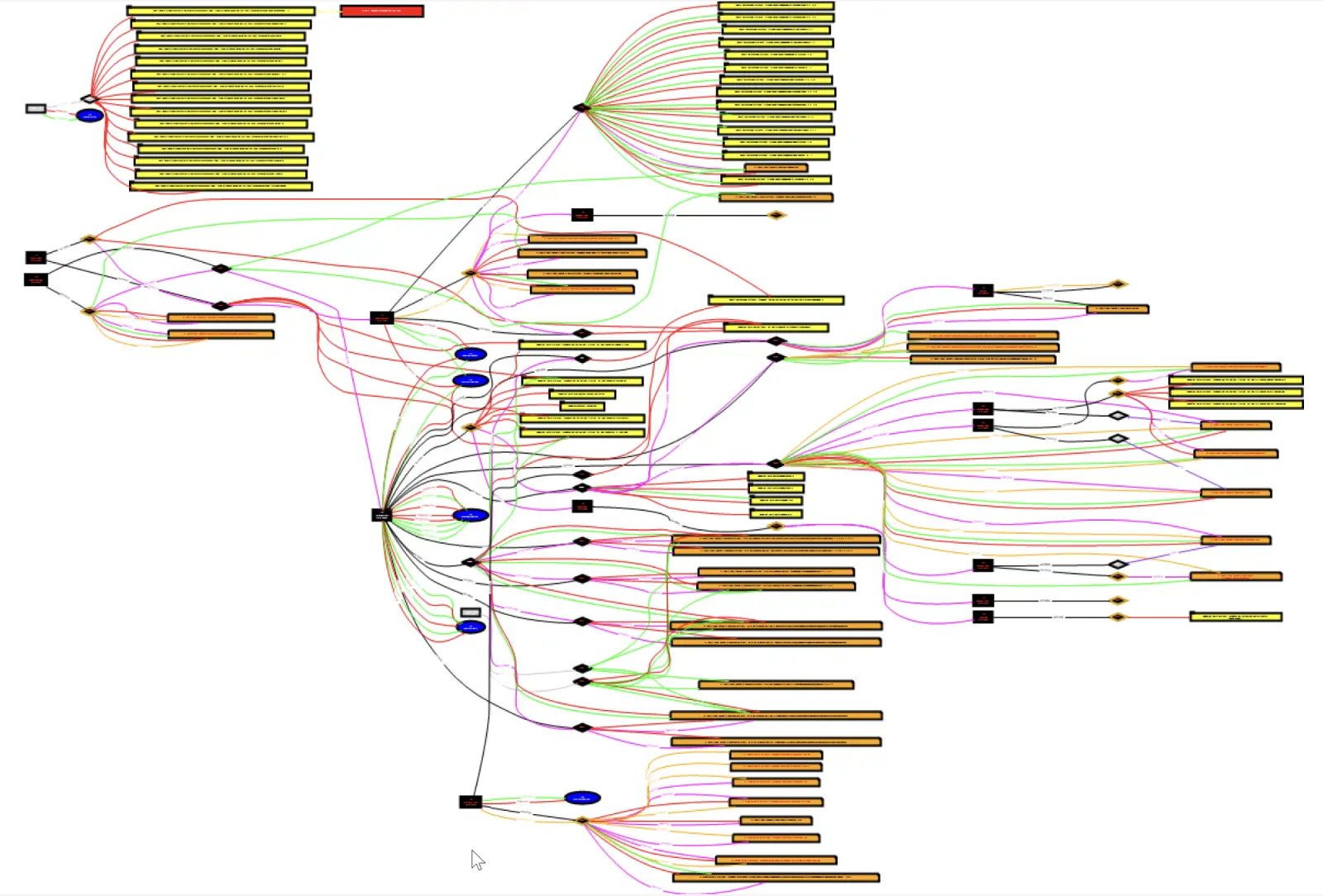

When I’m teaching FOR610[1], I always tell my students that reverse engineering does not only apply to “executable files” (read: PE or ELF files). Most of the time, the infection path involves many stages to defeat the security analyst or security controls. Here is an example that I found yesterday. An email was received […]

New data centre projects mark Anthropic’s biggest US expansion yet (AI News)

New US data centre projects in Texas and New York will receive $50 billion in new funding, part of a plan to grow US computing capacity for advanced AI work. The facilities, built with Fluidstack, are designed for Anthropic’s systems and will focus on power and efficiency needs that come with training and running large

The post New data centre projects mark Anthropic’s biggest US expansion yet appeared first on AI News.

How to Build a Fully Functional Custom GPT-style Conversational AI Locally Using Hugging Face Transformers (MarkTechPost)

In this tutorial, we build our own custom GPT-style chat system from scratch using a local Hugging Face model. We start by loading a lightweight instruction-tuned model that understands conversational prompts, then wrap it inside a structured chat framework that includes a system role, user memory, and assistant responses. We define how the agent interprets

The post How to Build a Fully Functional Custom GPT-style Conversational AI Locally Using Hugging Face Transformers appeared first on MarkTechPost.

Semantic-Consistent Bidirectional Contrastive Hashing for Noisy Multi-Label Cross-Modal Retrieval (cs.AI updates on arXiv.org)

arXiv:2511.07780v1 Announce Type: cross

Abstract: Cross-modal hashing (CMH) facilitates efficient retrieval across different modalities (e.g., image and text) by encoding data into compact binary representations. While recent methods have achieved remarkable performance, they often rely heavily on fully annotated datasets, which are costly and labor-intensive to obtain. In real-world scenarios, particularly in multi-label datasets, label noise is prevalent and severely degrades retrieval performance. Moreover, existing CMH approaches typically overlook the partial semantic overlaps inherent in multi-label data, limiting their robustness and generalization. To tackle these challenges, we propose a novel framework named Semantic-Consistent Bidirectional Contrastive Hashing (SCBCH). The framework comprises two complementary modules: (1) Cross-modal Semantic-Consistent Classification (CSCC), which leverages cross-modal semantic consistency to estimate sample reliability and reduce the impact of noisy labels; (2) Bidirectional Soft Contrastive Hashing (BSCH), which dynamically generates soft contrastive sample pairs based on multi-label semantic overlap, enabling adaptive contrastive learning between semantically similar and dissimilar samples across modalities. Extensive experiments on four widely-used cross-modal retrieval benchmarks validate the effectiveness and robustness of our method, consistently outperforming state-of-the-art approaches under noisy multi-label conditions.
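
The retrieval step the abstract refers to, comparing compact binary codes across modalities, can be illustrated with a minimal sketch. It does not reproduce SCBCH; it only shows Hamming-distance ranking. The 8-bit codes, item names, and the helpers `hamming_distance` and `retrieve` are hypothetical and chosen for illustration.

```python
def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two binary codes stored as ints."""
    return bin(a ^ b).count("1")


def retrieve(query_code: int, database: dict, top_k: int = 2) -> list:
    """Return the top_k item ids closest to the query in Hamming space.

    Ties are broken alphabetically by item id so the ranking is deterministic.
    """
    ranked = sorted(
        database,
        key=lambda item_id: (hamming_distance(query_code, database[item_id]), item_id),
    )
    return ranked[:top_k]


# Hypothetical 8-bit codes for three images; a real system learns these codes.
image_codes = {
    "img_cat": 0b10110010,
    "img_dog": 0b10110101,
    "img_car": 0b01001101,
}
text_query_code = 0b10110011  # hypothetical code produced from a text query

print(retrieve(text_query_code, image_codes))
```

Because the codes are plain bit strings, ranking needs only an XOR and a bit count per item, which is where the efficiency of hashing-based retrieval comes from.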

A Negotiation-Based Multi-Agent Reinforcement Learning Approach for Dynamic Scheduling of Reconfigurable Manufacturing Systems (cs.AI updates on arXiv.org)

arXiv:2511.07707v1 Announce Type: cross

Abstract: Reconfigurable manufacturing systems (RMS) are critical for future market adjustment given their rapid adaptation to fluctuations in consumer demands, the introduction of new technological advances, and disruptions in linked supply chain sections. The adjustable hard settings of such systems require a flexible soft planning mechanism that enables real-time production planning and scheduling amid the existing complexity and variability in their configuration settings. This study explores the application of multi-agent reinforcement learning (MARL) for dynamic scheduling in soft planning of the RMS settings. In the proposed framework, deep Q-network (DQN) agents trained in centralized training learn optimal job-machine assignments in real time while adapting to stochastic events such as machine breakdowns and reconfiguration delays. The model also incorporates a negotiation with an attention mechanism to enhance state representation and improve decision focus on critical system features. Key DQN enhancements including prioritized experience replay, n-step returns, double DQN and soft target update are used to stabilize and accelerate learning. Experiments conducted in a simulated RMS environment demonstrate that the proposed approach outperforms baseline heuristics in reducing makespan and tardiness while improving machine utilization. The reconfigurable manufacturing environment was extended to simulate realistic challenges, including machine failures and reconfiguration times. Experimental results show that while the enhanced DQN agent is effective in adapting to dynamic conditions, machine breakdowns increase variability in key performance metrics such as makespan, throughput, and total tardiness. The results confirm the advantages of applying the MARL mechanism for intelligent and adaptive scheduling in dynamic reconfigurable manufacturing environments.
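
Two of the DQN enhancements named in the abstract, n-step returns and soft target updates, have standard textbook forms that can be sketched in a few lines. This is not the authors' implementation; the function names, the flat parameter lists, and the example numbers are assumptions for illustration.

```python
def n_step_return(rewards, bootstrap_value, gamma):
    """Discounted sum of n rewards plus the discounted bootstrap value.

    G = r_0 + gamma*r_1 + ... + gamma^(n-1)*r_(n-1) + gamma^n * bootstrap_value
    """
    g = bootstrap_value
    # Fold from the last reward backwards so each step applies one discount.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def soft_update(target_params, online_params, tau):
    """Polyak averaging: target <- tau * online + (1 - tau) * target."""
    return [tau * o + (1.0 - tau) * t for t, o in zip(target_params, online_params)]


# Hypothetical numbers: three rewards of 1.0, then a bootstrapped Q-value of 10.0.
print(n_step_return([1.0, 1.0, 1.0], bootstrap_value=10.0, gamma=0.5))
# Hypothetical two-parameter networks, blended with tau = 0.5.
print(soft_update([0.0, 2.0], [1.0, 4.0], tau=0.5))
```

In a double DQN setup, the bootstrap value would typically be the target network's estimate for the action that the online network ranks highest in the state reached after n steps.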

DRAGON: Guard LLM Unlearning in Context via Negative Detection and Reasoning (cs.AI updates on arXiv.org)

arXiv:2511.05784v2 Announce Type: replace-cross

Abstract: Unlearning in Large Language Models (LLMs) is crucial for protecting private data and removing harmful knowledge. Most existing approaches rely on fine-tuning to balance unlearning efficiency with general language capabilities. However, these methods typically require training or access to retain data, which is often unavailable in real world scenarios. Although these methods can perform well when both forget and retain data are available, few works have demonstrated equivalent capability in more practical, data-limited scenarios. To overcome these limitations, we propose Detect-Reasoning Augmented GeneratiON (DRAGON), a systematic, reasoning-based framework that utilizes in-context chain-of-thought (CoT) instructions to guard deployed LLMs before inference. Instead of modifying the base model, DRAGON leverages the inherent instruction-following ability of LLMs and introduces a lightweight detection module to identify forget-worthy prompts without any retain data. These are then routed through a dedicated CoT guard model to enforce safe and accurate in-context intervention. To robustly evaluate unlearning performance, we introduce novel metrics for unlearning performance and the continual unlearning setting. Extensive experiments across three representative unlearning tasks validate the effectiveness of DRAGON, demonstrating its strong unlearning capability, scalability, and applicability in practical scenarios.

Revisiting NLI: Towards Cost-Effective and Human-Aligned Metrics for Evaluating LLMs in Question Answering (cs.AI updates on arXiv.org)

arXiv:2511.07659v1 Announce Type: cross

Abstract: Evaluating answers from state-of-the-art large language models (LLMs) is challenging: lexical metrics miss semantic nuances, whereas “LLM-as-Judge” scoring is computationally expensive. We re-evaluate a lightweight alternative — off-the-shelf Natural Language Inference (NLI) scoring augmented by a simple lexical-match flag and find that this decades-old technique matches GPT-4o’s accuracy (89.9%) on long-form QA, while requiring orders-of-magnitude fewer parameters. To test human alignment of these metrics rigorously, we introduce DIVER-QA, a new 3000-sample human-annotated benchmark spanning five QA datasets and five candidate LLMs. Our results highlight that inexpensive NLI-based evaluation remains competitive and offer DIVER-QA as an open resource for future metric research.

N-ReLU: Zero-Mean Stochastic Extension of ReLU (cs.AI updates on arXiv.org)

arXiv:2511.07559v1 Announce Type: cross

Abstract: Activation functions are fundamental for enabling nonlinear representations in deep neural networks. However, the standard rectified linear unit (ReLU) often suffers from inactive or “dead” neurons caused by its hard zero cutoff. To address this issue, we introduce N-ReLU (Noise-ReLU), a zero-mean stochastic extension of ReLU that replaces negative activations with Gaussian noise while preserving the same expected output. This expectation-aligned formulation maintains gradient flow in inactive regions and acts as an annealing-style regularizer during training. Experiments on the MNIST dataset using both multilayer perceptron (MLP) and convolutional neural network (CNN) architectures show that N-ReLU achieves accuracy comparable to or slightly exceeding that of ReLU, LeakyReLU, PReLU, GELU, and RReLU at moderate noise levels (sigma = 0.05-0.10), with stable convergence and no dead neurons observed. These results demonstrate that lightweight Gaussian noise injection offers a simple yet effective mechanism to enhance optimization robustness without modifying network structures or introducing additional parameters.
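
Read literally, the abstract describes an activation that passes positive inputs through unchanged and replaces the rest with zero-mean Gaussian noise. A minimal scalar sketch of that reading follows. The paper's exact formulation may differ (for example in how the noise is scheduled or switched off at inference), and the function name `n_relu` and its parameters are assumptions.

```python
import random


def n_relu(x, sigma=0.05, rng=None):
    """Scalar sketch of a noise-ReLU.

    Positive inputs pass through unchanged. Non-positive inputs are replaced
    by a sample from N(0, sigma^2), so the expected output is 0, the same
    value plain ReLU would return.
    """
    if x > 0:
        return x
    rng = rng or random
    return rng.gauss(0.0, sigma)


# Average many outputs for a negative input; the mean should sit near zero.
rng = random.Random(0)
samples = [n_relu(-1.0, sigma=0.1, rng=rng) for _ in range(10000)]
print(sum(samples) / len(samples))
```

The empirical mean stays close to zero, which is the sense in which the noisy branch matches ReLU in expectation while still producing nonzero outputs.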

Alignment-Constrained Dynamic Pruning for LLMs: Identifying and Preserving Alignment-Critical Circuits (cs.AI updates on arXiv.org)

arXiv:2511.07482v1 Announce Type: cross

Abstract: Large Language Models require substantial computational resources for inference, posing deployment challenges. While dynamic pruning offers superior efficiency over static methods through adaptive circuit selection, it exacerbates alignment degradation by retaining only input-dependent safety-critical circuit preservation across diverse inputs. As a result, addressing these heightened alignment vulnerabilities remains critical. We introduce Alignment-Aware Probe Pruning (AAPP), a dynamic structured pruning method that adaptively preserves alignment-relevant circuits during inference, building upon Probe Pruning. Experiments on LLaMA 2-7B, Qwen2.5-14B-Instruct, and Gemma-3-12B-IT show AAPP improves refusal rates by 50% at matched compute, enabling efficient yet safety-preserving LLM deployment.

Constrained and Robust Policy Synthesis with Satisfiability-Modulo-Probabilistic-Model-Checking (cs.AI updates on arXiv.org)

arXiv:2511.08078v1 Announce Type: cross

Abstract: The ability to compute reward-optimal policies for given and known finite Markov decision processes (MDPs) underpins a variety of applications across planning, controller synthesis, and verification. However, we often want policies (1) to be robust, i.e., they perform well on perturbations of the MDP and (2) to satisfy additional structural constraints regarding, e.g., their representation or implementation cost. Computing such robust and constrained policies is indeed computationally more challenging. This paper contributes the first approach to effectively compute robust policies subject to arbitrary structural constraints using a flexible and efficient framework. We achieve flexibility by allowing to express our constraints in a first-order theory over a set of MDPs, while the root for our efficiency lies in the tight integration of satisfiability solvers to handle the combinatorial nature of the problem and probabilistic model checking algorithms to handle the analysis of MDPs. Experiments on a few hundred benchmarks demonstrate the feasibility for constrained and robust policy synthesis and the competitiveness with state-of-the-art methods for various fragments of the problem.
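
For context, the unconstrained baseline the abstract starts from, computing a reward-optimal policy for a known finite MDP, can be sketched with classic value iteration. This is not the paper's method: it handles neither robustness nor structural constraints. The discounted-reward criterion, the two-state MDP, and all names are assumptions for illustration.

```python
def value_iteration(transitions, rewards, gamma=0.9, tol=1e-8):
    """Value iteration for a finite MDP under a discounted-reward criterion.

    transitions[s][a] is a list of (probability, next_state) pairs and
    rewards[s][a] is the immediate reward for taking action a in state s.
    Returns the converged state values and a greedy policy.
    """
    values = {s: 0.0 for s in transitions}

    def q_value(s, a):
        # Expected return of taking action a in state s under current values.
        return rewards[s][a] + gamma * sum(p * values[s2] for p, s2 in transitions[s][a])

    while True:
        delta = 0.0
        for s in transitions:
            best = max(q_value(s, a) for a in transitions[s])
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break

    policy = {s: max(transitions[s], key=lambda a: q_value(s, a)) for s in transitions}
    return values, policy


# Hypothetical two-state MDP: only staying in s1 earns reward.
transitions = {
    "s0": {"stay": [(1.0, "s0")], "go": [(0.8, "s1"), (0.2, "s0")]},
    "s1": {"stay": [(1.0, "s1")]},
}
rewards = {"s0": {"stay": 0.0, "go": 0.0}, "s1": {"stay": 1.0}}

values, policy = value_iteration(transitions, rewards)
print(policy)
```

A robust variant would need the chosen policy to perform well across a set of perturbed transition tables rather than a single one, which is the harder problem the abstract addresses.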
