When AI Persuades: Adversarial Explanation Attacks on Human Trust in AI-Assisted Decision Making

cs.AI updates on arXiv.org

arXiv:2602.04003v1 Announce Type: new

Abstract: Most adversarial threats in artificial intelligence target the computational behavior of models rather than the humans who rely on them. Yet modern AI systems increasingly operate within human decision loops, where users interpret and act on model recommendations. Large Language Models generate fluent natural-language explanations that shape how users perceive and trust AI outputs, revealing a new attack surface at the cognitive layer: the communication channel between AI and its users. We introduce adversarial explanation attacks (AEAs), where an attacker manipulates the framing of LLM-generated explanations to modulate human trust in incorrect outputs. We formalize this behavioral threat through the trust miscalibration gap, a metric that captures the difference in human trust between correct and incorrect outputs under adversarial explanations. By incorporating this gap, AEAs explore the daunting threats in which persuasive explanations reinforce users’ trust in incorrect predictions. To characterize this threat, we conducted a controlled experiment (n = 205), systematically varying four dimensions of explanation framing: reasoning mode, evidence type, communication style, and presentation format. Our findings show that users report nearly identical trust for adversarial and benign explanations, with adversarial explanations preserving the vast majority of benign trust despite being incorrect. The most vulnerable cases arise when AEAs closely resemble expert communication, combining authoritative evidence, neutral tone, and domain-appropriate reasoning. Vulnerability is highest on hard tasks, in fact-driven domains, and among participants who are less formally educated, younger, or highly trusting of AI. This is the first systematic security study that treats explanations as an adversarial cognitive channel and quantifies their impact on human trust in AI-assisted decision making.
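The abstract describes the trust miscalibration gap only in words, as the difference in human trust between correct and incorrect outputs. As a rough illustration, the sketch below assumes the gap is a simple difference of mean trust ratings and that "preserving benign trust" can be read as a ratio of means. The paper's exact formulas are not given here, so both function names, both definitions, and the rating values are hypothetical.

```python
from statistics import mean


def trust_miscalibration_gap(trust_correct, trust_incorrect):
    """Assumed definition: mean trust on correct outputs minus
    mean trust on incorrect outputs. A gap near zero means users
    trust wrong answers about as much as right ones."""
    return mean(trust_correct) - mean(trust_incorrect)


def trust_retention(trust_benign, trust_adversarial):
    """Assumed definition: share of benign-condition trust that
    survives under adversarial explanations (ratio of means)."""
    return mean(trust_adversarial) / mean(trust_benign)


# Hypothetical 1-7 Likert ratings, invented for illustration only;
# these are not data from the study.
benign_correct = [6, 5, 6, 7, 5]
adversarial_incorrect = [6, 5, 5, 7, 5]

gap = trust_miscalibration_gap(benign_correct, adversarial_incorrect)
retention = trust_retention(benign_correct, adversarial_incorrect)
print(f"gap={gap:.2f}, retention={retention:.1%}")
```

Under these assumptions, a small gap together with a retention close to 100% would correspond to the pattern the abstract reports: trust in incorrect outputs stays nearly as high as trust in correct ones.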


The U.S. Cybersecurity and Infrastructure Security Agency (CISA) has issued a new binding operational directive requiring federal agencies to identify and remove network edge devices that no longer receive security updates from manufacturers. […]

Cybersecurity researchers have discovered a new supply chain attack in which legitimate packages on npm and the Python Package Index (PyPI) repository have been compromised to push malicious versions to facilitate wallet credential theft and remote code execution. The compromised versions of the two packages are listed below – @dydxprotocol/v4-client-js (npm) – 3.4.1, 1.22.1, 1.15.2, […]

GPT-5 lowers the cost of cell-free protein synthesis

OpenAI News

An autonomous lab combining OpenAI’s GPT-5 with Ginkgo Bioworks’ cloud automation cut cell-free protein synthesis costs by 40% through closed-loop experimentation.


AI Expo 2026 Day 2: Moving experimental pilots to AI production

AI News

The second day of the co-located AI & Big Data Expo and Digital Transformation Week in London showed a market in a clear transition. Early excitement over generative models is fading. Enterprise leaders now face the friction of fitting these tools into current stacks. Day two sessions focused less on large language models and more

The post AI Expo 2026 Day 2: Moving experimental pilots to AI production appeared first on AI News.


OpenAI Just Launched GPT-5.3-Codex: A Faster Agentic Coding Model Unifying Frontier Code Performance And Professional Reasoning Into One System

MarkTechPost

OpenAI has just introduced GPT-5.3-Codex, a new agentic coding model that extends Codex from writing and reviewing code to handling a broad range of work on a computer. The model combines the frontier coding performance of GPT-5.2-Codex with the reasoning and professional knowledge capabilities of GPT-5.2 into a single system, and it runs 25% faster

The post OpenAI Just Launched GPT-5.3-Codex: A Faster Agentic Coding Model Unifying Frontier Code Performance And Professional Reasoning Into One System appeared first on MarkTechPost.


How to Build Production-Grade Data Validation Pipelines Using Pandera, Typed Schemas, and Composable DataFrame Contracts

MarkTechPost

In this tutorial, we demonstrate how to build robust, production-grade data validation pipelines using Pandera with typed DataFrame models. We start by simulating realistic, imperfect transactional data and progressively enforce strict schema constraints, column-level rules, and cross-column business logic using declarative checks. We show how lazy validation helps us surface multiple

The post How to Build Production-Grade Data Validation Pipelines Using Pandera, Typed Schemas, and Composable DataFrame Contracts appeared first on MarkTechPost.


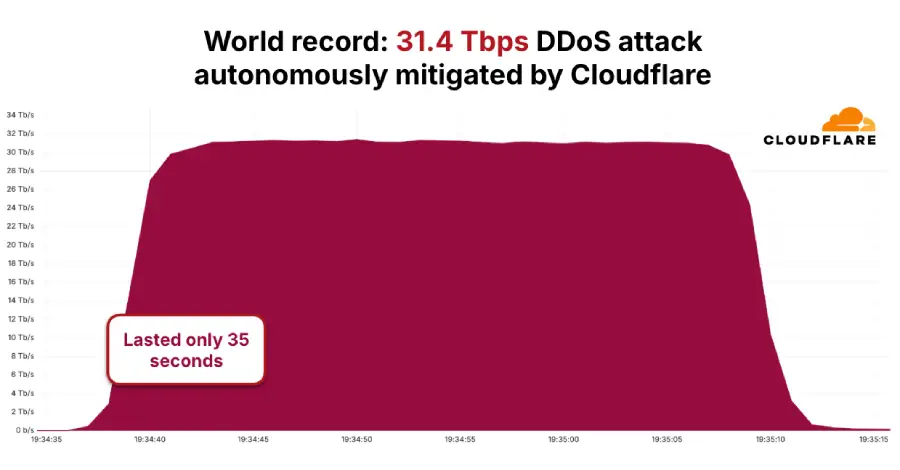

The distributed denial-of-service (DDoS) botnet known as AISURU/Kimwolf has been attributed to a record-setting attack that peaked at 31.4 Terabits per second (Tbps) and lasted only 35 seconds. Cloudflare, which automatically detected and mitigated the activity, said it’s part of a growing number of hyper-volumetric HTTP DDoS attacks mounted by the botnet in the fourth […]

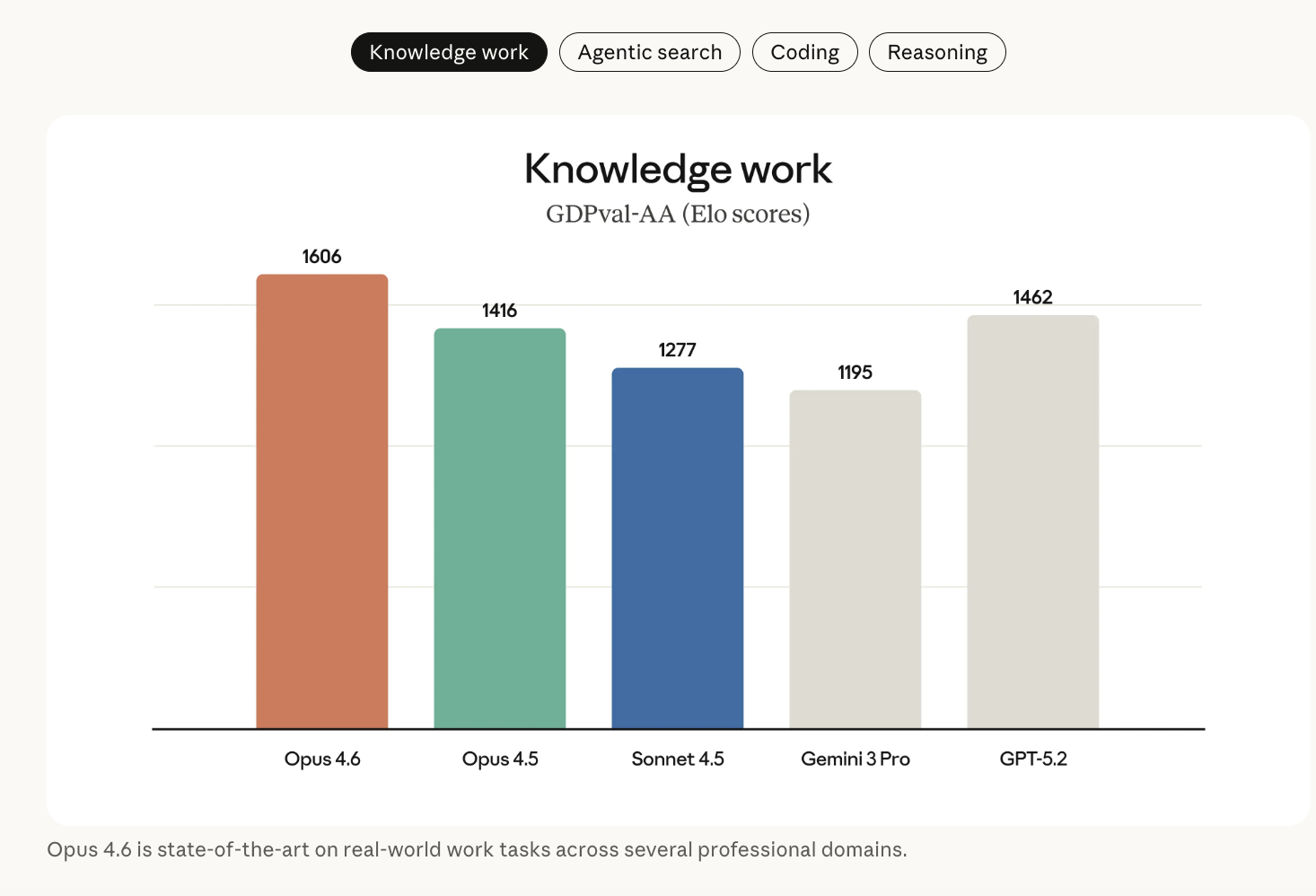

Anthropic Releases Claude Opus 4.6 With 1M Context, Agentic Coding, Adaptive Reasoning Controls, and Expanded Safety Tooling Capabilities

MarkTechPost

Anthropic has launched Claude Opus 4.6, its most capable model to date, focused on long-context reasoning, agentic coding, and high-value knowledge work. The model builds on Claude Opus 4.5 and is now available on claude.ai, the Claude API, and major cloud providers under the ID claude-opus-4-6. Model focus: agentic work, not single answers Opus 4.6

The post Anthropic Releases Claude Opus 4.6 With 1M Context, Agentic Coding, Adaptive Reasoning Controls, and Expanded Safety Tooling Capabilities appeared first on MarkTechPost.


How Associa transforms document classification with the GenAI IDP Accelerator and Amazon Bedrock

Artificial Intelligence

Associa collaborated with the AWS Generative AI Innovation Center to build a generative AI-powered document classification system aligning with Associa’s long-term vision of using generative AI to achieve operational efficiencies in document management. The solution automatically categorizes incoming documents with high accuracy, processes documents efficiently, and provides substantial cost savings while maintaining operational excellence. The document classification system, developed using the Generative AI Intelligent Document Processing (GenAI IDP) Accelerator, is designed to integrate seamlessly into existing workflows. It revolutionizes how employees interact with document management systems by reducing the time spent on manual classification tasks.
