Data Visualization Explained (Part 5): Visualizing Time-Series Data in Python (Matplotlib, Plotly, and Altair)
Towards Data Science

An explanation of time-series visualization, including in-depth code examples in Matplotlib, Plotly, and Altair.

The post Data Visualization Explained (Part 5): Visualizing Time-Series Data in Python (Matplotlib, Plotly, and Altair) appeared first on Towards Data Science.


Data Cleaning at the Command Line for Beginner Data Scientists
KDnuggets

Data cleaning doesn’t always require Python or Excel. Learn how simple command-line tools can help you clean datasets faster and more efficiently.


How to choose the best thermal binoculars for long-range detection in 2026
AI News

Choosing the right thermal binoculars is essential for security professionals and outdoor specialists who need reliable long-range detection. Many users who previously relied on the market’s best night vision binoculars now seek advanced thermal imaging for superior clarity, extended range, and weather-independent performance. In 2026, ATN continues to lead the market with cutting-edge thermal binoculars

The post How to choose the best thermal binoculars for long-range detection in 2026 appeared first on AI News.


How Relevance Models Foreshadowed Transformers for NLP
Towards Data Science

Tracing the history of LLM attention: standing on the shoulders of giants

The post How Relevance Models Foreshadowed Transformers for NLP appeared first on Towards Data Science.


Oligo Security has warned of ongoing attacks exploiting a two-year-old security flaw in the Ray open-source artificial intelligence (AI) framework to turn infected clusters with NVIDIA GPUs into a self-replicating cryptocurrency mining botnet. The activity, codenamed ShadowRay 2.0, is an evolution of a prior wave that was observed between September 2023 and March 2024. The […]

A unique take on the software update gambit has allowed “PlushDaemon” to evade attention as it mostly targets Chinese organizations.

Editors from Dark Reading, Cybersecurity Dive, and TechTarget Search Security break down the depressing state of cybersecurity awareness campaigns and how organizations can overcome basic struggles with password hygiene and phishing attacks.

A major spike in malicious scanning against Palo Alto Networks GlobalProtect portals has been detected, starting on November 14, 2025. […]

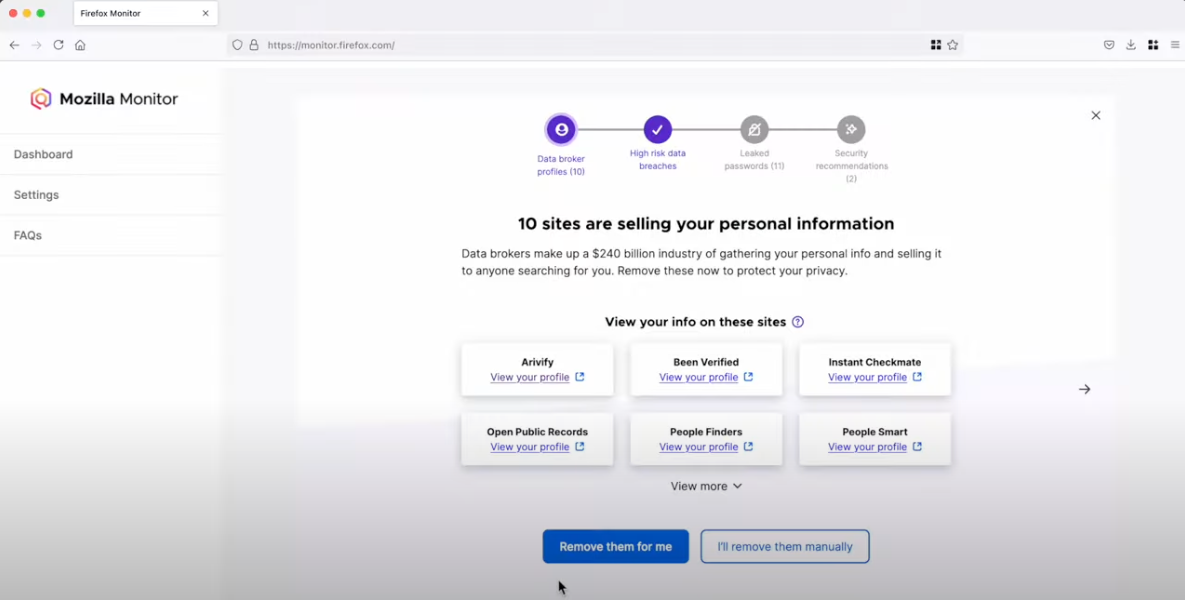

In March 2024, Mozilla said it was winding down its collaboration with Onerep — an identity protection service offered with the Firefox web browser that promises to remove users from hundreds of people-search sites — after KrebsOnSecurity revealed Onerep’s founder had created dozens of people-search services and was continuing to operate at least one of […]

Near-Lossless Model Compression Enables Longer Context Inference in DNA Large Language Models
cs.AI updates on arXiv.org

arXiv:2511.14694v1 Announce Type: cross

Abstract: Trained on massive cross-species DNA corpora, DNA large language models (LLMs) learn the fundamental “grammar” and evolutionary patterns of genomic sequences. This makes them powerful priors for DNA sequence modeling, particularly over long ranges. However, two major constraints hinder their use in practice: the quadratic computational cost of self-attention and the growing memory required for key-value (KV) caches during autoregressive decoding. These constraints force the use of heuristics such as fixed-window truncation or sliding windows, which compromise fidelity on ultra-long sequences by discarding distant information. We introduce FOCUS (Feature-Oriented Compression for Ultra-long Self-attention), a progressive context-compression module that can be plugged into pretrained DNA LLMs. FOCUS combines the established k-mer representation in genomics with learnable hierarchical compression: it inserts summary tokens at k-mer granularity and progressively compresses attention key and value activations across multiple Transformer layers, retaining only the summary KV states across windows while discarding ordinary-token KV. A shared-boundary windowing scheme yields a stationary cross-window interface that propagates long-range information with minimal loss. We validate FOCUS on an Evo-2-based DNA LLM fine-tuned on GRCh38 chromosome 1 with self-supervised training and randomized compression schedules to promote robustness across compression ratios. On held-out human chromosomes, FOCUS achieves near-lossless fidelity: compressing a 1 kb context into only 10 summary tokens (about 100x) shifts the average per-nucleotide probability by only about 0.0004. Compared to a baseline without compression, FOCUS reduces KV-cache memory and converts effective inference scaling from O(N^2) to near-linear O(N), enabling about 100x longer inference windows on commodity GPUs with near-lossless fidelity.
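The compression arithmetic in the abstract can be sanity-checked with a rough back-of-the-envelope sketch. This is not the FOCUS implementation: the model dimensions below (`NUM_LAYERS`, `NUM_KV_HEADS`, `HEAD_DIM`), the 2-byte value size, and the one-token-per-nucleotide tokenization are all hypothetical assumptions chosen for illustration, and the sketch simplifies by treating every layer as retaining only the summary tokens.

```python
# Rough KV-cache arithmetic for summary-token compression.
# All model dimensions are HYPOTHETICAL, not taken from the paper.

NUM_LAYERS = 32      # assumed number of attention layers
NUM_KV_HEADS = 32    # assumed number of key/value heads
HEAD_DIM = 128       # assumed per-head dimension
BYTES_PER_VALUE = 2  # assumed fp16/bf16 storage


def kv_cache_bytes(num_tokens: int) -> int:
    """Bytes needed to cache keys and values for num_tokens tokens."""
    # Factor of 2: one key tensor and one value tensor per layer.
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE
    return per_token * num_tokens


def compression_ratio(context_tokens: int, summary_tokens: int) -> float:
    """How many ordinary tokens each retained summary token stands in for."""
    return context_tokens / summary_tokens


# Figures from the abstract: a 1 kb context compressed into 10 summary tokens.
# Assumes one token per nucleotide, so 1 kb is 1000 tokens.
context_tokens = 1000
summary_tokens = 10

ratio = compression_ratio(context_tokens, summary_tokens)
full_bytes = kv_cache_bytes(context_tokens)
compressed_bytes = kv_cache_bytes(summary_tokens)

print(f"compression ratio: {ratio:.0f}x")
print(f"full KV cache per window:       {full_bytes / 2**20:.1f} MiB")
print(f"compressed KV cache per window: {compressed_bytes / 2**20:.1f} MiB")
```

Under these assumptions the cache carried across windows shrinks by the same factor as the token count, which is consistent with the abstract's "about 100x" figure. The actual savings depend on the real architecture and on how compression is staged across layers.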
