Provably Mitigating Corruption, Overoptimization, and Verbosity Simultaneously in Offline and Online RLHF/DPO Alignment (cs.AI updates on arXiv.org)

arXiv:2510.05526v2 Announce Type: replace-cross

Abstract: Reinforcement learning from human feedback (RLHF) and direct preference optimization (DPO) are important techniques for aligning large language models (LLMs) with human preferences. However, the quality of RLHF and DPO training is seriously compromised by Corrupted preferences, reward Overoptimization, and bias towards Verbosity. To our knowledge, most existing works tackle only one of these issues, and the few others require substantial computation to estimate multiple reward models and lack theoretical guarantees of generalization ability. In this work, we propose the RLHF-COV and DPO-COV algorithms, which simultaneously mitigate all three issues in both offline and online settings. This ability is demonstrated theoretically by obtaining length-regularized generalization error rates for our DPO-COV algorithms trained on corrupted data, which match the best-known rates for simpler cases with clean data and without length regularization. Moreover, our DPO-COV algorithm is simple to implement without reward estimation and is proved to be equivalent to our RLHF-COV algorithm, which directly implies the equivalence between the vanilla RLHF and DPO algorithms. Experiments demonstrate the effectiveness of our DPO-COV algorithms in both offline and online settings.
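For readers unfamiliar with the vanilla DPO algorithm that the abstract refers to, the following is a minimal sketch of the standard per-pair DPO loss. It is not the paper's DPO-COV objective, which the abstract does not spell out. The function name `dpo_loss`, its argument names, and the default `beta` are illustrative assumptions.

```python
import math


def dpo_loss(logp_w, logp_l, ref_logp_w, ref_logp_l, beta=0.1):
    """Vanilla DPO loss for one preference pair (hypothetical helper).

    logp_w / logp_l: policy log-probabilities of the preferred and
    dispreferred responses; ref_logp_*: the same under the reference model.
    """
    # Implicit reward margin between the preferred and dispreferred response.
    margin = beta * ((logp_w - ref_logp_w) - (logp_l - ref_logp_l))
    # -log(sigmoid(margin)), written in a numerically stable form.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

The loss equals log 2 when the policy and reference model agree on both responses, and it decreases as the policy raises the preferred response's log-probability relative to the reference.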

Fortinet, Ivanti, and SAP have moved to address critical security flaws in their products that, if successfully exploited, could result in an authentication bypass and code execution. The Fortinet vulnerabilities affect FortiOS, FortiWeb, FortiProxy, and FortiSwitchManager and relate to a case of improper verification of a cryptographic signature. They are tracked as CVE-2025-59718 and […]

Microsoft closed out 2025 with patches for 56 security flaws in various products across the Windows platform, including one vulnerability that has been actively exploited in the wild. Of the 56 flaws, three are rated Critical, and 53 are rated Important in severity. Two other defects are listed as publicly known at the time of […]

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

Inside the playbook of companies winning with AI (AI News)

Many companies are still working out how to use AI in a steady and practical way, but a small group is already pulling ahead. New research from NTT DATA outlines a playbook that shows how these “AI leaders” set themselves apart through strong plans, firm decisions, and a disciplined approach to building and using AI

The post Inside the playbook of companies winning with AI appeared first on AI News.

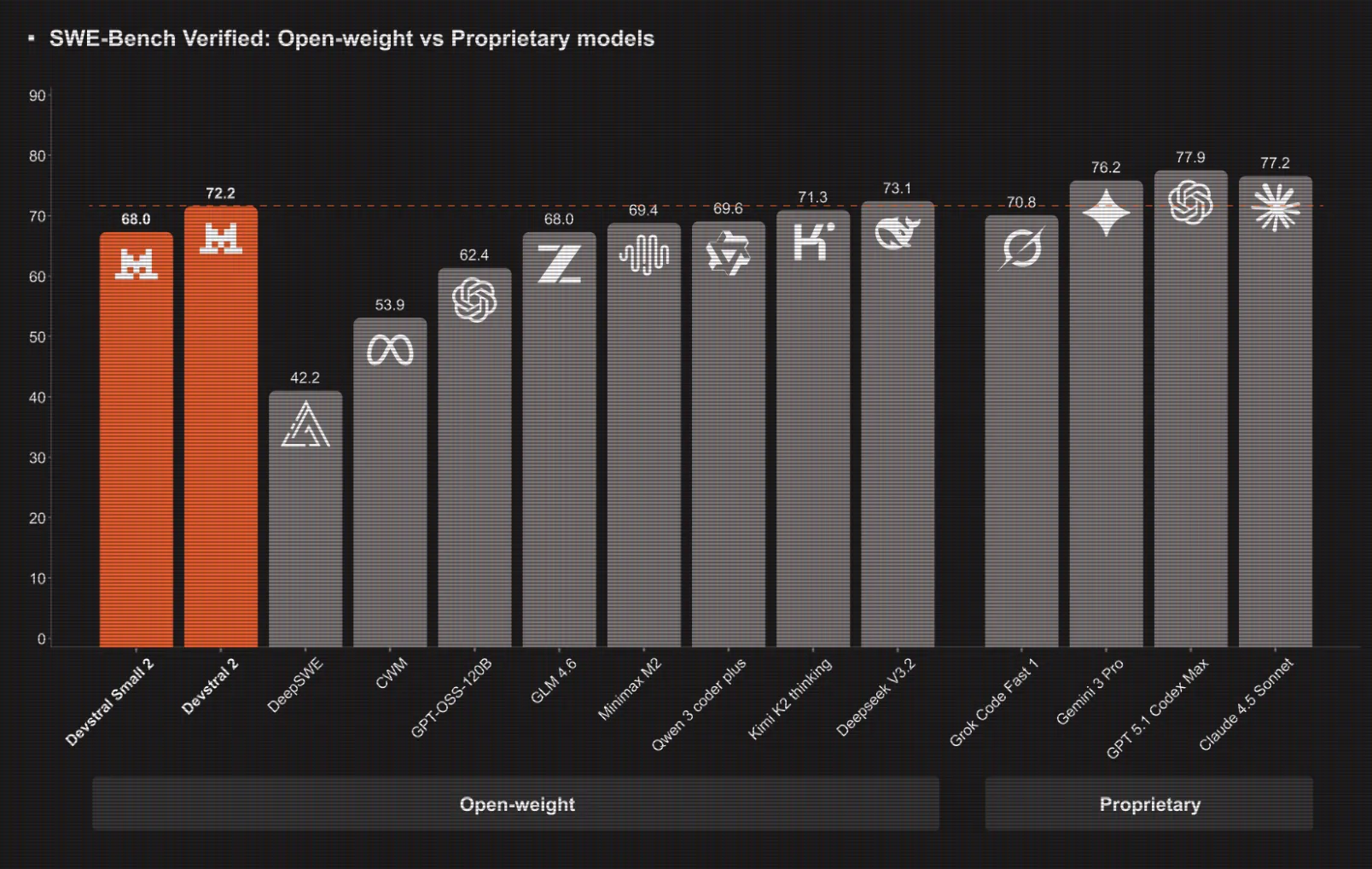

Mistral AI Ships Devstral 2 Coding Models And Mistral Vibe CLI For Agentic, Terminal Native Development (MarkTechPost)

Mistral AI has introduced Devstral 2, a next generation coding model family for software engineering agents, together with Mistral Vibe CLI, an open source command line coding assistant that runs inside the terminal or IDEs that support the Agent Communication Protocol. Devstral 2 and Devstral Small 2, model sizes, context and benchmarks: Devstral 2 is […]

The post Mistral AI Ships Devstral 2 Coding Models And Mistral Vibe CLI For Agentic, Terminal Native Development appeared first on MarkTechPost.

Arbitrage: Efficient Reasoning via Advantage-Aware Speculation (cs.AI updates on arXiv.org)

arXiv:2512.05033v2 Announce Type: replace-cross

Abstract: Modern Large Language Models achieve impressive reasoning capabilities with long Chains of Thought, but they incur substantial computational cost during inference, which motivates techniques to improve the performance-cost ratio. Among these techniques, Speculative Decoding accelerates inference by employing a fast but inaccurate draft model to autoregressively propose tokens, which are then verified in parallel by a more capable target model. However, due to unnecessary rejections caused by token mismatches in semantically equivalent steps, traditional token-level Speculative Decoding struggles in reasoning tasks. Although recent works have shifted to step-level semantic verification, which improves efficiency by accepting or rejecting entire reasoning steps, existing step-level methods still regenerate many rejected steps with little improvement, wasting valuable target compute. To address this challenge, we propose Arbitrage, a novel step-level speculative generation framework that routes generation dynamically based on the relative advantage between draft and target models. Instead of applying a fixed acceptance threshold, Arbitrage uses a lightweight router trained to predict when the target model is likely to produce a meaningfully better step. This routing approximates an ideal Arbitrage Oracle that always chooses the higher-quality step, achieving near-optimal efficiency-accuracy trade-offs. Across multiple mathematical reasoning benchmarks, Arbitrage consistently surpasses prior step-level Speculative Decoding baselines, reducing inference latency by up to $\sim 2\times$ at matched accuracy.
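The step-level routing idea in the abstract can be illustrated with a toy loop. This is only a sketch under stated assumptions, not the authors' implementation: `draft_step`, `target_step`, and `router` are hypothetical callables, and treating the router output as a probability compared against a `cutoff` is an assumption made here for illustration.

```python
from typing import Callable, List, Tuple

Step = str
Context = List[Step]


def route_steps(
    draft_step: Callable[[Context], Step],
    target_step: Callable[[Context], Step],
    router: Callable[[Context, Step], float],
    num_steps: int,
    cutoff: float = 0.5,
) -> Tuple[List[Step], int]:
    """Generate reasoning steps, calling the target model only when the
    router predicts it would produce a meaningfully better step."""
    steps: List[Step] = []
    target_calls = 0
    for _ in range(num_steps):
        # The cheap draft model always proposes the next step first.
        candidate = draft_step(steps)
        # Hypothetical router score: estimated chance the target does better.
        if router(steps, candidate) > cutoff:
            candidate = target_step(steps)
            target_calls += 1
        steps.append(candidate)
    return steps, target_calls
```

With stub models, for example a router that returns a high score only on the second step, the loop keeps the draft's first and third steps and spends a single target call on the second.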

This release addresses 57 vulnerabilities. 3 of these vulnerabilities are rated critical. One vulnerability was already exploited, and two were publicly disclosed before the patch was released. CVE-2025-62221: This privilege escalation vulnerability in the Microsoft Cloud Files Mini Filters driver is already being exploited. CVE-2025-54100: A PowerShell script using Invoke-WebRequest may execute scripts that are included […]

SAP has released its December security updates addressing 14 vulnerabilities across a range of products, including three critical-severity flaws. […]

Microsoft today pushed updates to fix at least 56 security flaws in its Windows operating systems and supported software. This final Patch Tuesday of 2025 tackles one zero-day bug that is already being exploited, as well as two publicly disclosed vulnerabilities. Despite releasing a lower-than-normal number of security updates these past few months, Microsoft patched […]