The browser has become the main interface to GenAI for most enterprises: from web-based LLMs and copilots, to GenAI‑powered extensions and agentic browsers like ChatGPT Atlas. Employees are leveraging the power of GenAI to draft emails, summarize documents, work on code, and analyze data, often by copying/pasting sensitive information directly into prompts or uploading files. […]

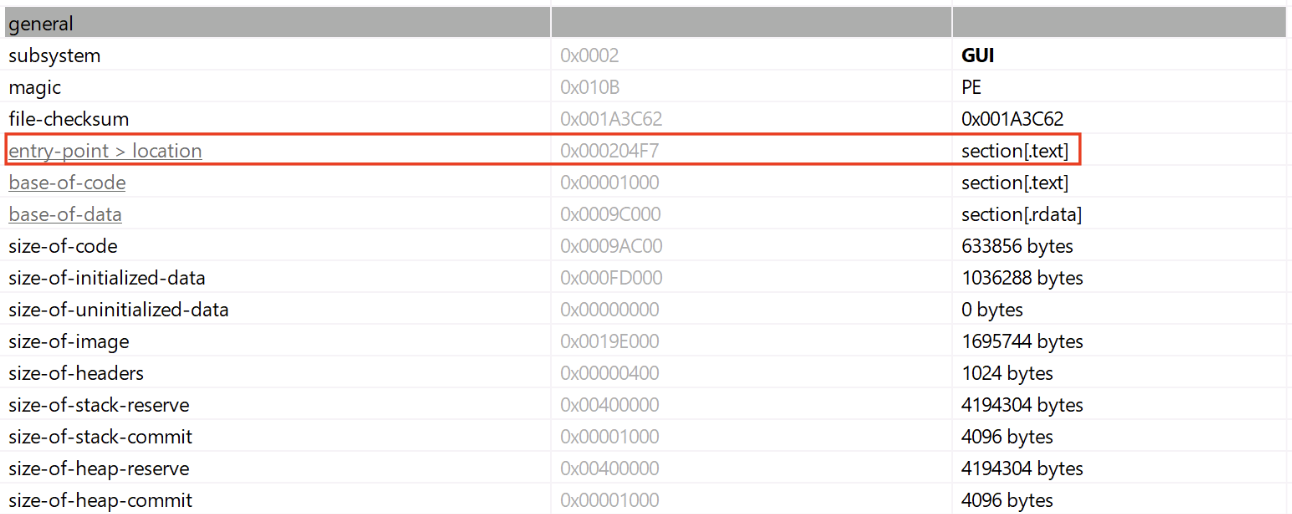

In the Microsoft Windows ecosystem, DLLs (Dynamic Link Libraries) are PE files, just like regular programs. One of the main differences is that they export functions that can be called by the programs that load them. For example, to call RegOpenKeyExA(), the program must first load ADVAPI32.dll. A PE file has a lot of headers (metadata) […]
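Because DLLs and regular programs share the PE layout, the headers are what tell them apart. The following is a minimal sketch, not a full parser: it reads the `e_lfanew` field of the DOS header, checks the `PE\0\0` signature, and tests the `IMAGE_FILE_DLL` (0x2000) bit in the COFF `Characteristics` field. The function names `is_dll` and `make_minimal_pe` are illustrative assumptions, and the synthetic image built here contains only the two headers needed for the check, so it is not a loadable file.

```python
import struct

IMAGE_FILE_DLL = 0x2000  # COFF Characteristics bit that marks an image as a DLL


def is_dll(data: bytes) -> bool:
    """Return True if `data` is a PE image whose header has the DLL flag set."""
    # DOS header: begins with "MZ"; the 4-byte e_lfanew field at offset 0x3C
    # holds the file offset of the PE signature.
    if len(data) < 0x40 or data[:2] != b"MZ":
        raise ValueError("not an MZ executable")
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("PE signature not found")
    # The 20-byte COFF file header follows the signature; Characteristics
    # is its last 2-byte field, at offset 18 within that header.
    if len(data) < pe_offset + 4 + 20:
        raise ValueError("truncated COFF header")
    (characteristics,) = struct.unpack_from("<H", data, pe_offset + 4 + 18)
    return bool(characteristics & IMAGE_FILE_DLL)


def make_minimal_pe(characteristics: int) -> bytes:
    """Build a header-only synthetic PE image (hypothetical test helper)."""
    dos = bytearray(0x40)
    dos[:2] = b"MZ"
    struct.pack_into("<I", dos, 0x3C, 0x40)  # PE signature right after DOS header
    # Machine, NumberOfSections, TimeDateStamp, PointerToSymbolTable,
    # NumberOfSymbols, SizeOfOptionalHeader, Characteristics
    coff = struct.pack("<HHIIIHH", 0x8664, 0, 0, 0, 0, 0, characteristics)
    return bytes(dos) + b"PE\0\0" + coff
```

On a real system the same function could be fed the bytes of an actual file, for instance `is_dll(open(path, "rb").read())`, where `path` is whatever image is under inspection.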

The U.S. Cybersecurity and Infrastructure Security Agency (CISA) on Thursday added a high-severity security flaw impacting OSGeo GeoServer to its Known Exploited Vulnerabilities (KEV) catalog, based on evidence of active exploitation in the wild. The vulnerability in question is CVE-2025-58360 (CVSS score: 8.2), an unauthenticated XML External Entity (XXE) flaw that affects all versions prior […]

SpatialScore: Towards Comprehensive Evaluation for Spatial Intelligence

cs.AI updates on arXiv.org, arXiv:2505.17012v2, Announce Type: replace-cross

Abstract: Existing evaluations of multimodal large language models (MLLMs) on spatial intelligence are typically fragmented and limited in scope. In this work, we aim to conduct a holistic assessment of the spatial understanding capabilities of modern MLLMs and propose complementary data-driven and agent-based solutions. Specifically, we make the following contributions: (i) we introduce SpatialScore, to our knowledge, the most comprehensive and diverse benchmark for multimodal spatial intelligence to date. It covers multiple visual data types, input modalities, and question-answering formats, and contains approximately 5K manually verified samples spanning 30 distinct tasks; (ii) using SpatialScore, we extensively evaluate 40 representative MLLMs, revealing persistent challenges and a substantial gap between current models and human-level spatial intelligence; (iii) to advance model capabilities, we construct SpatialCorpus, a large-scale training resource with 331K multimodal QA samples that supports fine-tuning on spatial reasoning tasks and significantly improves the performance of existing models (e.g., Qwen3-VL); (iv) to complement this data-driven route with a training-free paradigm, we develop SpatialAgent, a multi-agent system equipped with 12 specialized spatial perception tools that supports both Plan-Execute and ReAct reasoning, enabling substantial gains in spatial reasoning without additional model training. Extensive experiments and in-depth analyses demonstrate the effectiveness of our benchmark, corpus, and agent framework. We expect these resources to serve as a solid foundation for advancing MLLMs toward human-level spatial intelligence. All data, code, and models will be released to the research community.

Optimizing the non-Clifford-count in unitary synthesis using Reinforcement Learning

cs.AI updates on arXiv.org, arXiv:2509.21709v2, Announce Type: replace-cross

Abstract: In this paper we study the potential of using reinforcement learning (RL) to synthesize quantum circuits, while optimizing the T-count and CS-count, for unitaries that are exactly implementable by the Clifford+T and Clifford+CS gate sets, respectively. We have designed our RL framework to work with the channel representation of unitaries, which enables us to perform matrix operations efficiently using integers only. We have also incorporated pruning heuristics and a canonicalization of operators to reduce the search complexity. As a result, compared to previous works, we are able to implement significantly larger unitaries in less time, with a much better success rate and improvement factor. Our results for Clifford+T synthesis on two-qubit unitaries achieve close-to-optimal decompositions for up to 100 T gates, 5 times more than previous RL algorithms and, to the best of our knowledge, the largest instances achieved with any method to date. Our RL algorithm is able to recover the previously known optimal linear-complexity algorithm for T-count-optimal decomposition of 1-qubit unitaries. We illustrate a significant reduction in the asymptotic T-count estimate of important primitives like controlled cyclic shift (43%), controlled adder (14.3%) and multiplier (14%), without adding any extra ancilla. For 2-qubit Clifford+CS unitaries, our algorithm achieves a linear complexity, something that could only be accomplished by a previous algorithm using the SO(6) representation.

The LLM Wears Prada: Analysing Gender Bias and Stereotypes through Online Shopping Data

cs.AI updates on arXiv.org, arXiv:2504.01951v2, Announce Type: replace

Abstract: With the wide and cross-domain adoption of Large Language Models, it becomes crucial to assess to what extent the statistical correlations in training data, which underlie their impressive performance, hide subtle and potentially troubling biases. Gender bias in LLMs has been widely investigated from the perspectives of works, hobbies, and emotions typically associated with a specific gender. In this study, we introduce a novel perspective. We investigate whether LLMs can predict an individual’s gender based solely on online shopping histories and whether these predictions are influenced by gender biases and stereotypes. Using a dataset of historical online purchases from users in the United States, we evaluate the ability of six LLMs to classify gender, and we then analyze their reasoning and product-gender co-occurrences. Results indicate that while models can infer gender with moderate accuracy, their decisions are often rooted in stereotypical associations between product categories and gender. Furthermore, explicit instructions to avoid bias reduce the certainty of model predictions, but do not eliminate stereotypical patterns. Our findings highlight the persistent nature of gender biases in LLMs and emphasize the need for robust bias-mitigation strategies.

Free unofficial patches are available for a new Windows zero-day vulnerability that allows attackers to crash the Remote Access Connection Manager (RasMan) service. […]

Exploring Health Misinformation Detection with Multi-Agent Debate

cs.AI updates on arXiv.org, arXiv:2512.09935v1, Announce Type: new

Abstract: Fact-checking health-related claims has become increasingly critical as misinformation proliferates online. Effective verification requires both the retrieval of high-quality evidence and rigorous reasoning processes. In this paper, we propose a two-stage framework for health misinformation detection: Agreement Score Prediction followed by Multi-Agent Debate. In the first stage, we employ large language models (LLMs) to independently evaluate retrieved articles and compute an aggregated agreement score that reflects the overall evidence stance. When this score indicates insufficient consensus (falling below a predefined threshold), the system proceeds to a second stage. Multiple agents engage in structured debate to synthesize conflicting evidence and generate well-reasoned verdicts with explicit justifications. Experimental results demonstrate that our two-stage approach achieves superior performance compared to baseline methods, highlighting the value of combining automated scoring with collaborative reasoning for complex verification tasks.
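The two-stage control flow described in the abstract can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' implementation: the abstract does not say how the agreement score is computed, so the sketch assumes each retrieved article yields a stance in [-1, 1] (refutes to supports), takes their mean as the aggregated score, and treats its absolute value as the consensus strength. The names `verify_claim`, `debate`, and the default `threshold` of 0.5 are all hypothetical.

```python
from statistics import mean
from typing import Callable, Sequence, Tuple


def verify_claim(
    stances: Sequence[float],
    debate: Callable[[Sequence[float]], str],
    threshold: float = 0.5,  # hypothetical value; the paper only says "predefined"
) -> Tuple[str, float]:
    """Return (verdict, agreement_score) for a non-empty list of article stances."""
    if not stances:
        raise ValueError("at least one article stance is required")
    # Stage 1: aggregate the per-article stances into one agreement score
    # (assumed here to be a plain mean).
    score = mean(stances)
    # Sufficient consensus: decide directly from the sign of the score.
    if abs(score) >= threshold:
        return ("supported" if score > 0 else "refuted"), score
    # Stage 2: consensus is too weak, so hand the evidence to the debate step.
    return debate(stances), score


def stub_debate(stances: Sequence[float]) -> str:
    """Placeholder for the multi-agent debate; a real system would call LLM agents."""
    return "needs-debate"
```

With this gating, clear-cut claims never pay the cost of the debate stage; only claims whose evidence is mixed are escalated.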

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

Microsoft’s Copilot usage analysis exposes the 2am philosophy question trend

AI News

F. Scott Fitzgerald observed that “in a real dark night of the soul, it is always three o’clock in the morning.” Microsoft’s latest Copilot usage analysis suggests this nocturnal tendency toward existential contemplation persists in the AI age, with religion and philosophy conversations rising through the rankings during early morning hours. The Microsoft AI

The post Microsoft’s Copilot usage analysis exposes the 2am philosophy question trend appeared first on AI News.
