Foundation models on the bridge: Semantic hazard detection and safety maneuvers for maritime autonomy with vision-language models

cs.AI updates on arXiv.org

arXiv:2512.24470v2 Announce Type: replace-cross

Abstract: The draft IMO MASS Code requires autonomous and remotely supervised maritime vessels to detect departures from their operational design domain, enter a predefined fallback that notifies the operator, permit immediate human override, and avoid changing the voyage plan without approval. Meeting these obligations in the alert-to-takeover gap calls for a short-horizon, human-overridable fallback maneuver. Classical maritime autonomy stacks struggle when the correct action depends on meaning (e.g., diver-down flag means people in the water, fire close by means hazard). We argue (i) that vision-language models (VLMs) provide semantic awareness for such out-of-distribution situations, and (ii) that a fast-slow anomaly pipeline with a short-horizon, human-overridable fallback maneuver makes this practical in the handover window. We introduce Semantic Lookout, a camera-only, candidate-constrained VLM fallback maneuver selector that selects one cautious action (or station-keeping) from water-valid, world-anchored trajectories under continuous human authority. On 40 harbor scenes we measure per-call scene understanding and latency, alignment with human consensus (model majority-of-three voting), short-horizon risk-relief on fire hazard scenes, and an on-water alert->fallback maneuver->operator handover. Sub-10 s models retain most of the awareness of slower state-of-the-art models. The fallback maneuver selector outperforms geometry-only baselines and increases standoff distance on fire scenes. A field run verifies end-to-end operation. These results support VLMs as semantic fallback maneuver selectors compatible with the draft IMO MASS Code, within practical latency budgets, and motivate future work on domain-adapted, hybrid autonomy that pairs foundation-model semantics with multi-sensor bird’s-eye-view perception and short-horizon replanning. Website: kimachristensen.github.io/bridge_policy
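
The majority-of-three voting mentioned in the abstract can be sketched as follows. This is only an illustration, not the authors' implementation: the action labels, the `majority_of_three` helper, and the rule of falling back to station-keeping when all three votes disagree are assumptions made for the example.

```python
from collections import Counter
from typing import Sequence

# Hypothetical label for the "hold position" option; not taken from the paper.
STATION_KEEPING = "station_keeping"


def majority_of_three(votes: Sequence[str], fallback: str = STATION_KEEPING) -> str:
    """Return the action picked by at least two of three votes, else the fallback."""
    if len(votes) != 3:
        raise ValueError("expected exactly three votes")
    action, count = Counter(votes).most_common(1)[0]
    # Assumption: with three distinct votes there is no majority, so stay cautious.
    return action if count >= 2 else fallback


if __name__ == "__main__":
    # Two of three model calls agree, so that action wins.
    print(majority_of_three(["veer_starboard", "veer_starboard", "slow_ahead"]))
    # No agreement, so the (assumed) cautious default is returned.
    print(majority_of_three(["veer_starboard", "slow_ahead", "reverse"]))
```

Voting over three independent calls trades extra latency for stability, which matters when a single VLM response may be noisy.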

Decomposing LLM Self-Correction: The Accuracy-Correction Paradox and Error Depth Hypothesis

cs.AI updates on arXiv.org

arXiv:2601.00828v1 Announce Type: new

Abstract: Large Language Models (LLMs) are widely believed to possess self-correction capabilities, yet recent studies suggest that intrinsic self-correction, where models correct their own outputs without external feedback, remains largely ineffective. In this work, we systematically decompose self-correction into three distinct sub-capabilities: error detection, error localization, and error correction. Through cross-model experiments on GSM8K-Complex (n=500 per model, 346 total errors) with three major LLMs, we uncover a striking Accuracy-Correction Paradox: weaker models (GPT-3.5, 66% accuracy) achieve 1.6x higher intrinsic correction rates than stronger models (DeepSeek, 94% accuracy), 26.8% vs 16.7%. We propose the Error Depth Hypothesis: stronger models make fewer but deeper errors that resist self-correction. Error detection rates vary dramatically across architectures (10% to 82%), yet detection capability does not predict correction success: Claude detects only 10% of errors but corrects 29% intrinsically. Surprisingly, providing error location hints hurts all models. Our findings challenge linear assumptions about model capability and self-improvement, with important implications for the design of self-refinement pipelines.
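
As a quick check of the headline figure, the snippet below recomputes the 1.6x ratio from the two correction rates quoted in the abstract. The per-model error counts are only what the rounded accuracies would imply at n=500; they are an approximation for illustration, not numbers reported by the authors.

```python
# Intrinsic correction rates (%), as quoted in the abstract.
weak_rate = 26.8    # GPT-3.5
strong_rate = 16.7  # DeepSeek

ratio = weak_rate / strong_rate
print(f"correction-rate ratio: {ratio:.2f}x")  # prints 1.60x

# Approximate error counts implied by the rounded accuracies (assumption:
# exactly 66% and 94% of n=500; the true counts may differ by a few items).
n = 500
weak_errors = n * (100 - 66) // 100
strong_errors = n * (100 - 94) // 100
print(weak_errors, strong_errors)  # prints 170 30
```

The stronger model leaves far fewer errors to correct, so its correction rate rests on a much smaller sample.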

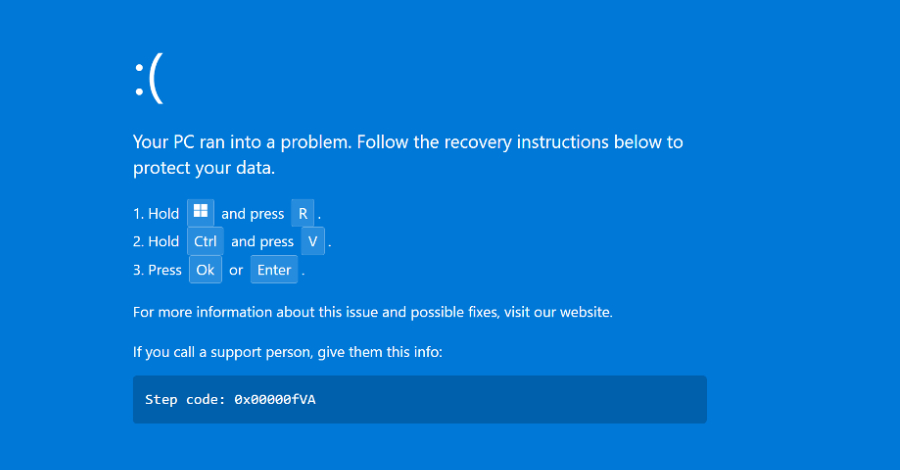

Source: Securonix

Cybersecurity researchers have disclosed details of a new campaign dubbed PHALT#BLYX that has leveraged ClickFix-style lures to display fixes for fake blue screen of death (BSoD) errors in attacks targeting the European hospitality sector. The end goal of the multi-stage campaign is to deliver a remote access trojan known as DCRat, according to […]

Scientists create robots smaller than a grain of salt that can think

Artificial Intelligence News -- ScienceDaily

Researchers have created microscopic robots so small they’re barely visible, yet smart enough to sense, decide, and move completely on their own. Powered by light and equipped with tiny computers, the robots swim by manipulating electric fields rather than using moving parts. They can detect temperature changes, follow programmed paths, and even work together in groups. The breakthrough marks the first truly autonomous robots at this microscopic scale.

GliNER2: Extracting Structured Information from Text

Towards Data Science

From unstructured text to structured Knowledge Graphs

The post GliNER2: Extracting Structured Information from Text appeared first on Towards Data Science.

5 AI-powered tools streamlining contract management today

AI News

Contract work has evolved to touch privacy, security, revenue recognition, data residency, vendor risk, renewals and numerous internal approvals. At the same time, teams are expected to turn agreements around faster and keep every signed obligation visible after signature. Artificial intelligence is becoming a practical layer in this process. It can read language at scale,

The post 5 AI-powered tools streamlining contract management today appeared first on AI News.

2025’s AI chip wars: What enterprise leaders learned about supply chain reality

AI News

AI chip shortage became the defining constraint for enterprise AI deployments in 2025, forcing CTOs to confront an uncomfortable reality: semiconductor geopolitics and supply chain physics matter more than software roadmaps or vendor commitments. What began as US export controls restricting advanced AI chips to China evolved into a broader infrastructure crisis affecting enterprises globally, not

The post 2025’s AI chip wars: What enterprise leaders learned about supply chain reality appeared first on AI News.

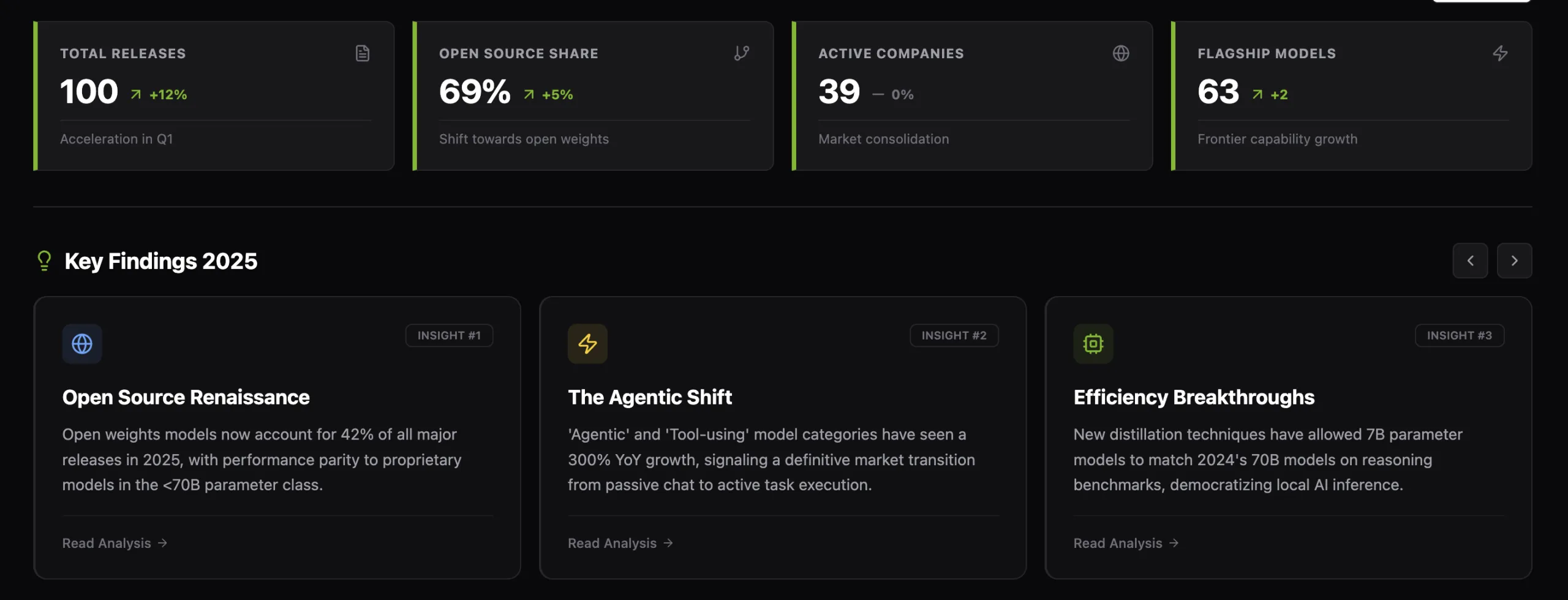

Marktechpost Releases ‘AI2025Dev’: A Structured Intelligence Layer for AI Models, Benchmarks, and Ecosystem Signals

MarkTechPost

Marktechpost has released AI2025Dev, its 2025 analytics platform (available to AI developers and researchers without any signup or login), designed to convert the year’s AI activity into a queryable dataset spanning model releases, openness, training scale, benchmark performance, and ecosystem participants. Marktechpost is a California-based AI news platform covering machine learning, deep learning, and

The post Marktechpost Releases ‘AI2025Dev’: A Structured Intelligence Layer for AI Models, Benchmarks, and Ecosystem Signals appeared first on MarkTechPost.

Harm in AI-Driven Societies: An Audit of Toxicity Adoption on Chirper.ai

cs.AI updates on arXiv.org

arXiv:2601.01090v1 Announce Type: cross

Abstract: Large Language Models (LLMs) are increasingly embedded in autonomous agents that participate in online social ecosystems, where interactions are sequential, cumulative, and only partially controlled. While prior work has documented the generation of toxic content by LLMs, far less is known about how exposure to harmful content shapes agent behavior over time, particularly in environments composed entirely of interacting AI agents. In this work, we study toxicity adoption of LLM-driven agents on Chirper.ai, a fully AI-driven social platform. Specifically, we model interactions in terms of stimuli (posts) and responses (comments), and we operationalize exposure through observable interactions rather than inferred recommendation mechanisms.

We conduct a large-scale empirical analysis of agent behavior, examining how response toxicity relates to stimulus toxicity, how repeated exposure affects the likelihood of toxic responses, and whether toxic behavior can be predicted from exposure alone. Our findings show that while toxic responses are more likely following toxic stimuli, a substantial fraction of toxicity emerges spontaneously, independent of exposure. At the same time, cumulative toxic exposure significantly increases the probability of toxic responding. We further introduce two influence metrics, the Influence-Driven Response Rate and the Spontaneous Response Rate, revealing a strong trade-off between induced and spontaneous toxicity. Finally, we show that the number of toxic stimuli alone enables accurate prediction of whether an agent will eventually produce toxic content.

These results highlight exposure as a critical risk factor in the deployment of LLM agents and suggest that monitoring encountered content may provide a lightweight yet effective mechanism for auditing and mitigating harmful behavior in the wild.
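
To make the stimulus/response framing concrete, the sketch below computes how often a response is toxic after a toxic stimulus versus after a non-toxic one. It is a hypothetical illustration: the `conditional_toxic_rates` helper and the toy records are made up for the example, and these two rates are not the paper's Influence-Driven Response Rate or Spontaneous Response Rate, whose definitions the abstract does not give.

```python
from typing import Iterable, Tuple


def conditional_toxic_rates(pairs: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Return (P(toxic response | toxic stimulus), P(toxic response | non-toxic stimulus)).

    Each pair is (stimulus_is_toxic, response_is_toxic).
    """
    toxic_total = toxic_hits = clean_total = clean_hits = 0
    for stimulus_toxic, response_toxic in pairs:
        if stimulus_toxic:
            toxic_total += 1
            toxic_hits += int(response_toxic)
        else:
            clean_total += 1
            clean_hits += int(response_toxic)
    # NaN signals that a condition never occurred in the data.
    after_toxic = toxic_hits / toxic_total if toxic_total else float("nan")
    after_clean = clean_hits / clean_total if clean_total else float("nan")
    return after_toxic, after_clean


# Made-up toy records, for illustration only (not data from the study).
toy = [(True, True), (True, False), (False, False),
       (False, True), (False, False), (False, False)]
print(conditional_toxic_rates(toy))  # prints (0.5, 0.25)
```

A non-zero rate after non-toxic stimuli corresponds to what the abstract calls toxicity that emerges spontaneously, independent of exposure.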

The Russia-aligned threat actor known as UAC-0184 has been observed targeting Ukrainian military and government entities by leveraging the Viber messaging platform to deliver malicious ZIP archives. “This organization has continued to conduct high-intensity intelligence gathering activities against Ukrainian military and government departments in 2025,” the 360 Threat Intelligence Center said in