Reliable Audio Deepfake Detection in Variable Conditions via Quantum-Kernel SVMs

cs.AI updates on arXiv.org arXiv:2512.18797v1 Announce Type: cross

Abstract: Detecting synthetic speech is challenging when labeled data are scarce and recording conditions vary. Existing end-to-end deep models often overfit or fail to generalize, and while kernel methods can remain competitive, their performance depends heavily on the chosen kernel. Here, we show that using a quantum kernel in audio deepfake detection reduces false-positive rates without increasing model size. Quantum feature maps embed data into high-dimensional Hilbert spaces, enabling the use of expressive similarity measures and compact classifiers. Building on this motivation, we compare quantum-kernel SVMs (QSVMs) with classical SVMs using identical mel-spectrogram preprocessing and stratified 5-fold cross-validation across four corpora (ASVspoof 2019 LA, ASVspoof 5 (2024), ADD23, and an In-the-Wild set). QSVMs achieve consistently lower equal-error rates (EER): 0.183 vs. 0.299 on ASVspoof 5 (2024), 0.081 vs. 0.188 on ADD23, 0.346 vs. 0.399 on ASVspoof 2019, and 0.355 vs. 0.413 on In-the-Wild. At the EER operating point (where FPR equals FNR), these correspond to absolute false-positive-rate reductions of 0.116 (38.8%), 0.107 (56.9%), 0.053 (13.3%), and 0.058 (14.0%), respectively. We also report how consistent the results are across cross-validation folds and margin-based measures of class separation, using identical settings for both models. The only modification is the kernel; the features and SVM remain unchanged, no additional trainable parameters are introduced, and the quantum kernel is computed on a conventional computer.
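
The reductions quoted in the abstract can be re-derived from the EER pairs alone. The following is a minimal sketch, not code from the paper: the dictionary `EER` and the helper `fpr_reduction` are hypothetical names introduced here, and the only inputs are the eight EER values stated above. It relies on the abstract's own observation that FPR equals FNR at the EER operating point, so the EER difference is the FPR difference.

```python
# EER pairs (QSVM, classical SVM) copied from the abstract; nothing else is assumed.
EER = {
    "ASVspoof 5 (2024)": (0.183, 0.299),
    "ADD23": (0.081, 0.188),
    "ASVspoof 2019": (0.346, 0.399),
    "In-the-Wild": (0.355, 0.413),
}


def fpr_reduction(qsvm_eer, svm_eer):
    """Return (absolute reduction, relative reduction in percent).

    At the EER operating point FPR == FNR == EER, so the drop in EER
    is also the drop in false-positive rate.
    """
    absolute = svm_eer - qsvm_eer
    relative_pct = 100.0 * absolute / svm_eer
    return round(absolute, 3), round(relative_pct, 1)


# Hypothetical helper output: one (absolute, relative %) pair per corpus.
reductions = {name: fpr_reduction(q, c) for name, (q, c) in EER.items()}

for name, (abs_red, rel_pct) in reductions.items():
    print(f"{name}: {abs_red:.3f} absolute ({rel_pct:.1f}% relative)")
```

The relative figure is taken against the classical-SVM EER, which is the baseline that reproduces the percentages given in the abstract.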

Datasets for machine learning and for assessing the intelligence level of automatic patent search systems

cs.AI updates on arXiv.org arXiv:2512.18384v1 Announce Type: cross

Abstract: The key to success in automating prior art search in patent research using artificial intelligence lies in developing large datasets for machine learning and ensuring their availability. This work is dedicated to providing a comprehensive solution to the problem of creating infrastructure for research in this field, including datasets and tools for calculating search quality criteria. The paper discusses the concept of semantic clusters of patent documents that determine the state of the art in a given subject, as proposed by the authors. A definition of such semantic clusters is also provided. Prior art search is presented as the task of identifying elements within a semantic cluster of patent documents in the subject area specified by the document under consideration. A generator of user-configurable datasets for machine learning, based on collections of U.S. and Russian patent documents, is described. The dataset generator creates a database of links to documents in semantic clusters. Then, based on user-defined parameters, it forms a dataset of semantic clusters in JSON format for machine learning. To evaluate machine learning outcomes, it is proposed to calculate search quality scores that account for semantic clusters of the documents being searched. To automate the evaluation process, the paper describes a utility developed by the authors for assessing the quality of prior art document search.

Microsoft Teams will automatically enable messaging safety features by default in January to strengthen defenses against content tagged as malicious. […]

The Clop ransomware gang has stolen the data of nearly 3.5 million University of Phoenix (UoPX) students, staff, and suppliers after breaching the university’s network in August. […]

Coupang disclosed a data breach affecting 33.7 million customers after unauthorized access to personal data went undetected for nearly five months. Penta Security explains how the incident highlights insider credential abuse risks and why encrypting customer data beyond legal requirements can reduce exposure and limit damage. […]

The latest variant of the MacSync information stealer targeting macOS systems is delivered through a digitally signed, notarized Swift application. […]

Gistr: The Smart AI Notebook for Organizing Knowledge

KDnuggets

This article explains how Gistr transforms the way data professionals interact with their most valuable asset: their accumulated knowledge.

The Geometry of Laziness: What Angles Reveal About AI Hallucinations

Towards Data Science

A story about failing forward, spheres you can’t visualize, and why sometimes the math knows things before we do.

The post The Geometry of Laziness: What Angles Reveal About AI Hallucinations appeared first on Towards Data Science.

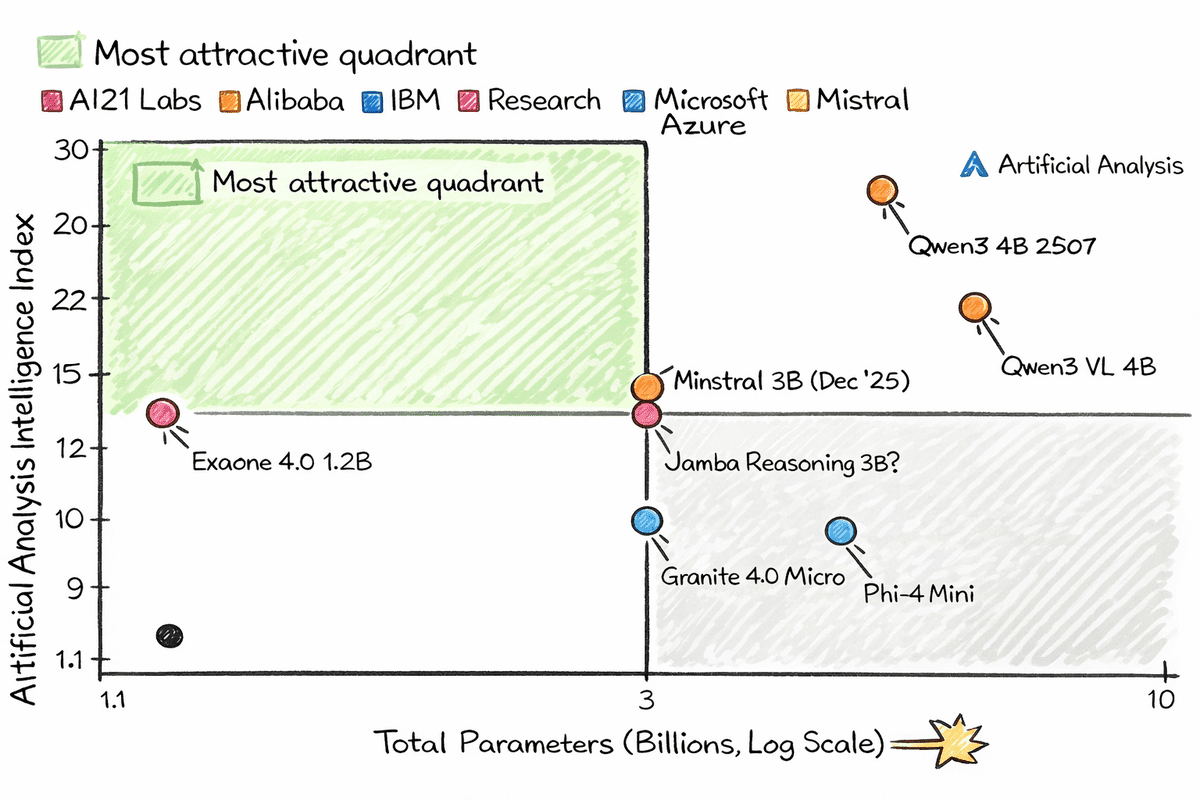

7 Tiny AI Models for Raspberry Pi

KDnuggets

This is a list of top LLMs and VLMs that are fast, smart, and small enough to run locally on devices as small as a Raspberry Pi or even a smart fridge.

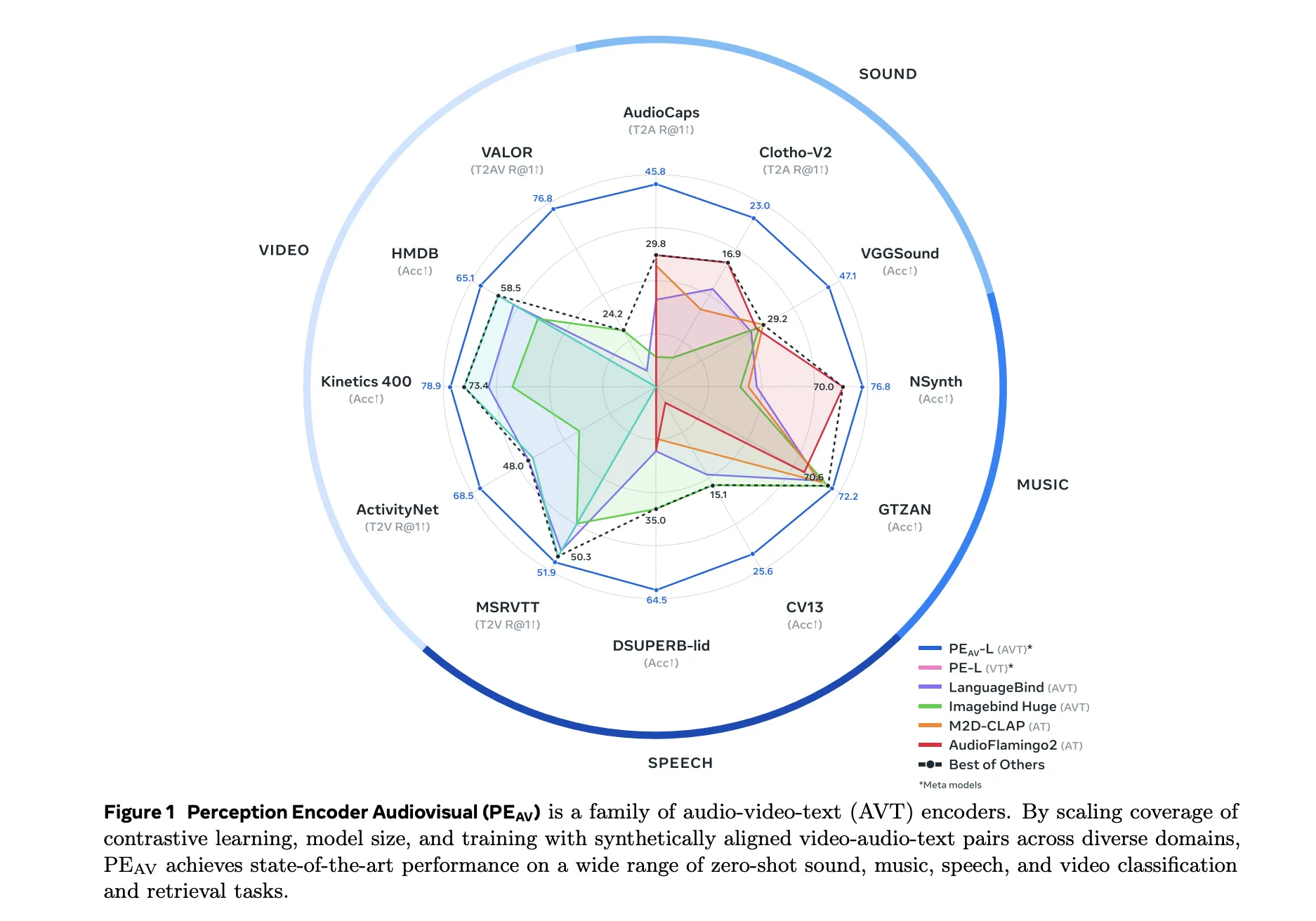

Meta AI Open-Sourced Perception Encoder Audiovisual (PE-AV): The Audiovisual Encoder Powering SAM Audio And Large Scale Multimodal Retrieval

MarkTechPost

Meta researchers have introduced Perception Encoder Audiovisual, PEAV, as a new family of encoders for joint audio and video understanding. The model learns aligned audio, video, and text representations in a single embedding space using large-scale contrastive training on about 100M audio-video pairs with text captions. From Perception Encoder to PEAV Perception Encoder,

The post Meta AI Open-Sourced Perception Encoder Audiovisual (PE-AV): The Audiovisual Encoder Powering SAM Audio And Large Scale Multimodal Retrieval appeared first on MarkTechPost.
