RMAAT: Astrocyte-Inspired Memory Compression and Replay for Efficient Long-Context Transformers

cs.AI updates on arXiv.org, arXiv:2601.00426v1, Announce Type: cross

Abstract: The quadratic complexity of the self-attention mechanism presents a significant impediment to applying Transformer models to long sequences. This work explores computational principles derived from astrocytes (glial cells critical for biological memory and synaptic modulation) as a complementary approach to conventional architectural modifications for efficient self-attention. We introduce the Recurrent Memory Augmented Astromorphic Transformer (RMAAT), an architecture integrating abstracted astrocyte functionalities. RMAAT employs a recurrent, segment-based processing strategy where persistent memory tokens propagate contextual information. An adaptive compression mechanism, governed by a novel retention factor derived from simulated astrocyte long-term plasticity (LTP), modulates these tokens. Attention within segments utilizes an efficient, linear-complexity mechanism inspired by astrocyte short-term plasticity (STP). Training is performed using Astrocytic Memory Replay Backpropagation (AMRB), a novel algorithm designed for memory efficiency in recurrent networks. Evaluations on the Long Range Arena (LRA) benchmark demonstrate RMAAT’s competitive accuracy and substantial improvements in computational and memory efficiency, indicating the potential of incorporating astrocyte-inspired dynamics into scalable sequence models.
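The abstract describes memory tokens that are carried from one segment to the next and modulated by a retention factor. The toy sketch below illustrates only that general idea. The blending rule, the mean-pooled segment summary, and the names `compress_memory` and `process_sequence` are assumptions made for illustration; the paper derives its retention factor from simulated astrocyte LTP and uses a linear-complexity attention mechanism within segments, neither of which is modelled here.

```python
from typing import List


def compress_memory(memory: List[float], summary: List[float], retention: float) -> List[float]:
    """Blend the old memory with a new segment summary (hypothetical rule).

    retention = 1.0 keeps the old memory unchanged; retention = 0.0 overwrites it.
    """
    if not 0.0 <= retention <= 1.0:
        raise ValueError("retention must lie in [0, 1]")
    return [retention * m + (1.0 - retention) * s for m, s in zip(memory, summary)]


def process_sequence(tokens: List[List[float]], segment_len: int, retention: float) -> List[float]:
    """Walk over a long sequence segment by segment, carrying one memory vector."""
    dim = len(tokens[0])
    memory = [0.0] * dim
    for start in range(0, len(tokens), segment_len):
        segment = tokens[start:start + segment_len]
        # Mean pooling is a stand-in for whatever the model computes inside a segment.
        summary = [sum(tok[d] for tok in segment) / len(segment) for d in range(dim)]
        memory = compress_memory(memory, summary, retention)
    return memory


if __name__ == "__main__":
    sequence = [[1.0], [1.0], [3.0], [3.0]]
    print(process_sequence(sequence, segment_len=2, retention=0.5))
```

Because each step only sees one segment plus a fixed-size memory, the cost per step in this sketch does not grow with the total sequence length, which is the property a segment-recurrent design is after.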

It’s complicated. The relationship of algorithmic fairness and non-discrimination provisions for high-risk systems in the EU AI Act

cs.AI updates on arXiv.org, arXiv:2501.12962v5, Announce Type: replace-cross

Abstract: What constitutes a fair decision? This question is not only difficult for humans but becomes more challenging when Artificial Intelligence (AI) models are used. In light of discriminatory algorithmic behaviors, the EU has recently passed the AI Act, which mandates specific rules for high-risk systems, incorporating both traditional legal non-discrimination regulations and machine learning-based algorithmic fairness concepts. This paper aims to bridge these two different concepts in the AI Act through, first, a necessary high-level introduction of both concepts targeting legal and computer science-oriented scholars and, second, an in-depth analysis of the AI Act’s relationship between legal non-discrimination regulations and algorithmic fairness. Our analysis reveals three key findings: (1) Most non-discrimination regulations target only high-risk AI systems. (2) The regulation of high-risk systems encompasses both data input requirements and output monitoring, though these regulations are partly inconsistent and raise questions of computational feasibility. (3) Finally, we consider the possible (future) interaction of classical EU non-discrimination law and the AI Act regulations. We recommend developing more specific auditing and testing methodologies for AI systems. This paper aims to serve as a foundation for future interdisciplinary collaboration between legal scholars and computer science-oriented machine learning researchers studying discrimination in AI systems.

Mortar: Evolving Mechanics for Automatic Game Design

cs.AI updates on arXiv.org, arXiv:2601.00105v1, Announce Type: new

Abstract: We present Mortar, a system for autonomously evolving game mechanics for automatic game design. Game mechanics define the rules and interactions that govern gameplay, and designing them manually is a time-consuming and expert-driven process. Mortar combines a quality-diversity algorithm with a large language model to explore a diverse set of mechanics, which are evaluated by synthesising complete games that incorporate both evolved mechanics and those drawn from an archive. The mechanics are evaluated by composing complete games through a tree search procedure, where the resulting games are evaluated by their ability to preserve a skill-based ordering over players — that is, whether stronger players consistently outperform weaker ones. We assess the mechanics based on their contribution towards the skill-based ordering score in the game. We demonstrate that Mortar produces games that appear diverse and playable, and mechanics that contribute more towards the skill-based ordering score in the game. We perform ablation studies to assess the role of each system component and a user study to evaluate the games based on human feedback.
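The abstract evaluates games by whether stronger players consistently outperform weaker ones. One simple way to quantify that idea is a pairwise concordance score, sketched below. The function name `skill_ordering_score` and the exact formula are assumptions for illustration, not the metric defined in the paper.

```python
from itertools import combinations
from typing import Sequence


def skill_ordering_score(skill: Sequence[float], outcome: Sequence[float]) -> float:
    """Fraction of player pairs in which the more skilled player scored strictly higher.

    Pairs with equal skill are ignored because they carry no expected ordering.
    """
    concordant = 0
    total = 0
    for i, j in combinations(range(len(skill)), 2):
        if skill[i] == skill[j]:
            continue
        total += 1
        stronger, weaker = (i, j) if skill[i] > skill[j] else (j, i)
        if outcome[stronger] > outcome[weaker]:
            concordant += 1
    return concordant / total if total else 0.0


if __name__ == "__main__":
    # Three players of increasing skill; the two strongest finish in the wrong order.
    print(skill_ordering_score([1, 2, 3], [10, 30, 20]))
```

A score of 1.0 means game results fully preserve the skill ordering, and a score near 0.5 would suggest outcomes are close to unrelated to skill.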

Beyond Perfect APIs: A Comprehensive Evaluation of LLM Agents Under Real-World API Complexity

cs.AI updates on arXiv.org, arXiv:2601.00268v1, Announce Type: cross

Abstract: We introduce WildAGTEval, a benchmark designed to evaluate large language model (LLM) agents’ function-calling capabilities under realistic API complexity. Unlike prior work that assumes an idealized API system and disregards real-world factors such as noisy API outputs, WildAGTEval accounts for two dimensions of real-world complexity: 1. API specification, which includes detailed documentation and usage constraints, and 2. API execution, which captures runtime challenges. Consequently, WildAGTEval offers (i) an API system encompassing 60 distinct complexity scenarios that can be composed into approximately 32K test configurations, and (ii) user-agent interactions for evaluating LLM agents on these scenarios. Using WildAGTEval, we systematically assess several advanced LLMs and observe that most scenarios are challenging, with irrelevant information complexity posing the greatest difficulty and reducing the performance of strong LLMs by 27.3%. Furthermore, our qualitative analysis reveals that LLMs occasionally distort user intent merely to claim task completion, critically affecting user satisfaction.

Towards Automated Differential Diagnosis of Skin Diseases Using Deep Learning and Imbalance-Aware Strategies

cs.AI updates on arXiv.org, arXiv:2601.00286v1, Announce Type: cross

Abstract: As dermatological conditions become increasingly common and the availability of dermatologists remains limited, there is a growing need for intelligent tools to support both patients and clinicians in the timely and accurate diagnosis of skin diseases. In this project, we developed a deep learning-based model for the classification and diagnosis of skin conditions. By leveraging pretraining on publicly available skin disease image datasets, our model effectively extracted visual features and accurately classified various dermatological cases. Throughout the project, we refined the model architecture, optimized data preprocessing workflows, and applied targeted data augmentation techniques to improve overall performance. The final model, based on the Swin Transformer, achieved a prediction accuracy of 87.71 percent across eight skin lesion classes on the ISIC2019 dataset. These results demonstrate the model’s potential as a diagnostic support tool for clinicians and a self-assessment aid for patients.

Narrative-to-Scene Generation: An LLM-Driven Pipeline for 2D Game Environments

cs.AI updates on arXiv.org, arXiv:2509.04481v2, Announce Type: replace-cross

Abstract: Recent advances in large language models (LLMs) enable compelling story generation, but connecting narrative text to playable visual environments remains an open challenge in procedural content generation (PCG). We present a lightweight pipeline that transforms short narrative prompts into a sequence of 2D tile-based game scenes, reflecting the temporal structure of stories. Given an LLM-generated narrative, our system identifies three key time frames, extracts spatial predicates in the form of “Object-Relation-Object” triples, and retrieves visual assets using affordance-aware semantic embeddings from the GameTileNet dataset. A layered terrain is generated using Cellular Automata, and objects are placed using spatial rules grounded in the predicate structure. We evaluated our system in ten diverse stories, analyzing tile-object matching, affordance-layer alignment, and spatial constraint satisfaction across frames. This prototype offers a scalable approach to narrative-driven scene generation and lays the foundation for future work on multi-frame continuity, symbolic tracking, and multi-agent coordination in story-centered PCG.

Reasoning in Action: MCTS-Driven Knowledge Retrieval for Large Language Models

cs.AI updates on arXiv.org, arXiv:2601.00003v1, Announce Type: new

Abstract: Large language models (LLMs) typically enhance their performance through either the retrieval of semantically similar information or the improvement of their reasoning capabilities. However, a significant challenge remains in effectively integrating both retrieval and reasoning strategies to optimize LLM performance. In this paper, we introduce a reasoning-aware knowledge retrieval method that enriches LLMs with information aligned to the logical structure of conversations, moving beyond surface-level semantic similarity. We follow a coarse-to-fine approach for knowledge retrieval. First, we identify a contextually relevant sub-region of the knowledge base, ensuring that all sentences within it are relevant to the context topic. Next, we refine our search within this sub-region to extract knowledge that is specifically relevant to the reasoning process. Throughout both phases, we employ the Monte Carlo Tree Search-inspired search method to effectively navigate through knowledge sentences using common keywords. Experiments on two multi-turn dialogue datasets demonstrate that our knowledge retrieval approach not only aligns more closely with the underlying reasoning in human conversations but also significantly enhances the diversity of the retrieved knowledge, resulting in more informative and creative responses.

AI Interview Series #5: Prompt Caching

MarkTechPost

Question: Imagine your company’s LLM API costs suddenly doubled last month. A deeper analysis shows that while user inputs look different at a text level, many of them are semantically similar. As an engineer, how would you identify and reduce this redundancy without impacting response quality?

What is Prompt Caching? Prompt caching is an optimization

The post AI Interview Series #5: Prompt Caching appeared first on MarkTechPost.
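The excerpt is cut off before it explains its answer, so the following is only one common way to approach the question it poses: a response cache keyed on similarity rather than exact text. The class `SemanticCache`, the 0.8 threshold, and the bag-of-words `embed` function are all assumptions for illustration. Bag-of-words overlap is lexical rather than truly semantic; a real deployment would more likely swap in vectors from an embedding model.

```python
import math
import re
from collections import Counter
from typing import List, Optional, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Returns a stored response when a new prompt is close enough to an old one."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []

    def store(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))

    def lookup(self, prompt: str) -> Optional[str]:
        query = embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None


if __name__ == "__main__":
    cache = SemanticCache()
    cache.store("How do I reset my password?", "Use the reset link on the login page.")
    print(cache.lookup("how do i reset my password"))   # differs only in case and punctuation
    print(cache.lookup("What is the weather today?"))   # unrelated prompt, cache miss
```

The threshold is the knob that trades cost savings against response quality: set too low, the cache returns answers to questions that were not actually asked.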


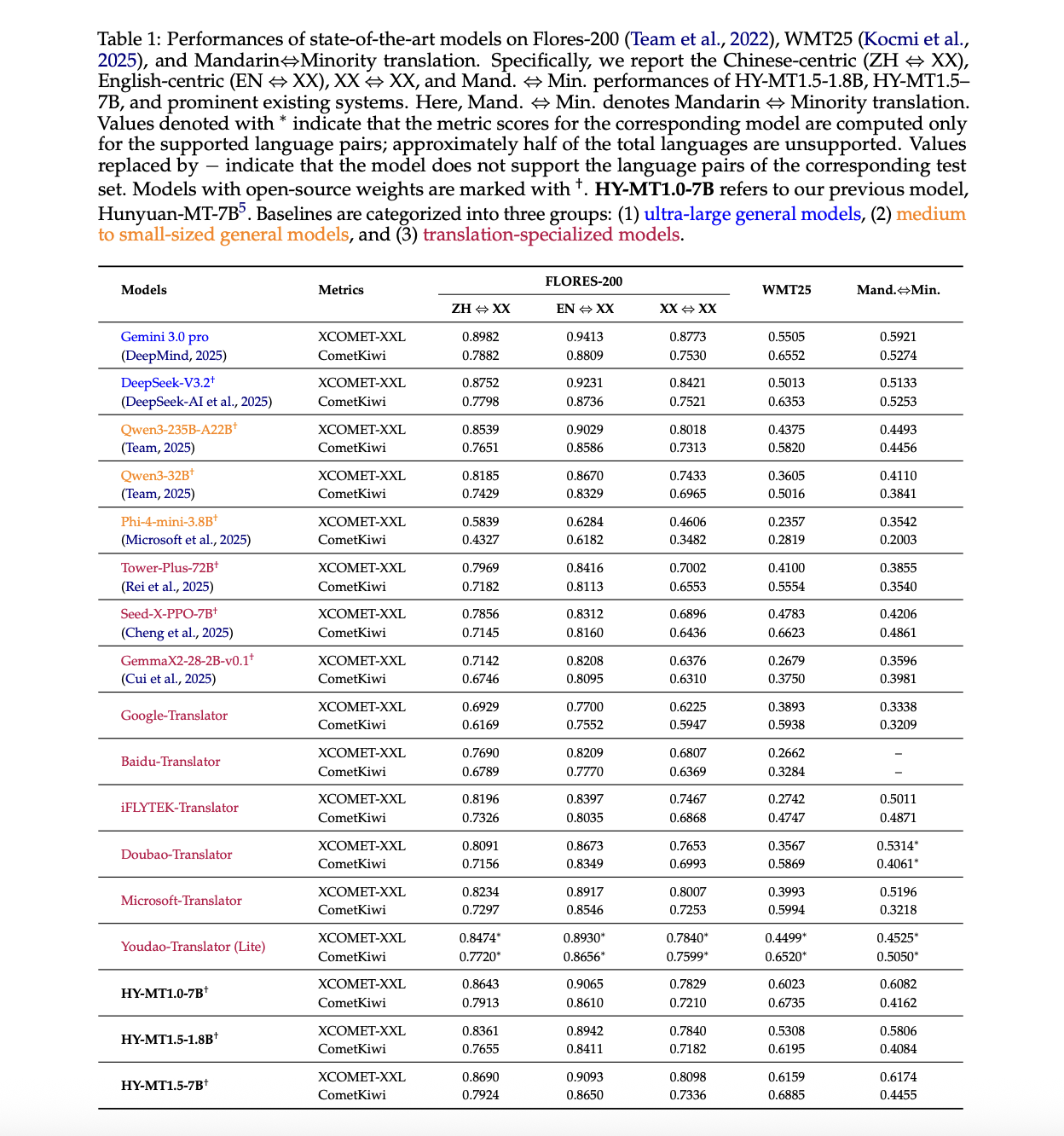

Tencent Researchers Release Tencent HY-MT1.5: A New Translation Models Featuring 1.8B and 7B Models Designed for Seamless on-Device and Cloud Deployment

MarkTechPost

Tencent Hunyuan researchers have released HY-MT1.5, a multilingual machine translation family that targets both mobile devices and cloud systems with the same training recipe and metrics. HY-MT1.5 consists of 2 translation models, HY-MT1.5-1.8B and HY-MT1.5-7B, supports mutual translation across 33 languages with 5 ethnic and dialect variations, and is available on GitHub and Hugging Face

The post Tencent Researchers Release Tencent HY-MT1.5: A New Translation Models Featuring 1.8B and 7B Models Designed for Seamless on-Device and Cloud Deployment appeared first on MarkTechPost.

LLM-Pruning Collection: A JAX Based Repo For Structured And Unstructured LLM Compression

MarkTechPost

Zlab Princeton researchers have released LLM-Pruning Collection, a JAX-based repository that consolidates major pruning algorithms for large language models into a single, reproducible framework. It targets one concrete goal: to make it easy to compare block-level, layer-level, and weight-level pruning methods under a consistent training and evaluation stack on both GPUs and

The post LLM-Pruning Collection: A JAX Based Repo For Structured And Unstructured LLM Compression appeared first on MarkTechPost.
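For readers unfamiliar with weight-level (unstructured) pruning, the sketch below shows the simplest variant, magnitude pruning, on a flat list of weights. It is plain Python and does not use or reflect the repository's API; the function name `magnitude_prune` and the `sparsity` parameter are hypothetical, and the algorithms collected in the repository may use different criteria.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest absolute values."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must lie in [0, 1]")
    num_to_drop = int(len(weights) * sparsity)
    if num_to_drop == 0:
        return list(weights)
    # Rank weight indices from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(order[:num_to_drop])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    print(magnitude_prune([0.1, -2.0, 0.5, -0.05], sparsity=0.5))
```

Block-level and layer-level pruning, by contrast, remove whole groups of weights at once, which is what makes them "structured".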
