6 Docker Tricks to Simplify Your Data Science Reproducibility (KDnuggets)

Read these 6 tricks for treating your Docker container like a reproducible artifact, not a disposable wrapper.

Stop Blaming the Data: A Better Way to Handle Covariance Shift (Towards Data Science)

Instead of using shift as an excuse for poor performance, use Inverse Probability Weighting to estimate how your model should perform in the new environment.

The post Stop Blaming the Data: A Better Way to Handle Covariance Shift appeared first on Towards Data Science.
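The teaser names Inverse Probability Weighting but gives no details, so what follows is only a minimal sketch under our own assumptions, not the article's method. It assumes covariate shift (the input distribution p(x) changes while p(y | x) stays fixed) and a single discrete covariate, so each labeled source example can be reweighted by the density ratio p_target(x) / p_source(x). The weighted accuracy on source data then estimates accuracy under the target input mix. The names `ipw_weights` and `weighted_accuracy` and the toy data are hypothetical.

```python
from collections import Counter


def ipw_weights(source_x, target_x):
    """Weight each labeled source example by p_target(x) / p_source(x).

    Assumes x is a single discrete covariate and that p(y | x) is the same in
    both environments (covariate shift). Values that appear only in the target
    have no source examples, so their contribution cannot be estimated here.
    """
    src_counts = Counter(source_x)
    tgt_counts = Counter(target_x)
    n_src, n_tgt = len(source_x), len(target_x)
    return [
        (tgt_counts[x] / n_tgt) / (src_counts[x] / n_src)
        for x in source_x
    ]


def weighted_accuracy(correct, weights):
    """Self-normalized weighted mean of per-example correctness (1 or 0)."""
    return sum(c * w for c, w in zip(correct, weights)) / sum(weights)


# Hypothetical toy data: segment "a" dominates the source, "b" the target.
source_x = ["a"] * 6 + ["b"] * 4
correct = [1] * 6 + [1, 1, 0, 0]   # model is right on all "a", half of "b"
target_x = ["a"] * 2 + ["b"] * 8   # unlabeled inputs from the new environment

weights = ipw_weights(source_x, target_x)
print(sum(correct) / len(correct))                    # 0.8 on the source data
print(round(weighted_accuracy(correct, weights), 3))  # 0.6 estimated for the target
```

The unweighted source accuracy (0.8) overstates performance in the new environment; reweighting recovers 0.2 * 1.0 + 0.8 * 0.5 = 0.6, which is what the toy target mix implies.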

Ray: Distributed Computing for All, Part 1 (Towards Data Science)

From single- to multi-core on your local PC and beyond.

The post Ray: Distributed Computing for All, Part 1 appeared first on Towards Data Science.

From single to multi-core on your local PC and beyond

The post Ray: Distributed Computing for All, Part 1 appeared first on Towards Data Science. Read More

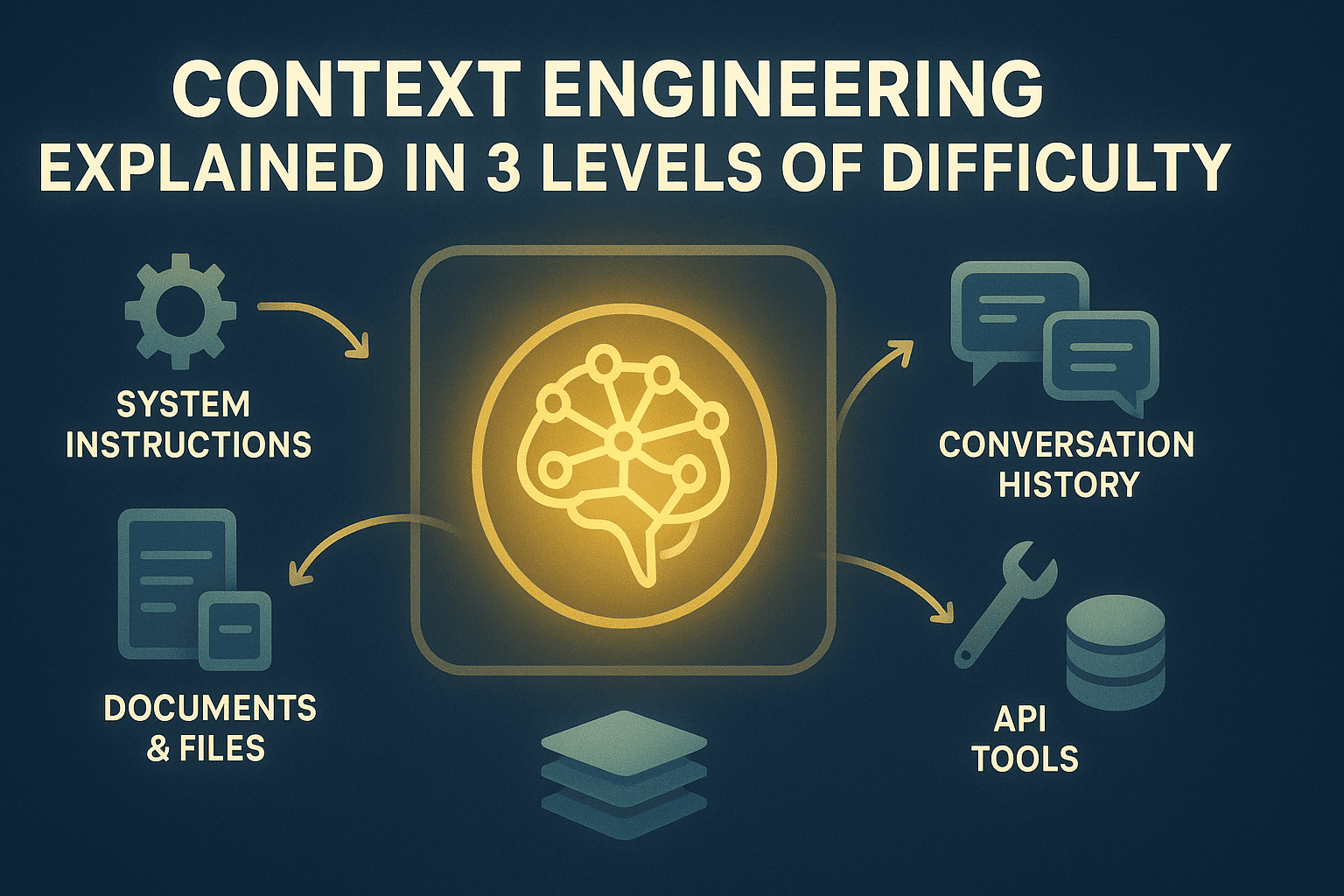

Context Engineering Explained in 3 Levels of Difficulty (KDnuggets)

Long-running LLM applications degrade when context is unmanaged. Context engineering turns the context window into a deliberate, optimized resource. Learn more in this article.
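The teaser does not say which techniques the article covers. As one minimal illustration of treating the context window as a budgeted resource, here is a hypothetical sketch that always keeps the system prompt and then adds the most recent messages until a token budget is used up. Counting tokens by splitting on whitespace is a crude stand-in assumption; a real application would use its model's own tokenizer. The names `count_tokens` and `build_context` are illustrative only.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def build_context(system_prompt: str, history: list[str], budget: int) -> list[str]:
    """Keep the system prompt, then the newest messages that fit in `budget` tokens."""
    used = count_tokens(system_prompt)
    kept: list[str] = []
    for message in reversed(history):   # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > budget:
            break                       # this and all older messages are dropped
        kept.append(message)
        used += cost
    return [system_prompt] + kept[::-1]  # restore chronological order


history = ["a b c d", "e f", "g h i"]
print(build_context("You are helpful", history, budget=8))
# ['You are helpful', 'e f', 'g h i']
```

Stopping at the first message that does not fit, rather than skipping over it, keeps the retained history contiguous.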

Feature Detection, Part 3: Harris Corner Detection (Towards Data Science)

Finding the most informative points in images.

The post Feature Detection, Part 3: Harris Corner Detection appeared first on Towards Data Science.
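Harris corner detection scores each pixel with R = det(M) - k * trace(M)^2, where the structure tensor M collects windowed sums of Ix^2, Ix*Iy and Iy^2 from the image gradients. A large positive R suggests a corner, a negative R an edge, and R near zero a flat region. The pure-Python sketch below is ours, not the article's code: it uses central-difference gradients and an unweighted 3x3 window instead of the usual Gaussian weighting, and k = 0.04 is a commonly used value. The function name `harris_response` and the test image are hypothetical.

```python
def harris_response(img, k=0.04):
    """Harris response R = det(M) - k * trace(M)**2 for a 2D list of floats.

    Simplifications (assumptions, not the article's code): central-difference
    gradients, an unweighted 3x3 window for M, and R left at 0 on the border.
    """
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0

    r = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Structure tensor entries summed over the 3x3 neighborhood.
            sxx = sxy = syy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    sxy += gx * gy
                    syy += gy * gy
            det = sxx * syy - sxy * sxy
            trace = sxx + syy
            r[y][x] = det - k * trace * trace
    return r


# Hypothetical test image: a bright 6x6 square on a dark 12x12 background.
size = 12
img = [[1.0 if 3 <= y <= 8 and 3 <= x <= 8 else 0.0 for x in range(size)]
       for y in range(size)]
r = harris_response(img)
print(round(r[3][3], 4))  # corner of the square: 0.7775
print(round(r[3][5], 4))  # middle of the top edge: -0.09
print(r[1][1])            # flat background: 0.0
```

At the square's corner both gradient directions are present, so det(M) dominates; along an edge only one direction varies, det(M) is zero, and the trace term makes R negative.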

I Asked ChatGPT, Claude and DeepSeek to Build Tetris (KDnuggets)

Which of these state-of-the-art models writes the best code?

Brightspeed, one of the largest fiber broadband companies in the United States, is investigating security breach and data theft claims made by the Crimson Collective extortion gang. […]

Cybersecurity researchers have disclosed details of a new Python-based information stealer called VVS Stealer (also styled as VVS $tealer) that’s capable of harvesting Discord credentials and tokens. The stealer is said to have been on sale on Telegram as far back as April 2025, according to a report from Palo Alto Networks Unit 42. “VVS […]

Ilya Lichtenstein, who was sentenced to prison last year on money laundering charges in connection with his role in the massive hack of cryptocurrency exchange Bitfinex in 2016, said he has been released early. In a post shared on X last week, the 38-year-old announced his release, crediting U.S. President Donald Trump’s First Step Act. […]

Ask, Clarify, Optimize: Human-LLM Agent Collaboration for Smarter Inventory Control (cs.AI updates on arXiv.org)

arXiv:2601.00121v1 Announce Type: new

Abstract: Inventory management remains a challenge for many small and medium-sized businesses that lack the expertise to deploy advanced optimization methods. This paper investigates whether Large Language Models (LLMs) can help bridge this gap. We show that employing LLMs as direct, end-to-end solvers incurs a significant “hallucination tax”: a performance gap arising from the model’s inability to perform grounded stochastic reasoning. To address this, we propose a hybrid agentic framework that strictly decouples semantic reasoning from mathematical calculation. In this architecture, the LLM functions as an intelligent interface, eliciting parameters from natural language and interpreting results while automatically calling rigorous algorithms to build the optimization engine.

To evaluate this interactive system against the ambiguity and inconsistency of real-world managerial dialogue, we introduce the Human Imitator, a fine-tuned “digital twin” of a boundedly rational manager that enables scalable, reproducible stress-testing. Our empirical analysis reveals that the hybrid agentic framework reduces total inventory costs by 32.1% relative to an interactive baseline using GPT-4o as an end-to-end solver. Moreover, we find that providing perfect ground-truth information alone is insufficient to improve GPT-4o’s performance, confirming that the bottleneck is fundamentally computational rather than informational. Our results position LLMs not as replacements for operations research, but as natural-language interfaces that make rigorous, solver-based policies accessible to non-experts.
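The abstract's central design choice is that the LLM never does the arithmetic: it elicits parameters from dialogue and explains results, while a deterministic solver computes the policy. The abstract does not name the paper's algorithms, so this sketch swaps in the textbook single-period newsvendor model with normally distributed demand as a stand-in solver, where the optimal order is the demand quantile at the critical fractile (price - cost) / (price - salvage). `InventoryParams`, `newsvendor_order_quantity`, and `recommend` are hypothetical names, and the LLM side is stubbed out.

```python
from dataclasses import dataclass
from statistics import NormalDist


@dataclass
class InventoryParams:
    """Hypothetical parameters an LLM front end might elicit from a manager."""
    mean_demand: float
    std_demand: float
    unit_cost: float
    unit_price: float
    salvage_value: float


def newsvendor_order_quantity(p: InventoryParams) -> float:
    """Deterministic stand-in solver: newsvendor quantity under normal demand."""
    underage = p.unit_price - p.unit_cost     # margin lost per unit of unmet demand
    overage = p.unit_cost - p.salvage_value   # loss per unsold unit
    critical_fractile = underage / (underage + overage)
    return NormalDist(p.mean_demand, p.std_demand).inv_cdf(critical_fractile)


def recommend(params: InventoryParams) -> str:
    # In the decoupled design, an LLM (not shown) would produce `params` from
    # dialogue and phrase this result; the number itself comes from the solver.
    q = newsvendor_order_quantity(params)
    return f"Recommended order quantity: {q:.0f} units"


print(recommend(InventoryParams(100, 20, unit_cost=5, unit_price=10, salvage_value=0)))
# Recommended order quantity: 100 units
```

With equal underage and overage costs the critical fractile is 0.5, so the order equals mean demand; raising the price pushes the fractile, and the order, above the mean.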