Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Agentic AI vs AI Agents

Most companies waste money on the wrong AI technology because they confuse two fundamentally different things. They buy “agentic AI platforms” when they need a single agent, or deploy disconnected agents when they actually need a coordinated system. The confusion isn’t just semantic. It determines whether your implementation succeeds or burns through budget without delivering value.

Understanding the difference between AI agents and agentic AI changes how you architect solutions, what you spend, and what results you get. This guide explains both concepts, when to use each, and how to build them.

What is Agentic AI? by Lisa YuWhat Is Agentic AI

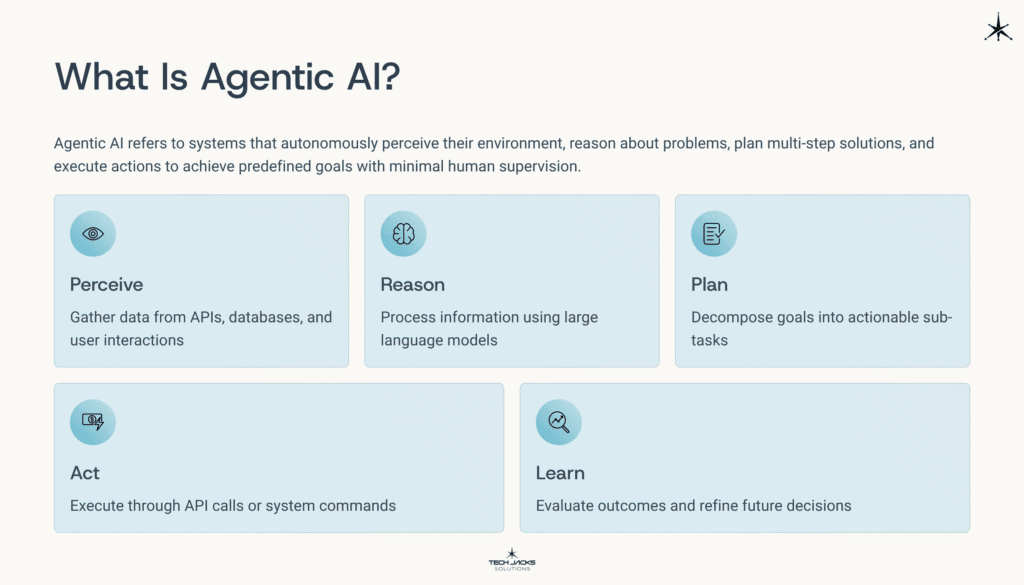

Agentic AI refers to systems that autonomously perceive their environment, reason about problems, plan multi-step solutions, and execute actions to achieve predefined goals with minimal human supervision. IBM defines agentic AI as systems that perceive, reason, plan, and execute autonomously. AWS describes it as AI operating with minimal human intervention to accomplish complex objectives. The UK Government characterizes these as systems capable of autonomous action in dynamic environments.

Think of agentic AI as an orchestra conductor, not a single musician. The conductor coordinates specialized players, manages timing, and adjusts the performance as it unfolds. No individual musician could create that result alone.

Academic research published on arXiv defines agentic AI as a paradigm shift toward orchestrated autonomy. It’s a system architecture, not just smarter software.

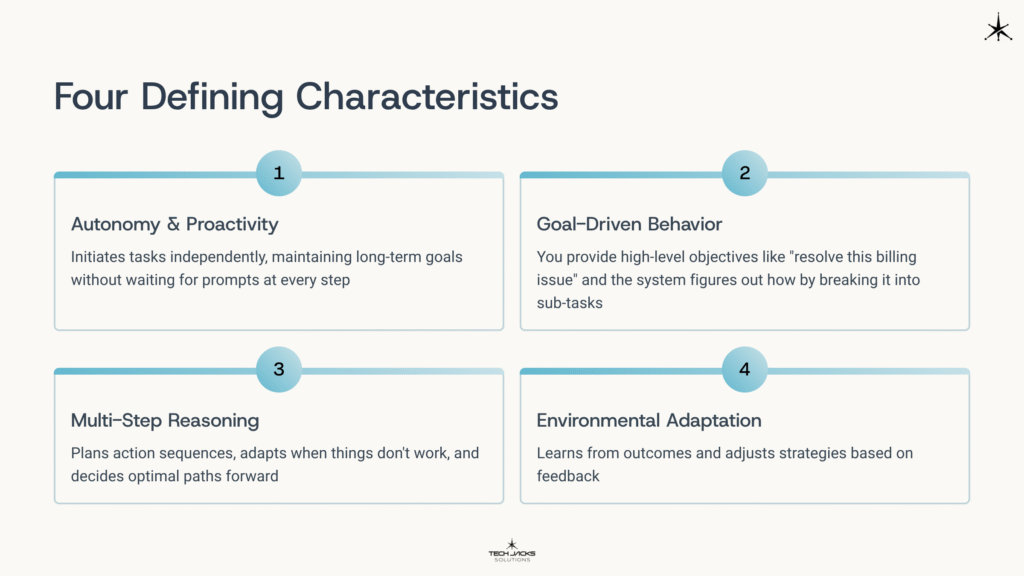

Four characteristics define these systems. Autonomy and proactivity mean they initiate tasks independently, maintaining long-term goals without waiting for prompts at every step. Goal-driven behavior means you provide high-level objectives like “resolve this billing issue” and the system figures out how by breaking it into sub-tasks. Multi-step reasoning allows planning action sequences, adapting when things don’t work, and deciding optimal paths forward. Environmental adaptation means learning from outcomes and adjusting strategies based on feedback.

For implementors, the technical foundation is the agentic loop. This cycle runs continuously: Perceive (gather data from APIs, databases, user interactions), Reason (process information using large language models), Plan (decompose goals into actionable sub-tasks), Act (execute through API calls or system commands), and Learn (evaluate outcomes and refine future decisions). This isn’t simple input-output. It’s dynamic adaptation based on results.
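To make the loop concrete, here is a minimal, framework-free Python sketch. It assumes a toy environment: `reason` is a hypothetical stand-in for an LLM call, and the billing sub-tasks and their handlers are invented for illustration. What matters is the control flow: the loop keeps cycling until the goal is met, and the learn step changes what the plan step does next.

```python
from dataclasses import dataclass, field

@dataclass
class AgentState:
    goal: str
    done: list = field(default_factory=list)      # completed sub-tasks
    failures: dict = field(default_factory=dict)  # outcome history used by learn()

def perceive(env: dict) -> list:
    # Perceive: read the current environment (stand-in for API or database reads)
    return list(env["open_tasks"])

def reason(observed: list, state: AgentState) -> list:
    # Reason: stand-in for an LLM deciding which sub-tasks still matter
    return [t for t in observed if t not in state.done]

def plan(candidates: list, state: AgentState) -> list:
    # Plan: order sub-tasks, deferring ones that failed before (sort is stable)
    return sorted(candidates, key=lambda t: state.failures.get(t, 0))

def act(task: str, env: dict) -> bool:
    # Act: run the tool for this sub-task; success removes it from the environment
    ok = env["handlers"][task]()
    if ok:
        env["open_tasks"].remove(task)
    return ok

def learn(task: str, ok: bool, state: AgentState) -> None:
    # Learn: record the outcome so the next planning pass can adapt
    if ok:
        state.done.append(task)
    else:
        state.failures[task] = state.failures.get(task, 0) + 1

def run_agentic_loop(env: dict, state: AgentState, max_steps: int = 10) -> AgentState:
    for _ in range(max_steps):  # hard cap so a stuck loop cannot run forever
        steps = plan(reason(perceive(env), state), state)
        if not steps:
            break  # goal reached: no open sub-tasks remain
        task = steps[0]
        learn(task, act(task, env), state)
    return state

# Usage: "resolve this billing issue" decomposed into hypothetical lookups
gateway_calls = {"count": 0}

def flaky_gateway() -> bool:
    gateway_calls["count"] += 1
    return gateway_calls["count"] >= 2  # fails on the first try, then succeeds

env = {
    "open_tasks": ["look_up_invoice", "query_payment_gateway", "review_account_notes"],
    "handlers": {
        "look_up_invoice": lambda: True,
        "query_payment_gateway": flaky_gateway,
        "review_account_notes": lambda: True,
    },
}
state = run_agentic_loop(env, AgentState(goal="resolve this billing issue"))
print(state.done)  # ['look_up_invoice', 'review_account_notes', 'query_payment_gateway']
```

After the gateway call fails once, the planner defers it and finishes the other lookup first, then retries; that deferral is the "adapt based on results" behavior in miniature.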

The practical meaning matters more than academic definitions. A digital tool requires you to operate it. A digital employee operates on your behalf. That’s what agentic AI means. You delegate outcomes, not just tasks.

What Is an AI Agent

An AI agent is an individual software component designed to perform a specific task autonomously. It’s a building block, not a complete system. IBM describes AI agents as specialized entities that perceive their environment, make decisions, and execute actions within a defined scope. Google Cloud describes agents as the fundamental building blocks of intelligent systems.

Four properties distinguish agents from regular programs. Autonomy means operating independently, making decisions without constant external control. Reactivity means perceiving the environment and responding to changes. Proactivity means taking initiative toward goals rather than just reacting. Social ability means interacting with other agents or humans through communication.

Think of an AI agent as a single specialist. You wouldn’t hire one specialist to run your entire business, but you need specialists for specific functions. A spam filter is a simple agent. It perceives incoming email, decides whether it’s spam, and moves messages to appropriate folders. One job, autonomous execution.

The technical architecture includes four core modules. The perception layer gathers inputs through APIs or data feeds. The processing engine (a language model, machine learning model, or rule-based logic) analyzes inputs and makes decisions. The action executor carries out chosen actions through API calls or system commands. The memory system maintains context and stores learned patterns.
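The four modules map naturally onto a small class. This sketch rebuilds the spam filter described earlier under simple assumptions: the processing engine is rule-based rather than a trained model, the keyword list is illustrative, and the memory is just a set of senders the user has flagged. The addresses are placeholders.

```python
from dataclasses import dataclass, field

@dataclass
class Email:
    sender: str
    subject: str
    folder: str = "inbox"

@dataclass
class SpamFilterAgent:
    # Memory system: patterns learned from user feedback
    blocked_senders: set = field(default_factory=set)
    spam_words: tuple = ("winner", "free money", "act now")  # illustrative, not a real list

    def perceive(self, mailbox):
        # Perception layer: pick up messages that have not been triaged yet
        return [m for m in mailbox if m.folder == "inbox"]

    def decide(self, msg):
        # Processing engine: rule-based logic standing in for an ML classifier
        subject = msg.subject.lower()
        if msg.sender in self.blocked_senders or any(w in subject for w in self.spam_words):
            return "spam"
        return "inbox"

    def act(self, msg, folder):
        # Action executor: stand-in for a mail-provider API call that moves the message
        msg.folder = folder

    def remember(self, sender):
        # Update memory when the user marks a sender as spam
        self.blocked_senders.add(sender)

    def run(self, mailbox):
        for msg in self.perceive(mailbox):
            self.act(msg, self.decide(msg))

mailbox = [
    Email("boss@corp.example", "Q3 plan"),
    Email("promo@deals.example", "You are a WINNER"),
    Email("news@list.example", "Weekly digest"),
]
agent = SpamFilterAgent()
agent.remember("news@list.example")  # learned from earlier feedback
agent.run(mailbox)
print([m.folder for m in mailbox])  # ['inbox', 'spam', 'spam']
```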

Types of AI Agents

AI Agent Types by Lisa Yu

AI agents come in several categories, each suited to different applications. Understanding these types helps you choose the right approach for your needs.

Simple reactive agents respond to immediate stimuli without maintaining state. They follow if-then rules. If condition X occurs, take action Y. These work for straightforward automation where appropriate responses are clear and consistent.
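A simple reactive agent is little more than an ordered table of condition-action rules. In this sketch the event fields, thresholds, and action names are hypothetical. The agent keeps no state, so the same event always produces the same action.

```python
from typing import Callable, List, Optional, Tuple

# Each rule reads "if condition X occurs, take action Y"
Rule = Tuple[Callable[[dict], bool], str]

RULES: List[Rule] = [
    (lambda e: e["type"] == "disk" and e["used_pct"] >= 90, "page_on_call"),
    (lambda e: e["type"] == "disk" and e["used_pct"] >= 80, "open_ticket"),
    (lambda e: e["type"] == "login_failed" and e["count"] > 5, "lock_account"),
]

def reactive_agent(event: dict) -> Optional[str]:
    # No memory: the action depends only on the current event.
    # First match wins, so rules are ordered from most to least severe.
    for condition, action in RULES:
        if condition(event):
            return action
    return None

print(reactive_agent({"type": "disk", "used_pct": 93}))      # page_on_call
print(reactive_agent({"type": "disk", "used_pct": 85}))      # open_ticket
print(reactive_agent({"type": "login_failed", "count": 2}))  # None
```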

Model-based agents maintain internal representations of their environment. They understand how the world works and predict outcomes. A customer service routing agent tracks conversation history and sentiment to decide whether to continue automated handling or escalate to a human.
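What separates a model-based agent is internal state. The sketch below is a hypothetical escalation router: a tiny word list stands in for a real sentiment model, and the thresholds are arbitrary. Because sentiment is tracked as a moving average over the conversation, the same message can be routed differently depending on what came before.

```python
import re
from dataclasses import dataclass, field

NEGATIVE_WORDS = {"angry", "terrible", "useless", "cancel", "worst"}  # illustrative lexicon

@dataclass
class RoutingAgent:
    # Internal model of the conversation so far
    history: list = field(default_factory=list)
    sentiment: float = 0.0  # below zero means increasingly negative
    unresolved_turns: int = 0

    def update_model(self, message: str, resolved: bool) -> None:
        words = re.findall(r"[a-z']+", message.lower())
        negatives = sum(w in NEGATIVE_WORDS for w in words)
        # Exponential moving average: earlier turns still influence the estimate
        self.sentiment = 0.6 * self.sentiment + 0.4 * -negatives
        self.history.append(message)
        self.unresolved_turns = 0 if resolved else self.unresolved_turns + 1

    def route(self, message: str, resolved: bool = False) -> str:
        self.update_model(message, resolved)
        if self.sentiment <= -0.5 or self.unresolved_turns >= 3:
            return "escalate_to_human"
        return "continue_automated"

agent = RoutingAgent()
print(agent.route("Where is my order?"))                # continue_automated
print(agent.route("Still nothing. This is terrible."))  # continue_automated
print(agent.route("Honestly useless."))                 # escalate_to_human
print(RoutingAgent().route("Honestly useless."))        # continue_automated
```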

Goal-based agents work toward specific objectives, evaluating actions based on how well each supports their goals. An appointment scheduling agent finds mutually convenient meeting times considering calendars, preferences, and priorities.
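A goal-based agent scores candidate actions against its objective. Here the goal is a one-hour slot every attendee can make, with a preferred hour used to choose among qualifying slots. The calendars, the 30-minute granularity, and the "closest to the preferred hour" scoring are all assumptions made for this sketch.

```python
from datetime import datetime, timedelta

def free_starts(busy, day_start, day_end, length, step=timedelta(minutes=30)):
    # Candidate start times whose slot does not overlap any busy interval
    t = day_start
    while t + length <= day_end:
        if all(t + length <= b_start or t >= b_end for b_start, b_end in busy):
            yield t
        t += step

def schedule(calendars, day_start, day_end, length, preferred_hour):
    # Goal test: the slot must be free for every attendee
    common = None
    for busy in calendars.values():
        starts = set(free_starts(busy, day_start, day_end, length))
        common = starts if common is None else common & starts
    if not common:
        return None  # the goal cannot be met on this day
    # Among goal-satisfying slots, prefer the one closest to the preferred hour
    return min(common, key=lambda t: (abs(t.hour + t.minute / 60 - preferred_hour), t))

day = datetime(2024, 5, 6)

def at(hour, minute=0):
    return day.replace(hour=hour, minute=minute)

calendars = {  # hypothetical busy intervals
    "ana": [(at(9), at(11))],
    "ben": [(at(13), at(14, 30))],
}
best = schedule(calendars, at(9), at(17), timedelta(hours=1), preferred_hour=14)
print(best.strftime("%H:%M"))  # 14:30
```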

Learning agents improve over time. They try approaches, evaluate results, and refine decision-making based on feedback. A content recommendation agent gets better as it learns which suggestions users find valuable.
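One common way to build a learning recommender is an epsilon-greedy bandit, swapped in here as an illustration since the article does not name a technique. The agent usually recommends the item with the best observed like-rate but occasionally explores, so its estimates keep improving from feedback. The items and their like-rates in the simulation are made up.

```python
import random

class RecommenderAgent:
    def __init__(self, items, epsilon=0.1, seed=None):
        self.items = list(items)
        self.epsilon = epsilon  # share of recommendations spent exploring
        self.rng = random.Random(seed)
        self.shown = {i: 0 for i in self.items}
        self.liked = {i: 0 for i in self.items}

    def estimate(self, item):
        # Observed like-rate; untried items score 1.0 so each gets tried early
        return self.liked[item] / self.shown[item] if self.shown[item] else 1.0

    def recommend(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.items)     # explore
        return max(self.items, key=self.estimate)  # exploit the best estimate

    def feedback(self, item, liked):
        # Learning step: fold the user's reaction into the estimate
        self.shown[item] += 1
        self.liked[item] += int(liked)

# Simulated users with hypothetical like-rates the agent does not know
true_rates = {"tutorial": 0.7, "news": 0.2, "meme": 0.4}
agent = RecommenderAgent(true_rates, epsilon=0.1, seed=42)
users = random.Random(7)
for _ in range(2000):
    item = agent.recommend()
    agent.feedback(item, users.random() < true_rates[item])
print(agent.shown)  # recommendation counts should shift toward "tutorial"
```

A fixed `epsilon` is the simplest choice; production recommenders often decay exploration over time or use other bandit strategies.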

LLM-powered agents leverage large language models for reasoning. These understand natural language instructions, break complex requests into steps, and generate human-like responses. They excel when tasks involve language understanding, code generation, or complex reasoning.

Technical classification includes retrieval agents using RAG (Retrieval Augmented Generation) to search knowledge bases, tool-using agents that call APIs and functions, reasoning agents employing chain-of-thought logic, and multi-modal agents handling text, vision, and audio.

Examples span the complexity spectrum. A simple agent monitors server logs and alerts when error rates spike. A sophisticated agent uses language models to understand customer questions, search knowledge bases, and generate responses. An advanced agent analyzes code repositories, identifies vulnerabilities, suggests fixes, and submits pull requests for review.

Research published in an MDPI journal reviews agent architectures and evaluation approaches, providing technical context for how modern agents are built and assessed.

The crucial point is scope. Each agent operates within boundaries. It doesn’t coordinate with other systems unless explicitly programmed to. It doesn’t manage multi-step workflows requiring different capabilities. It does its specific job autonomously. When multiple agents work together, they form multi-agent systems that require orchestration.

AI Agents vs Agentic AI: Understanding the Difference

Here’s where confusion causes real problems. People use these terms interchangeably, but they describe fundamentally different things.

| Aspect | AI Agent | Agentic AI |
| --- | --- | --- |
| Scope | Single specialized task | End-to-end process orchestration |
| Components | One autonomous unit | Multiple coordinated agents |
| Planning | Task execution | Strategic goal decomposition |
| Coordination | None required | Multi-agent orchestration essential |
| Autonomy | Function-level | System-level |
| Complexity | Narrow capability | Complex workflow management |

For beginners, think of it this way. An AI agent is one violin player who knows their part perfectly. Agentic AI is the entire orchestra with a conductor coordinating musicians, managing timing, ensuring everything works together to create something no individual could achieve alone.

For implementors, the technical differences run deeper. Individual agents operate independently with their own perception-decision-action loop. Agentic AI requires orchestration architecture. Microsoft’s Azure documentation details patterns: sequential execution where agents work in pipelines, parallel processing where multiple agents handle different aspects simultaneously, hierarchical structures with supervisor agents coordinating workers, and collaborative patterns where agents negotiate and share information.
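The first two patterns are easy to see in plain Python, independent of any framework. In this sketch each "agent" is a function returning a string, a hypothetical stand-in for an LLM-backed agent. The sequential helper chains agents into a pipeline; the parallel helper fans one input out to independent agents using a thread pool.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical agents: each takes the previous result and returns its own
def research_agent(topic):
    return f"facts about {topic}"

def analysis_agent(facts):
    return f"analysis of {facts}"

def report_agent(analysis):
    return f"report on {analysis}"

def run_sequential(pipeline, payload):
    # Sequential pattern: each agent consumes the previous agent's output
    for agent in pipeline:
        payload = agent(payload)
    return payload

def run_parallel(agents, payload):
    # Parallel pattern: independent agents work on the same input at the same time
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        futures = {name: pool.submit(agent, payload) for name, agent in agents.items()}
        return {name: future.result() for name, future in futures.items()}

print(run_sequential([research_agent, analysis_agent, report_agent], "Acme Corp"))
# report on analysis of facts about Acme Corp
print(run_parallel({"legal": lambda c: f"legal review of {c}",
                    "finance": lambda c: f"financial review of {c}"}, "Acme Corp"))
# {'legal': 'legal review of Acme Corp', 'finance': 'financial review of Acme Corp'}
```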

State management becomes crucial. Individual agents maintain their own context. Agentic systems need shared memory so agents coordinate. When a research agent finds information, the analysis agent needs access. When analysis identifies concerns, the communication agent needs that context.
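A shared store that every agent can read and write (often called a blackboard) is one simple way to provide this. The sketch assumes in-process agents and a lock-protected dictionary; a distributed system would typically use a database or cache instead. The agents are hypothetical and the findings are made-up sample data.

```python
import threading

class SharedMemory:
    """Blackboard-style store that every agent in the system can read and write."""

    def __init__(self):
        self._data = {}
        self._lock = threading.Lock()  # agents may run concurrently

    def write(self, agent, key, value):
        with self._lock:
            # Keep the author alongside the value for audit trails
            self._data[key] = {"value": value, "author": agent}

    def read(self, key, default=None):
        with self._lock:
            entry = self._data.get(key)
        return entry["value"] if entry else default

# Hypothetical agents that coordinate only through shared memory
def research(mem):
    mem.write("research", "findings", ["revenue flat year over year", "new lawsuit filed"])

def analysis(mem):
    concerns = [f for f in mem.read("findings", []) if "lawsuit" in f]
    mem.write("analysis", "concerns", concerns)

def communication(mem):
    concerns = mem.read("concerns", [])
    return "Flagged for stakeholders: " + (", ".join(concerns) or "none")

mem = SharedMemory()
research(mem)
analysis(mem)
print(communication(mem))  # Flagged for stakeholders: new lawsuit filed
```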

This matters because vendors routinely engage in “agent washing,” marketing simple tools as sophisticated agentic systems. A chatbot following a decision tree isn’t agentic AI. A single-purpose automation tool isn’t an agentic system just because it uses an LLM.

Business implications are substantial. Building a single agent might take weeks with a small team; implementing an agentic system could take months and significantly more resources. Running the first might cost thousands of dollars per month, while the second could run to tens or hundreds of thousands. Capabilities and potential value differ accordingly.

Governance requirements diverge too. Individual agents can be managed like standard software with testing, access controls, and monitoring. Agentic systems require continuous oversight, dynamic risk assessment, and human checkpoints for critical decisions. OpenAI’s governance practices outline additional controls needed when autonomous systems make consequential decisions.

The Model Context Protocol (MCP) provides a standardized way for agents to connect to tools and data sources, which helps agents in an agentic system share context and coordinate actions securely.

Agentic AI vs AI Agents by Lisa Yu

How Do AI Agents Work

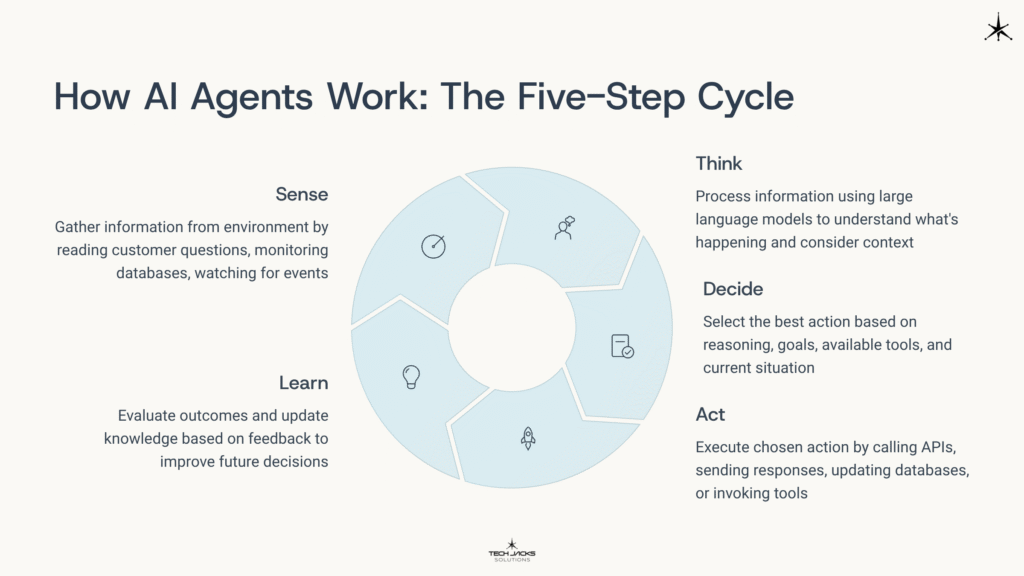

Let’s break down the operational cycle. For beginners, think of five connected steps that repeat continuously.

Sense. The agent gathers information from its environment by reading customer questions, monitoring databases, watching for events, or pulling data from APIs.

Think. The agent processes information to understand what’s happening. Modern agents typically use large language models for reasoning. The LLM interprets input, considers relevant context from memory, and evaluates possible responses.

Decide. Based on reasoning, the agent selects the best action. This decision considers the agent’s goals, available tools, and current situation.

Act. The agent executes its chosen action by calling APIs to retrieve data, sending responses to users, updating databases, or invoking external tools.

Learn. The agent evaluates outcomes. Did the action achieve the intended result? It updates knowledge based on feedback, improving future decisions.

For implementors, foundation models serve as reasoning engines. IBM’s work on agentic reasoning details how language models provide natural language understanding, complex reasoning, and structured output generation. Function calling allows models to invoke specific tools based on reasoning about what action is needed.

Memory architectures come in multiple forms. Short-term memory maintains conversation context through the prompt itself. Long-term memory uses vector databases for semantic search (RAG). The agent searches its knowledge base for relevant information, then incorporates findings into reasoning. Episodic memory tracks past interactions, allowing reference to previous decisions.
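The three memory types can live side by side in one object. In this minimal sketch, short-term memory is a bounded buffer of recent turns, long-term memory is a document list searched by word overlap (a stand-in for embeddings and a vector database), and episodic memory is a log of past decisions. The class name, window size, and sample text are hypothetical.

```python
import re
from collections import deque

def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class AgentMemory:
    def __init__(self, window=4):
        self.short_term = deque(maxlen=window)  # recent turns that still fit in the prompt
        self.long_term = []                     # documents available for retrieval
        self.episodes = []                      # past decisions and their outcomes

    def add_turn(self, role, text):
        self.short_term.append(f"{role}: {text}")  # the oldest turn drops out automatically

    def add_document(self, text):
        self.long_term.append(text)

    def search(self, query, k=1):
        # Stand-in for vector search: Jaccard word overlap instead of embedding similarity
        q = tokens(query)

        def score(doc):
            d = tokens(doc)
            union = q | d
            return len(q & d) / len(union) if union else 0.0

        return sorted(self.long_term, key=score, reverse=True)[:k]

    def record_episode(self, decision, outcome):
        self.episodes.append({"decision": decision, "outcome": outcome})

    def build_prompt(self, query):
        # Combine retrieved knowledge, past decisions, and recent conversation
        context = "\n".join(self.search(query))
        past = "; ".join(f"{e['decision']} -> {e['outcome']}" for e in self.episodes[-3:])
        history = "\n".join(self.short_term)
        return (f"Context:\n{context}\n\nPast decisions: {past or 'none'}\n\n"
                f"Conversation:\n{history}\nuser: {query}")

mem = AgentMemory(window=2)
mem.add_document("Refunds are processed within 5 business days.")  # made-up policy text
mem.add_document("Standard shipping takes 3 to 7 days.")
for turn in ["hi", "I returned a jacket", "it was too small"]:
    mem.add_turn("user", turn)
mem.record_episode("offered exchange", "customer declined")
print(mem.build_prompt("how long do refunds take"))
```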

Tool integration follows specific patterns. Function schemas describe available tools, parameters, and expected outputs. When the agent decides to use a tool, it generates properly formatted API calls. Error handling and retry logic ensure robustness when tools fail.
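The sketch below shows those three pieces in plain Python: a tool schema, validation of the model's proposed call against it, and retry with exponential backoff for transient failures. The schema format is simplified (Python types rather than JSON Schema), and `get_order_status` is a hypothetical tool.

```python
import time

# Function schema: what the tool does and what arguments it accepts.
# Real APIs typically use JSON Schema; Python types keep this sketch short.
TOOLS = {
    "get_order_status": {
        "description": "Look up the shipping status of an order",
        "parameters": {"order_id": str},
        "required": ["order_id"],
    }
}

class ToolError(Exception):
    """Raised when a model-generated tool call does not match its schema."""

def validate_call(name, args):
    if name not in TOOLS:
        raise ToolError(f"unknown tool: {name}")
    spec = TOOLS[name]
    missing = [p for p in spec["required"] if p not in args]
    if missing:
        raise ToolError(f"missing parameters: {missing}")
    for param, value in args.items():
        expected = spec["parameters"].get(param)
        if expected is None or not isinstance(value, expected):
            raise ToolError(f"unexpected or mistyped parameter: {param}")

def call_with_retry(fn, args, attempts=3, base_delay=0.05):
    # Retry transient failures with exponential backoff; give up after the last attempt
    for attempt in range(attempts):
        try:
            return fn(**args)
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)

def execute(tool_call, registry):
    # tool_call is the structured call the model produced, e.g. parsed from JSON
    name, args = tool_call["name"], tool_call["arguments"]
    validate_call(name, args)
    return call_with_retry(registry[name], args)

# Usage with a hypothetical API that times out twice before answering
calls = {"n": 0}

def get_order_status(order_id):
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("upstream timeout")
    return {"order_id": order_id, "status": "in transit"}

result = execute({"name": "get_order_status", "arguments": {"order_id": "A123"}},
                 {"get_order_status": get_order_status})
print(result, calls["n"])  # {'order_id': 'A123', 'status': 'in transit'} 3
```

Validating before calling means a malformed model output fails fast with a clear message that can be fed back to the model, instead of surfacing as an obscure error from the downstream API.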

Consider a customer service agent. A customer asks “Where’s my package?” The agent perceives the question through chat. It processes the query using an LLM to understand the customer wants tracking information. It decides to query the order database. It executes by calling an API with the customer’s account ID. It receives tracking information. It learns this question type typically requires estimated delivery dates, so it retrieves that proactively. It formats a response: “Your package is in Phoenix and should arrive Thursday.”
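Here is the same walkthrough as a minimal sketch. A keyword check stands in for the LLM's intent classification, `ORDERS` stands in for the order database API, and the "learning" is a single rule: if customers follow up asking when the package will arrive, fetch the delivery estimate up front next time. All names and data are hypothetical.

```python
# Hypothetical stand-in for the order database / tracking API
ORDERS = {"acct-42": {"location": "Phoenix", "eta": "Thursday"}}

def classify_intent(question):
    # Stand-in for LLM intent understanding
    q = question.lower()
    return "track_order" if any(w in q for w in ("package", "order", "tracking")) else "other"

class SupportAgent:
    def __init__(self):
        self.proactive_fields = {}  # learned: extra fields an intent usually needs

    def handle(self, account_id, question):
        intent = classify_intent(question)  # process the perceived question
        if intent != "track_order":         # decide
            return "Let me connect you with a teammate."
        record = ORDERS[account_id]         # act: query order data by account ID
        reply = f"Your package is in {record['location']}"
        if "eta" in self.proactive_fields.get(intent, set()):
            reply += f" and should arrive {record['eta']}"
        return reply + "."

    def learn(self, intent, follow_up):
        # If customers follow up about arrival, fetch the ETA proactively next time
        if "arrive" in follow_up.lower():
            self.proactive_fields.setdefault(intent, set()).add("eta")

agent = SupportAgent()
print(agent.handle("acct-42", "Where's my package?"))
# Your package is in Phoenix.
agent.learn("track_order", "When will it arrive?")
print(agent.handle("acct-42", "Where's my package?"))
# Your package is in Phoenix and should arrive Thursday.
```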

The technical stack includes input layers (APIs, sensors, interfaces), intelligence layers (LLMs, ML models, rule engines), and output layers (actions, responses, system integrations). Prompts shape agent behavior fundamentally. System prompts define purpose, personality, constraints, and available tools. Guardrails ensure safe operation through rate limiting, input validation, output filtering, timeouts, and human approval workflows for consequential actions.
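Three of those guardrails fit in a few lines each. This sketch assumes a character limit for input validation, a regular expression that masks card-like numbers as the output filter, and an `approve` callback as the human approval step. The action names and limits are hypothetical; a production system would use proper PII detection and a real approval queue.

```python
import re

MAX_INPUT_CHARS = 2000                             # hypothetical limit
CONSEQUENTIAL = {"issue_refund", "delete_record"}  # actions that need human sign-off
CARD_NUMBER = re.compile(r"\b\d{4}[- ]?\d{4}[- ]?\d{4}[- ]?\d{4}\b")

def validate_input(text):
    # Input validation: reject empty or oversized requests before they reach the model
    if not text.strip() or len(text) > MAX_INPUT_CHARS:
        raise ValueError("input rejected by guardrail")
    return text

def filter_output(text):
    # Output filtering: mask card-like numbers before the response reaches the user
    return CARD_NUMBER.sub("[REDACTED]", text)

def guarded_action(action, args, execute, approve):
    # Human approval: consequential actions run only if approve() returns True
    if action in CONSEQUENTIAL and not approve(action, args):
        return {"status": "blocked", "action": action}
    return {"status": "done", "result": execute(action, args)}

def execute(action, args):  # stand-in for the real tool call
    return f"{action} ok"

def deny(action, args):  # stand-in for a reviewer who declines
    return False

print(filter_output("Card on file: 4111 1111 1111 1111"))  # Card on file: [REDACTED]
print(guarded_action("issue_refund", {"amount": 500}, execute, deny))
# {'status': 'blocked', 'action': 'issue_refund'}
print(guarded_action("check_status", {}, execute, deny))
# {'status': 'done', 'result': 'check_status ok'}
```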

How to Build an AI Agent

Building an AI agent requires a systematic approach that works whether you’re coding from scratch or configuring existing platforms. The key is understanding when to build with code versus when to create through configuration.

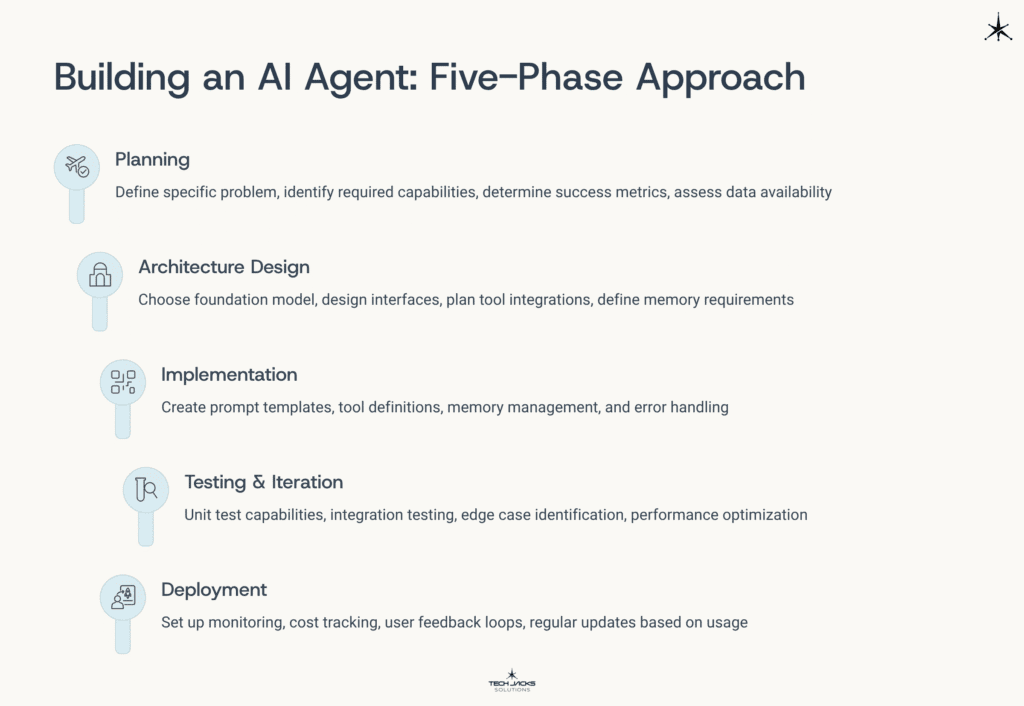

Building with Code: The Five-Phase Approach

Phase 1 is planning. Define the specific problem clearly. Don’t say “improve customer service.” Say “automatically respond to order status inquiries using our order management API.” Identify required capabilities. Does it retrieve information? Analyze data? Generate content? Determine success metrics like response accuracy, time saved, or cost reduction. Assess data availability and quality.

Phase 2 covers architecture design. Choose your foundation model based on requirements. GPT-4 excels at complex reasoning. Claude demonstrates strong instruction-following. Open-source models like Llama offer cost advantages for simpler tasks. Design input and output interfaces for how requests reach the agent and results get delivered. Plan tool and API integrations for external system interactions. Define memory requirements for context retention.

Phase 3 is implementation. You’ll need prompt templates defining agent behavior, tool definitions describing available APIs, memory management for context retention, and error handling for graceful failures.
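A prompt template keeps the agent's purpose, tone, tools, and constraints in one reviewable place. This sketch uses Python's standard `string.Template`; the field names, the `OrderBot` configuration, and the wording are assumptions, not a required format.

```python
from string import Template

SYSTEM_PROMPT = Template(
    "You are $name, an assistant that $purpose.\n"
    "Tone: $tone.\n"
    "Only use these tools: $tools.\n"
    "Never $forbidden. If a request is out of scope, say so and offer a handoff to a human."
)

def render_system_prompt(config):
    # substitute() raises KeyError on any missing field, so config gaps fail loudly;
    # the keyword argument overrides the raw tool list with a readable string
    return SYSTEM_PROMPT.substitute(config, tools=", ".join(sorted(config["tools"])))

config = {
    "name": "OrderBot",
    "purpose": "answers order status questions",
    "tone": "friendly and concise",
    "tools": ["get_order_status"],
    "forbidden": "promise refunds",
}
print(render_system_prompt(config))
```

Keeping the template in code (or a versioned file) means prompt changes go through the same review and rollback process as any other change.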

Phase 4 involves testing and iteration. Unit test individual capabilities separately. Can the agent parse user intents correctly? Does it call APIs with proper parameters? Handle errors gracefully? Integration testing verifies the agent works with actual external systems. Edge case identification finds scenarios that break the agent. What happens if APIs are slow or unavailable? If user input is ambiguous? Performance optimization reduces latency and costs.
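Unit tests for an agent look much like any other tests once external services are mocked. In this sketch `parse_intent` and `answer` are hypothetical agent functions, and `unittest.mock` stands in for the order API, so the tests can check exact call parameters and the timeout path without a network. Saved as `test_agent.py`, it runs with `python -m unittest test_agent`.

```python
import unittest
from unittest import mock

def parse_intent(message):
    # Hypothetical intent parser under test
    return "order_status" if "order" in message.lower() else "unknown"

def answer(message, account_id, api):
    # Hypothetical agent step: parse intent, call the order API, degrade gracefully
    if parse_intent(message) != "order_status":
        return "Sorry, I can only help with orders."
    try:
        status = api.get_status(account_id=account_id, timeout=5)
    except TimeoutError:
        return "Our order system is slow right now; please try again shortly."
    return f"Your order is {status}."

class AgentTests(unittest.TestCase):
    def test_parses_intent(self):
        self.assertEqual(parse_intent("Where is my ORDER?"), "order_status")
        self.assertEqual(parse_intent("Tell me a joke"), "unknown")

    def test_calls_api_with_expected_parameters(self):
        api = mock.Mock()
        api.get_status.return_value = "shipped"
        self.assertEqual(answer("Order status?", "acct-42", api), "Your order is shipped.")
        api.get_status.assert_called_once_with(account_id="acct-42", timeout=5)

    def test_degrades_gracefully_when_api_times_out(self):
        api = mock.Mock()
        api.get_status.side_effect = TimeoutError
        self.assertIn("try again", answer("Where is my order?", "acct-42", api))

    def test_ignores_out_of_scope_requests(self):
        api = mock.Mock()
        answer("Tell me a joke", "acct-42", api)
        api.get_status.assert_not_called()
```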

Phase 5 is deployment. Set up monitoring and logging to track agent behavior. Cost tracking matters because LLM API calls get expensive. Monitor token usage and set budgets. User feedback loops allow continuous improvement. How do users rate responses? Where does it struggle? Regular updates to prompts, tools, and behavior based on real-world usage keep the agent effective.
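A small budget tracker makes cost tracking concrete. This sketch assumes per-1,000-token pricing, and the prices shown are placeholders rather than any provider's real rates. `check` refuses a call whose worst-case cost would exceed the remaining budget, and `record` logs the actual usage the provider reports afterward.

```python
class TokenBudget:
    def __init__(self, limit_usd, usd_per_1k_in, usd_per_1k_out):
        self.limit = limit_usd
        self.price_in = usd_per_1k_in
        self.price_out = usd_per_1k_out
        self.spent = 0.0
        self.log = []  # per-call records for cost reporting

    def cost(self, tokens_in, tokens_out):
        return tokens_in / 1000 * self.price_in + tokens_out / 1000 * self.price_out

    def check(self, tokens_in, max_tokens_out):
        # Before the call: assume the model uses its full output allowance
        worst_case = self.cost(tokens_in, max_tokens_out)
        if self.spent + worst_case > self.limit:
            raise RuntimeError(
                f"budget exceeded: worst case ${worst_case:.4f}, "
                f"${self.limit - self.spent:.4f} remaining"
            )

    def record(self, call_id, tokens_in, tokens_out):
        # After the call: log the usage the API actually reported
        actual = self.cost(tokens_in, tokens_out)
        self.spent += actual
        self.log.append((call_id, tokens_in, tokens_out, actual))
        return actual

budget = TokenBudget(limit_usd=1.00, usd_per_1k_in=0.01, usd_per_1k_out=0.03)  # placeholder prices
budget.check(tokens_in=1500, max_tokens_out=1000)    # worst case $0.045 fits
budget.record("q1", tokens_in=1500, tokens_out=500)  # actual $0.03
print(round(budget.spent, 4))  # 0.03
```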

Framework Comparison for Developers

IBM analyzes top frameworks for enterprise use. Here’s what implementors need to know.

LangChain works best for rapid prototyping with extensive tooling. Strengths include a massive ecosystem of integrations and strong community support. Use it for custom business process automation.

AutoGen from Microsoft excels at multi-agent collaboration and enterprise integration. It’s designed for role-based systems where agents have specialized responsibilities. Built-in conversation patterns support coordination.

CrewAI targets beginners and team-based systems. It’s intuitive with role definitions making sense to non-technical users. Minimal code required. Perfect for marketing teams or content workflows.

LangGraph specializes in complex state management and cyclical workflows. Graph-based architecture allows visual design. Excellent for conditional logic, loops, or complex state transitions.

Key development considerations apply regardless of framework. Error handling must account for LLM unpredictability. Models sometimes refuse requests, misunderstand instructions, or generate invalid tool calls. Your code needs graceful degradation. Rate limiting prevents runaway API usage. Security requires careful API key management and data sanitization. Observability through logging and tracing is essential. Scalability considerations matter for high request volumes.
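For rate limiting, a token bucket is a common choice (swapped in here; the article does not prescribe an algorithm). It allows short bursts up to a capacity while capping the sustained call rate. The clock is injectable so the example runs deterministically, and the rate and capacity values are arbitrary.

```python
import time

class TokenBucket:
    """Allows bursts of up to `capacity` calls and refills at `rate` calls per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock  # injectable so tests can control time
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should wait, queue, or degrade gracefully

fake_time = [0.0]
bucket = TokenBucket(rate=2, capacity=3, clock=lambda: fake_time[0])
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
fake_time[0] += 0.5                        # 0.5 s at 2 calls/s refills one token
print(bucket.allow())                      # True
```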

Creating Through Configuration

For those who prefer configuration over coding, several platforms enable agent creation without programming.

OpenAI’s custom GPTs let you configure ChatGPT with specific instructions, knowledge files, and capabilities. Anthropic’s Claude and Google’s Gemini offer similar agent builders. Microsoft Copilot Studio provides enterprise-focused configuration tools.

The creation workflow is straightforward. Select the platform fitting your needs. Define the agent’s purpose clearly and describe its personality (formal, casual, technical, friendly). Upload knowledge files or connect data sources. Configure available tools and APIs. Set behavioral guardrails like refusing certain requests or requiring approval for specific actions. Test extensively with realistic scenarios. Deploy and monitor.

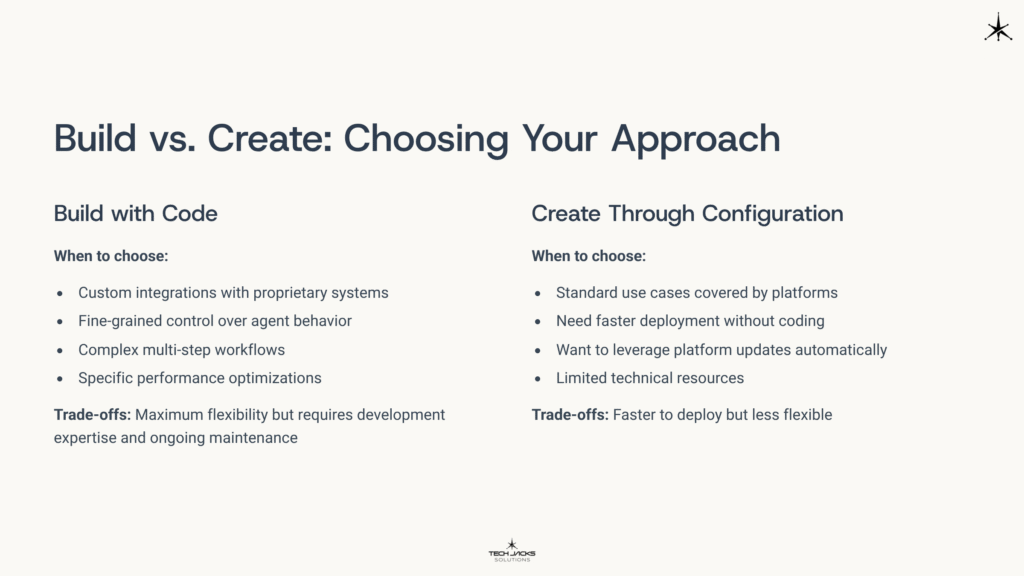

Build vs. Create: Choosing Your Approach

Build with code when you need custom integrations with proprietary systems, fine-grained control over agent behavior, complex multi-step workflows, or specific performance optimizations. This approach offers maximum flexibility but requires development expertise and ongoing maintenance.

Create through configuration when you have standard use cases covered by existing platforms, need faster deployment without coding, want to leverage platform updates automatically, or have limited technical resources. This approach is faster to deploy but less flexible.

For beginners, start with no-code platforms like Zapier AI Actions or Make.com. These connect AI models to thousands of apps through visual interfaces. Framework templates provide another middle ground: pre-built code you can adapt. Find a template similar to your use case, modify prompts, swap in your APIs, adjust logic.

First Project Ideas

An email summarization agent takes email content and extracts key points. Tools needed: an LLM API and email system access. Build time: roughly 2-4 hours for a basic version if you are already familiar with APIs and LLMs; potentially longer otherwise.

A document Q&A agent lets you ask questions about PDF files. It uses RAG: chunk PDFs into sections, embed the chunks in a vector database, retrieve the sections relevant to each question, and generate cited answers. Tools needed: a vector database (Pinecone offers a free tier), an LLM, and a PDF parser. Build time: 4-8 hours for developers with RAG experience. This project teaches retrieval augmented generation concepts.
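The retrieval half of that project can be sketched without external services. This version assumes text has already been extracted from the PDFs, splits it into overlapping word windows, and ranks chunks by cosine similarity over word counts. The word counts stand in for a real embedding model, the in-memory list stands in for the vector database, and the sample documents are invented. The returned context is what you would pass to the LLM along with instructions to cite the `[source#chunk]` tags.

```python
import math
import re
from collections import Counter

def chunk(text, size=40, overlap=10):
    # Overlapping word windows (overlap < size) so facts spanning a boundary survive
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]

def embed(text):
    # Stand-in for an embedding model: bag-of-words term counts
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def build_index(docs):
    # docs: {source_name: extracted_text}; each row is what a vector DB would store
    return [(src, i, c, embed(c)) for src, text in docs.items() for i, c in enumerate(chunk(text))]

def cited_context(index, question, k=2):
    q = embed(question)
    top = sorted(index, key=lambda row: cosine(q, row[3]), reverse=True)[:k]
    return "\n".join(f"[{src}#{i}] {c}" for src, i, c, _ in top)

docs = {  # invented sample text
    "handbook.pdf": "Employees accrue 1.5 vacation days per month. "
                    "Unused days carry over up to a maximum of 10.",
    "security.pdf": "Laptops must use full disk encryption. "
                    "Report lost devices to IT within 24 hours.",
}
index = build_index(docs)
print(cited_context(index, "How many vacation days carry over?", k=1))
```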

Common mistakes to avoid: over-complicating initial scope kills projects, insufficient error handling makes agents fragile, not testing edge cases means breaking on real-world messiness, ignoring API costs leads to bill shock, and skipping monitoring prevents debugging.

Community resources accelerate learning. GitHub repositories contain thousands of example agents. Discord communities offer real-time troubleshooting. YouTube tutorials provide visual walkthroughs. Open-source tools like Hugging Face models (many free), free LLM APIs with usage limits, and local deployment through Ollama or LM Studio make experimentation accessible.

Building Agentic AI Systems: Multi-Agent Orchestration

Scaling from individual agents to coordinated agentic systems requires different thinking. You’re architecting how agents work together, not just building more agents.

Start with process mapping. Document your end-to-end workflow before designing any agents. What are all the steps? Which require human judgment? Which could be automated? Where do decisions depend on information from previous steps?

Agent specialization follows from mapping. Identify discrete capabilities needed (research, analysis, communication, execution). Define specific roles and responsibilities for each agent. Design communication protocols for information sharing.

Microsoft’s Azure orchestration patterns detail implementation approaches. NVIDIA’s analysis of agentic AI provides additional architectural context. Sequential patterns work like waterfalls where Agent A completes work, then Agent B takes over. Parallel patterns allow simultaneous execution when tasks are independent. Hierarchical patterns use supervisor agents coordinating workers. Collaborative patterns let agents negotiate peer-to-peer.

State management across agents is crucial. Shared memory lets agents access information from others’ work. Context passing ensures agents have needed background. Message queues handle communication in distributed systems.
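Message queues and context passing can be shown together using Python's thread-safe `queue.Queue` as an in-process stand-in for a real message broker. In this sketch each hypothetical agent reads from its own inbox, appends its note to the message's context, and forwards the message; a `None` sentinel shuts the pipeline down.

```python
import queue
import threading

def agent_worker(handle, inbox, outbox):
    # Each agent reads from its own queue and forwards enriched messages downstream
    while True:
        msg = inbox.get()
        if msg is None:  # sentinel: pass the shutdown signal on and stop
            outbox.put(None)
            return
        note = handle(msg)
        # Context passing: append to what upstream agents found, never overwrite it
        outbox.put({**msg, "context": msg["context"] + [note]})

def research(msg):  # hypothetical agent
    return f"research: filings collected for {msg['company']}"

def analysis(msg):  # hypothetical agent
    return f"analysis: reviewed {len(msg['context'])} upstream note(s)"

research_in, analysis_in, done = queue.Queue(), queue.Queue(), queue.Queue()
workers = [
    threading.Thread(target=agent_worker, args=(research, research_in, analysis_in)),
    threading.Thread(target=agent_worker, args=(analysis, analysis_in, done)),
]
for w in workers:
    w.start()
research_in.put({"company": "Acme Corp", "context": []})
research_in.put(None)
for w in workers:
    w.join()

result = done.get()
print(result["context"])
# ['research: filings collected for Acme Corp', 'analysis: reviewed 1 upstream note(s)']
```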

Consider a financial due diligence system. The research agent scrapes websites, retrieves SEC filings, and searches news. The analysis agent runs financial models, performs risk assessment, and identifies concerning patterns. The synthesis agent creates comprehensive reports and executive summaries. The communication agent sends stakeholder updates and answers questions. The orchestrator manages workflow, ensures quality gates, handles errors, and maintains timelines.
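A heavily simplified, hypothetical version of that orchestrator is sketched below. The four agents are stubs returning canned data and the quality gates are simple checks; the sketch is meant to show the supervisor's job: run agents in order, validate each output before passing it on, retry on failure, and escalate to a human when retries run out.

```python
class EscalationRequired(Exception):
    """Raised when a stage keeps failing and a human must step in."""

# Hypothetical stub agents returning canned data
def research_agent(company):
    return {"filings": [f"{company} annual report"], "news": [f"{company} opens new plant"]}

def analysis_agent(research):
    risks = [item for item in research["news"] if "lawsuit" in item]
    return {"risk_level": "high" if risks else "low", "risks": risks}

def synthesis_agent(analysis):
    return f"Risk level: {analysis['risk_level']} ({len(analysis['risks'])} flagged items)"

def communication_agent(report):
    return f"Sent to stakeholders: {report}"

# Quality gates: each stage's output must pass before the next agent sees it
GATES = {
    "research": lambda out: bool(out["filings"]),
    "analysis": lambda out: out["risk_level"] in {"low", "medium", "high"},
    "synthesis": lambda out: out.startswith("Risk level:"),
}

def orchestrate(company, max_retries=1):
    stages = [("research", research_agent), ("analysis", analysis_agent),
              ("synthesis", synthesis_agent), ("communication", communication_agent)]
    payload, trail = company, []
    for name, agent in stages:
        for attempt in range(1, max_retries + 2):
            try:
                output = agent(payload)
            except Exception as exc:  # error handling: log and retry
                trail.append(f"{name}: error {exc!r} (attempt {attempt})")
                continue
            if GATES.get(name, lambda _: True)(output):
                break
            trail.append(f"{name}: gate failed (attempt {attempt})")
        else:
            raise EscalationRequired(f"{name} failed after retries: {trail}")
        trail.append(f"{name}: ok")
        payload = output
    return payload, trail

report, trail = orchestrate("Acme Corp")
print(report)  # Sent to stakeholders: Risk level: low (0 flagged items)
print(trail)   # ['research: ok', 'analysis: ok', 'synthesis: ok', 'communication: ok']
```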

Enterprise considerations extend beyond technical architecture. Integration with existing systems requires APIs and middleware. Security involves multi-layer authentication, data encryption, and audit logging. Compliance demands audit trails and decision transparency. Scalability requires load balancing and resource allocation. Cost optimization comes from architectural choices like agent pooling and caching.

Deloitte’s research on autonomous agents discusses enterprise adoption challenges and best practices. The MCP risk matrix provides comprehensive guidance on security for agentic system communication.

How to Use AI Agents

Effective usage matters as much as correct building. For beginners, identify suitable tasks. Look for work that’s repetitive, follows patterns, requires information gathering, involves data entry, needs content summarization, or generates standard reports.

Give clear instructions with specific, measurable goals. Don’t say “help with marketing.” Say “draft three social media posts highlighting our new feature, each under 280 characters, targeting small business owners.” Iterate by starting simple then adding complexity. Implement human-in-the-loop review for critical decisions. Provide feedback that helps agents learn.

For implementors, deployment strategies require careful planning. Pilot programs with limited scope test in low-risk environments. Gradual rollout with monitoring prevents surprises at scale. A/B testing against existing processes proves value objectively. Performance benchmarking establishes baselines.

Integration best practices ensure smooth operation. API-first architecture means designing clean interfaces from the start. Standardized data formats prevent integration headaches. Versioning and rollback capabilities let you recover from bad updates. Rate limiting protects systems from overload.

Operational considerations include user training and change management, documentation and knowledge bases, incident response procedures, and continuous monitoring dashboards.

AI Agents in Practice: Industry Examples

Real-world applications demonstrate what works. Customer service represents the most mature single-agent application. IBM documents customer service agents handling intent classification, knowledge base retrieval, ticket resolution, and routing. Measured outcomes include response time reduction from minutes to seconds and notable improvements in customer satisfaction scores.

DevOps automation showcases agentic systems coordinating specialized agents. Automated testing pipelines run comprehensive suites whenever code changes. Deployment orchestration manages complex multi-service deployments. Incident detection and remediation identifies problems early. Performance monitoring continuously tunes systems.

Deloitte’s analysis of banking applications describes risk assessment workflows gathering data from multiple sources, analyzing according to sophisticated models, and producing comprehensive reports. Fraud detection coordinates agents monitoring different signal types. Compliance monitoring checks for regulatory violations. Portfolio management agents analyze market conditions.

Healthcare applications require extra caution. Agents assist with diagnostic support by synthesizing information, not making diagnoses themselves. Administrative task automation reduces paperwork. Research literature review helps doctors stay current. Clinical trial matching connects patients with appropriate studies.

The beginner takeaway: start with single-agent use cases. The implementor takeaway: scale to agentic systems when coordination genuinely adds value.

Should You Build an AI Agent or Agentic AI System?

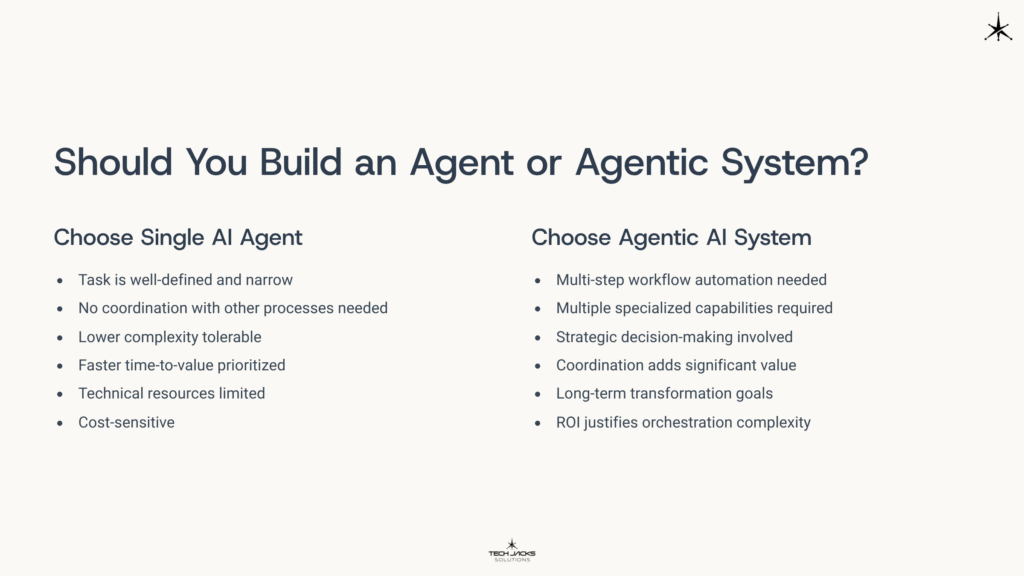

This decision determines your project’s scope, cost, timeline, and likelihood of success.

Choose a single AI agent when your task is well-defined and narrow, no coordination with other processes is needed, lower complexity is tolerable, faster time-to-value is prioritized, technical resources are limited, and you’re cost-sensitive.

Choose an agentic AI system when multi-step workflow automation is needed, multiple specialized capabilities are required, strategic decision-making is involved, coordination adds significant value, you have long-term transformation goals, and ROI justifies orchestration complexity.

Assessment questions help clarify the decision. Can one specialized component solve this? Do sub-tasks need to share context and coordinate? Is dynamic planning required? What level of autonomy is acceptable? What are the failure implications?

Governance considerations differ significantly. Single agents need standard software testing, access controls, and monitoring. Agentic systems require continuous oversight, dynamic risk assessment, and human checkpoints for critical decisions. The NIST AI Risk Management Framework provides principles for both approaches. The MCP governance guidelines offer specific guidance for agentic systems.

Cost-benefit analysis weighs development complexity against operational efficiency, initial investment versus long-term value, and risk exposure against capability gains.

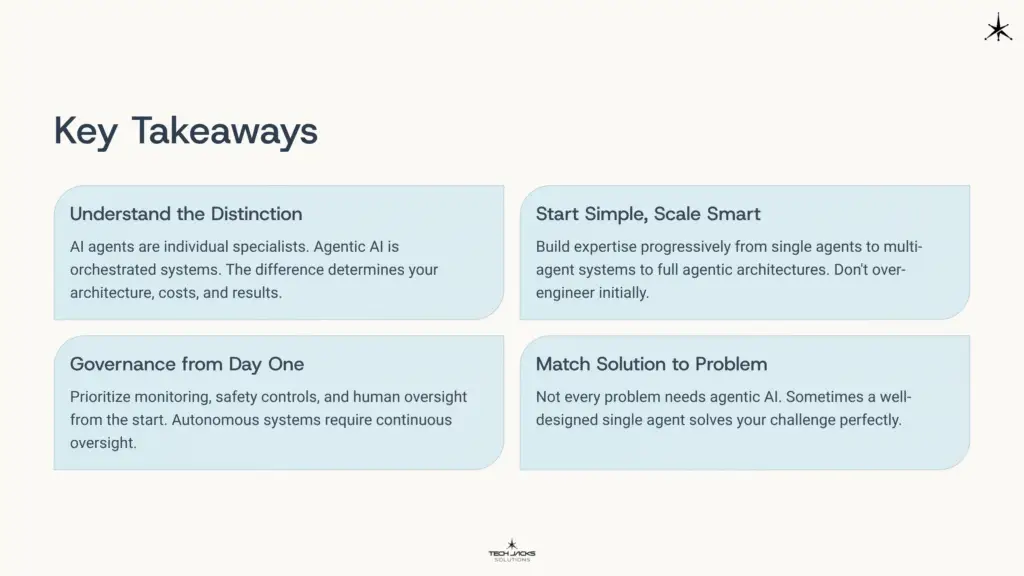

Not every problem needs agentic AI. Sometimes a well-designed single agent solves your challenge perfectly. But when you do need coordinated autonomy across multiple tasks, understanding you’re building a system rather than deploying a tool changes everything about your approach.

Start with clear requirements. Build expertise progressively from single agents to multi-agent systems to full agentic architectures. Prioritize governance and monitoring from day one. The distinction between AI agents and agentic AI isn’t academic. It’s the difference between successful implementation and expensive failure.

Frequently Asked Questions

Can I build an AI agent without coding?

Yes. No-code platforms like Zapier AI Actions, Make.com, and Microsoft Copilot Studio let you create functional agents through configuration. You define the agent’s purpose, connect data sources, and set rules through visual interfaces. These work well for standard use cases like customer service routing, email automation, or simple data retrieval. For custom requirements or complex logic, you’ll need coding or developer support.

What’s the difference between AI agents and chatbots?

Chatbots follow predefined conversation flows or scripts. They respond to inputs but don’t take autonomous action beyond the conversation. AI agents perceive their environment, make decisions, and execute actions across multiple systems. A chatbot answers questions. An agent answers questions, checks your database, updates records, sends notifications, and learns from outcomes. Chatbots are a subset of what AI agents can do.

How much does it cost to build an AI agent?

Costs vary dramatically based on complexity. A simple agent using existing platforms might cost $100-500 monthly in API fees. A custom-built agent for specific business processes could require $10,000 or more in development costs, plus ongoing monthly operational expenses. Every deployment needs proper requirements gathering, design, and architecture work, so costs vary by use case.

Which framework should I choose: LangChain, AutoGen, or CrewAI?

Choose based on your use case. LangChain excels for prototyping and applications needing extensive tool integrations. AutoGen works best for enterprise environments requiring multi-agent collaboration with strong governance. CrewAI suits beginners and non-technical users who want role-based agent teams. LangGraph handles complex workflows needing sophisticated state management. Start with CrewAI for simplicity, LangChain for flexibility, or AutoGen for enterprise scale.

Do I need a data science team to implement AI agents?

Not necessarily. Building simple agents with existing platforms requires API integration skills, not data science expertise. A developer familiar with REST APIs and basic programming can build functional agents. However, production-grade systems benefit from people who understand machine learning concepts, prompt engineering, and system architecture. Agentic AI systems coordinating multiple agents typically need experienced AI engineers. Many organizations start by partnering with consultants, then build internal capability.

How do I prevent my AI agent from making mistakes?

Implement multiple safety layers. Use input validation to ensure the agent only processes appropriate requests. Set explicit boundaries in system prompts defining what the agent can and cannot do. Implement human-in-the-loop approval for high-stakes actions like financial transactions or data deletion. Add output filtering to catch inappropriate responses before they reach users. Use rate limiting to prevent runaway API costs. Test extensively with edge cases before production deployment. Monitor continuously and implement automatic shutoffs if the agent behaves unexpectedly.
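As one concrete form of automatic shutoff, here is a minimal circuit-breaker sketch (the pattern is swapped in as an illustration). It screens each action the agent proposes, drops anomalous ones without executing them, and halts the agent after a set number of consecutive anomalies until a human resets it. The payment example and the $1,000 threshold are hypothetical.

```python
class CircuitBreaker:
    def __init__(self, threshold=3):
        self.threshold = threshold  # consecutive anomalies allowed before shutoff
        self.strikes = 0
        self.open = False  # open circuit = agent halted

    def submit(self, proposal, execute, is_anomalous):
        if self.open:
            raise RuntimeError("agent halted: awaiting human review")
        if is_anomalous(proposal):
            self.strikes += 1
            if self.strikes >= self.threshold:
                self.open = True
            return None  # anomalous proposals are dropped, never executed
        self.strikes = 0  # a normal action resets the streak
        return execute(proposal)

    def reset(self):
        # Called by an operator after investigating the halt
        self.strikes, self.open = 0, False

def too_large(proposal):
    return proposal["amount"] > 1000

def pay(proposal):  # stand-in for a real payment tool
    return f"paid {proposal['amount']}"

breaker = CircuitBreaker(threshold=2)
print(breaker.submit({"amount": 50}, pay, too_large))    # paid 50
print(breaker.submit({"amount": 5000}, pay, too_large))  # None
print(breaker.submit({"amount": 7000}, pay, too_large))  # None
print(breaker.open)                                      # True
```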

What’s the biggest challenge in building agentic AI systems?

State management and coordination complexity. When multiple agents work together, ensuring they share context appropriately, don’t duplicate work, and recover gracefully from errors becomes exponentially harder. Each agent might work perfectly in isolation but fail when coordinated. Orchestration patterns help, but you’re essentially building distributed systems with autonomous components that make unpredictable decisions. The second biggest challenge is cost control. Multiple agents making numerous LLM calls can burn through budgets quickly without proper monitoring.

Can AI agents integrate with my existing systems?

Yes, if your systems have APIs. Agents interact with external systems through API calls, so any software with a REST API, database connection, or webhook can integrate. Common integrations include CRMs (Salesforce, HubSpot), databases (PostgreSQL, MongoDB), communication platforms (Slack, email), cloud storage (Google Drive, S3), and business tools (Jira, Notion). Legacy systems without APIs require middleware or API wrappers. The Model Context Protocol (MCP) provides standardized approaches for secure agent-system communication.

How long does it take to build a production-ready AI agent?

The timeline depends on complexity and existing infrastructure. A simple agent handling one task with clear requirements: 2-4 weeks from concept to production. A sophisticated agent with multiple integrations, complex logic, and enterprise requirements: 2-4 months. A full agentic AI system coordinating multiple specialized agents: 4-12 months. These estimates assume experienced developers. First-time builders should double these timelines. Most time goes to testing edge cases, refining prompts, and ensuring reliability rather than initial development. These figures are rough guides only: actual timelines depend on the specific use case and on the skills, experience, and resources of the team that plans, builds, and deploys the system.

What programming languages work best for building AI agents?

Python dominates AI agent development. Most frameworks (LangChain, AutoGen, CrewAI) are Python-first with extensive libraries. JavaScript/TypeScript works well for agents integrated into web applications. C# suits enterprise environments using Microsoft’s ecosystem. The language matters less than choosing frameworks with good LLM integrations and active communities. If you’re starting fresh, use Python. If you have an existing tech stack, use whatever language has decent LLM libraries.

When should I build one agent versus an agentic AI system?

Build a single agent when you have one clearly defined task that doesn’t require coordination with other processes. Examples: answering customer FAQs, summarizing documents, monitoring logs for specific patterns. Build an agentic system when you need end-to-end workflow automation requiring multiple specialized capabilities that must coordinate. Examples: complete customer service resolution (triage + research + response + follow-up), financial due diligence (research + analysis + reporting), complex DevOps pipelines (testing + deployment + monitoring + remediation). If your workflow diagram shows multiple distinct steps that need shared context, you need a system.
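The rule of thumb in the last sentence can be written down directly: describe the workflow as steps, record which steps consume another step’s output, and check whether any context is shared. The `Step` type, the three labels, and the customer-service example are hypothetical. The sketch also uses Python’s `graphlib` module (Python 3.9+) to derive an order in which an orchestrator could run the steps.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import List


@dataclass
class Step:
    name: str
    needs: List[str] = field(default_factory=list)  # steps whose output this step consumes


def recommend_architecture(steps: List[Step]) -> str:
    """Apply the rule of thumb: distinct steps that share context call for a system."""
    if len(steps) <= 1:
        return "single agent"
    names = {s.name for s in steps}
    shares_context = any(dep in names for s in steps for dep in s.needs)
    if shares_context:
        return "agentic system"
    return "independent agents"  # several tasks, but no coordination between them


def execution_order(steps: List[Step]) -> List[str]:
    """Order an orchestrator could follow so every input exists before it is used."""
    graph = {s.name: set(s.needs) for s in steps}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    support = [
        Step("triage"),
        Step("research", ["triage"]),
        Step("response", ["triage", "research"]),
        Step("follow_up", ["response"]),
    ]
    print(recommend_architecture(support))  # agentic system
    print(execution_order(support))         # ['triage', 'research', 'response', 'follow_up']
```

An FAQ bot modeled as a single `Step` comes out as "single agent", while the four-step support workflow, where each step reads earlier results, comes out as "agentic system".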

Can small businesses benefit from AI agents, or is this only for enterprises?

Small businesses can benefit significantly, often more than enterprises, because they have fewer legacy systems to integrate. Start with targeted, high-impact use cases like customer inquiry handling, appointment scheduling, invoice processing, or social media management. Use no-code platforms to minimize development costs. Focus on agents that augment existing staff rather than complex multi-agent systems. Small businesses typically see ROI faster because they can implement and iterate quickly without enterprise governance overhead. Many successful implementations cost under $5,000 in setup and $500/month to operate.


Resources

Core Definitions & Frameworks

Agentic AI Fundamentals:

- IBM: What Is Agentic AI? – Comprehensive definition and core concepts

- AWS: What is Agentic AI? – Technical overview and architecture

- UK Government: AI Insights – Agentic AI – Policy and governance perspective

- NVIDIA Blog: What Is Agentic AI? – Industry perspective on autonomous systems

Academic Research:

- arXiv: AI Agents vs. Agentic AI – A Conceptual Taxonomy – Formal taxonomy distinguishing agents from systems

- MDPI: The Rise of Agentic AI – Review of definitions, frameworks, architectures, and evaluation metrics

AI Agent Components & Architecture

Agent Fundamentals:

- IBM: Components of AI Agents – Technical breakdown of agent architecture

- IBM: What is Agentic Reasoning? – How agents make decisions

- Google Cloud: What is Agentic AI? – Building blocks and differentiators

- IBM: Multi-Agent Systems – Coordination and collaboration patterns

Development Frameworks & Tools

Framework Documentation:

- LangChain – Official site for the leading LLM application framework

- LangGraph: Multi-Agent Systems – Graph-based agentic workflows

- Microsoft AutoGen Research – Multi-agent conversation framework

- IBM: What is CrewAI? – Role-based agent orchestration

Framework Comparison:

- IBM: Top AI Agent Frameworks – Enterprise framework analysis

- AWS: Build Multi-Agent Systems with LangGraph – Implementation guide

Orchestration & Architecture Patterns

System Design:

- Microsoft Azure: AI Agent Orchestration Patterns – Sequential, parallel, hierarchical patterns

- IBM: What is AI Agent Orchestration? – Coordination mechanisms

- Technical Guide to Multi-Agent Orchestration – Implementation best practices

Governance & Security

Responsible Development:

- OpenAI: Practices for Governing Agentic AI Systems – Governance framework and controls

- Model Context Protocol (MCP) – Standards for secure agent communication and coordination

- MCP Risk Matrix – Comprehensive security guidance for agentic systems

- MCP Governance Guidelines – Policy frameworks for autonomous systems

Industry Applications & Use Cases

Enterprise Implementation:

- Deloitte: Autonomous Generative AI Agents: Under Development – Enterprise adoption challenges

- Deloitte: Agentic AI in Banking – Financial services applications

- IBM: AI Agents in Customer Service – Support automation patterns

Standards & Best Practices

Risk Management:

- NIST AI Risk Management Framework – Voluntary guidance for AI system governance

- Model Context Protocol (MCP) – Communication standards for agent coordination

About This Guide: This comprehensive resource covers both AI agents (individual components) and agentic AI systems (orchestrated multi-agent architectures). All sources listed above were referenced in creating this guide and provide pathways for deeper exploration of specific topics.