AI Paradox Weekly #7: The Agentic Crucible (November 18-December 2, 2025)

The AI Paradox Weekly

November 18th – December 2nd

The Battle for Efficiency, Security, and Compliance

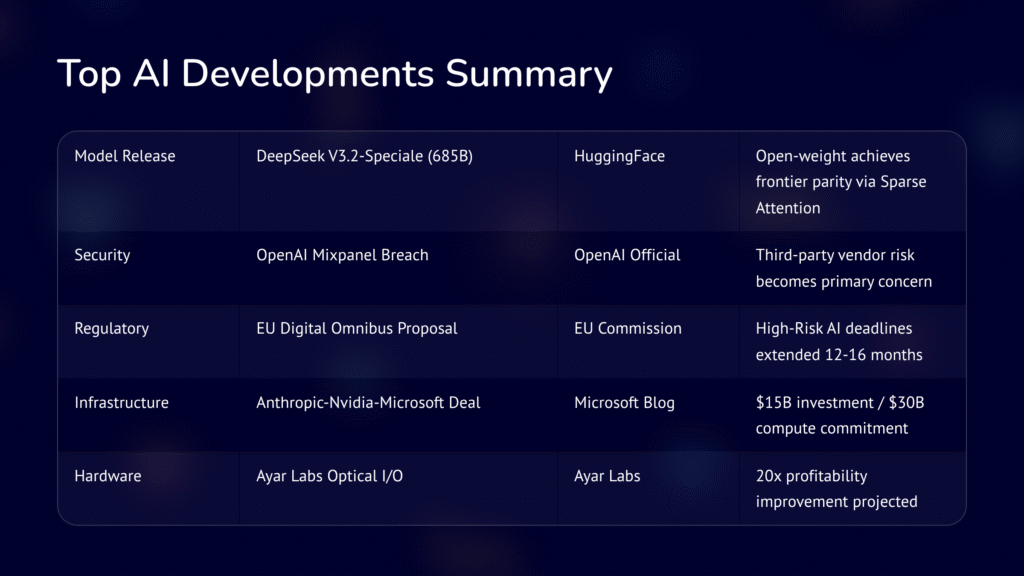

TL;DR: The Essential Intel

For when you are pressed for time and just need the goods

- DeepSeek just changed the game. Their V3.2 model reportedly matches frontier proprietary models on complex reasoning while introducing a Sparse Attention architecture that slashes compute costs. Open-weight models are no longer “catching up” (they’re competing head-to-head). If you’re still assuming closed APIs are your only option for serious work, it’s time to reassess.

- Your AI vendor’s vendors are now your problem. OpenAI disclosed a security breach through Mixpanel, a third-party analytics provider. Customer data was exposed. OpenAI’s core systems weren’t touched, but that’s cold comfort if your data was in that vendor’s hands. Third-party risk management just became an AI governance priority.

- The EU just bought you 16 months. The European Commission’s Digital Omnibus proposal extends High-Risk AI compliance deadlines to late 2027/2028. This isn’t a free pass (it’s a window to build governance right instead of scrambling at the deadline).

- Compute access is the new strategic currency. Anthropic locked in a $30 billion infrastructure commitment to Nvidia and Microsoft in exchange for $15B in investment. If you can’t secure long-term compute, you can’t compete at the frontier. The market noticed (both stocks dipped on the news).

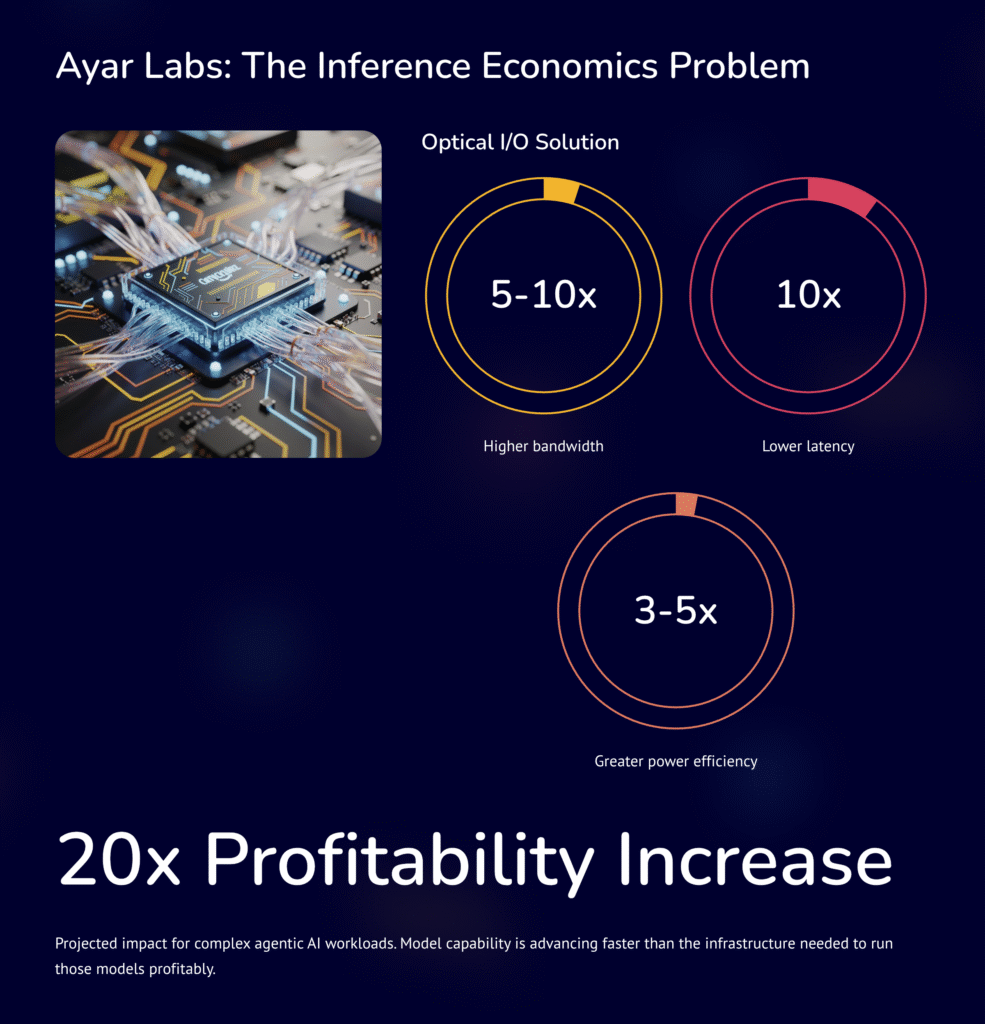

- Hardware is the real bottleneck. Ayar Labs says their optical interconnects could make complex AI workloads 20x more profitable. Current infrastructure can’t keep pace with what models can do. The economics of AI depend on solving this.

Introduction: The Agentic Crucible

This two-week period delivered a clear message: the rules of the AI industry are being rewritten.

Three developments defined the cycle. First, open-weight models proved they can match proprietary frontier systems on complex reasoning tasks. DeepSeek’s V3.2 release isn’t just another model drop (it’s proof that architectural efficiency can close capability gaps that were supposed to take years to bridge). The competitive moat around closed APIs is eroding faster than anyone expected.

Second, operational security took center stage in the worst way. OpenAI’s Mixpanel breach reminded everyone that your AI security posture is only as strong as your weakest vendor. Enterprise API customers woke up to find that the attack surface extends far beyond their foundation model provider.

Third, regulators acknowledged reality. The EU’s proposed compliance timeline extension signals that even the world’s most ambitious AI regulation framework needs more time to implement properly. For organizations still figuring out their AI governance approach, this is a gift (but only if you use it).

The pattern is clear: capability is accelerating, but so is complexity. The organizations that thrive will be those that can move fast on adoption while maintaining robust security and governance practices. That’s the paradox of this moment.

Section 1: The Titans (Strategic Maneuvers of Industry Leaders)

DeepSeek: The Efficiency Breakthrough

COMPANY ANNOUNCEMENT: DeepSeek V3.2 Validates Open-Weight Frontier Competition

- Sources: HuggingFace Model Card, r/LocalLLaMA Discussion

- Time: December 2, 2025

Key Details:

- Released V3.2-Speciale: a 685B parameter model built for efficiency and autonomous task execution

- Introduced DeepSeek Sparse Attention (DSA), a new attention mechanism that reduces computational complexity for long-context processing while maintaining performance

- Used a Scalable Reinforcement Learning Framework with a Large-Scale Agentic Task Synthesis Pipeline for training

- Claims gold-medal performance on 2025 International Mathematical Olympiad (IMO) and International Olympiad in Informatics (IOI)

- Reports reasoning on par with Gemini-3.0-Pro and superior to GPT-5 on rigorous benchmarks
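As a rough illustration of why sparse attention lowers long-context cost, the sketch below lets a query attend only to its top-k highest-scoring keys rather than to every key. This is a generic top-k scheme written for this newsletter, not DeepSeek's actual DSA implementation; the function names, the selection rule, and the value of `k` are all assumptions.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def topk_sparse_attention(query, keys, values, k):
    """Attend to only the k keys with the highest scaled dot-product score.

    Illustrative only: a production system would pick candidates with a
    cheaper scoring pass; here the full dot product is reused for brevity.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [dot(query, key) * scale for key in keys]
    # Indices of the k best-scoring keys (hypothetical selection rule).
    keep = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in keep])
    dim = len(values[0])
    # Weighted sum over the k selected value vectors only.
    return [sum(w * values[i][d] for w, i in zip(weights, keep)) for d in range(dim)]

def dense_pairs(seq_len):
    """Query-key pairs in dense causal attention (each token sees itself and all earlier tokens)."""
    return seq_len * (seq_len + 1) // 2

def sparse_pairs(seq_len, k):
    """Query-key pairs when each token attends to at most k tokens."""
    return sum(min(k, t) for t in range(1, seq_len + 1))

output = topk_sparse_attention(
    [1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]], k=1
)
```

For a 4-token sequence with `k = 2`, dense causal attention covers 10 query-key pairs and the capped version covers 7. The gap widens as the sequence grows, because the dense count grows quadratically while the capped count grows roughly linearly.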

Why This Matters for Your Organization:

The assumption that you need expensive proprietary APIs for serious AI work is now outdated. DeepSeek’s architecture demonstrates that cost-per-token efficiency (not raw scale) is becoming the competitive differentiator.

For IT leaders evaluating AI infrastructure, this means:

- Open-weight deployment options deserve serious consideration

- Vendor lock-in to any single API provider carries increasing risk

- Total cost of ownership calculations need updating

The efficiency war has begun, and it benefits buyers.
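One way to start updating a total cost of ownership estimate is a simple break-even calculation between a usage-priced API and a self-hosted open-weight deployment. The sketch below is a minimal model with hypothetical inputs. The prices, the fixed monthly cost, and the function names are assumptions, and a real estimate would also account for staffing, utilization, and hardware depreciation.

```python
def api_monthly_cost(tokens_per_month, price_per_million_tokens):
    """Usage-based cost of a hosted API for one month."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens

def self_hosted_monthly_cost(fixed_monthly_cost, tokens_per_month, marginal_cost_per_million):
    """Fixed infrastructure cost plus a per-token marginal cost (power, operations)."""
    return fixed_monthly_cost + tokens_per_month / 1_000_000 * marginal_cost_per_million

def break_even_tokens(fixed_monthly_cost, price_per_million_tokens, marginal_cost_per_million):
    """Monthly token volume above which self-hosting is cheaper, or None if it never is."""
    saving_per_million = price_per_million_tokens - marginal_cost_per_million
    if saving_per_million <= 0:
        return None
    return fixed_monthly_cost / saving_per_million * 1_000_000

# Hypothetical figures: $20,000/month fixed, $10 per million API tokens,
# $2 per million tokens marginal cost when self-hosting.
volume = break_even_tokens(20_000, 10, 2)
```

With these made-up numbers the break-even point is 2.5 billion tokens per month. Below that volume the API is cheaper; above it, self-hosting wins.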

OpenAI: Consumer Growth Meets Security Reality

COMPANY ANNOUNCEMENT: ChatGPT Shopping Tool Launch & Mixpanel Security Breach

- Sources: OpenAI Official Statement, Bloomberg, BleepingComputer

- Time: November 30 – December 1, 2025

Key Details:

The Good News (November 30):

- Launched a free AI-powered shopping research tool using GPT-5 mini

- Uses a quiz format to gather user preferences (budget, size, color)

- Draws from quality sources like Reddit reviews, explicitly deprioritizing paid marketing

- Strategic move into e-commerce discovery ahead of the holiday season

The Bad News (December 1):

- Disclosed that customer data was exposed through Mixpanel, a third-party analytics vendor

- Attackers gained unauthorized access to customer information and API-related analytics data

- OpenAI’s core infrastructure and ChatGPT were not compromised

- Response: Customer notifications, vendor relationship terminated, expanded security reviews across all vendors

Why This Matters for Your Organization:

This is a wake-up call for anyone using AI APIs in production. The breach didn’t happen because OpenAI’s systems failed (it happened because a vendor in their supply chain was compromised).

Your security perimeter now includes every tool your AI provider uses for analytics, monitoring, logging, and support. If you haven’t mapped your AI supply chain, you’re operating blind.

Action Items:

- Audit all third-party integrations in your AI stack

- Review vendor security assessments for AI providers

- Update incident response plans to include AI supply chain scenarios
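The audit in these action items can begin as a small structured inventory. The sketch below records each vendor, the data it receives, and its own subprocessors, then flags any vendor in the chain that holds data without a completed security review. The field names and vendor names are hypothetical, and a real program would likely keep this in a GRC tool or asset database rather than in code.

```python
from dataclasses import dataclass, field

@dataclass
class Vendor:
    name: str
    role: str  # e.g. "foundation model", "analytics", "logging"
    data_shared: list = field(default_factory=list)
    security_review_done: bool = False
    subprocessors: list = field(default_factory=list)  # nested Vendor objects

def walk(vendor, depth=0):
    """Yield (depth, vendor) for a vendor and every vendor beneath it."""
    yield depth, vendor
    for sub in vendor.subprocessors:
        yield from walk(sub, depth + 1)

def unreviewed_with_data(root):
    """Names of vendors in the chain that receive data but lack a security review."""
    return [v.name for _, v in walk(root) if v.data_shared and not v.security_review_done]

# Hypothetical supply chain: a model provider that uses an analytics vendor.
analytics = Vendor(
    "ExampleAnalytics", "analytics",
    data_shared=["account emails", "API usage metadata"],
)
provider = Vendor(
    "ExampleModelAPI", "foundation model",
    data_shared=["prompts"],
    security_review_done=True,
    subprocessors=[analytics],
)
gaps = unreviewed_with_data(provider)
```

Here `gaps` contains only the analytics vendor: the provider itself was reviewed, but the vendor one level down was not.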

Section 2: The Foundation (Hardware, Infrastructure, and Cloud)

The Incumbents: Nvidia, Microsoft, and the Compute Dependency Loop

INFRASTRUCTURE: Strategic Partnerships Define the Hardware Landscape

- Sources: Microsoft Blog, CNBC Palantir Earnings

- Time: November 2025

Key Details:

- Nvidia: Continues reporting robust earnings, confirming its position as the essential supplier of AI compute. Q3 results strong, but market expectations remain extremely high.

- Microsoft: The Anthropic deal locks in a major AI customer while extending Azure’s role as critical infrastructure for frontier development.

- The Deal Structure: The $15B investment / $30B commitment arrangement demonstrates that compute access is now the strategic currency of the AI race.

Why This Matters for Your Organization:

The hardware layer of AI is consolidating around a small number of players. Understanding these relationships matters because:

- Your AI provider’s costs are heavily influenced by their hardware arrangements

- Long-term pricing stability depends on infrastructure access

- Concentration at the hardware layer creates systemic risk

The Challengers: Ayar Labs and the Inference Economics Problem

HARDWARE INNOVATOR: Optical I/O as the Path to AI Profitability

- Sources: Ayar Labs, SiliconANGLE

- Time: November 21, 2025 (Supercomputing 2025)

Key Details:

- Current electrical interconnect technology constrains the economic viability of complex AI inference

- Ayar Labs’ optical I/O solution offers:

  - 5-10x higher bandwidth

  - 10x lower latency

  - 3-5x greater power efficiency

- Projected impact: 20x profitability increase for complex agentic AI workloads

Why This Matters for Your Organization:

Model capability is advancing faster than the infrastructure needed to run those models profitably. This gap explains why AI services remain expensive and why some promised capabilities aren’t yet economically viable at scale.

Organizations planning AI infrastructure investments should watch the optical interconnect space. The economics of AI deployment could shift significantly as this technology matures.

Section 3: The Vanguard (Emerging Models and Disruptive Innovators)

DeepSeek: Engineering the Cost-Effective Agent

FOUNDATION MODEL INNOVATOR: V3.2 Proves Efficiency-First Architecture Works

- Source: HuggingFace

- Time: December 2, 2025

Key Details:

- V3.2’s Sparse Attention mechanism represents a fundamental architectural shift

- The model explicitly targets agentic use cases: tool use, multi-step reasoning, autonomous task execution

- Open-weight release enables deployment without API dependencies

- Claims parity with frontier closed models on demanding benchmarks

Why This Matters for Your Organization:

DeepSeek’s approach directly addresses the core economic challenge of agentic AI: agents require many inference calls, and each call costs money. By reducing per-call compute requirements, especially on long contexts, this architecture could make production AI agents financially viable in cases where inference costs previously ruled them out.

If you’ve shelved agent projects due to inference costs, it’s time to revisit those calculations.
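To revisit those calculations, it helps to model how agent costs compound: each step typically re-sends the growing conversation history, so input tokens grow much faster than the step count. The sketch below is a simplified estimate under stated assumptions. The token counts, prices, and function name are hypothetical, and it ignores prompt caching, retries, and parallel tool calls.

```python
def agent_task_cost(steps, base_prompt_tokens, tokens_added_per_step,
                    output_tokens_per_step, input_price_per_million,
                    output_price_per_million):
    """Estimate tokens and cost for one agent task that re-sends its history each step."""
    total_input = 0
    total_output = 0
    context = base_prompt_tokens
    for _ in range(steps):
        total_input += context
        total_output += output_tokens_per_step
        # The next step sees this step's output plus any tool results.
        context += output_tokens_per_step + tokens_added_per_step
    cost = (total_input * input_price_per_million
            + total_output * output_price_per_million) / 1_000_000
    return total_input, total_output, cost

# Hypothetical task: 10 steps, 2,000-token starting prompt, 500 tokens of tool
# results and 300 output tokens per step, $1 / $2 per million input / output tokens.
total_in, total_out, cost = agent_task_cost(10, 2000, 500, 300, 1.0, 2.0)
```

In this example a 10-step task consumes 56,000 input tokens, 28 times the starting prompt, which is why per-token efficiency matters more for agents than for single-shot calls.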

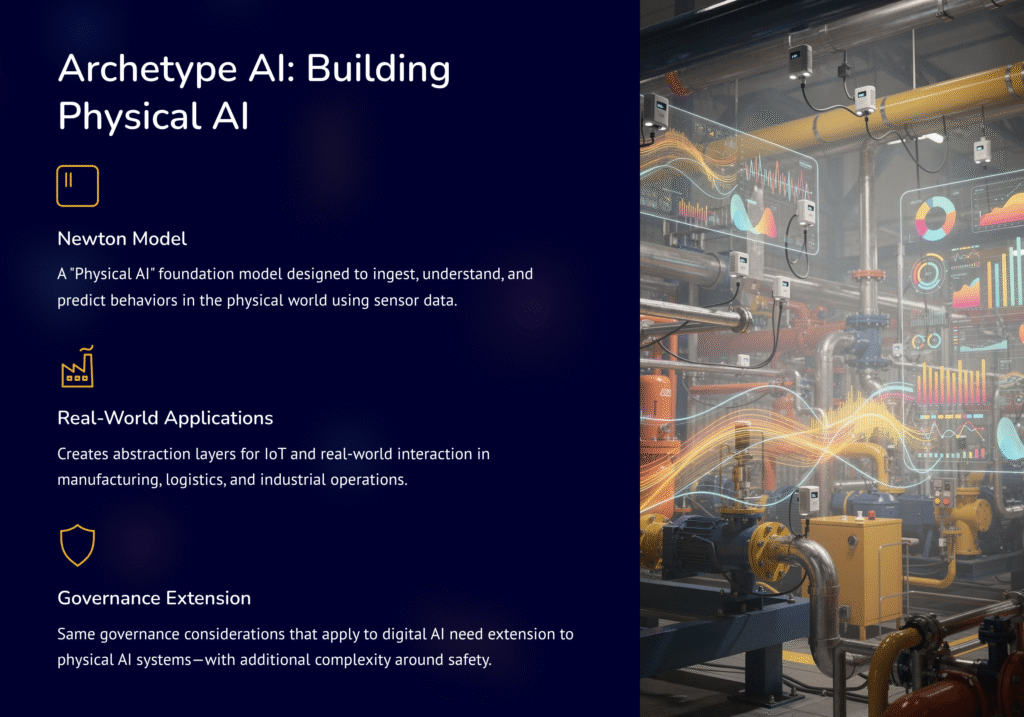

Archetype AI: Building the Physical AI Foundation

FOUNDATION MODEL INNOVATOR: Newton Model for Sensor and Physical World Data

- Source: Archetype AI

- Time: Ongoing development

Key Details:

- Building Newton, a “Physical AI” foundation model

- Designed to ingest, understand, and predict behaviors in the physical world using sensor data

- Creates abstraction layers for IoT and real-world interaction

Why This Matters for Your Organization:

AI is moving beyond text and images into sensor data, physical systems, and real-world interaction. Organizations in manufacturing, logistics, facilities management, and industrial operations should track this space closely.

The same governance and security considerations that apply to digital AI will need extension to physical AI systems (with additional complexity around safety and real-world consequences).

Section 4: The Ecosystem (Developer Tools, Open Source, and Agent Frameworks)

This section covers the developer tools, open-source releases, and agent frameworks that shape how teams build and deploy AI systems.

ComfyUI-R1: Autonomous MLOps Workflow Generation

AGENT DEVELOPMENT: LLM That Writes and Executes Computer Vision Pipelines

- Source: GitHub ML-Papers-of-the-Week

- Time: November 2025

Key Details:

- ComfyUI-R1 is a large language model designed to autonomously generate executable computer vision workflows

- Achieved 97% format validity on generated workflows

- Improved execution pass rate by 11% over GPT-4o baselines on ComfyBench benchmark

Why This Matters for Your Organization:

The race is evolving from “AI that generates code snippets” to “AI that generates and executes complete technical pipelines.” This dramatically accelerates deployment timelines for computer vision and automation systems.

MLOps teams should explore how these capabilities could streamline workflow development and reduce time-to-production.

Roboflow: Real-Time Vision at Production Scale

PLATFORM UPDATE: SAM 3 Integration and Live Stream Processing

- Source: Roboflow Changelog

- Time: November 2025

Key Details:

- Full integration and fine-tuning support for Segment Anything Model 3 (SAM 3)

- New capability: Run computer vision Workflows on live camera streams directly from the web editor

- Tools for AI-powered annotation and SAM 3 API creation

Why This Matters for Your Organization:

Real-time computer vision is becoming accessible without deep ML expertise. Platforms like Roboflow are democratizing capabilities that previously required specialized teams.

For organizations in retail, security, manufacturing, or logistics, the barrier to deploying vision AI has dropped significantly.

HuggingFace: The Open-Source Pulse

OPEN SOURCE: DeepSeek V3.2 Dominates Model Releases

- Source: HuggingFace Collections

- Time: December 2, 2025

Key Details:

- DeepSeek-V3.2 release generated immediate traction on HuggingFace

- Continued growth in model availability and community engagement

- Trend toward efficiency-focused architectures in popular releases

Why This Matters for Your Organization:

HuggingFace remains the central hub for open-source AI. Tracking trending models and community discussions provides early signal on which approaches are gaining developer adoption.

Section 5: Capital, Markets, and Governance

Venture & Investment Flows: The Infrastructure Play

BUSINESS & FINANCIAL: Capital Concentrates in Compute and Specialized AI

- Sources: Microsoft Blog, CNBC

- Time: November 2025

Key Details:

- The Anthropic-Nvidia-Microsoft deal represents a new model: massive compute supply agreements as alternatives to traditional M&A

- Investment focus remains on:

  - Specialized inference hardware

  - Architectural efficiency innovations

  - Defense technology applications

- Strategic agreements effectively lock model developers into specific cloud/hardware ecosystems

Why This Matters for Your Organization:

The investment thesis is clear: infrastructure and efficiency win. Organizations evaluating AI vendors should consider their infrastructure partnerships and long-term cost structures.

Public Market Pulse: Strong Earnings, Cautious Sentiment

BUSINESS & FINANCIAL: Nvidia and Palantir Confirm Enterprise Demand

- Sources: CNBC Palantir Earnings, Palantir Investor Relations

- Time: Q3 2025 Earnings

Key Details:

- Nvidia: Robust Q3 earnings, reinforcing its position as the essential AI compute supplier

- Palantir: Strong performance, with Q4 guidance implying 61% year-over-year revenue growth (which the company described as its highest sequential quarterly growth guidance to date)

- Market differentiating between profitable infrastructure providers and high-investment frontier model developers

Why This Matters for Your Organization:

The market is increasingly skeptical of AI companies that burn cash pursuing frontier capabilities without clear paths to profitability. Infrastructure and specialized enterprise AI platforms (like Palantir) are being valued differently than pure research plays.

Regulatory & Policy Landscape

REGULATORY: EU Proposes Major Compliance Timeline Extension

- Sources: EU Commission Official, Cooley, Crowell, White & Case

- Time: November 19, 2025 (publication)

Key Details:

The European Commission’s Digital Omnibus on AI Regulation Proposal acknowledges implementation difficulties and proposes significant timeline changes:

| AI System Category | Original Deadline | Proposed New Deadline | Extension |
|---|---|---|---|
| High-Risk (Annex III Use Cases) | August 2, 2026 | December 2, 2027 | ~16 months |
| High-Risk (Embedded Products) | August 2, 2027 | August 2, 2028 | 12 months |
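The extension figures in the table follow directly from the dates. A minimal check, using only the dates listed above (the helper name is ours):

```python
from datetime import date

def months_between(start, end):
    """Whole calendar months from start to end (assumes the same day of month)."""
    return (end.year - start.year) * 12 + (end.month - start.month)

# Original and proposed deadlines, as listed in the table.
deadlines = {
    "High-Risk (Annex III Use Cases)": (date(2026, 8, 2), date(2027, 12, 2)),
    "High-Risk (Embedded Products)": (date(2027, 8, 2), date(2028, 8, 2)),
}
extensions = {name: months_between(old, new) for name, (old, new) in deadlines.items()}
```

This gives 16 months for Annex III use cases and 12 months for embedded products, matching the table.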

Additional Proposed Changes:

- Relaxed registration requirements for certain low-risk AI in high-risk domains

- Provisions for processing special category personal data (with safeguards) for AI bias mitigation

US Policy Environment:

No significant new federal AI policy announcements during this period. The US approach continues to favor accelerated deployment with a light regulatory touch.

Why This Matters for Your Organization:

The EU timeline extension is not a signal that regulation is weakening (it’s acknowledgment that compliance is complex). Smart organizations will use this window to:

- Complete AI system inventories now

- Build governance frameworks by design

- Implement documentation and audit trails before deadlines

Building your AI governance framework? Explore Tech Jacks Solutions’ compliance resources for NIST AI RMF, ISO 42001, and EU AI Act guidance.

Section 6: The Research Frontier (Academic and Scientific Breakthroughs)

Key Research Themes: Trustworthiness and Autonomous Systems

RESEARCH & TECHNICAL: Making AI More Reliable and Self-Sufficient

- Sources: University of Arizona, Stanford HAI, GitHub

- Time: November 18-24, 2025

Key Developments:

University of Arizona: Ray Tracing for AI Uncertainty (Nov 18)

- Novel method adapting ray tracing (computer graphics technique) to quantify AI uncertainty

- Enables AI systems to recognize when their predictions are unreliable

- Code made publicly available for immediate adoption

Stanford HAI: Self-Learning Mechanical Circuits (Nov 24)

- “Steampunk” machines capable of learning without electronics

- Path toward robust, low-power AI systems for extreme environments

- Supports development of Physical AI for real-world deployment

Mistral Magistral: Pure RL for Reasoning (Nov 2025)

- Reinforcement learning from scratch without teacher model distillation

- 50% reasoning improvement on benchmarks

- Validates efficient pathways to specialized capabilities

Why This Matters for Your Organization:

These research directions address critical gaps in current AI systems:

- Uncertainty quantification is essential for high-stakes deployment (medical, financial, legal)

- Physical AI extends governance considerations to real-world systems

- Efficient training methods reduce the resource barrier to specialized AI development

Institutional Initiatives: Applied AI Research

ACADEMIC: Universities Advancing Domain-Specific AI Applications

- Sources: Stanford HAI Research, Google Research

- Time: November 2025

Key Details:

- Stanford HAI continues advancing Physical AI and policy-relevant research

- Google Research published work on Generative UI and scientific discovery acceleration

- Academic focus shifting from pure capability research to applied, high-impact problems

Why This Matters for Your Organization:

Academic research provides early signal on capabilities that will become commercially available in 12-24 months. Tracking institutional initiatives helps anticipate technology curves and prepare governance approaches.

Conclusion: Forward Outlook and Emerging Signals

This two-week cycle revealed the AI industry’s current inflection point. Three forces are reshaping the landscape simultaneously:

1. The Efficiency Revolution is Real

DeepSeek V3.2 proves that architectural innovation can close capability gaps without requiring the largest training budgets. The competitive barrier is shifting from “who can scale bigger” to “who can deliver better cost-per-token economics.” This benefits every organization that uses AI (more competition means better options at lower prices).

2. Security Extends to the Supply Chain

The Mixpanel breach exposed a reality that security teams need to internalize: your AI risk surface includes every vendor your AI provider uses. Third-party risk management isn’t optional (it’s a core governance requirement). Organizations should audit their AI supply chains now, before an incident forces the issue.

3. Regulatory Time is a Strategic Asset

The EU’s proposed compliance timeline extension provides breathing room, but only for organizations that use it wisely. Building governance frameworks now (while deadlines are further away) means compliance by design rather than last-minute scrambling. The organizations that invest in AI governance today will have competitive advantages when enforcement begins.

The groundwork for the Agent Era was laid this period. The question is whether your organization is building the governance infrastructure to support it.

Executive Summary & Governance Takeaways

This period marks a critical transition defined by:

- Capability Convergence: Open-weight models achieve frontier performance through efficiency, not just scale

- Supply Chain Exposure: Third-party vendor breaches reveal extended attack surfaces in AI ecosystems

- Regulatory Reprieve: EU compliance extensions provide strategic window for proactive governance

The paradox: AI capability is accelerating faster than ever, but so is the complexity of deploying it safely and compliantly.

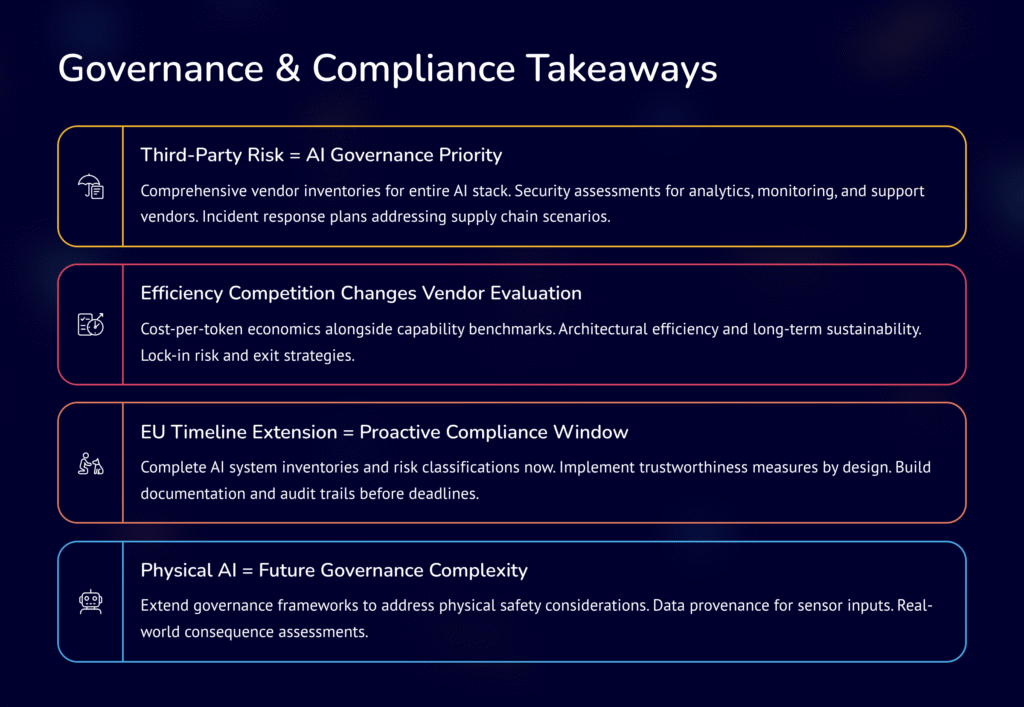

Governance & Compliance Takeaways

Third-Party Risk = AI Governance Priority

The Mixpanel breach demonstrates that AI security extends beyond your foundation model provider. Your governance program must include:

- Comprehensive vendor inventories for the entire AI stack

- Security assessments for analytics, monitoring, and support vendors

- Incident response plans that address supply chain scenarios

Efficiency Competition Changes Vendor Evaluation

With open-weight models matching proprietary performance, vendor selection criteria should expand:

- Cost-per-token economics alongside capability benchmarks

- Architectural efficiency and long-term sustainability

- Lock-in risk and exit strategies
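One lightweight way to apply expanded criteria like these is a weighted scorecard. The sketch below is illustrative only: the criteria names, the weights, and the 0-10 ratings are hypothetical placeholders that each organization would set for itself.

```python
# Hypothetical weights: capability still matters most, but cost and exit ease count.
WEIGHTS = {"capability": 4, "cost_efficiency": 3, "exit_ease": 3}

def vendor_score(ratings, weights=WEIGHTS):
    """Weighted average of 0-10 ratings; every weighted criterion must be rated."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(ratings[name] * w for name, w in weights.items()) / total_weight

# Made-up ratings for two hypothetical options.
closed_api = {"capability": 9, "cost_efficiency": 5, "exit_ease": 3}
open_weight = {"capability": 8, "cost_efficiency": 8, "exit_ease": 9}
scores = {"closed_api": vendor_score(closed_api), "open_weight": vendor_score(open_weight)}
```

With these invented ratings the open-weight option scores 8.3 against 6.0. The numbers themselves mean little; the value is in forcing cost and lock-in into the same comparison as benchmark performance.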

EU Timeline Extension = Proactive Compliance Window

The proposed 12-16 month delays create an opportunity. Organizations should:

- Complete AI system inventories and risk classifications now

- Implement trustworthiness measures by design

- Build documentation and audit trails before deadlines

Physical AI = Future Governance Complexity

Research advances in sensor AI and mechanical intelligence signal coming compliance challenges. Begin extending governance frameworks to address:

- Physical safety considerations

- Data provenance for sensor inputs

- Real-world consequence assessments

Bottom Line

The AI industry entered a new phase during this period. Efficiency matters as much as capability. Security extends to your vendors’ vendors. Regulation is coming, but there’s time to prepare properly.

Organizations that build robust governance now will be positioned to:

- Adopt AI with compliance confidence

- Mitigate security and reputational risks

- Build trust with regulators, partners, and customers

The Agentic Era is arriving. Is your governance infrastructure ready?

Get Expert Guidance

Need help building your AI governance framework?

Tech Jacks Solutions provides resources on:

- NIST AI RMF implementation

- ISO 42001 compliance

- EU AI Act readiness

- AI security best practices