Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated: August 28, 2025

Pressed For Time

Review or Download our 2-3 min Quick Slides or the 5-7 min Article Insights to gain knowledge with the time you have!

AI Acceptable Use Policies: Your 2025 Compliance Blueprint

Here’s what happened in 2024: The EU AI Act entered into force on August 1, 2024. NIST’s voluntary AI Risk Management Framework (AI RMF), first released in January 2023, kept gaining ground as the de facto US standard. Meanwhile, AWS became the first major cloud provider to achieve ISO/IEC 42001 AI management certification.

What does this mean for your organization? For organizations providing or deploying AI in the EU, especially high-risk systems, documented governance is expected and, in many cases, required under specific provisions (e.g., quality management systems and technical documentation). If you’re competing with companies like AWS, Google, and Microsoft, all of which have formal AI governance frameworks, you’re already behind.

An AI Acceptable Use Policy isn’t just another compliance document. It’s the primary instrument for translating high-level ethical principles and complex regulatory requirements into concrete, auditable corporate practice. This guide shows you exactly how to build one using proven frameworks.

What’s an AI Acceptable Use Policy?

Think of it as rules for AI. But deeper.

An AI Acceptable Use Policy is a formal framework governing how artificial intelligence gets developed, bought, deployed, and used in your organization. It translates fuzzy ethical principles and complex regulations into concrete, auditable corporate practice.

Traditional IT policies don’t cut it for AI. Machine learning systems create unique risks: algorithmic bias, privacy implications, automated decisions that affect real people, potential for harm at massive scale. Your policy needs to address these specifically.

What It Actually Covers

Technologies: Everything AI-related. Machine learning models, natural language processing tools, computer vision systems, generative AI. Both internal development and third-party services employees use.

People: All employees, contractors, anyone with access to your tech resources or data.

Data Rules: Specific guidelines for different data types. (Pro tip: explicitly prohibit putting sensitive information into unapproved AI tools.)

Risk Categories: Clear frameworks separating low-risk productivity tools from high-risk systems affecting individual rights or safety.

Most companies get this wrong by being too vague. Specificity matters.

Why You Need This (And Why Now)

The regulatory landscape shifted dramatically in 2024. But here’s what most executives don’t realize: the companies already implementing AI governance aren’t doing it just for compliance. They’re doing it for competitive advantage.

Legal Requirements Are Here

EU AI Act: Mandatory requirements for high-risk AI systems. Risk management, quality processes, post-market monitoring. Documented governance frameworks aren’t optional if you serve EU markets.

NIST Standards: The AI Risk Management Framework is voluntary, but it’s becoming the de facto US standard. Its GOVERN, MAP, MEASURE, and MANAGE functions all expect documented policy artifacts.

ISO Certification: ISO/IEC 42001 is the world’s first AI Management System standard. Early adopters like AWS have already achieved certification. Market expectations are forming.

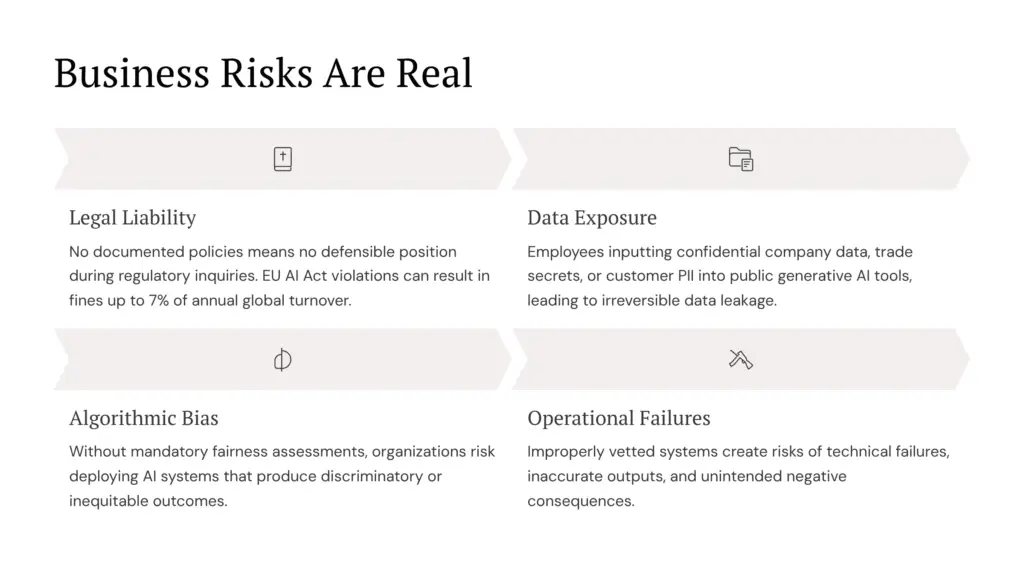

Business Risks Are Real

What specific risks does your organization face without proper AI governance?

Legal Liability: No documented policies means no defensible position during regulatory inquiries. Under Article 99 of the EU AI Act, fines can reach up to €35M or 7% of annual global turnover for prohibited practices, and up to €15M or 3% for most other infringements.

Data Exposure: One of the most commonly documented risks is employees entering confidential company data, trade secrets, or customer PII into public generative AI tools, which can lead to irreversible data leakage and loss of competitive advantage.

Algorithmic Bias: Without mandatory fairness assessments, organizations risk deploying AI systems that produce discriminatory or inequitable outcomes, which can cause significant brand damage and loss of customer trust.

Operational Failures: Improperly vetted systems create risks of technical failures, inaccurate outputs, and unintended negative consequences that could disrupt business operations.
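
The Article 99 ceilings mentioned under Legal Liability are generally "whichever is higher" caps, so exposure grows with turnover. Here is a minimal sketch of that arithmetic, assuming the €35M / 7% tier for prohibited practices and the €15M / 3% tier for most other infringements. The function name and the example turnover are illustrative, and real fines depend on regulator discretion and on special rules (for example, for SMEs).

```python
# Illustrative only: upper bounds on EU AI Act fines (Article 99).
# Each tier is (fixed amount in EUR, percent of worldwide annual turnover).
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_infringement": (15_000_000, 3),
}

def max_fine_eur(tier: str, annual_turnover_eur: float) -> float:
    """Return the ceiling for a tier: the higher of the fixed cap or the turnover share."""
    fixed_cap, turnover_pct = FINE_TIERS[tier]
    return max(fixed_cap, annual_turnover_eur * turnover_pct / 100)

# Hypothetical company with EUR 2 billion in worldwide annual turnover.
large_company = max_fine_eur("prohibited_practice", 2_000_000_000)
# Hypothetical company with EUR 100 million in turnover: the fixed cap dominates.
smaller_company = max_fine_eur("other_infringement", 100_000_000)
print(large_company, smaller_company)
```

For the larger company the percentage drives the ceiling; for the smaller one the fixed amount does.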

Smart companies see AI governance as competitive advantage, not compliance burden.

Framework Alignment: EU, US, and International Standards

Effective governance aligns with major regulations. This isn’t about checking boxes – it’s about building defensible, structured approaches.

EU AI Act Compliance

The EU AI Act imposes strict obligations on high-risk systems. Your policy directly supports compliance:

Risk Management (Article 9): Mandate specific processes for identifying, analyzing, and mitigating risks throughout system lifecycles.

Quality Management (Article 17): Define roles, responsibilities, training requirements, documentation standards, design control procedures.

Post-Market Monitoring (Article 72): Require monitoring plans for high-risk systems. Review schedules, performance metrics, user feedback collection.

Incident Reporting (Article 73): Clear definitions of serious incidents. Mandatory internal reporting ensures timely regulatory notification.

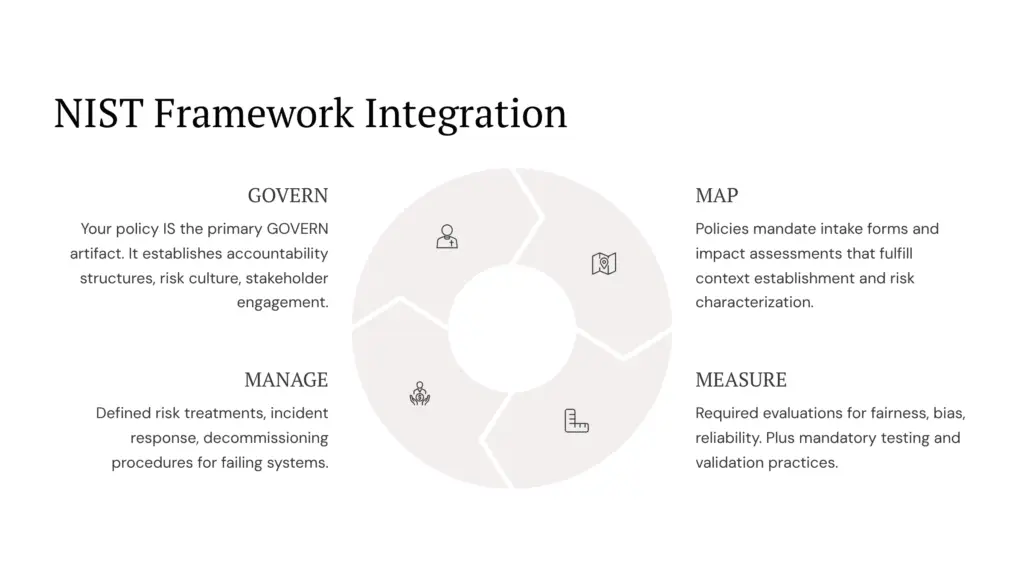

NIST Framework Integration

The NIST AI RMF provides practical guidance your policy can operationalize:

GOVERN: Your policy IS the primary GOVERN artifact. It establishes accountability structures, risk culture, stakeholder engagement.

MAP: Policies mandate intake forms and impact assessments that fulfill context establishment and risk characterization.

MEASURE: Required evaluations for fairness, bias, reliability. Plus mandatory testing and validation practices.

MANAGE: Defined risk treatments, incident response, decommissioning procedures for failing systems.

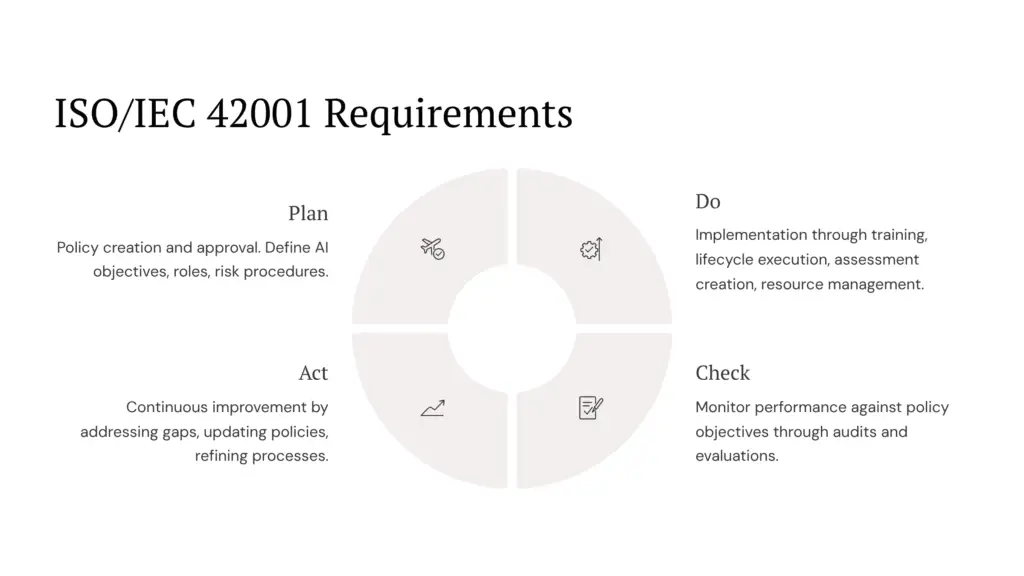

ISO/IEC 42001 Requirements

ISO/IEC 42001 uses the Plan-Do-Check-Act improvement cycle:

Plan: Policy creation and approval. Define AI objectives, roles, risk procedures.

Do: Implementation through training, lifecycle execution, assessment creation, resource management.

Check: Monitor performance against policy objectives through audits and evaluations.

Act: Continuous improvement by addressing gaps, updating policies, refining processes.

Building Your Policy: Step-by-Step (The Foundation-First Approach)

Here’s the reality: most AI governance initiatives fail because organizations jump straight to writing policies without laying proper groundwork. The research shows successful implementation requires systematic preparation.

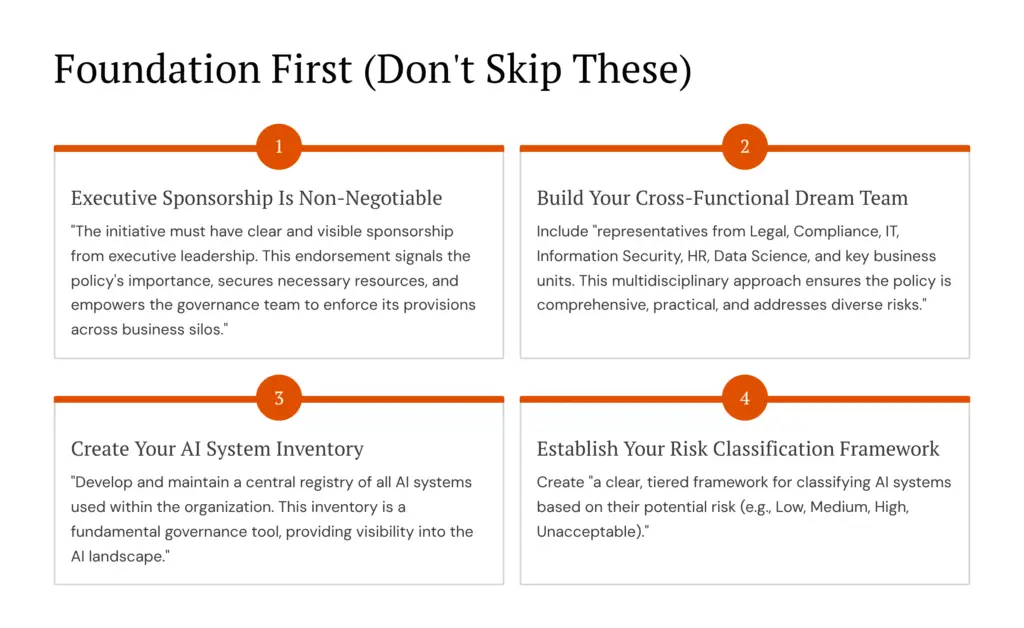

Foundation First (Don’t Skip These)

Executive Sponsorship Is Non-Negotiable: According to the source research, “The initiative must have clear and visible sponsorship from executive leadership. This endorsement signals the policy’s importance, secures necessary resources, and empowers the governance team to enforce its provisions across business silos.”

Why does this matter? Without executive backing, you’ll hit the first organizational silo and stop dead.

Start with an AI Charter – It’s a Great Investment

Build Your Cross-Functional Dream Team: The research specifies exactly who you need: “representatives from Legal, Compliance, IT, Information Security, HR, Data Science, and key business units. This multidisciplinary approach ensures the policy is comprehensive, practical, and addresses the diverse risks and needs of the organization.”

AI Governance Committee to Mitigate Risks

Create Your AI System Inventory: You can’t govern what you don’t know exists. The research emphasizes: “Develop and maintain a central registry of all AI systems used within the organization. This inventory is a fundamental governance tool, providing visibility into the AI landscape and serving as the basis for risk assessment and oversight.”

Build out your AI System Inventory and Use Case Trackers:

AI Use Case Tracker – Inventories

Why you Need an AI Use Case Tracker (And what to track)

Establish Your Risk Classification Framework: The framework should provide “a clear, tiered framework for classifying AI systems based on their potential risk (e.g., Low, Medium, High, Unacceptable). This taxonomy should be informed by regulatory definitions, such as the risk categories in the EU AI Act, to ensure a consistent approach to risk management.”
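
The inventory and the risk tiers work best when they live in the same record. Here is a minimal sketch of a central registry, assuming the four example tiers named above. The class names, fields, and sample systems are hypothetical, and a real registry would sit in a database or GRC tool rather than in memory.

```python
from dataclasses import dataclass
from enum import IntEnum

class RiskTier(IntEnum):
    """Example tiers from the classification framework, ordered by severity."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    UNACCEPTABLE = 4

@dataclass
class AISystemRecord:
    name: str
    owner: str          # accountable team or person (hypothetical field)
    source: str         # e.g. "internal" or "third-party" (hypothetical field)
    risk_tier: RiskTier

class AIInventory:
    """In-memory stand-in for a central AI system registry."""

    def __init__(self) -> None:
        self._records: dict[str, AISystemRecord] = {}

    def register(self, record: AISystemRecord) -> None:
        # Keyed by name so re-registering a system updates its entry.
        self._records[record.name] = record

    def at_or_above(self, tier: RiskTier) -> list[str]:
        """Names of systems at the given tier or higher, sorted for stable output."""
        return sorted(r.name for r in self._records.values() if r.risk_tier >= tier)

inventory = AIInventory()
inventory.register(AISystemRecord("resume-screener", "HR", "internal", RiskTier.HIGH))
inventory.register(AISystemRecord("grammar-checker", "Comms", "third-party", RiskTier.LOW))
print(inventory.at_or_above(RiskTier.HIGH))
```

Querying by tier is what lets the governance team start oversight with the highest-risk systems.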

Development Process

Audit Current Policies: Review existing data governance, security, privacy, IT acceptable use policies. Find intersections and gaps.

Interview Stakeholders: Talk to working group members and key users. Understand current AI usage, business needs, risk concerns from different perspectives.

Draft Content: Write comprehensive policy covering purpose, scope, governance structure, permitted uses, prohibited activities, data rules, risk processes, documentation requirements, training procedures.

Iterate Based on Feedback: Circulate drafts among working group. Incorporate suggestions to improve clarity and practicality.

Get Official Approval: Submit through governance channels for formal sign-off. Publish in central, accessible location.

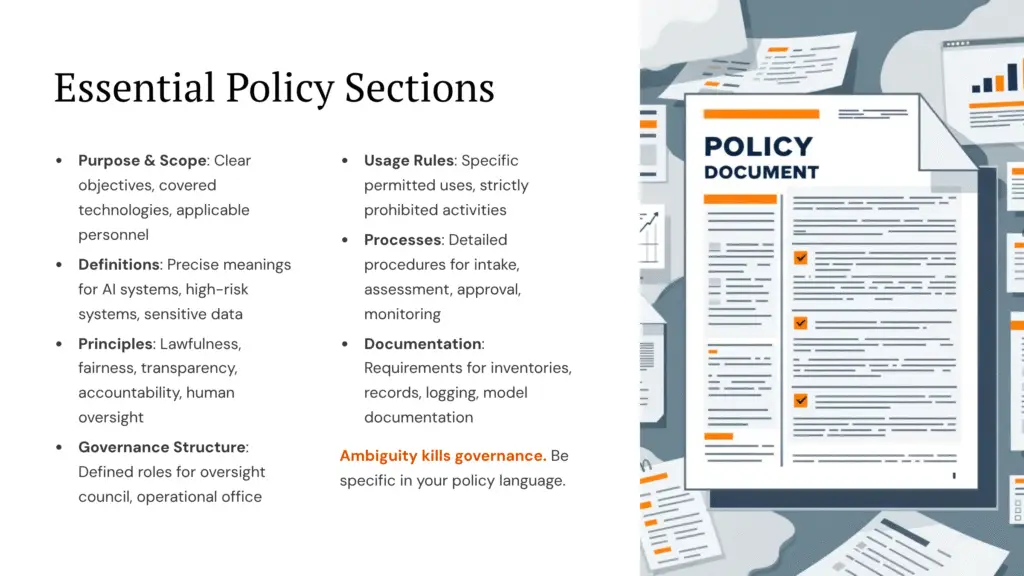

Essential Policy Sections

Purpose & Scope: Clear objectives, covered technologies, applicable personnel, organizational boundaries.

Definitions: Precise meanings for AI systems, high-risk systems, sensitive data, prohibited practices. Ambiguity kills governance.

Principles: Lawfulness, fairness, transparency, accountability, human oversight, security, privacy commitments.

Governance Structure: Defined roles for oversight council, operational office, system owners, all personnel.

Usage Rules: Specific permitted uses, strictly prohibited activities, data governance requirements, oversight models.

Processes: Detailed procedures for intake, assessment, approval, monitoring, incident management.

Documentation: Requirements for inventories, records, logging, model documentation.

Sample Policy Language (Illustrative Examples)

The following examples are provided for demonstration purposes only. Each organization must develop customized policy language that reflects their specific risk profile, regulatory requirements, and operational context.

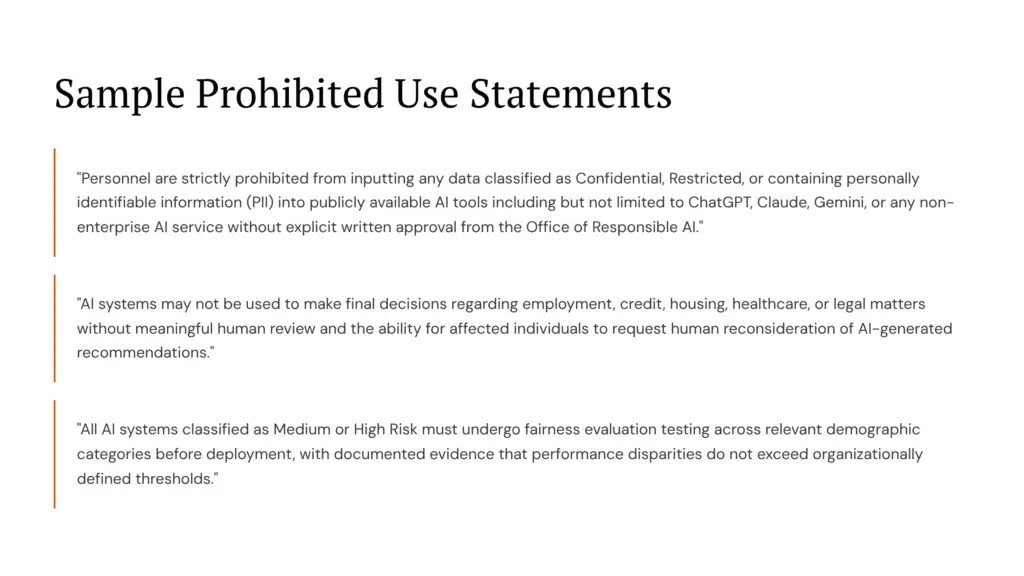

Sample Prohibited Use Statements

Data Protection Example: “Personnel are strictly prohibited from inputting any data classified as Confidential, Restricted, or containing personally identifiable information (PII) into publicly available AI tools including but not limited to ChatGPT, Claude, Gemini, or any non-enterprise AI service without explicit written approval from the Office of Responsible AI.”

Decision-Making Restrictions: “AI systems may not be used to make final decisions regarding employment, credit, housing, healthcare, or legal matters without meaningful human review and the ability for affected individuals to request human reconsideration of AI-generated recommendations.”

Bias Prevention Requirement: “All AI systems classified as Medium or High Risk must undergo fairness evaluation testing across relevant demographic categories before deployment, with documented evidence that performance disparities do not exceed organizationally defined thresholds.”
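
The bias prevention sample only becomes auditable once "performance disparities" and the threshold are defined. Here is one possible sketch, assuming accuracy as the metric and the gap between the best- and worst-served group as the disparity measure. The function names, sample data, and the 0.10 threshold are illustrative, not a recommended standard.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, actual_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}

def fairness_gate(records, max_gap):
    """Return (passed, gap), where gap is best-group accuracy minus worst-group accuracy."""
    scores = accuracy_by_group(records)
    gap = max(scores.values()) - min(scores.values())
    return gap <= max_gap, gap

# Hypothetical evaluation results: group A is 3/4 correct, group B is 2/4 correct.
sample = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 1, 0),
    ("B", 1, 1), ("B", 0, 1), ("B", 1, 0), ("B", 0, 0),
]
passed, gap = fairness_gate(sample, max_gap=0.10)
print(passed, gap)
```

Other metrics (selection rate, false positive rate) may suit a given system better; the policy should name which one applies.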

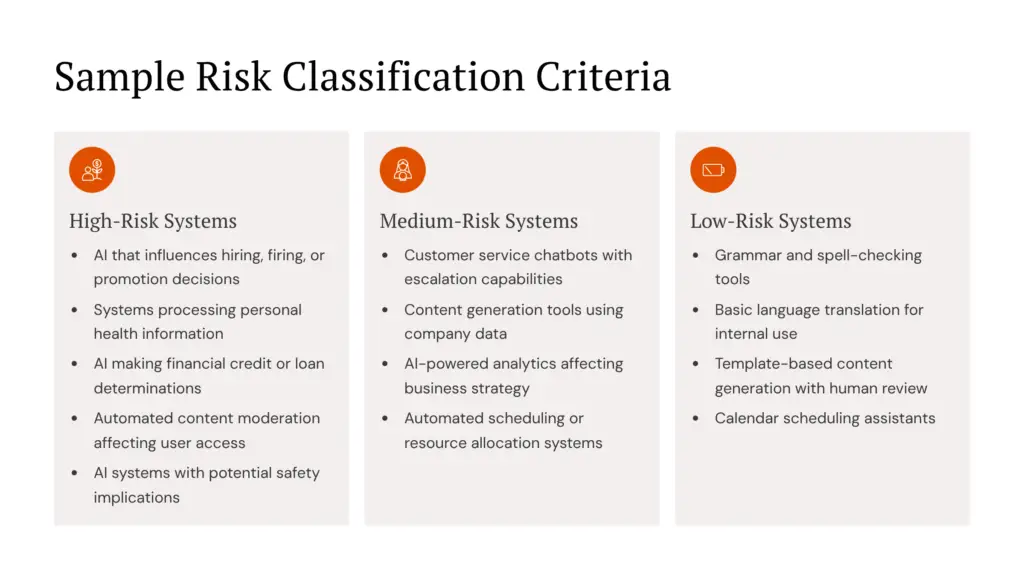

Sample Risk Classification Criteria

High-Risk Systems:

- AI that influences hiring, firing, or promotion decisions

- Systems processing personal health information

- AI making financial credit or loan determinations

- Automated content moderation affecting user access

- AI systems with potential safety implications

Medium-Risk Systems:

- Customer service chatbots with escalation capabilities

- Content generation tools using company data

- AI-powered analytics affecting business strategy

- Automated scheduling or resource allocation systems

Low-Risk Systems:

- Grammar and spell-checking tools

- Basic language translation for internal use

- Template-based content generation with human review

- Calendar scheduling assistants

These classifications are illustrative examples. Organizations must develop risk categories based on their specific use cases, industry requirements, and regulatory obligations.

Template Resources for Implementation

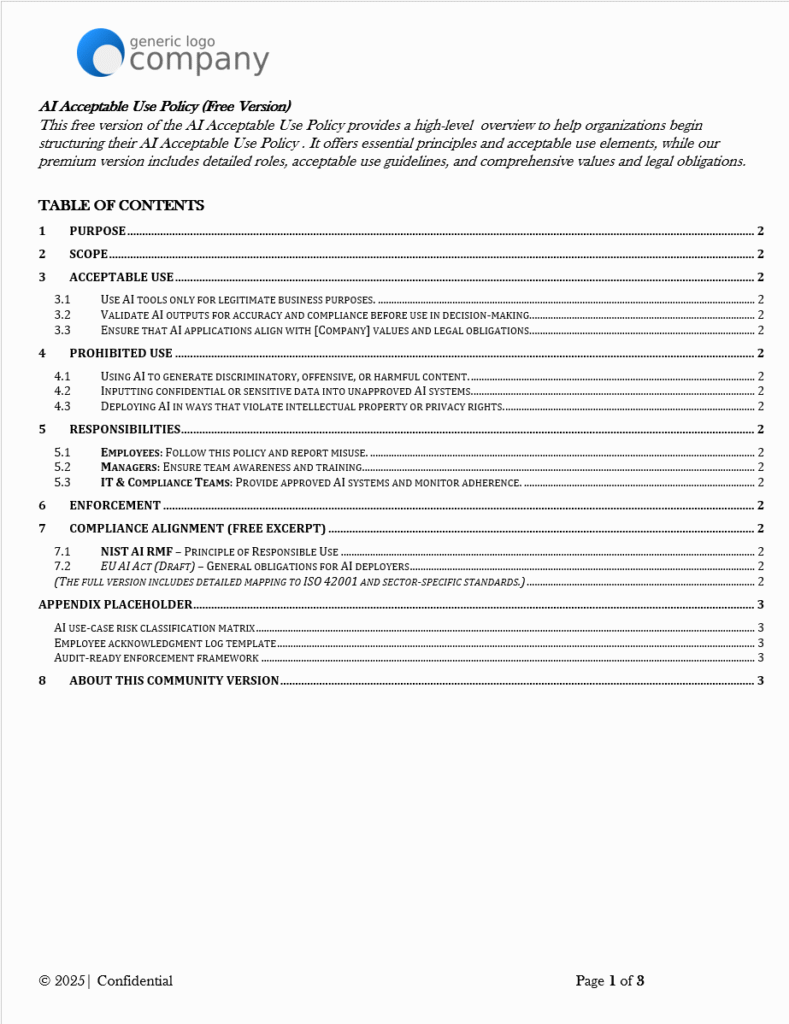

Developing comprehensive AI governance policies from scratch is often a multi-week effort, requiring coordination across legal, security, and IT teams. Tech Jacks Solutions offers pre-built AI Acceptable Use Policy templates designed to accelerate implementation:

Community Edition: Using ready-made templates can significantly accelerate policy development, often cutting the drafting cycle down to a fraction of the time compared to starting with a blank page.

Professional Edition: Comprehensive policy suite including intake forms, impact assessment templates, and governance procedures. Organizations adopting structured templates typically find they can move from first draft to approval much faster, since the baseline structure is already in place.

These templates serve as starting points and must be customized to reflect each organization’s specific risk profile, regulatory requirements, and operational context.

Implementation: 90-Day Rollout

Policy documents don’t implement themselves. Success requires change management focused on enablement rather than mandate.

Days 1-30: Launch

Announce Properly: Executive presentations, company communications, intranet features. Establish official channels for questions.

Train Everyone: Mandatory web-based training covering policy purpose, principles, definitions, examples. Specialized sessions for developers, data scientists, procurement teams.

Days 31-60: Integration

Embed Processes: AI intake forms become mandatory for project initiation and IT procurement. No exceptions.

Require Assessments: Impact assessments become deliverables within development lifecycles for medium and high-risk projects.

Provide Support: Weekly office hours for guidance. Start building system inventory with known enterprise systems.

Days 61-90: Optimization

Review Performance: First analysis of submissions to identify challenges and improvement opportunities. Survey early adopters for feedback.

Make Improvements: Update templates, guidance, training based on experience. Publish first governance dashboard showing metrics.

Real-World Examples: Who’s Already Winning

Leading organizations aren’t waiting for regulations to force their hand. Here’s what early movers have accomplished:

Technology Leaders Setting the Standard

AWS: First Mover Advantage: In a significant industry milestone, AWS achieved ISO/IEC 42001 certification for Amazon Bedrock and other services. This makes them “the first major cloud provider to do so” according to their official announcement. No publicly verifiable success metrics are available, but the certification itself represents substantial competitive positioning.

Microsoft’s Systematic Approach: Microsoft operates under six core principles for responsible AI: Fairness, Reliability & Safety, Privacy & Security, Inclusiveness, Transparency, and Accountability. Their approach demonstrates how large organizations can operationalize AI ethics at scale.

Google’s Comprehensive Framework: Google’s AI Principles guide development and deployment through governance processes covering “model development, application deployment, and post-launch monitoring.” Additionally, Google Cloud Platform services are ISO/IEC 42001:2023 compliant.

Enterprise and Government Leadership

Salesforce’s Practical Implementation: Their AI Acceptable Use Policy includes specific restrictions: prohibiting use of their services “for certain automated decision-making processes with legal effects unless there is meaningful human review.”

Government Standards in Action: The U.S. Department of Homeland Security demonstrates government-scale AI governance, requiring all AI use to be “lawful, safe, secure, responsible, trustworthy, and human-centered.”

What’s notable about these examples? None started with perfect policies. They started with frameworks and improved iteratively.

Quick Classification Guide for Common AI Tools

This guide provides illustrative examples for demonstration purposes. Organizations must evaluate their specific use cases and regulatory requirements when classifying AI systems.

Frequently Used AI Tools – Risk Assessment

| AI Tool/Service | Typical Use Case | Suggested Risk Level | Key Considerations |
| --- | --- | --- | --- |
| ChatGPT/Claude (Free) | Content drafting, brainstorming | Medium-High | Data input restrictions critical |
| ChatGPT Enterprise | Business content creation | Low-Medium | With proper data controls |
| Grammarly Business | Writing assistance | Low | Limited data processing |
| Salesforce Einstein | CRM insights, lead scoring | Medium | Customer data involvement |
| Custom ML Models | Recommendations, predictions | High | Depends on data and decisions |
| GitHub Copilot | Code generation | Medium | IP and security considerations |
| Canva AI Features | Design assistance | Low | Creative applications |
| Zoom AI Companion | Meeting summaries | Medium | Privacy and confidentiality |

Decision Framework Questions

Ask yourself these questions to determine risk level:

- Data Sensitivity: Does this AI process confidential, personal, or proprietary data?

- Decision Impact: Can this AI’s outputs directly affect individuals’ rights, opportunities, or access to services?

- Human Oversight: Is there meaningful human review before AI outputs are used for important decisions?

- Error Consequences: What happens if this AI makes a mistake or produces biased results?

- Regulatory Scope: Does this use case fall under specific regulatory requirements (EU AI Act high-risk categories)?

These questions serve as general guidance. Each organization should develop detailed classification criteria based on their specific risk tolerance and regulatory obligations.
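
The five questions above can be turned into a first-pass triage rule. Here is a minimal sketch, assuming yes/no answers and a simple precedence: regulatory scope, or high impact without human review, pushes a use case to High. The field names and the rule itself are assumptions for illustration; your own criteria should replace them.

```python
from dataclasses import dataclass

@dataclass
class UseCaseAnswers:
    """Yes/no answers to the five decision framework questions (hypothetical field names)."""
    processes_sensitive_data: bool
    affects_individual_rights: bool
    has_human_review: bool
    errors_cause_serious_harm: bool
    in_regulated_high_risk_category: bool

def suggest_risk_level(a: UseCaseAnswers) -> str:
    """Return a suggested tier; a human reviewer still makes the final call."""
    if a.in_regulated_high_risk_category:
        return "High"
    high_impact = a.affects_individual_rights or a.errors_cause_serious_harm
    if high_impact and not a.has_human_review:
        return "High"
    if high_impact or a.processes_sensitive_data:
        return "Medium"
    return "Low"

grammar_checker = UseCaseAnswers(False, False, True, False, False)
meeting_summaries = UseCaseAnswers(True, False, True, False, False)
resume_screening = UseCaseAnswers(True, True, True, True, True)

for answers in (grammar_checker, meeting_summaries, resume_screening):
    print(suggest_risk_level(answers))
```

A rule like this is a starting point for the intake review, not a substitute for it.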

Policies need practical tools that operationalize requirements into daily workflows.

Intake Forms

Every new AI project starts with structured intake capturing business problems, data sources, sensitivity levels, users, decision autonomy, risk assessments, success metrics.
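
A structured intake form is easier to enforce when every listed field is required. Here is a minimal sketch, assuming free-text answers and a simple completeness check. The class and field names mirror the items above but are otherwise hypothetical.

```python
from dataclasses import dataclass, fields

@dataclass
class AIIntakeForm:
    """One free-text answer per intake item; all field names are illustrative."""
    business_problem: str
    data_sources: str
    data_sensitivity: str
    intended_users: str
    decision_autonomy: str
    initial_risk_assessment: str
    success_metrics: str

def missing_fields(form: AIIntakeForm) -> list[str]:
    """List the fields left empty or whitespace-only, in form order."""
    return [f.name for f in fields(form) if not getattr(form, f.name).strip()]

# Hypothetical submission with two items left blank.
draft = AIIntakeForm(
    business_problem="Reduce support ticket backlog",
    data_sources="Help desk tickets",
    data_sensitivity="Contains customer PII",
    intended_users="Support agents",
    decision_autonomy="   ",
    initial_risk_assessment="Medium (self-assessed)",
    success_metrics="",
)
print(missing_fields(draft))
```

Rejecting incomplete submissions at this step keeps gaps from surfacing later in the impact assessment.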

Impact Assessments

Medium and high-risk systems need comprehensive analysis covering stakeholder impacts, fairness considerations, bias evaluation, privacy implications, safety concerns, data governance, technical performance, security testing, human oversight plans, specific mitigation strategies.

Governance Roles

Clear accountability prevents gaps:

Oversight Council: Senior leadership for strategy, policy approval, high-risk decisions.

Operational Office: Daily governance including intake reviews, assessments, inventory management.

System Owners: Individual accountability for specific systems throughout their lifecycles.

Why This Matters

Organizations implementing comprehensive AI governance realize significant advantages.

Risk Protection

Documented governance provides defensible positions during regulatory inquiries. Systematic processes create auditable evidence of risk-based management approaches. Framework alignment ensures regulatory readiness while reducing compliance costs.

Business Benefits

Clear guidelines empower confident experimentation within boundaries. Public responsible AI commitments build stakeholder trust. Mature governance enables faster, safer adoption than competitors without frameworks.

Operational Improvements

Standardized processes reduce decision time while ensuring consistent evaluation. Centralized documentation creates learning repositories. Mandatory testing improves system reliability.

Measuring Success

Governance requires ongoing measurement and improvement.

Coverage Metrics: Percentage of AI systems in inventory, training completion rates, exception request patterns.

Risk Management: High-risk systems with completed assessments, decision timeframes by risk tier, mitigation effectiveness scores.

Operational Health: Incident detection and resolution times, monitoring alert closure rates, employee satisfaction with processes.
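
Most of these metrics reduce to simple ratios once the counts are collected. Here is a minimal sketch of a governance snapshot, assuming the counts come from your inventory, training system, and assessment tracker. The dictionary keys and sample numbers are hypothetical.

```python
def percentage(part: int, whole: int) -> float:
    """Percentage rounded to one decimal; an empty population is reported as 0.0."""
    if whole == 0:
        return 0.0
    return round(100 * part / whole, 1)

def governance_snapshot(counts: dict) -> dict:
    """Compute coverage and risk-management ratios from raw counts."""
    return {
        "inventory_coverage_pct": percentage(
            counts["systems_inventoried"], counts["systems_discovered"]
        ),
        "training_completion_pct": percentage(
            counts["staff_trained"], counts["staff_total"]
        ),
        "high_risk_assessed_pct": percentage(
            counts["high_risk_assessed"], counts["high_risk_total"]
        ),
    }

# Hypothetical quarter-end counts.
snapshot = governance_snapshot({
    "systems_inventoried": 45, "systems_discovered": 60,
    "staff_trained": 180, "staff_total": 200,
    "high_risk_assessed": 6, "high_risk_total": 8,
})
print(snapshot)
```

Tracking the same ratios each quarter shows whether coverage is improving or stalling.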

Future-Proofing Your Framework

Technology and regulations evolve rapidly. Sustainable governance needs built-in adaptability.

Change Management: Formal procedures ensure policies stay current with technological advances, regulatory updates, organizational learning.

Technology Anticipation: Flexible definitions encompass future AI capabilities like advanced multimodal systems and autonomous agents.

Regulatory Monitoring: Active tracking enables proactive updates rather than reactive scrambling.

Success comes from treating AI governance as competitive advantage rather than compliance burden. Well-designed policies enable innovation safely while building essential trust for long-term success.

The organizations thriving in AI adoption have one thing in common: they started governance early and iterated continuously. Don’t wait for the next regulatory surprise or AI incident to force your hand.

Your Next Steps:

- Start with inventory – List all AI tools currently used in your organization

- Classify using the guide above – Determine risk levels for each tool

- Assemble your working group – Get Legal, IT, Security, and business representatives

- Choose your approach: Build from scratch (20-40 hours) or start with proven templates

- Begin with high-risk systems – Address your biggest exposures first

Need expertise developing AI governance frameworks? Building effective policies requires knowledge across technology, legal, and regulatory domains. Specialized templates and guidance can accelerate your governance journey while avoiding common implementation pitfalls – reducing development time by 60-85% compared to starting from scratch.

Try our free Community Edition AI Acceptable Use Policy to get started.

Our Professional Edition AI Acceptable Use Policy Covers Organization and Enterprise Needs.

Resources and References

Regulatory Frameworks

- EU AI Act – European Commission

- EU AI Act Explorer

- NIST AI Risk Management Framework

- NIST AI RMF 1.0 Publication

- ISO/IEC 42001 Standard

Industry Examples and Implementations

- AWS ISO/IEC 42001 Certification

- Microsoft Responsible AI

- Google AI Principles

- Salesforce AI Acceptable Use Policy

- U.S. Department of Homeland Security AI Governance

Definitions of Key Terms

Clearly define critical terms like “Generative AI,” “Sensitive Data,” “Algorithmic Bias,” and “High-Risk AI Use Cases.” Providing precise definitions helps maintain consistency and clarity across the organization.

Examples:

- AI Acceptable Use Policy (AUP): A document outlining the allowed and restricted ways in which artificial intelligence technologies can be used within an organization.

- Generative AI (GenAI): A type of artificial intelligence that can generate new content, such as text, images, audio, and video, based on the data it has been trained on.

- Ethical Principles (in AI): Fundamental values and guidelines that should govern the development and use of AI technologies, such as fairness, transparency, accountability, and beneficence.

- Data Privacy: The right of individuals to control how their personal information is collected, used, and disclosed. Regulations like GDPR and CCPA/CPRA aim to protect data privacy.

- Intellectual Property Rights: Legal rights that protect creations of the mind, such as inventions, literary and artistic works, designs, and symbols. Examples include patents, copyrights, and trademarks.

- Bias (in AI): Systemic errors in AI algorithms that result in unfair or discriminatory outcomes for certain groups of people. Bias can be present in training data or introduced during model development.

- Guardrails (in AI): Mechanisms and controls implemented to ensure that AI systems operate safely, ethically, and within acceptable boundaries. This includes filtering inputs and outputs.

- Compliance: Adherence to laws, regulations, standards, contractual obligations, and internal policies.

- Risk Management Framework: A structured approach to identifying, assessing, and controlling potential risks within an organization.

- Transparency (in AI): The extent to which the workings and decision-making processes of an AI system are understandable and explainable to humans.