AI Bias Assessment — Automated Assessment Tool with Real-Time Compliance Scoring

A structured, multi-framework bias assessment template designed to support organizations in evaluating AI bias risks across 9 lifecycle stages with automated compliance dashboards, gap tracking, and executive-level risk indicators.

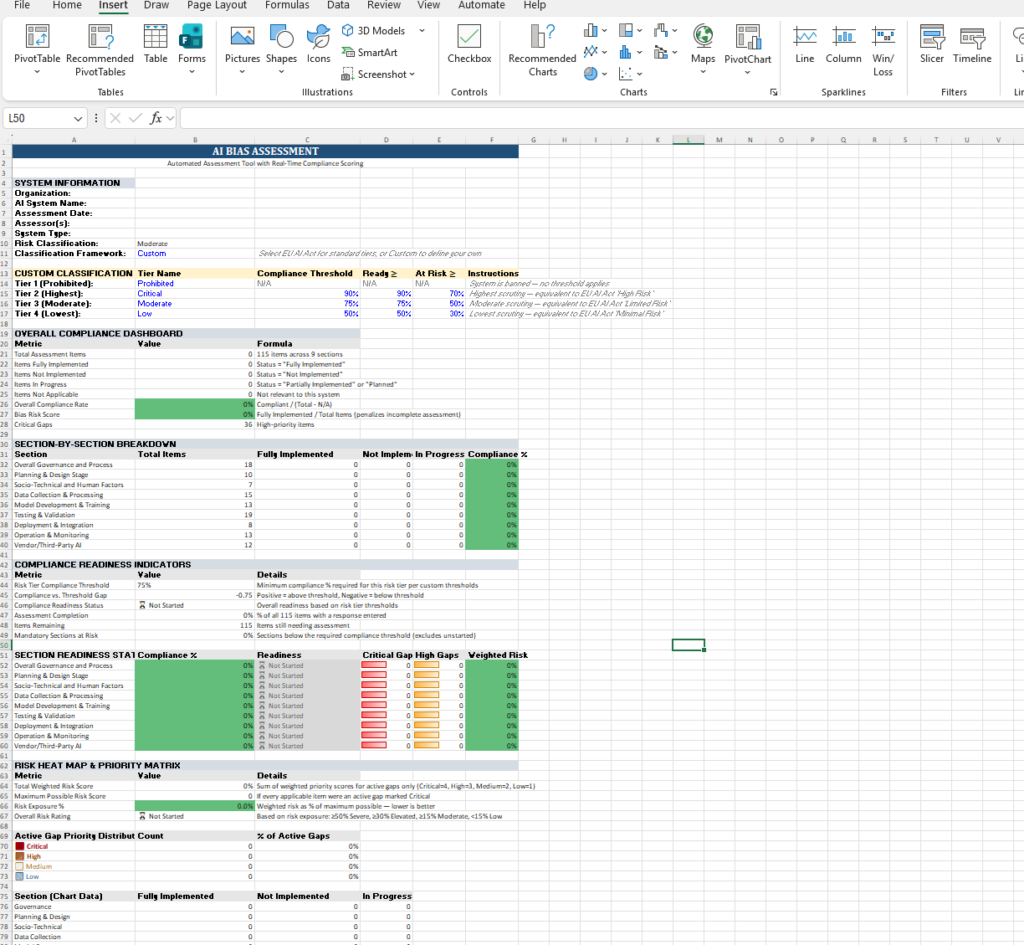

This template provides a structured self-evaluation framework containing 115 assessment items across 9 AI lifecycle sections. Each item maps to specific references (clauses, articles, sections, or functions) in 7 recognized standards and regulations. The workbook includes automated formulas that calculate compliance rates, weighted risk scores, and section-level readiness indicators in real time as assessors complete the checklist. Organizations will need to enter their own responses and evidence documentation and adjust priority weightings to reflect their specific AI systems, deployment contexts, and regulatory exposure. The template is designed to reduce the time required to build a bias assessment program from scratch by providing pre-mapped framework references, built-in scoring logic, and a ready-to-use gap analysis tracker.

Key Benefits

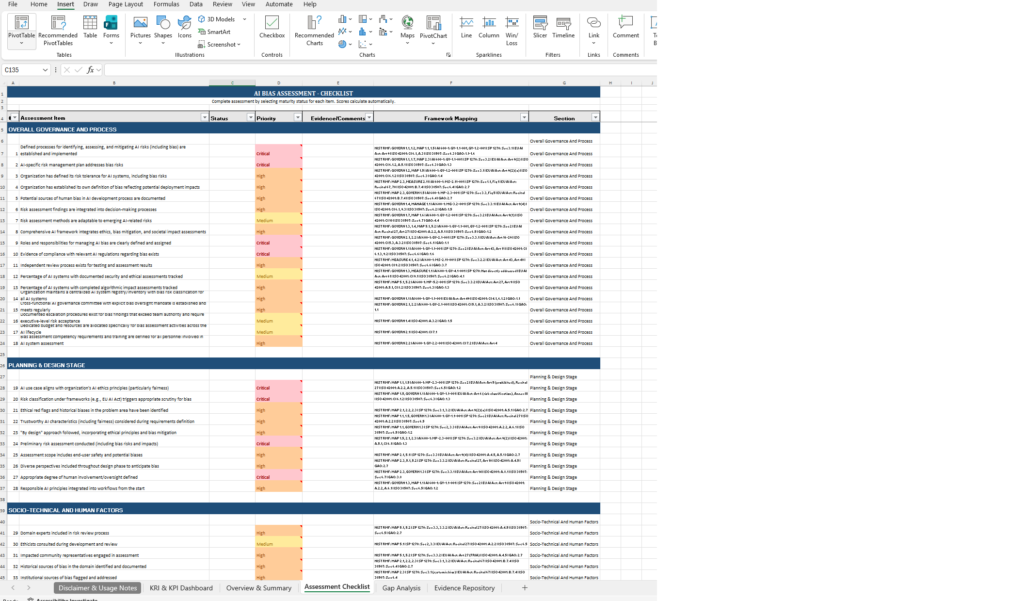

- ✅ Provides a 115-item assessment checklist covering Governance, Planning & Design, Socio-Technical Factors, Data Collection, Model Development, Testing & Validation, Deployment, Operations, and Vendor/Third-Party AI

- ✅ Includes automated real-time compliance scoring with formulas that calculate section-by-section and overall compliance rates

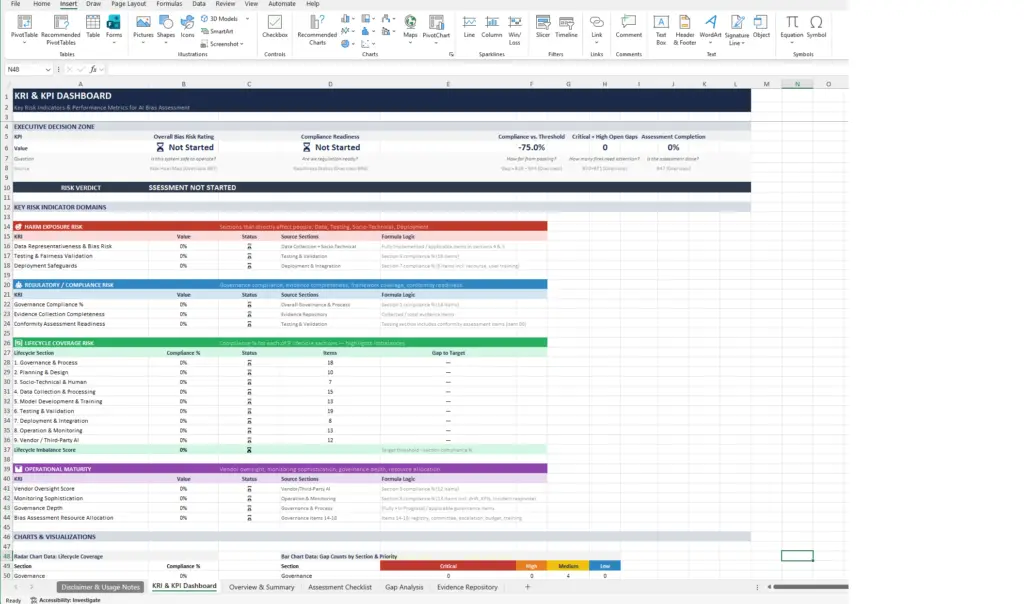

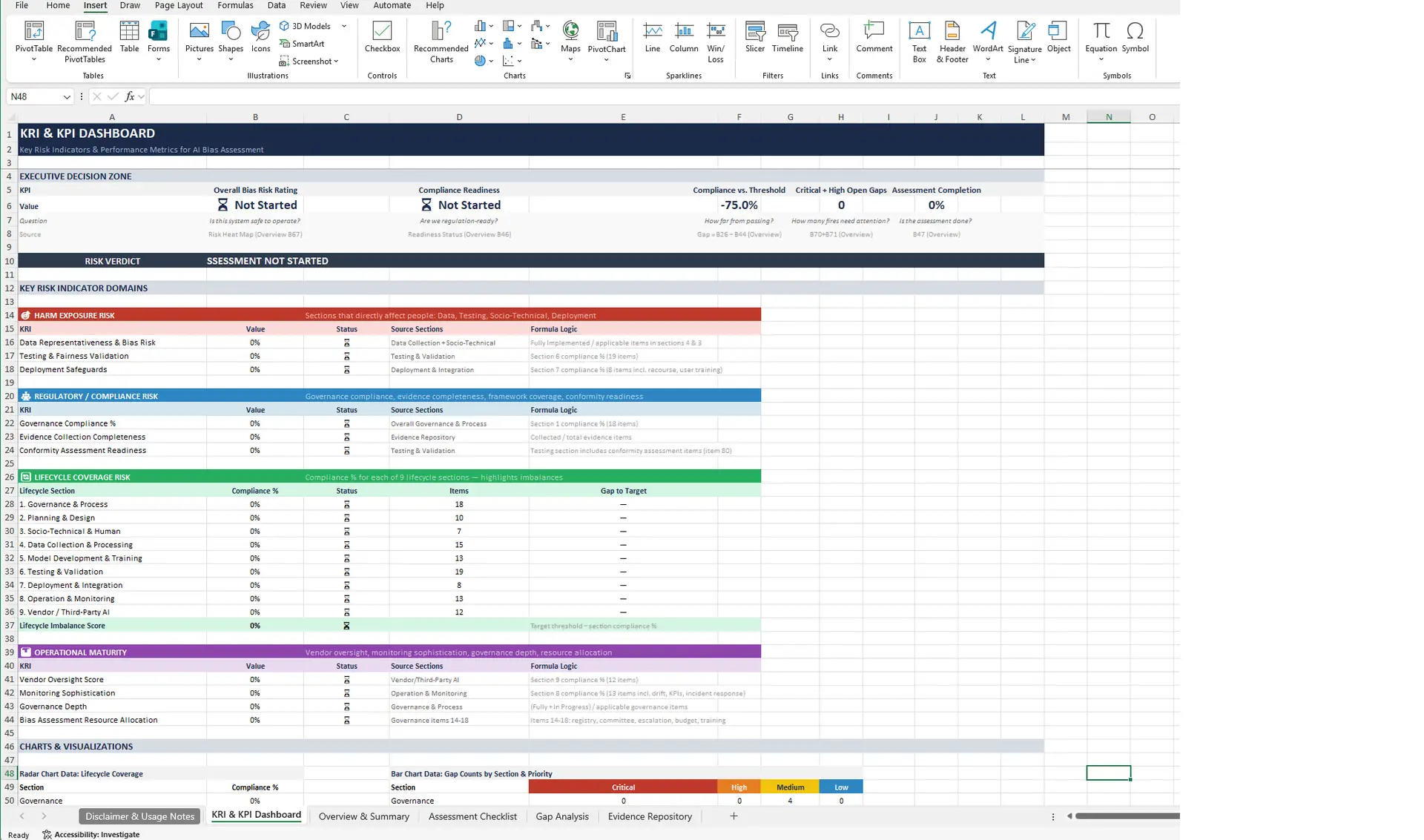

- ✅ Features a KRI & KPI Dashboard with executive-level risk indicators, lifecycle coverage analysis, and chart-ready data

- ✅ Contains built-in gap analysis that auto-populates non-compliant items with fields for target dates and remediation owners

- ✅ Supports dual risk classification modes: EU AI Act tiers (Unacceptable/High/Limited/Minimal) and fully customizable tiers with adjustable thresholds

- ✅ Maps each assessment item to specific references across NIST AI RMF 1.0, NIST AI 600-1, NIST SP 1270, EU AI Act articles and recitals, ISO/IEC 42001:2023, ISO/IEC 38507:2022, and GAO AI Accountability Framework

- ✅ Includes a 35-item Evidence Repository organized by category (Bias Assessment, Data, Testing, Governance, Monitoring & Operations) with status tracking and document location fields

- ✅ Provides a weighted Risk Heat Map with severity ratings (LOW / MODERATE / ELEVATED / SEVERE) based on priority-weighted gap scores
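The workbook's exact heat-map formulas are not described here, so the following is only a minimal sketch of how a priority-weighted gap score could drive the LOW / MODERATE / ELEVATED / SEVERE labels. The priority weights, the gap size assigned to each maturity level, and the severity cutoffs are all hypothetical values chosen for illustration; they would need to be replaced with the template's own settings.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical priority weights; the workbook's actual weights may differ.
PRIORITY_WEIGHTS = {"Critical": 4, "High": 3, "Medium": 2, "Low": 1}

# Hypothetical gap size per maturity level (1.0 = full gap, 0.0 = no gap).
GAP_BY_STATUS = {
    "Fully Implemented": 0.0,
    "Partially Implemented": 0.5,
    "Planned": 0.75,
    "Not Implemented": 1.0,
}

# Hypothetical cutoffs on the normalized score (0 to 1), most severe first.
SEVERITY_CUTOFFS = [(0.60, "SEVERE"), (0.40, "ELEVATED"), (0.20, "MODERATE")]


@dataclass
class Item:
    section: str
    priority: str  # Critical, High, Medium, or Low
    status: str    # a GAP_BY_STATUS key, "N/A", or "" if not yet assessed


def weighted_gap_score(items: list[Item]) -> float:
    """Priority-weighted share of the maximum possible gap; N/A and blank items are skipped."""
    scored = [i for i in items if i.status in GAP_BY_STATUS]
    max_gap = sum(PRIORITY_WEIGHTS[i.priority] for i in scored)
    if max_gap == 0:
        return 0.0
    actual_gap = sum(PRIORITY_WEIGHTS[i.priority] * GAP_BY_STATUS[i.status] for i in scored)
    return actual_gap / max_gap


def severity(score: float) -> str:
    """Map a normalized gap score to a heat-map label."""
    for cutoff, label in SEVERITY_CUTOFFS:
        if score >= cutoff:
            return label
    return "LOW"


section_items = [
    Item("Data Collection & Processing", "Critical", "Not Implemented"),
    Item("Data Collection & Processing", "High", "Fully Implemented"),
    Item("Data Collection & Processing", "Low", "N/A"),
]
score = weighted_gap_score(section_items)
print(round(score, 3), severity(score))  # prints: 0.571 ELEVATED
```

Normalizing by the maximum possible weighted gap keeps scores comparable between sections with different item counts; running the function once per lifecycle section would yield one heat-map cell per section.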

Who Uses This?

Designed for:

- AI Governance Officers and AI Ethics Leads responsible for bias oversight programs

- Risk Managers and Compliance Officers evaluating AI systems against regulatory frameworks

- Data Scientists and ML Engineers who need structured bias evaluation checkpoints during development

- Internal Audit Teams conducting AI system reviews

- Organizations preparing for or working toward EU AI Act requirements or ISO/IEC 42001 alignment

- Consultants and advisors supporting clients with AI governance program development

The template includes 6 interconnected worksheets:

- Disclaimer & Usage Notes, with a detailed step-by-step guide, priority customization guidance, and a KRI dashboard interpretation guide

- KRI & KPI Dashboard

- Overview & Summary

- Assessment Checklist

- Gap Analysis & Remediation Tracker

- Evidence Repository

Why This Matters

AI bias isn’t an abstract concern reserved for academic papers. When a hiring algorithm filters out qualified candidates based on proxy variables, or a credit scoring model produces disparate outcomes across demographic groups, the consequences are concrete and measurable. Regulations are catching up to this reality. The EU AI Act establishes binding legal requirements for high-risk AI systems, including specific articles addressing data quality, bias mitigation, human oversight, and conformity assessment. NIST’s AI Risk Management Framework and its companion publications (AI 600-1 for generative AI, SP 1270 for bias specifically) provide voluntary but increasingly referenced guidance for structuring AI governance programs.

The challenge for most organizations isn’t awareness that bias matters. It’s knowing where to start, what to measure, and how to document progress in a way that maps to recognized frameworks. Building a bias assessment program from scratch means interpreting hundreds of pages of standards, defining your own checklist items, creating scoring logic, and designing reporting structures. That’s before anyone actually assesses a single AI system.

This template provides that starting structure. It translates requirements from 7 frameworks into 115 specific, assessable items organized by lifecycle stage, with pre-built scoring, gap tracking, and executive reporting. It doesn’t replace the judgment calls your team needs to make about risk tolerance and control implementation. It provides the organized framework for making and documenting those calls.

Framework Alignment

- NIST AI Risk Management Framework (AI RMF 1.0): Assessment items map to GOVERN, MAP, MEASURE, and MANAGE functions with specific sub-function references

- NIST AI 600-1: Includes Generative AI profile identifiers (GV, MP, MS, MG series) for items relevant to GenAI-specific bias risks

- NIST SP 1270: References "Towards a Standard for Identifying and Managing Bias in Artificial Intelligence," including its treatment of systemic, statistical/computational, and human biases and the socio-technical context in which they arise

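- EU AI Act: Maps to specific Articles (Art 5, 6, 9, 10, 13, 14, 25, 27, 43, 72, 86) and Recitals addressing prohibited practices, high-risk classification and requirements (including risk management, data governance, transparency, and human oversight), value-chain responsibilities, fundamental rights impact assessment, conformity assessment, post-market monitoring, and the right to explanation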

- ISO/IEC 42001:2023: References AI Management System (AIMS) clauses (Cl 4-10), Annex A controls, and Annex B implementation guidance relevant to bias governance and assessment

- ISO/IEC 38507:2022: References the governance implications of AI use (Sec 6.1-6.7) relevant to organizational oversight of AI systems

- GAO AI Accountability Framework: Maps to governance, data, performance, and monitoring practice areas

Key Features

- 115 Assessment Items Across 9 Lifecycle Sections: Governance & Process (18 items), Planning & Design (10 items), Socio-Technical & Human Factors (7 items), Data Collection & Processing (15 items), Model Development & Training (13 items), Testing & Validation (19 items), Deployment & Integration (8 items), Operation & Monitoring (13 items), and Vendor/Third-Party AI (12 items)

- Five-Level Maturity Scale: Each item uses Fully Implemented, Partially Implemented, Planned, Not Implemented, or N/A rather than binary Yes/No, providing more granular insight into implementation depth

- Four-Level Priority Weighting: Items carry Critical, High, Medium, or Low priority based on framework criticality signals, with pre-populated defaults and guidance for customization based on regulatory exposure, harm potential, compensating controls, system type, and deployment context

- Automated Real-Time Dashboards: Overview & Summary tab includes compliance dashboard, section-by-section breakdown, compliance readiness indicators, section readiness status, risk heat map, and active gap priority distribution, all calculated via live formulas

- KRI & KPI Dashboard: Executive Decision Zone with 5 KPI cards (Overall Bias Risk Rating, Compliance Readiness, Compliance vs. Threshold, Critical + High Open Gaps, Assessment Completion), plus domain-level KRIs for Harm Exposure, Regulatory/Compliance, Lifecycle Coverage, and Operational Maturity

- Auto-Populating Gap Analysis: Non-compliant items (Not Implemented, Partially Implemented, Planned) automatically surface in the Gap Analysis & Remediation Tracker with fields for target dates and owners

- Evidence Repository: 35 documentation items organized across 5 categories (Bias Assessment, Data, Testing, Governance, Monitoring & Operations) with status tracking (Collected/Pending/Missing/N/A) and document location fields

- Dual Classification Mode: Supports EU AI Act risk tiers with pre-set thresholds or fully customizable tiers where organizations define their own tier names, compliance thresholds, and readiness cutoffs
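In the workbook, non-compliant items surface in the Gap Analysis & Remediation Tracker automatically. The Python sketch below mirrors that selection logic outside the spreadsheet for illustration only: it keeps items marked Not Implemented, Partially Implemented, or Planned and creates tracker rows with blank target-date and owner fields. The field names, item IDs, and sample framework references are hypothetical, and sorting by priority is an assumed convenience rather than a documented feature of the template.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional

# Statuses the template treats as non-compliant gaps.
GAP_STATUSES = {"Not Implemented", "Partially Implemented", "Planned"}

# Assumed display order for the tracker (most urgent first).
PRIORITY_ORDER = {"Critical": 0, "High": 1, "Medium": 2, "Low": 3}


@dataclass
class ChecklistItem:
    item_id: str  # hypothetical identifier
    description: str
    priority: str
    status: str
    framework_ref: str


@dataclass
class GapRow:
    item_id: str
    description: str
    priority: str
    status: str
    framework_ref: str
    target_date: Optional[date] = None  # filled in by the assessor
    owner: str = ""                     # remediation owner, filled in by the assessor


def build_gap_tracker(checklist: list[ChecklistItem]) -> list[GapRow]:
    """Select non-compliant items and return tracker rows, most urgent priority first."""
    gaps = [
        GapRow(i.item_id, i.description, i.priority, i.status, i.framework_ref)
        for i in checklist
        if i.status in GAP_STATUSES
    ]
    # sorted() is stable, so items keep checklist order within each priority.
    return sorted(gaps, key=lambda g: PRIORITY_ORDER[g.priority])


checklist = [
    ChecklistItem("GOV-01", "Bias governance policy approved", "High", "Fully Implemented", "ISO/IEC 42001 Cl 5"),
    ChecklistItem("DAT-03", "Training data representativeness assessed", "Critical", "Partially Implemented", "EU AI Act Art 10"),
    ChecklistItem("MON-02", "Post-deployment bias monitoring", "Medium", "Planned", "EU AI Act Art 72"),
    ChecklistItem("VEN-05", "Vendor model bias documentation", "Low", "N/A", "GAO AI Accountability Framework"),
]
for row in build_gap_tracker(checklist):
    print(row.item_id, row.priority, row.status)
```

Fully Implemented and N/A items never appear in the tracker, which matches the behavior described for the Gap Analysis tab; everything else about the row layout is illustrative.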

Comparison Table: Building From Scratch vs. Using This Template

| Area | Starting From Scratch | This Template |
|---|---|---|
| Framework Mapping | Requires interpreting 7 separate standards and manually identifying relevant requirements | 115 items pre-mapped to specific clauses, articles, and sections across all 7 frameworks |
| Scoring Logic | Requires designing a compliance calculation methodology, weighting system, and readiness thresholds from scratch | Automated formulas calculate compliance rates, weighted risk scores, and readiness indicators in real time |
| Gap Tracking | Requires building a separate tracking system and manually identifying non-compliant items | Gap Analysis tab auto-populates with all non-compliant items and includes remediation fields |
| Executive Reporting | Requires designing dashboards, KRI/KPI structures, and visualization frameworks | KRI & KPI Dashboard with executive decision zone, domain-level risk indicators, and chart-ready data |
| Evidence Management | Requires defining documentation requirements and building collection tracking | 35-item Evidence Repository with categories, status tracking, and location fields |
| Risk Classification | Requires defining risk tiers, thresholds, and readiness criteria | Dual mode: EU AI Act pre-set tiers or fully customizable organization-defined tiers |

FAQ

Q: What frameworks does this assessment template map to? A: Each of the 115 assessment items maps to specific references across NIST AI RMF 1.0, NIST AI 600-1, NIST SP 1270, EU AI Act (Articles and Recitals), ISO/IEC 42001:2023, ISO/IEC 38507:2022, and GAO AI Accountability Framework. Framework mappings are provided in Column F of the Assessment Checklist for traceability. Organizations should verify references against their own licensed copies of applicable standards.

Q: Do I need to complete all 115 items? A: No. The dashboard tracks completion progress and all metrics update incrementally. Items can be marked N/A if not applicable to your AI system or organizational context. N/A items are automatically excluded from compliance rate calculations.
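As a rough illustration of how N/A exclusion and completion tracking can coexist, the sketch below assumes that the compliance rate is the share of applicable (answered, non-N/A) items marked Fully Implemented, and that completion is the share of all items with any response, N/A included. Both definitions are assumptions; the workbook may, for example, give partial credit to Partially Implemented items.

```python
from __future__ import annotations

# The five response options on the maturity scale.
RESPONSES = {"Fully Implemented", "Partially Implemented", "Planned", "Not Implemented", "N/A"}


def compliance_rate(statuses: list[str]) -> float | None:
    """Share of applicable items that are Fully Implemented; N/A and blank items are excluded."""
    applicable = [s for s in statuses if s in RESPONSES and s != "N/A"]
    if not applicable:
        return None  # nothing scorable yet
    return sum(s == "Fully Implemented" for s in applicable) / len(applicable)


def completion(statuses: list[str]) -> float:
    """Share of all checklist items that have any response, including N/A."""
    if not statuses:
        return 0.0
    return sum(s in RESPONSES for s in statuses) / len(statuses)


statuses = ["Fully Implemented", "N/A", "Not Implemented", "", "Fully Implemented"]
# 2 of 3 applicable items are compliant; 4 of 5 items have a response.
print(compliance_rate(statuses), completion(statuses))
```

Because N/A counts toward completion but not toward the compliance denominator, marking an inapplicable item N/A advances progress without inflating or deflating the compliance rate.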

Q: Does this template guarantee compliance with the EU AI Act or ISO/IEC 42001? A: No. This template is designed as a self-evaluation tool for internal use to help organizations gauge readiness and track progress. It does not constitute a formal audit, certification assessment, or legal compliance determination. Demonstrating EU AI Act compliance requires completing the applicable conformity assessment procedure, and ISO/IEC 42001 certification requires an audit by an accredited certification body. The NIST frameworks are voluntary and have no certification scheme.

Q: What software is required? A: The template requires Microsoft 365 (Excel for Windows, Mac, or Web) or Google Sheets; both are fully compatible. Excel 2021 (perpetual license) is only partially compatible, because the Gap Analysis tab uses HSTACK(), which is unavailable in that version. Excel 2019 and earlier are not supported because they lack the FILTER() and HSTACK() dynamic array functions the template requires.

Q: Can I customize the risk classification thresholds? A: Yes. The template supports two classification modes. EU AI Act mode uses standard tiers (Unacceptable/High/Limited/Minimal) with pre-set compliance thresholds. Custom mode allows organizations to define their own tier names, compliance thresholds, and readiness cutoffs. Editable cells are highlighted within the Overview & Summary tab.
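One way to picture custom mode is as a lookup from a chosen tier to a required compliance threshold, followed by a readiness label based on how close the current compliance rate comes to that threshold. The sketch below is a hypothetical illustration of that idea: the tier names, thresholds, and readiness cutoffs are invented examples, not the template's EU AI Act defaults, and the "share of threshold achieved" comparison is an assumption about how a compliance-vs-threshold check could work.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    compliance_threshold: float  # minimum compliance rate expected for this tier (0 to 1)

    def __post_init__(self) -> None:
        if not 0 < self.compliance_threshold <= 1:
            raise ValueError("compliance_threshold must be in (0, 1]")


# Hypothetical organization-defined tiers; names and thresholds are examples only.
CUSTOM_TIERS = {
    t.name: t
    for t in (
        Tier("Critical Impact", 0.90),
        Tier("Moderate Impact", 0.75),
        Tier("Low Impact", 0.50),
    )
}

# Hypothetical readiness cutoffs on (compliance rate / threshold), checked top-down.
READINESS_CUTOFFS = [(1.0, "Ready"), (0.8, "Approaching"), (0.0, "Not Ready")]


def readiness(compliance_rate: float, tier: Tier) -> str:
    """Compare a compliance rate against the tier's threshold and return a readiness label."""
    ratio = compliance_rate / tier.compliance_threshold
    for cutoff, label in READINESS_CUTOFFS:
        if ratio >= cutoff:
            return label
    return "Not Ready"


print(readiness(0.81, CUSTOM_TIERS["Critical Impact"]))
print(readiness(0.60, CUSTOM_TIERS["Low Impact"]))
```

Keeping tiers and cutoffs as plain data mirrors the idea of editable cells: an organization changes the table, not the logic.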

Q: What file format is the template delivered in? A: The template is delivered as an .xlsx file optimized for Microsoft Excel and Google Sheets to ensure proper formatting, formula functionality, and collaborative editing capabilities.

Ideal For

- Organizations deploying AI systems that may fall under EU AI Act high-risk classification

- Companies building or expanding AI governance programs that need structured bias assessment tooling

- Risk and compliance teams conducting internal AI system reviews against recognized frameworks

- AI development teams seeking lifecycle-stage checkpoints for bias evaluation during development and deployment

- Consultants and advisory firms supporting clients with AI governance assessments

- Organizations pursuing or preparing for ISO/IEC 42001 certification that need bias-specific assessment coverage

- Internal audit departments building AI audit programs

Pricing Options

- Single Template: Contact for pricing based on organizational requirements and customization needs.

- Bundle Option: May be combined with additional AI governance templates (such as AI policy, risk management, or impact assessment templates), depending on organizational compliance scope.

- Enterprise Option: Available as part of comprehensive AI governance documentation suites.

⚖️ Differentiator

This template provides a structured, multi-framework bias assessment tool that maps 115 specific assessment items to 7 recognized standards and regulations across 9 AI lifecycle stages. Unlike single-framework checklists that address bias as one line item among many governance topics, this template focuses specifically on bias assessment depth, with dedicated coverage of data representativeness, proxy variable analysis, intersectional bias, feedback loop risks, fairness-accuracy trade-offs, GenAI-specific bias categories, and vendor/third-party AI oversight. The automated scoring, dual classification modes, KRI/KPI dashboard, and auto-populating gap analysis are designed to reduce the manual effort typically required to build and maintain a bias assessment program, while the Evidence Repository provides a structured approach to documentation collection that supports audit preparation.

Disclaimer: This template is designed as a self-evaluation and internal assessment tool. It does not constitute a formal audit, certification assessment, or legal compliance determination. Organizations should consult qualified legal and compliance professionals for definitive regulatory guidance. Framework mappings are provided as guidance and do not represent exhaustive coverage of any single standard.