AI Bias Assessment Checklist Template

A structured framework designed to support organizations in identifying, documenting, and addressing bias risks throughout the AI system lifecycle, with alignment to EU AI Act risk classification requirements.


This template provides a ready-to-customize checklist for conducting AI bias assessments across ten distinct lifecycle stages. Organizations can use this framework to document their bias risk identification processes, track remediation priorities, and maintain evidence for audit purposes.

The checklist requires customization to reflect your organization’s specific AI systems, risk tolerance definitions, and operational context. Although the checklist is comprehensive in scope, implementation timelines vary with organizational maturity and the complexity of the AI systems being assessed.

Key Benefits

✓ Provides a structured framework covering 96 individual assessment items across 10 lifecycle stages

✓ Includes a RACI matrix template for defining bias management responsibilities

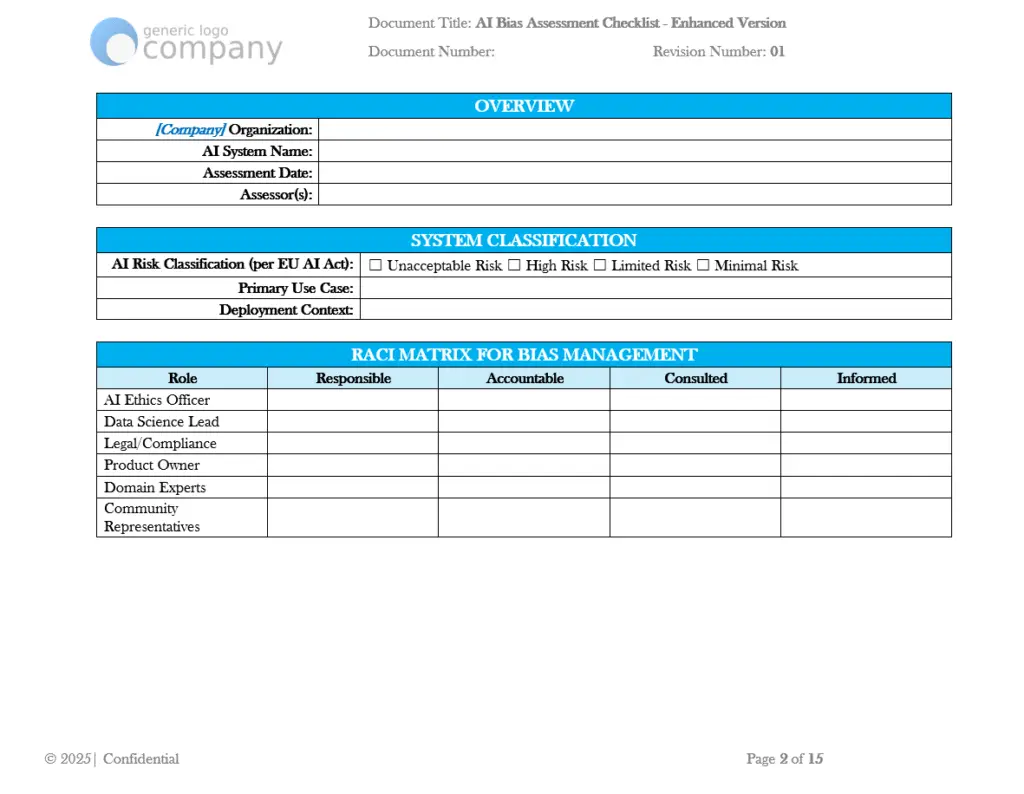

✓ Supports EU AI Act risk classification documentation (Unacceptable, High, Limited, Minimal Risk categories)

✓ Features built-in evidence and artifact tracking columns for each assessment item

✓ Contains a bias risk scoring matrix for likelihood and impact evaluation

✓ Includes approval chain documentation and version history tracking

Who Uses This?

This template is designed for:

- AI Ethics Officers and Responsible AI teams conducting governance assessments

- Data Science and ML Engineering leads documenting bias mitigation efforts

- Compliance and Legal teams preparing for regulatory reviews

- Risk Management professionals evaluating AI system impacts

- Product Owners overseeing AI-enabled features and services

What’s Included

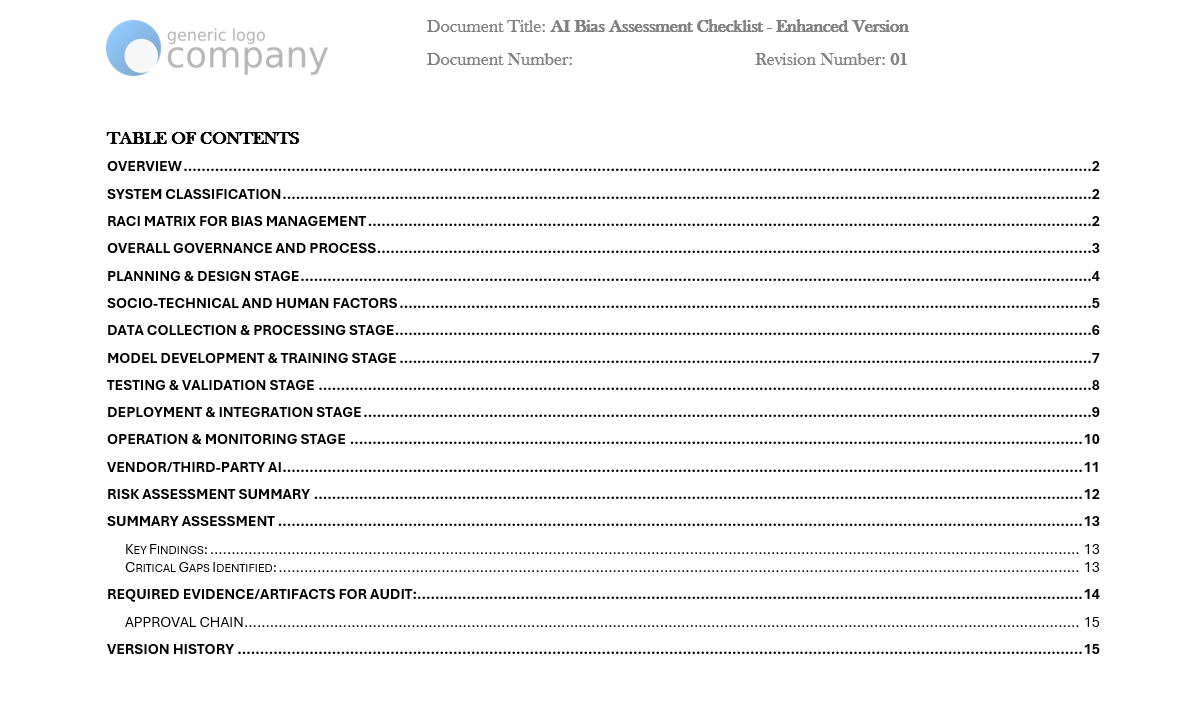

The template contains assessment sections covering overall governance, planning and design, socio-technical factors, data collection and processing, model development and training, testing and validation, deployment and integration, operations and monitoring, and vendor/third-party AI evaluation. Each assessment item has Yes/No/N/A response options, a priority indicator (High/Medium/Low), and dedicated columns for evidence documentation and comments.
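Teams that want to mirror the checklist in code, for example to compute the compliance rate recorded in the summary section, could model each row roughly as shown below. This is a minimal sketch and not part of the template: the `ChecklistItem` class, its field names, the sample questions, and the decision to exclude N/A items from the denominator are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Response(Enum):
    YES = "Yes"
    NO = "No"
    NA = "N/A"


class Priority(Enum):
    HIGH = "High"
    MEDIUM = "Medium"
    LOW = "Low"


@dataclass
class ChecklistItem:
    """Hypothetical record mirroring one checklist row."""
    section: str
    question: str
    response: Response
    priority: Priority
    evidence: str = ""   # link or reference to the supporting artifact
    comments: str = ""


def compliance_rate(items: List[ChecklistItem]) -> Optional[float]:
    """Share of applicable items answered Yes (assumed definition).

    Items marked N/A are left out of the denominator. Returns None
    when no item is applicable.
    """
    applicable = [i for i in items if i.response is not Response.NA]
    if not applicable:
        return None
    yes_count = sum(1 for i in applicable if i.response is Response.YES)
    return yes_count / len(applicable)


def critical_gaps(items: List[ChecklistItem]) -> List[ChecklistItem]:
    """High-priority items answered No."""
    return [
        i for i in items
        if i.response is Response.NO and i.priority is Priority.HIGH
    ]


# Made-up sample rows; the questions are placeholders, not template text.
items = [
    ChecklistItem("Overall Governance", "Is risk tolerance defined?",
                  Response.YES, Priority.HIGH, evidence="policy-doc"),
    ChecklistItem("Testing & Validation", "Was disparate impact tested?",
                  Response.NO, Priority.HIGH),
    ChecklistItem("Vendor/Third-Party AI", "Are model cards provided?",
                  Response.NA, Priority.LOW),
]

rate = compliance_rate(items)
gaps = critical_gaps(items)
print(f"Compliance rate: {rate:.0%}; critical gaps: {len(gaps)}")
```

Whether N/A items count toward the rate is a policy choice; whichever rule you adopt, record it alongside the assessment so results stay comparable between review cycles.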

Why This Matters

AI systems can perpetuate or amplify existing biases in ways that create discriminatory outcomes. Organizations deploying AI face increasing scrutiny from regulators, customers, and stakeholders regarding fairness and bias management practices.

The EU AI Act establishes specific requirements for high-risk AI systems, including documentation of risk management processes and bias mitigation measures. Organizations without systematic approaches to bias assessment may face compliance gaps, reputational risks, and potential harm to affected populations.

A structured checklist approach helps teams move from ad-hoc bias reviews to documented, repeatable assessment processes. This documentation can support internal governance requirements, customer due diligence requests, and regulatory inquiries.

Framework Alignment

This template incorporates concepts and terminology aligned with:

- EU AI Act risk classification categories and compliance requirements

- Trustworthy AI characteristics including fairness, accountability, and transparency

- Responsible AI principles for ethical AI development and deployment

- Fairness metrics including demographic parity and equal opportunity measures

- Conformity assessment documentation practices for regulatory compliance
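The two fairness metrics named above have widely used definitions that are short enough to state in code. The sketch below uses plain Python and computes the demographic parity difference (the gap in positive-prediction rates between groups) and the equal opportunity difference (the gap in true positive rates). The function names, the parallel-list input layout, and the sample data are illustrative assumptions; the template itself records results and does not compute them.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Fraction of positive predictions per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def true_positive_rates(y_true, y_pred, groups):
    """Fraction of actual positives that were predicted positive, per group.

    Groups with no actual positives are omitted from the result.
    """
    totals, hits = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            totals[group] += 1
            hits[group] += pred
    return {g: hits[g] / totals[g] for g in totals}


def max_gap(rates):
    """Largest difference between any two groups' rates."""
    values = list(rates.values())
    return max(values) - min(values)


# Made-up binary labels and predictions for two groups, "a" and "b".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

demographic_parity_diff = max_gap(selection_rates(y_pred, groups))
equal_opportunity_diff = max_gap(true_positive_rates(y_true, y_pred, groups))
print(demographic_parity_diff, equal_opportunity_diff)
```

A value of 0 on either metric means the groups are treated identically by that measure. What counts as an acceptable gap is a judgment for your organization's risk tolerance definitions, not something the metric decides.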

Key Features

The template includes the following components:

- System Classification Section: Documents AI risk level per EU AI Act categories, primary use case, and deployment context

- RACI Matrix: Assigns Responsible, Accountable, Consulted, and Informed roles for AI Ethics Officer, Data Science Lead, Legal/Compliance, Product Owner, Domain Experts, and Community Representatives

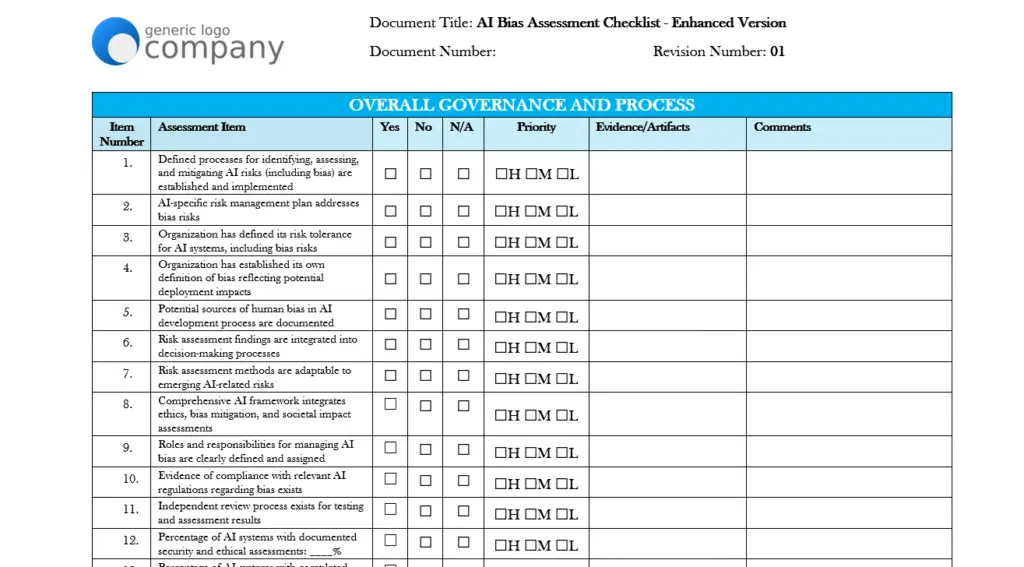

- Overall Governance (13 items): Covers risk management processes, risk tolerance definitions, bias documentation, and compliance evidence

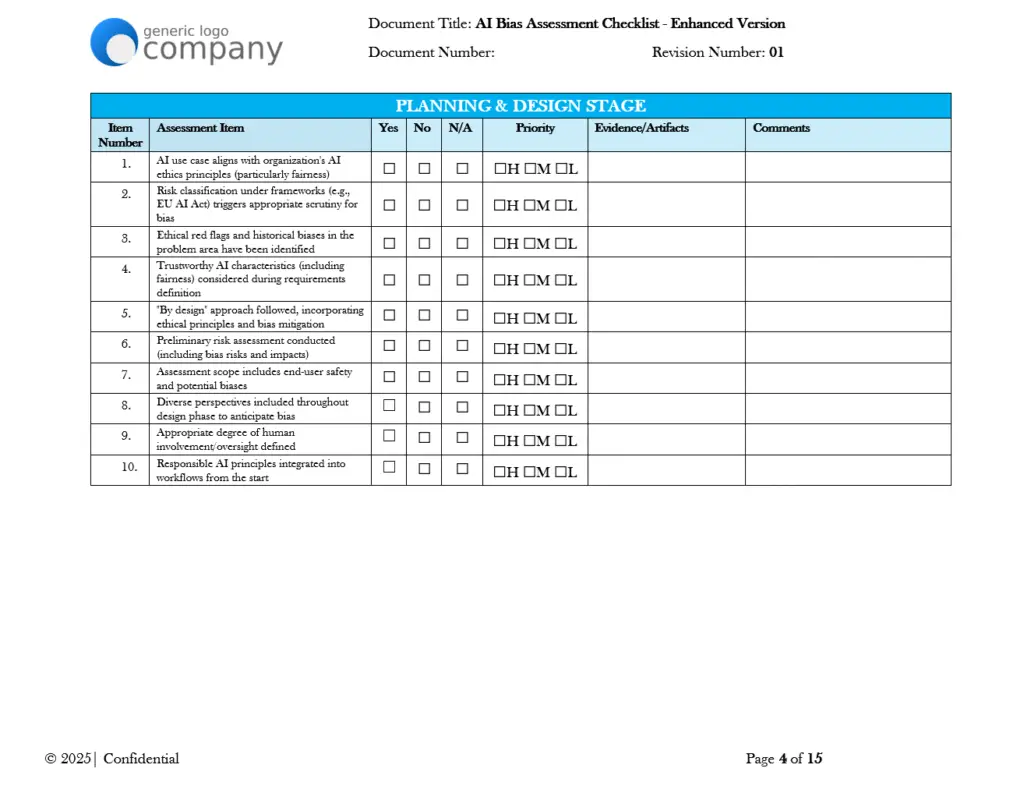

- Planning & Design (10 items): Addresses ethics alignment, risk classification, historical bias identification, and human oversight requirements

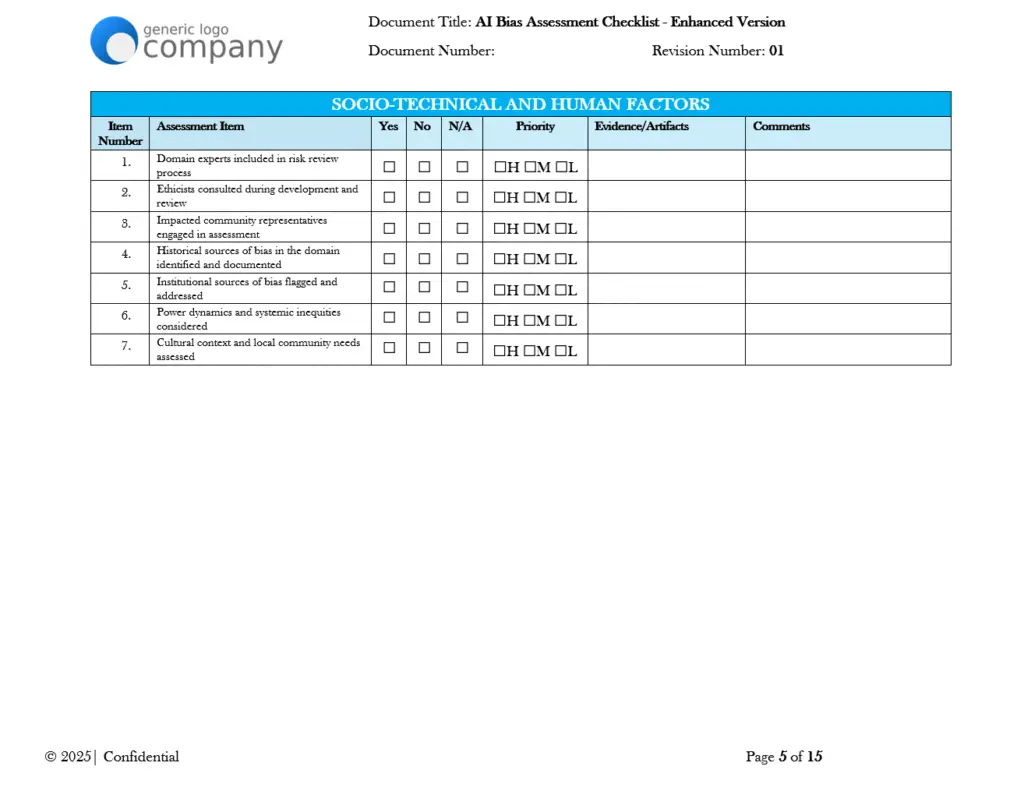

- Socio-Technical Factors (7 items): Evaluates domain expert involvement, community engagement, institutional bias, and cultural context

- Data Collection & Processing (11 items): Assesses data representativeness, provenance documentation, sensitive variable handling, and labeling bias

- Model Development & Training (10 items): Covers bias measurement techniques, mitigation methods, fairness constraints, and explainability

- Testing & Validation (17 items): Documents fairness metrics, disparate impact testing, third-party evaluation, and conformity assessment

- Deployment & Integration (6 items): Addresses user training, human oversight, accessibility, and pre-deployment checklists

- Operations & Monitoring (11 items): Covers post-deployment monitoring, alerting mechanisms, incident response, and ongoing audits

- Vendor/Third-Party AI (11 items): Evaluates vendor risk management, transparency, model cards, and contractual requirements

- Risk Assessment Summary: Includes a scoring matrix for likelihood, impact, and mitigation priority across six risk factors

- Summary Assessment Section: Provides fields for compliance rate, key findings, critical gaps, and prioritized action items

- Evidence Checklist: Documents required artifacts including fairness metrics reports, datasheets, audit findings, and model cards

- Approval Chain: Captures sign-offs from Assessor, Technical Reviewer, Ethics Officer, Legal/Compliance, and Executive Sponsor
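The Risk Assessment Summary rates each risk factor on likelihood and impact and assigns a mitigation priority. One common convention, assumed here and not confirmed by the template, is to multiply the two ratings on 1-to-5 scales and map the product to a priority band. The scale, the thresholds, and the example risk factor names below are all hypothetical; substitute the values from your own risk tolerance definitions.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Multiply likelihood by impact, each rated 1 (lowest) to 5 (highest)."""
    for name, value in (("likelihood", likelihood), ("impact", impact)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    return likelihood * impact


def mitigation_priority(score: int) -> str:
    """Map a score to a priority band (thresholds are assumptions)."""
    if score >= 15:
        return "High"
    if score >= 8:
        return "Medium"
    return "Low"


# Hypothetical risk factors with (likelihood, impact) ratings.
factors = {
    "Unrepresentative training data": (4, 5),
    "Labeling bias": (3, 3),
    "Monitoring gaps": (2, 2),
}

# Rank factors from highest to lowest score.
ranked = sorted(
    ((name, risk_score(lik, imp)) for name, (lik, imp) in factors.items()),
    key=lambda pair: pair[1],
    reverse=True,
)

for name, score in ranked:
    print(f"{name}: score {score}, priority {mitigation_priority(score)}")
```

A multiplicative score treats a rare but severe harm the same as a frequent but minor one. Some teams therefore override the band whenever impact alone reaches the top rating.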

Comparison: Generic Approach vs. Structured Template

| Aspect | Generic/Ad-Hoc Approach | Structured Template |
|---|---|---|
| Coverage | Inconsistent, varies by assessor | 96 items across 10 lifecycle stages |
| Documentation | Scattered notes, emails | Centralized checklist with evidence tracking |
| Accountability | Unclear ownership | RACI matrix with defined roles |
| Regulatory Alignment | May miss required elements | EU AI Act risk classification integration |
| Audit Readiness | Difficult to demonstrate | Built-in evidence and approval tracking |
| Repeatability | Different each time | Standardized assessment process |

FAQ

What file format is this template?

The template is provided in Microsoft Word (.docx) format to support collaborative editing. It is optimized for Microsoft Word to ensure proper formatting and table rendering.

Does this template guarantee compliance with the EU AI Act?

No. This template provides a structured framework designed to support bias assessment documentation. Compliance with the EU AI Act depends on many factors specific to your AI systems, use cases, and organizational context. Organizations should consult legal and compliance professionals regarding their specific regulatory obligations.

How long does it take to complete an assessment using this template?

Assessment timelines vary significantly with the complexity of the AI system being evaluated, organizational maturity in AI governance, the availability of existing documentation, and the depth of analysis required. The template provides structure, but the effort depends on your specific circumstances.

Can this template be used for AI systems that are not high-risk under the EU AI Act?

Yes. Although the template includes EU AI Act risk classification, the assessment items apply broadly to AI bias evaluation regardless of regulatory classification. Organizations can mark items as N/A where they do not apply to their specific context.

What expertise is needed to use this template effectively?

Effective use typically requires familiarity with AI/ML development practices, an understanding of bias concepts and fairness metrics, and knowledge of your organization’s specific AI systems. The template is designed to facilitate cross-functional collaboration among technical, legal, ethics, and domain experts.

Does this template include the actual fairness metrics or testing tools?

No. This template provides a documentation framework for recording assessment results. Organizations need separate tools and processes to measure fairness metrics, conduct bias testing, and implement mitigation techniques.

Ideal For

- Organizations developing or deploying AI systems that may impact individuals or groups

- Companies preparing for EU AI Act compliance requirements

- Teams establishing or maturing their Responsible AI governance programs

- Enterprises conducting vendor due diligence on third-party AI solutions

- Risk management functions incorporating AI into their assessment scope

- Internal audit teams evaluating AI governance controls

Pricing Options

Single Template: Contact for pricing based on organizational requirements and intended use.

Bundle Option: May be combined with related AI governance templates depending on organizational compliance scope.

Enterprise Option: Available as part of comprehensive AI governance documentation suites for organizations with multiple AI systems or deployment contexts.

Differentiator

This template takes a lifecycle-based approach to AI bias assessment, covering the full journey from planning through ongoing operations rather than focusing solely on pre-deployment testing. The inclusion of socio-technical factors, vendor assessment criteria, and a structured RACI matrix distinguishes it from simpler checklists that address only technical bias metrics. The built-in evidence tracking and approval chain help organizations maintain documentation that serves multiple purposes: internal governance, customer due diligence, and responses to regulatory inquiries.

Note: This template provides a documentation framework requiring customization. Actual bias detection, measurement, and mitigation require separate technical processes and expertise. This template does not guarantee regulatory compliance or specific outcomes.