Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated January 15th, 2026

Hello everyone! Help us grow our community by sharing and/or supporting us on other platforms. This allows us to demonstrate that what we are doing is valued. It also helps us plan and allocate resources to improve our work, because we then know others are interested and supportive.

Understanding ISO 42001 Clause 8: Where AI Governance Gets Real

You’ve got policies. You’ve mapped risks. Now what?

Clause 8 of ISO/IEC 42001:2023 answers that question. It’s titled “Operation” and it’s where governance stops being theoretical. This clause forces organizations to prove they’re actually doing what their policies say they’ll do.

Disclaimer: This article discusses general principles aligned with ISO standards. Consult ISO/IEC 42001:2023 directly for specific compliance requirements.

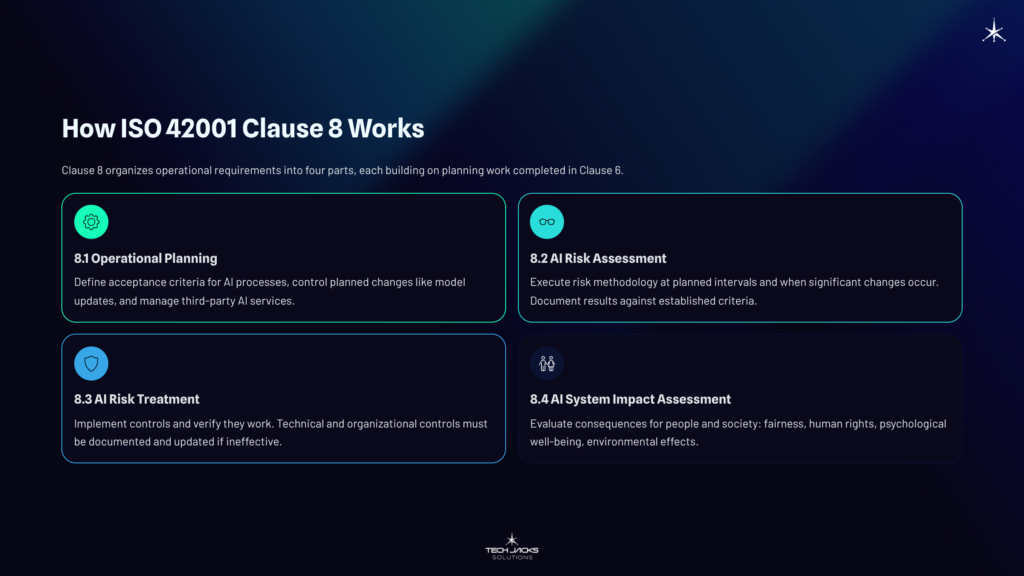

How ISO 42001 Clause 8 Works: The Four Sub-Clauses

Clause 8 organizes operational requirements into four parts. Each builds on planning work completed in Clause 6.

8.1 Operational Planning and Control covers the daily mechanics. Organizations define acceptance criteria for AI processes (think minimum accuracy thresholds or maximum bias scores), control planned changes like model updates, and manage third-party AI services. When you’re using a vendor’s model or purchased dataset, this sub-clause requires you to ensure it meets your standards.
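To make acceptance criteria concrete, here is a minimal Python sketch. It assumes each criterion is a single metric with a numeric threshold and a direction (accuracy should be high, a bias score low). The class and function names (`AcceptanceCriterion`, `evaluate`) and the threshold values are hypothetical illustrations, not anything ISO 42001 prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriterion:
    """One hypothetical acceptance criterion for an AI process."""
    metric: str
    threshold: float
    higher_is_better: bool  # True for accuracy-style metrics, False for bias scores

    def is_met(self, value: float) -> bool:
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


def evaluate(criteria: list[AcceptanceCriterion], measured: dict[str, float]) -> dict[str, bool]:
    """Return pass/fail per criterion; a missing measurement counts as a failure."""
    results = {}
    for c in criteria:
        value = measured.get(c.metric)
        results[c.metric] = value is not None and c.is_met(value)
    return results


# Hypothetical thresholds: minimum accuracy 0.90, maximum bias score 0.05.
criteria = [
    AcceptanceCriterion("accuracy", 0.90, higher_is_better=True),
    AcceptanceCriterion("bias_score", 0.05, higher_is_better=False),
]
results = evaluate(criteria, {"accuracy": 0.93, "bias_score": 0.08})
print(results)  # {'accuracy': True, 'bias_score': False}
```

Treating a missing measurement as a failure is a deliberate choice here: an auditor will read an absent metric as absent evidence.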

8.2 AI Risk Assessment requires executing the methodology you defined during planning. Not once. At planned intervals and whenever significant changes occur. Model retraining? New deployment context? That triggers a new assessment. Organizations must document results showing risks were evaluated against established criteria.
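The reassessment trigger can be sketched as a simple check, assuming the organization has declared a fixed review interval and a list of change types it treats as "significant." The 90-day interval, the change-type labels in `SIGNIFICANT_CHANGES`, and the `reassessment_due` function are all hypothetical; ISO 42001 leaves both the interval and the definition of significant change to you.

```python
from datetime import date, timedelta

# Hypothetical change types this organization has declared "significant".
SIGNIFICANT_CHANGES = {"model_retraining", "new_data_source", "new_deployment_context", "incident"}


def reassessment_due(last_assessed: date, today: date, interval_days: int, changes: set[str]) -> list[str]:
    """Return the reasons a new AI risk assessment is required (empty list means none)."""
    reasons = []
    # Trigger 1: the planned interval has elapsed since the last assessment.
    if today - last_assessed >= timedelta(days=interval_days):
        reasons.append("planned interval elapsed")
    # Trigger 2: any recorded change that matches a declared significant change type.
    reasons.extend(sorted(changes & SIGNIFICANT_CHANGES))
    return reasons


print(reassessment_due(date(2025, 10, 1), date(2025, 11, 15), 90, {"model_retraining", "ui_tweak"}))
# ['model_retraining']
```

Returning the reasons rather than a plain yes/no gives each new assessment record a documented trigger.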

8.3 AI Risk Treatment means implementing controls and verifying they work. Technical controls might include bias mitigation algorithms or human oversight triggers. Organizational controls could be usage policies or training requirements. ISO 42001 Annex A provides reference controls, but organizations can design their own. The catch: if a treatment doesn’t work, you update your approach.

8.4 AI System Impact Assessment is where ISO 42001 differs most from other standards. This isn't about organizational risk. It evaluates consequences for people and society: fairness, human rights, psychological well-being, environmental effects. The EU AI Act requires a similar fundamental rights impact assessment from certain deployers of high-risk systems (the regulation entered into force on 1 August 2024), so organizations building this capability now are preparing for regulatory requirements already taking shape.

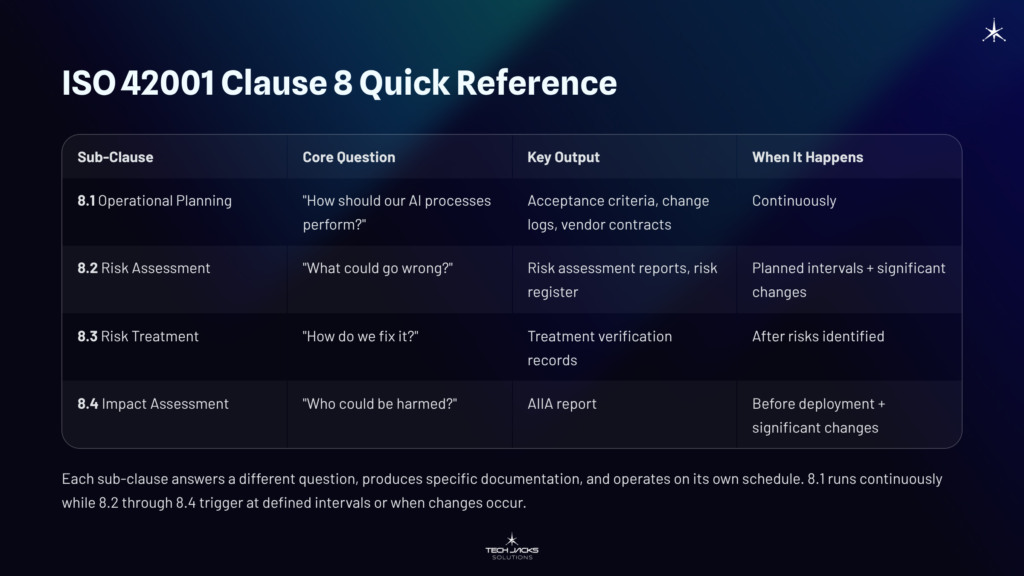

ISO 42001 Clause 8 Quick Reference

| Sub-Clause | Core Question | Key Output | When It Happens |
|---|---|---|---|
| 8.1 Operational Planning | “How should our AI processes perform?” | Acceptance criteria, change logs, vendor contracts | Continuously |
| 8.2 Risk Assessment | “What could go wrong?” | Risk assessment reports, risk register | Planned intervals + significant changes |
| 8.3 Risk Treatment | “How do we fix it?” | Treatment verification records | After risks identified |
| 8.4 Impact Assessment | “Who could be harmed?” | AIIA report | Before deployment + significant changes |

This table captures the essentials. Each sub-clause answers a different question, produces specific documentation, and operates on its own schedule. Novices should understand that 8.1 runs continuously while 8.2 through 8.4 trigger at defined intervals or when changes occur.

Why AI Risk Management Requires Clause 8

Auditors don’t care about your intentions.

They want documented proof. Operational logs. Bias testing records. Evidence that when your acceptance criteria weren’t met, corrective actions followed. Organizations fail audits not because they lack policies but because they can’t demonstrate execution. The distinction sounds subtle until you’re sitting across from an auditor who asks for your evidence register and you realize you only have process descriptions.

AI systems also change in ways traditional software doesn’t. Model drift degrades accuracy over time. Bias can emerge as training data shifts. A lending model trained on 2022 data might perform differently when economic conditions change in 2025. The requirement to reassess at planned intervals prevents systems from quietly becoming problems while everyone assumes they’re fine.
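One common way to detect the kind of data shift described above is the Population Stability Index (PSI), which compares how records fall into bins at training time versus in production. PSI is swapped in here purely for illustration; ISO 42001 does not mandate any particular drift metric. The sketch assumes both distributions are already binned into matching proportions, and the bin values are hypothetical.

```python
import math


def population_stability_index(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as proportions per bin.

    expected: share of records per bin at training/validation time.
    actual: share of records per bin in recent production data.
    eps guards against log(0) when a bin is empty.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


baseline = [0.25, 0.25, 0.25, 0.25]  # hypothetical income quartiles in 2022 training data
recent = [0.10, 0.20, 0.30, 0.40]    # hypothetical production mix after conditions change
psi = population_stability_index(baseline, recent)
print(round(psi, 3))
```

Practitioners often treat PSI values above roughly 0.2 to 0.25 as a meaningful shift, but any alert threshold is an organizational choice that belongs in your acceptance criteria.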

For executives, Clause 8 generates the evidence that transforms AI governance from a cost center into demonstrable accountability. When regulators ask how you’re managing AI risk, you point to documented assessments and verified treatments. When customers ask about responsible AI practices, you have specific answers backed by records. This isn’t abstract compliance work. It’s building the audit trail that protects the organization.

AI Governance Business Value: What Clause 8 Delivers

Executives often ask what Clause 8 actually delivers. Here’s the concrete value:

| Business Outcome | How Clause 8 Delivers It |
|---|---|
| Audit protection | Documented evidence proves governance functions, not just exists |
| Regulatory readiness | AIIA process prepares for EU AI Act and emerging requirements |
| Reduced liability exposure | Systematic risk treatment creates defensible decision records |
| Stakeholder confidence | Verifiable practices replace trust-me assertions |
| Operational resilience | Planned interval assessments catch model drift before failures |

The cost of skipping Clause 8 isn’t obvious until something goes wrong. Organizations without documented governance face harder questions from regulators, less favorable audit outcomes, and weaker legal positions if AI systems cause harm. The investment in operational execution pays off in risk avoided.

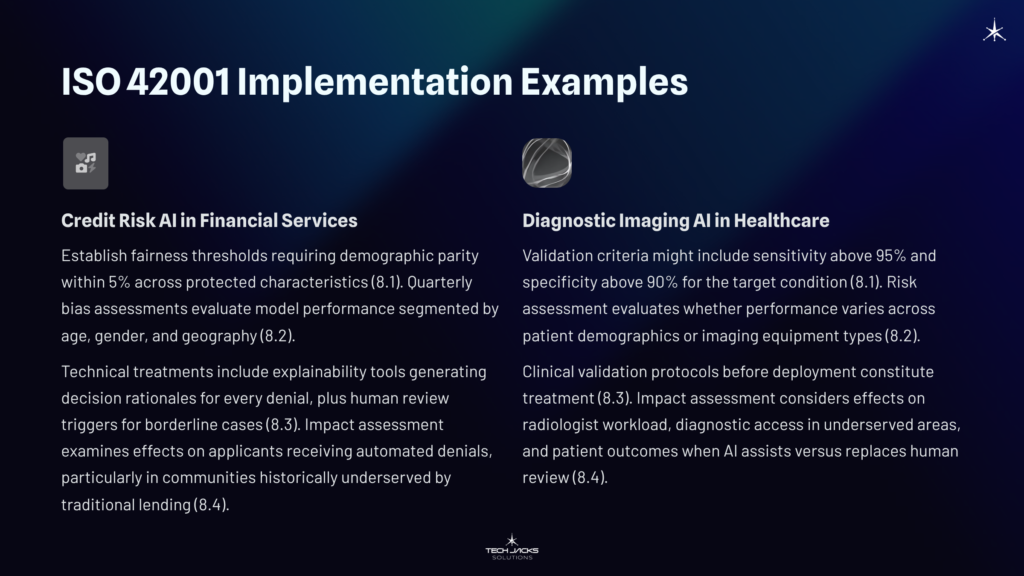

ISO 42001 Implementation Examples

Consider a credit risk AI in financial services. The organization might establish fairness thresholds requiring demographic parity within 5% across protected characteristics (8.1). Quarterly bias assessments would evaluate model performance segmented by age, gender, and geography (8.2). Technical treatments could include explainability tools generating decision rationales for every denial, plus human review triggers for borderline cases (8.3). Impact assessment would examine effects on applicants receiving automated denials, particularly in communities historically underserved by traditional lending (8.4).
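The 5% fairness threshold in this example can be checked with a demographic parity gap: the difference between the highest and lowest approval rates across groups. This sketch assumes "within 5%" means an absolute gap of 5 percentage points (0.05); a ratio-based reading is also possible. The age bands, decision counts, and helper names are made up for illustration.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions: list[tuple[str, bool]]) -> float:
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions segmented by age band.
decisions = (
    [("18-30", True)] * 60 + [("18-30", False)] * 40
    + [("31-60", True)] * 68 + [("31-60", False)] * 32
)
gap = demographic_parity_gap(decisions)
THRESHOLD = 0.05  # assumption: "within 5%" means 5 percentage points
print(f"gap={gap:.2f}, within threshold: {gap <= THRESHOLD}")
```

The same check would be repeated for each protected characteristic (gender, geography) as part of the quarterly 8.2 assessment.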

A diagnostic imaging AI in healthcare would follow different specifics but the same structure. Validation criteria might include sensitivity above 95% and specificity above 90% for the target condition (8.1). Risk assessment would evaluate whether performance varies across patient demographics or imaging equipment types (8.2). Clinical validation protocols before deployment would constitute treatment (8.3). Impact assessment would consider effects on radiologist workload, diagnostic access in underserved areas, and patient outcomes when AI assists versus replaces human review (8.4).
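The validation criteria in this example reduce to confusion-matrix arithmetic: sensitivity is TP / (TP + FN) and specificity is TN / (TN + FP). The sketch below applies the "above 95%" and "above 90%" thresholds per equipment type, mirroring the 8.2 question of whether performance varies across imaging equipment. The scanner names and counts are hypothetical.

```python
def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> tuple[float, float]:
    sensitivity = tp / (tp + fn)  # share of true cases the model flags
    specificity = tn / (tn + fp)  # share of negative cases the model correctly clears
    return sensitivity, specificity


def meets_validation_criteria(tp: int, fn: int, tn: int, fp: int,
                              min_sens: float = 0.95, min_spec: float = 0.90) -> bool:
    """Strictly above both thresholds, matching "above 95%" and "above 90%"."""
    sens, spec = sensitivity_specificity(tp, fn, tn, fp)
    return sens > min_sens and spec > min_spec


# Hypothetical validation counts per equipment type, ordered (tp, fn, tn, fp).
results_by_equipment = {
    "scanner_a": (97, 3, 185, 15),
    "scanner_b": (91, 9, 188, 12),
}
status = {name: meets_validation_criteria(*counts) for name, counts in results_by_equipment.items()}
print(status)
```

Here scanner_b would fail on sensitivity (0.91), which is exactly the kind of equipment-dependent gap the 8.2 assessment is meant to surface.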

These examples are illustrative, but the pattern isn't hypothetical. They represent the practical application of abstract requirements in contexts where AI systems affect real decisions about real people.

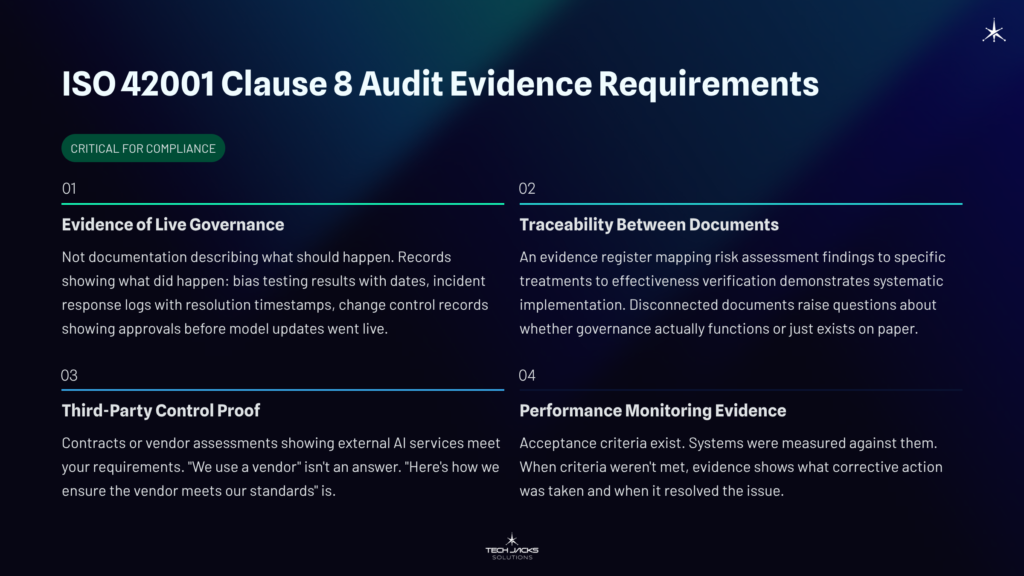

ISO 42001 Clause 8 Audit Evidence Requirements

Evidence of live governance. Not documentation describing what should happen. Records showing what did happen. Bias testing results with dates. Incident response logs with resolution timestamps. Change control records showing approvals before model updates went live.
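Change control evidence lends itself to an automated check: every model update should carry an approval timestamp that precedes its go-live timestamp. A minimal sketch, assuming change records can be exported with those two fields; `ChangeRecord`, the change IDs, and the dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class ChangeRecord:
    change_id: str
    approved_at: Optional[datetime]  # None means no approval was recorded
    deployed_at: datetime


def unapproved_deployments(records: list[ChangeRecord]) -> list[str]:
    """IDs of model updates with no approval, or with approval after go-live."""
    return [
        r.change_id
        for r in records
        if r.approved_at is None or r.approved_at > r.deployed_at
    ]


records = [
    ChangeRecord("CHG-101", datetime(2025, 3, 1, 9), datetime(2025, 3, 2, 14)),
    ChangeRecord("CHG-102", datetime(2025, 3, 5, 16), datetime(2025, 3, 5, 10)),
    ChangeRecord("CHG-103", None, datetime(2025, 3, 9, 11)),
]
print(unapproved_deployments(records))
```

Running a check like this before an audit finds the gaps while they can still be explained and corrected.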

Traceability between documents matters more than document volume. An evidence register mapping risk assessment findings to specific treatments to effectiveness verification demonstrates systematic implementation. If your risk register identifies “potential demographic bias” but your treatment records don’t show bias testing results, auditors notice the gap. Disconnected documents raise questions about whether governance actually functions or just exists on paper.
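The gap described above (a risk with no linked treatment, or a treatment with no effectiveness evidence) can be found mechanically if the evidence register stores links by ID. This sketch assumes a simple three-level mapping of risk, treatment, and verification record; the IDs and the `traceability_gaps` helper are hypothetical, not a format ISO 42001 prescribes.

```python
# Hypothetical evidence register entries.
risk_register = {
    "R-01": "potential demographic bias",
    "R-02": "model drift in income features",
    "R-03": "vendor model lacks documentation",
}
treatments = {"R-01": "T-01", "R-02": "T-02"}  # risk ID -> treatment ID
verifications = {"T-02": "V-02"}               # treatment ID -> effectiveness evidence ID


def traceability_gaps(risks: dict[str, str], treatments: dict[str, str],
                      verifications: dict[str, str]) -> dict[str, str]:
    """Map each risk ID with a broken evidence chain to a description of the gap."""
    gaps = {}
    for risk_id in risks:
        treatment = treatments.get(risk_id)
        if treatment is None:
            gaps[risk_id] = "no treatment recorded"
        elif treatment not in verifications:
            gaps[risk_id] = f"treatment {treatment} has no effectiveness verification"
    return gaps


print(traceability_gaps(risk_register, treatments, verifications))
```

In this made-up register, R-01 ("potential demographic bias") is the situation the paragraph warns about: a treatment exists, but no bias testing result backs it up.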

Third-party control proof. Contracts or vendor assessments showing external AI services meet your requirements. Organizations using cloud AI APIs still own governance obligations for how those services affect their stakeholders. “We use a vendor” isn’t an answer. “Here’s how we ensure the vendor meets our standards” is.

Performance monitoring evidence. Acceptance criteria exist. Systems were measured against them. When criteria weren’t met, evidence shows what corrective action was taken and when it resolved the issue.
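A monitoring log can show the full chain this paragraph describes: measurement, criterion, and, where the criterion failed, a corrective action with a resolution date. A minimal sketch, assuming one metric per entry where higher is better; the `MonitoringEntry` fields and all values are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class MonitoringEntry:
    """One row of a hypothetical monitoring log for an AI system."""
    measured_on: date
    metric: str
    value: float
    threshold: float
    corrective_action: Optional[str] = None
    resolved_on: Optional[date] = None

    @property
    def criterion_met(self) -> bool:
        return self.value >= self.threshold  # assumes higher is better


def unresolved_failures(log: list[MonitoringEntry]) -> list[MonitoringEntry]:
    """Failed measurements lacking a corrective action or a resolution date."""
    return [
        e for e in log
        if not e.criterion_met and (e.corrective_action is None or e.resolved_on is None)
    ]


log = [
    MonitoringEntry(date(2025, 6, 1), "accuracy", 0.92, 0.90),
    MonitoringEntry(date(2025, 7, 1), "accuracy", 0.87, 0.90, "retrained on Q2 data", date(2025, 7, 18)),
    MonitoringEntry(date(2025, 8, 1), "accuracy", 0.88, 0.90, "root-cause analysis opened"),
]
for entry in unresolved_failures(log):
    print(entry.measured_on, entry.metric, entry.value)
```

The July entry is complete evidence; the August entry shows an action was taken but not yet that it resolved the issue.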

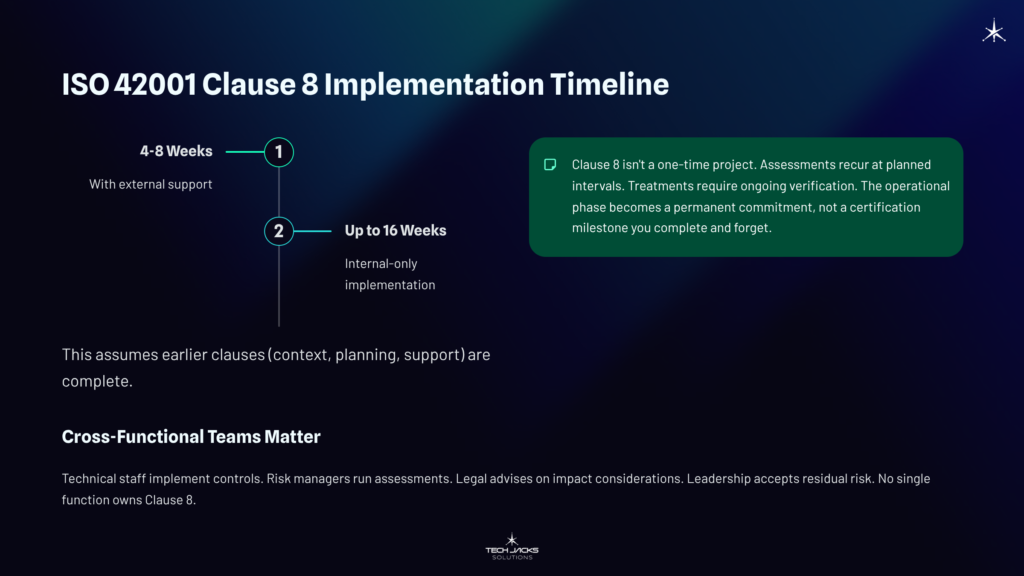

ISO 42001 Clause 8 Implementation Timeline

Implementation guidance suggests 4 to 8 weeks with external support. Internal-only implementation typically takes up to 16 weeks. This assumes earlier clauses (context, planning, support) are complete.

Clause 8 isn’t a one-time project. Assessments recur at planned intervals. Treatments require ongoing verification. The operational phase becomes a permanent commitment, not a certification milestone you complete and forget.

Cross-functional teams matter here. Technical staff implement controls. Risk managers run assessments. Legal advises on impact considerations. Leadership accepts residual risk. No single function owns Clause 8.

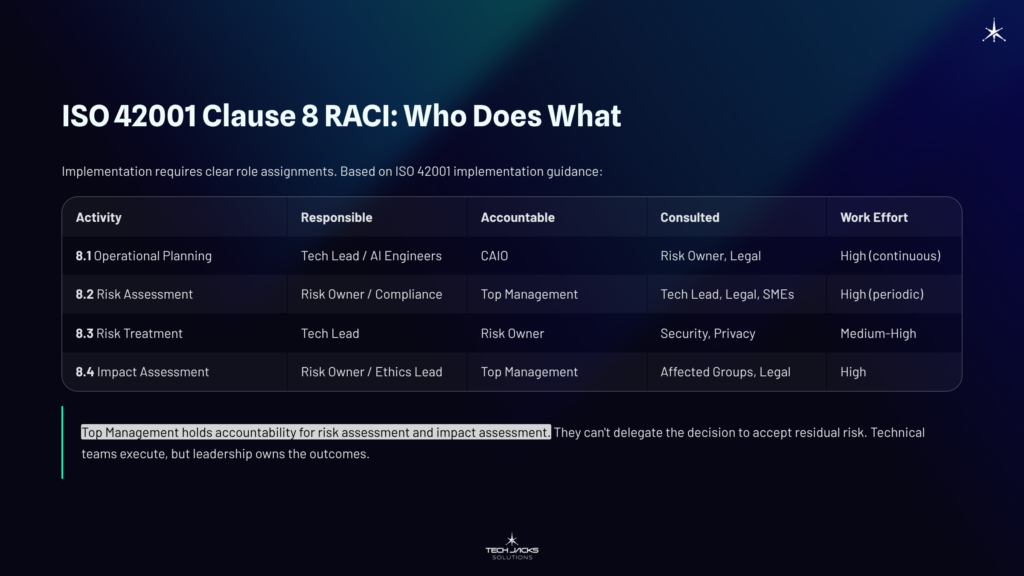

ISO 42001 Clause 8 RACI: Who Does What

Implementation requires clear role assignments. Based on ISO 42001 implementation guidance:

| Activity | Responsible | Accountable | Consulted | Work Effort |
|---|---|---|---|---|
| 8.1 Operational Planning | Tech Lead / AI Engineers | CAIO | Risk Owner, Legal | High (continuous) |
| 8.2 Risk Assessment | Risk Owner / Compliance | Top Management | Tech Lead, Legal, SMEs | High (periodic) |
| 8.3 Risk Treatment | Tech Lead | Risk Owner | Security, Privacy | Medium-High |
| 8.4 Impact Assessment | Risk Owner / Ethics Lead | Top Management | Affected Groups, Legal | High |

Notice that Top Management holds accountability for risk assessment and impact assessment. They can’t delegate the decision to accept residual risk. Technical teams execute, but leadership owns the outcomes. This distinction matters during audits when assessors ask who approved deployment of systems with known limitations.

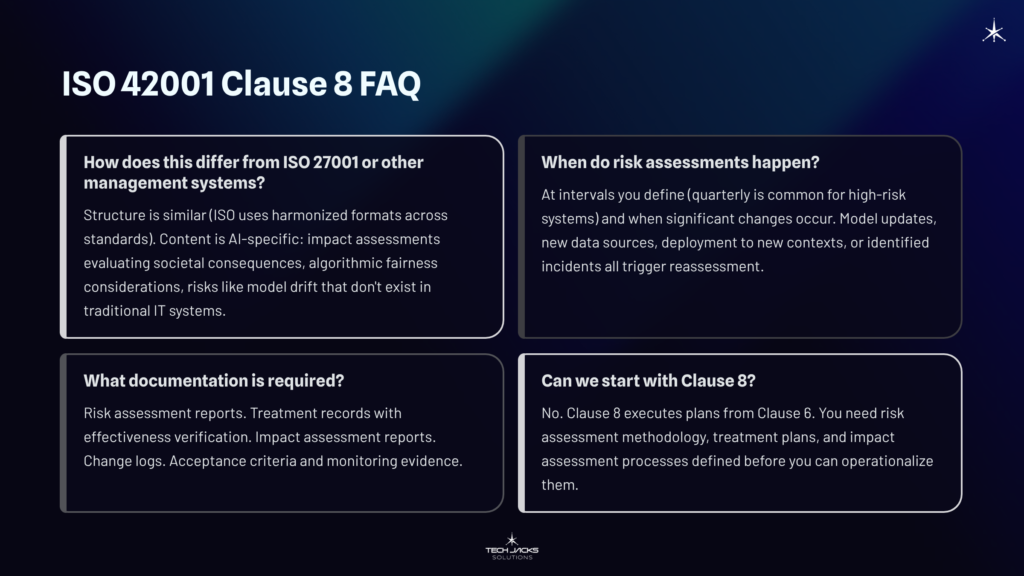

ISO 42001 Clause 8 FAQ

How does this differ from ISO 27001 or other management systems?

Structure is similar (ISO uses harmonized formats across standards). Content is AI-specific: impact assessments evaluating societal consequences, algorithmic fairness considerations, risks like model drift that don’t exist in traditional IT systems.

When do risk assessments happen?

At intervals you define (quarterly is common for high-risk systems) and when significant changes occur. Model updates, new data sources, deployment to new contexts, or identified incidents all trigger reassessment.

What documentation is required?

Risk assessment reports. Treatment records with effectiveness verification. Impact assessment reports. Change logs. Acceptance criteria and monitoring evidence.

Can we start with Clause 8?

No. Clause 8 executes plans from Clause 6. You need risk assessment methodology, treatment plans, and impact assessment processes defined before you can operationalize them.

AI Governance Standards and Resources

Core Standards:

- ISO/IEC 42001:2023 — AI management systems

- ISO/IEC 23894:2023 — AI risk management guidance

- ISO/IEC 42005 — AI system impact assessment guidance

Related Frameworks:

- NIST AI Risk Management Framework — US framework for AI risk management

- EU AI Act (Regulation 2024/1689) — EU regulatory framework for AI systems

Supporting Standards:

- ISO/IEC 27001:2022 — Information security management

- ISO 31000:2018 — Risk management guidelines

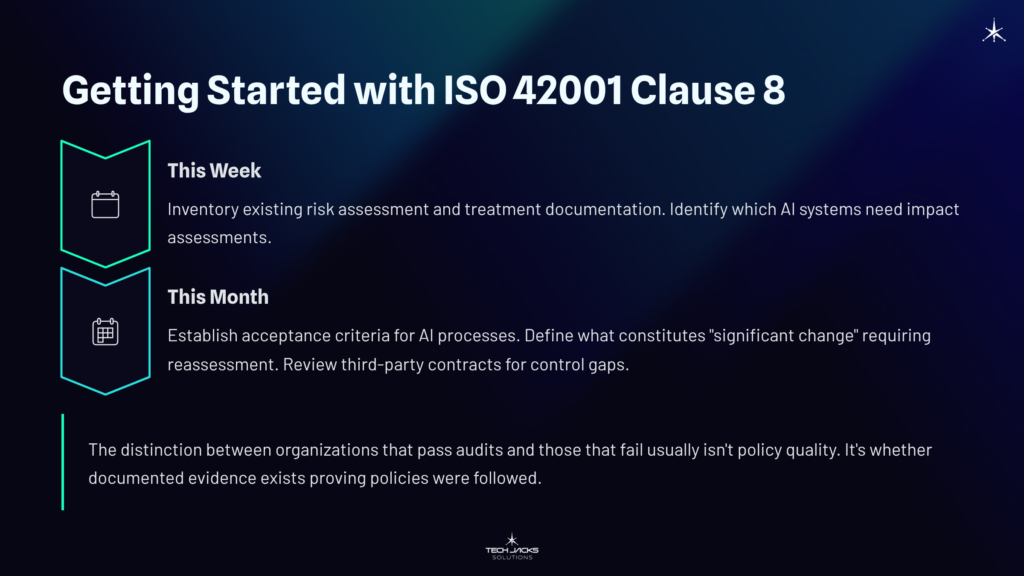

Getting Started with ISO 42001 Clause 8

This week: inventory existing risk assessment and treatment documentation. Identify which AI systems need impact assessments.

This month: establish acceptance criteria for AI processes. Define what constitutes “significant change” requiring reassessment. Review third-party contracts for control gaps.

The distinction between organizations that pass audits and those that fail usually isn’t policy quality. It’s whether documented evidence exists proving policies were followed.

This article provides educational guidance on AI governance principles aligned with ISO/IEC 42001:2023. Obtain the official standard from ISO or your national standards body for specific requirements.