Introduction: Why “Objectives” Matter More Than You Think

Every organization implementing AI faces the same challenge: how to balance innovation with responsibility. ISO/IEC 42001 provides the framework, but many implementers overlook its core insight.

The entire standard revolves around a deceptively simple concept: objectives. Not compliance checkboxes or technical specifications, but the fundamental question of what you’re trying to achieve with AI. Get this wrong, and everything else becomes busywork.

Here are three essential truths about ISO 42001 objectives that will transform how you approach the standard.

1. Two Lists, Two Purposes: The Architecture of Choice

ISO 42001 doesn’t give you one list of objectives. It gives you two, serving completely different purposes.

The Mandatory Foundation: Control Objectives in Annex A

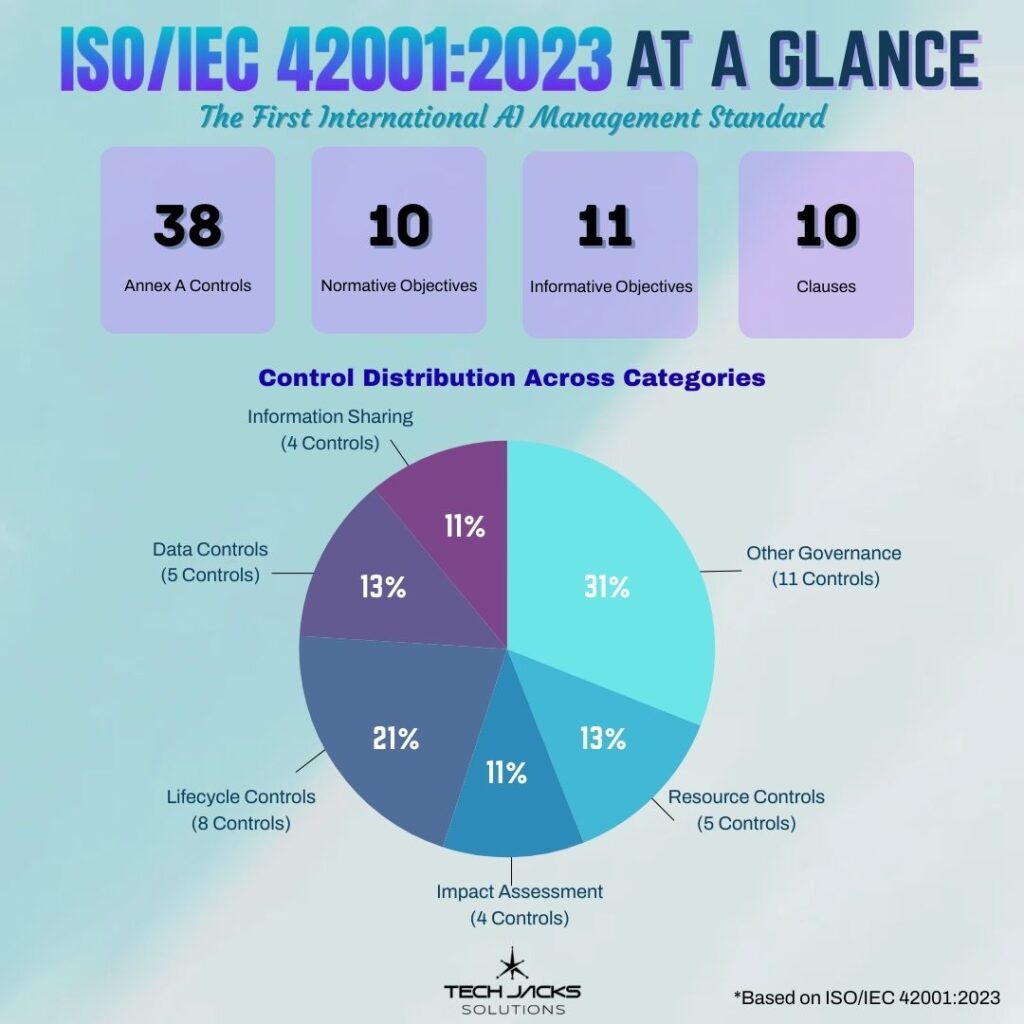

The standard’s Annex A contains nine control objective categories (numbered from 2 through 10, with section 1 being introductory text). These normative requirements encompass 38 specific controls that form your risk management infrastructure.*

*Structure confirmed in ISO/IEC 42001:2023 official publication, December 2023.

These control categories address essential areas such as:

- Policy development and alignment

- Organizational structure and accountability

- Resource management and documentation

- Impact assessment processes

- System development and deployment practices

- Data governance and quality

- Stakeholder communication

- Responsible use of AI systems

- Third-party relationship management

Think of these as your building code: the structural requirements that keep your AI management system from collapsing. Every control must be considered, and any exclusion must be justified. They’re process-oriented, focusing on how you manage AI rather than what you achieve with it.

The Strategic Compass: Organizational Objectives in Annex C

The standard’s Annex C presents eleven potential organizational objectives as informative guidance (not mandatory requirements). These address outcome-focused areas that organizations might consider, such as:

- Organizational accountability structures

- Technical expertise development

- Data quality and availability

- Environmental sustainability

- Fairness and non-discrimination

- System maintainability

- Privacy protection

- Operational robustness

- Safety considerations

- Security measures

- Transparency and explainability

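Additionally, this annex lists potential risk sources, such as the complexity of the operating environment, the level of automation, and issues specific to machine learning, that organizations should consider when implementing AI systems.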

These serve as your architectural style guide: helping define the character and values you want your AI systems to embody. They’re outcome-oriented, focusing on the real-world impacts and benefits you’re trying to achieve.

Key Insight: ISO/IEC 23894, the companion guidance on AI risk management, addresses similar considerations. That signals they are core risk considerations rather than ethical nice-to-haves, and ones that regulators and auditors are likely to examine.

Why This Distinction Matters

The separation is deliberate and powerful:

- Annex A ensures you have robust processes (the how)

- Annex C helps you define meaningful goals (the what and why)

You must consider every Annex A control and justify any exclusion in your Statement of Applicability, but you choose which suggested objectives from Annex C matter most to your organization. A healthcare AI company might prioritize safety and privacy, while a financial services firm might emphasize fairness and transparency.
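To make the two-list architecture concrete, here is a minimal Python sketch that encodes the nine Annex A categories and the eleven Annex C objectives as plain data. The labels are paraphrased from the standard's headings rather than quoted; the `select_objectives` helper and the healthcare example (including the custom "clinician_trust" objective) are hypothetical illustrations, not anything the standard defines.

```python
# Sketch of ISO/IEC 42001's two lists (labels paraphrased, not quoted).

# Annex A (normative): control objective categories A.2 to A.10.
ANNEX_A_CATEGORIES = {
    "A.2": "Policies related to AI",
    "A.3": "Internal organization",
    "A.4": "Resources for AI systems",
    "A.5": "Assessing impacts of AI systems",
    "A.6": "AI system life cycle",
    "A.7": "Data for AI systems",
    "A.8": "Information for interested parties",
    "A.9": "Use of AI systems",
    "A.10": "Third-party and customer relationships",
}

# Annex C (informative): potential organizational objectives.
ANNEX_C_OBJECTIVES = {
    "accountability", "ai_expertise", "data_quality_and_availability",
    "environmental_impact", "fairness", "maintainability", "privacy",
    "robustness", "safety", "security", "transparency_and_explainability",
}


def select_objectives(priorities: set[str]) -> tuple[set[str], set[str]]:
    """Split chosen objectives into Annex C matches and custom ones.

    Hypothetical helper. Annex C is guidance, so custom objectives are allowed;
    they are only reported separately so reviewers can see their origin.
    """
    from_annex_c = priorities & ANNEX_C_OBJECTIVES
    custom = priorities - ANNEX_C_OBJECTIVES
    return from_annex_c, custom


if __name__ == "__main__":
    # Hypothetical healthcare AI company prioritizing safety and privacy.
    matched, custom = select_objectives({"safety", "privacy", "clinician_trust"})
    print(sorted(matched), sorted(custom))  # ['privacy', 'safety'] ['clinician_trust']
```

Note the asymmetry: nothing in the sketch lets you "select" Annex A categories, because all of them have to be considered, while the Annex C set is a menu the organization picks from and can extend.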

2. The Definition That Changes Everything

The standard defines an objective with radical simplicity: a result to be achieved.

A note to the definition clarifies that, in AI management systems, organizations set their own objectives, consistent with their AI policy, to achieve specific results.

This transforms ISO 42001 from a compliance exercise into strategic planning. You’re not handed a universal checklist; you’re empowered to define success on your terms.

From Compliance to Strategy

Traditional thinking: “What objectives does ISO require?”

ISO 42001 thinking: “What results do we want our AI to achieve?”

This shift has profound implications:

- Your objectives must align with your overall AI policy

- They should be measurable when practical

- They drive your risk assessment processes

- They determine which controls you implement

3. Objectives as the Cornerstone of Risk Management

The standard reveals its elegance through three interlocking concepts:

- Objectives represent results to be achieved

- Risk represents the effect of uncertainty on achieving those objectives

- AI systems are designed to generate outputs for human-defined objectives

The logic is inescapable: You cannot assess AI risk without first defining your objectives.

The Risk Management Cascade

This creates a logical cascade that drives the entire standard:

- Define objectives: What results are we trying to achieve?

- Identify risks: What uncertainties could prevent achieving those results?

- Select controls: Which controls address these risks?

- Implement measures: How do we operationalize these controls?

- Monitor performance: Are we achieving our objectives?
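One way to keep this cascade honest is to record explicit links between its layers and check for breaks. The Python sketch below is a hypothetical traceability check: the `Risk` and `Control` types, their fields, and the example IDs are assumptions for illustration. ISO 42001 requires documented risk assessment and treatment, but it does not prescribe this data model.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    risk_id: str
    description: str
    objective_ids: list[str]  # objectives this risk threatens


@dataclass
class Control:
    control_id: str
    risk_ids: list[str]  # risks this control treats
    metrics: list[str] = field(default_factory=list)  # how it is monitored


def trace_gaps(objectives: set[str], risks: list[Risk],
               controls: list[Control]) -> dict[str, list[str]]:
    """Report breaks in the objective -> risk -> control -> monitoring chain."""
    risk_ids = {r.risk_id for r in risks}
    treated = {rid for c in controls for rid in c.risk_ids}
    return {
        # Risks linked to no known objective: nothing they would threaten.
        "risks_without_objective": [
            r.risk_id for r in risks if not set(r.objective_ids) & objectives
        ],
        # Risks that no control addresses.
        "untreated_risks": sorted(risk_ids - treated),
        # Controls that treat no recorded risk: implemented without clear purpose.
        "purposeless_controls": [
            c.control_id for c in controls if not set(c.risk_ids) & risk_ids
        ],
        # Controls with no metric: nothing feeds the "monitor performance" step.
        "unmonitored_controls": [c.control_id for c in controls if not c.metrics],
    }


if __name__ == "__main__":
    objectives = {"OBJ-fair-lending"}  # hypothetical objective ID
    risks = [
        Risk("R1", "Historical data encodes biased approvals", ["OBJ-fair-lending"]),
        Risk("R2", "Model drift after launch", []),
    ]
    controls = [
        Control("C1", ["R1"], metrics=["approval-rate gap"]),
        Control("C2", []),
    ]
    for problem, ids in trace_gaps(objectives, risks, controls).items():
        print(problem, ids)
```

Here R2 is flagged twice (no objective, no control) and C2 twice (no risk, no metric), which is exactly the "navigating without a destination" failure the cascade is meant to prevent.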

Without clear objectives, you’re essentially trying to navigate without a destination. Risk becomes meaningless because you don’t know what you’re protecting.

Practical Application

Consider an AI-powered lending system:

Weak approach: “We need to comply with ISO 42001”

- Generic objectives

- Checkbox mentality

- Controls implemented without clear purpose

Strong approach: “Our objectives are fair lending decisions and regulatory compliance”

- Specific objectives: For example, an organization might set targets such as reducing a defined bias metric in loan approvals by 40% and keeping approval accuracy above 95%

- Targeted risk assessment: What could cause discriminatory outcomes or regulatory violations?

- Purposeful controls: Enhanced data quality checks, comprehensive impact assessments, continuous performance monitoring
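The lending targets above only become checkable once "bias" and "accuracy" have concrete definitions. The Python sketch below assumes one common choice for bias, the absolute gap in approval rates between two applicant groups (demographic parity difference), and measures accuracy as agreement between decisions and later-observed outcomes. Both metric choices, the sample data, and the baseline value are illustrative assumptions; the 40% and 95% thresholds are simply carried over from the example, not requirements of ISO 42001.

```python
def approval_rate(decisions: list[bool]) -> float:
    """Fraction of applications approved (True = approved)."""
    if not decisions:
        raise ValueError("need at least one decision")
    return sum(decisions) / len(decisions)


def approval_gap(group_a: list[bool], group_b: list[bool]) -> float:
    """Absolute difference in approval rates between two applicant groups.

    This is the demographic parity difference, one of several possible bias
    metrics; choosing it is an assumption of this sketch.
    """
    return abs(approval_rate(group_a) - approval_rate(group_b))


def accuracy(predicted: list[bool], actual: list[bool]) -> float:
    """Share of decisions that matched the later-observed outcome."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("predicted and actual must be non-empty and equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def meets_targets(baseline_gap: float, current_gap: float, current_accuracy: float,
                  gap_reduction: float = 0.40, min_accuracy: float = 0.95) -> bool:
    """True if the gap fell by at least `gap_reduction` and accuracy exceeds `min_accuracy`."""
    reduced_enough = current_gap <= baseline_gap * (1 - gap_reduction)
    return reduced_enough and current_accuracy > min_accuracy


if __name__ == "__main__":
    # Hypothetical decisions for two applicant groups (True = approved).
    group_a = [True] * 6 + [False] * 4  # 60% approved
    group_b = [True] * 5 + [False] * 5  # 50% approved
    gap = approval_gap(group_a, group_b)
    print(f"approval gap: {gap:.2f}")  # approval gap: 0.10
    # Hypothetical baseline gap of 0.25 and measured accuracy of 0.97.
    print(meets_targets(baseline_gap=0.25, current_gap=gap, current_accuracy=0.97))  # True
```

Keeping the thresholds as parameters reflects the point of the section: the organization, not the standard, decides what "fair" and "accurate enough" mean.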

Implementation Roadmap: Putting Objectives First

Sample Timeline (adjust based on organizational complexity and resources):

Step 1: Define Your North Star (Weeks 1-2)

- Review the suggested organizational objectives as inspiration, not prescription

- Align with business strategy and stakeholder values

- Document 3 to 5 specific, measurable AI objectives

Step 2: Map Your Requirements (Weeks 3-4)

- Review all control objective categories

- Identify which controls directly support your objectives

- Draft your Statement of Applicability, to be finalized once risks are assessed
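A Statement of Applicability records, for each Annex A control, whether it is included and why, with exclusions justified. Below is a minimal Python sketch of a completeness check on such a record. The `SoAEntry` fields and the placeholder control IDs (`CTRL-1` and so on) are hypothetical; real identifiers and any additional fields your auditors expect should come from your copy of the standard.

```python
from dataclasses import dataclass


@dataclass
class SoAEntry:
    control_id: str     # placeholder IDs in this sketch, not real Annex A numbering
    included: bool      # is the control necessary for the identified risks?
    justification: str  # why it is included or excluded


def soa_problems(all_controls: list[str], entries: list[SoAEntry]) -> list[str]:
    """List completeness problems in a draft Statement of Applicability."""
    problems = []
    recorded = {e.control_id for e in entries}
    # Every reference control must be considered, even if it is then excluded.
    for cid in all_controls:
        if cid not in recorded:
            problems.append(f"{cid}: not addressed")
    # Every decision, inclusion or exclusion, needs a written justification.
    for e in entries:
        if not e.justification.strip():
            state = "included" if e.included else "excluded"
            problems.append(f"{e.control_id}: {state} without justification")
    return problems


if __name__ == "__main__":
    controls = ["CTRL-1", "CTRL-2", "CTRL-3"]  # hypothetical control list
    draft = [
        SoAEntry("CTRL-1", True, "Treats risk R1 (biased training data)"),
        SoAEntry("CTRL-2", False, ""),
    ]
    for p in soa_problems(controls, draft):
        print(p)
    # CTRL-3: not addressed
    # CTRL-2: excluded without justification
```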

Step 3: Assess Your Gaps (Weeks 5-6)

- Evaluate current practices against required controls

- Identify risks to achieving your objectives

- Prioritize gaps based on risk level
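ISO 42001 leaves the risk-scoring method to the organization. A common, simple convention (an assumption here, not something the standard prescribes) is likelihood × impact on small ordinal scales. The Python sketch below ranks gaps that way; the `Gap` fields, the 1 to 5 scales, the tie-break on impact, and the example gaps are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class Gap:
    name: str
    objective: str   # which objective the gap puts at risk
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (negligible) to 5 (severe), assumed scale

    @property
    def score(self) -> int:
        # Simple multiplicative score; many organizations use a risk matrix instead.
        return self.likelihood * self.impact


def prioritize(gaps: list[Gap]) -> list[Gap]:
    """Return gaps ordered from highest to lowest score, breaking ties by impact."""
    for g in gaps:
        if not (1 <= g.likelihood <= 5 and 1 <= g.impact <= 5):
            raise ValueError(f"{g.name}: scores must be between 1 and 5")
    return sorted(gaps, key=lambda g: (g.score, g.impact), reverse=True)


if __name__ == "__main__":
    gaps = [  # hypothetical gaps for the lending example
        Gap("No bias testing before release", "fair lending", likelihood=4, impact=5),
        Gap("Model documentation out of date", "regulatory compliance", likelihood=3, impact=2),
        Gap("No drift monitoring in production", "fair lending", likelihood=3, impact=4),
    ]
    for g in prioritize(gaps):
        print(g.score, g.name)
```

Recording the objective on each gap keeps the ranking tied back to what the organization is trying to achieve, rather than to the controls in isolation.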

Step 4: Build Your System (Months 2-6)

- Implement controls systematically

- Create procedures that connect controls to objectives

- Establish metrics that measure objective achievement

Common Pitfalls to Avoid

- Counting confusion: Annex A’s control objectives begin at A.2; A.1 contains introductory material. Don’t mistake the ten subsections (A.1 to A.10) for ten control objective categories; there are nine.

- Mixing mandatory and optional: The controls in Annex A become required once you determine they’re necessary for your risks, and any you exclude must be justified in your Statement of Applicability. The objectives in Annex C are suggestions for consideration.

- Generic objectives: “Be responsible with AI” isn’t measurable. Consider instead “Reduce algorithmic bias in hiring decisions by 50% within 12 months,” which provides a clear target and timeline, provided you also define how bias is measured.

- Objective overload: Starting with 15 objectives makes meaningful measurement impractical. Begin with 3 to 5 core objectives and expand later.

- Static thinking: Objectives should evolve as your AI maturity grows. Plan for regular reviews as part of your management system.
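Generic objectives and objective overload both lend themselves to a simple automated check on an objectives register. This Python sketch is hypothetical: the `Objective` fields and the 3 to 5 range mirror this article's advice rather than any requirement in ISO 42001, which asks, among other things, that objectives be consistent with the AI policy and measurable where practicable. Missing fields are therefore reported as warnings, not errors.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Objective:
    statement: str
    policy_clause: Optional[str] = None  # link to the AI policy it supports
    metric: Optional[str] = None         # what is measured
    target: Optional[float] = None       # desired value of the metric
    deadline: Optional[date] = None      # when the target should be met


def lint_objectives(objectives: list[Objective],
                    min_count: int = 3, max_count: int = 5) -> list[str]:
    """Flag vague objectives and an unmanageable number of objectives."""
    warnings = []
    if not min_count <= len(objectives) <= max_count:
        warnings.append(
            f"{len(objectives)} objective(s); suggested range is {min_count} to {max_count}"
        )
    for obj in objectives:
        missing = [name for name in ("policy_clause", "metric", "target", "deadline")
                   if getattr(obj, name) is None]
        if missing:
            warnings.append(f"'{obj.statement}': missing {', '.join(missing)}")
    return warnings


if __name__ == "__main__":
    register = [
        Objective("Be responsible with AI"),
        Objective("Reduce algorithmic bias in hiring decisions by 50%",
                  policy_clause="Fairness commitment",          # hypothetical
                  metric="relative reduction in selection-rate gap",
                  target=0.5,
                  deadline=date(2026, 12, 31)),                 # hypothetical, 12 months out
    ]
    for w in lint_objectives(register):
        print(w)
```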

The Bottom Line: It’s Your Standard

ISO 42001’s genius lies not in prescribing universal AI objectives but in providing a framework for achieving your objectives responsibly. The standard’s two-list structure (normative controls plus informative, suggested objectives) gives you both discipline and freedom.

Before diving into controls, assessments, and documentation, answer the fundamental question: What results do we want our AI to achieve?

Get this right, and ISO 42001 transforms from a compliance burden into a strategic enabler. Get it wrong, and you’re just checking boxes without knowing why.

Success with ISO 42001 implementation likely depends more on having a clear vision of what you’re trying to achieve, supported by a systematic approach to getting there, than on exhaustive documentation alone.

Note: This analysis provides educational commentary about ISO/IEC 42001:2023 based on publicly available information about the standard’s structure. For implementation, always consult the official standard document and qualified professionals. Specific metrics, timelines, and examples are illustrative and should be adapted to your organization’s context.