Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated: January 28th, 2026

What is NIST AI RMF Measure?

MEASURE is the NIST AI RMF function that answers a critical question: can you prove your AI system actually works as intended?

MEASURE in 60 Seconds: You’ve mapped your AI system’s context. Now what? MEASURE is where you test whether the system performs as intended. It’s the third function of NIST’s AI Risk Management Framework, and it creates the evidence trail that separates “we thought it worked” from “we verified it works.” UnitedHealth learned this lesson publicly when appeals data later showed that roughly 90% of appealed denials associated with the nH Predict algorithm were overturned. MEASURE won’t catch every problem, but it provides the documentation that demonstrates due diligence.

Who This Article Is For

- New to AI governance? Start here for foundational concepts in plain language.

- Compliance or security professional? You’ll find RACI matrices and framework crosswalks for implementation.

- Executive? Focus on the Business Impact section and the 60-second summary above.

This article covers MEASURE at an introductory level. For context-setting, see the NIST AI RMF MAP guide. For organizational foundations, review the GOVERN function.

Executive Summary

MEASURE is the analytical engine of NIST’s AI Risk Management Framework. It employs quantitative, qualitative, and mixed-method tools to analyze, assess, benchmark, and monitor AI risk. The function includes 22 subcategories across four categories.

Where MAP identifies what could go wrong, MEASURE determines whether it actually is going wrong. The function evaluates trustworthiness characteristics including validity, reliability, safety, security, privacy, fairness, and explainability. Skip this step and your risk decisions lack evidence.

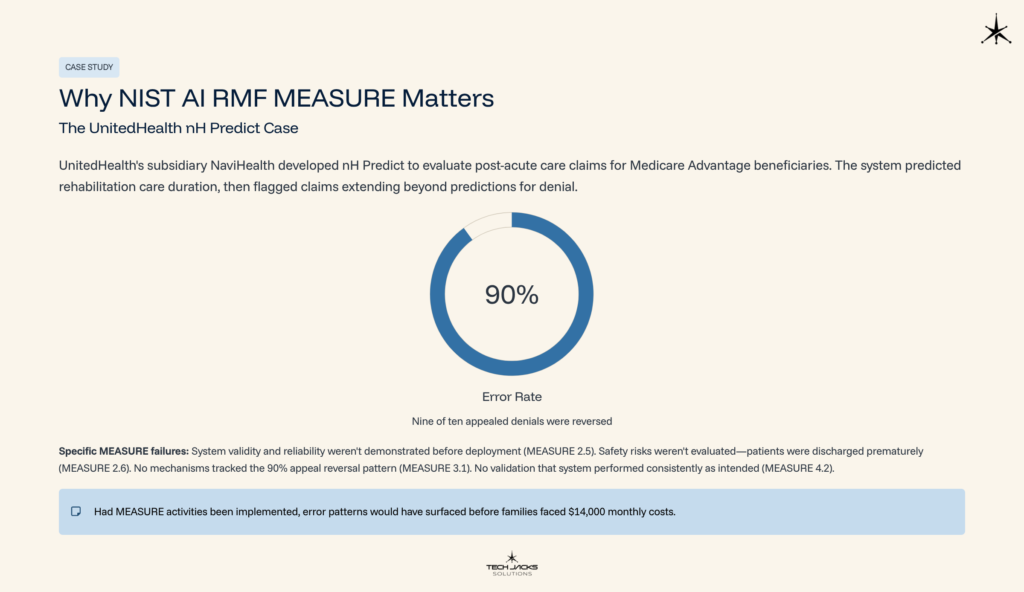

Why NIST AI RMF MEASURE Matters: A Real Example

The UnitedHealth nH Predict case demonstrates what happens when organizations deploy AI without adequate measurement.

UnitedHealth’s subsidiary NaviHealth developed an algorithm called nH Predict to evaluate post-acute care claims for Medicare Advantage beneficiaries. The system predicted how long patients needed rehabilitation care, then flagged claims extending beyond that prediction for denial. According to court filings tracked by Georgetown Law, the algorithm allegedly had a 90% error rate, a figure based on nine of ten appealed denials being reversed. STAT News reported that UnitedHealth continued using the algorithm because only 0.2% of patients filed appeals.

Specific MEASURE failures in this case:

- MEASURE 2.5 violated: System validity and reliability were not demonstrated before deployment

- MEASURE 2.6 absent: Safety risks weren’t evaluated (patients were discharged prematurely)

- MEASURE 3.1 missing: No mechanisms tracked the 90% appeal reversal pattern

- MEASURE 4.2 gap: No validation that system performed consistently as intended
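
The MEASURE 3.1 gap in the list above is the easiest one to picture in code. What follows is a minimal sketch, not a description of any system UnitedHealth or NIST uses: the `AppealStats` class, the `needs_review` function, and the 10% review threshold are all hypothetical, and the counts are invented solely to reproduce the 0.2% appeal rate and 90% reversal rate quoted earlier.

```python
from dataclasses import dataclass


@dataclass
class AppealStats:
    """Counts for one reporting period (all field names are illustrative)."""
    denials: int      # automated denials issued
    appeals: int      # denials that were appealed
    overturned: int   # appeals decided in the claimant's favor

    @property
    def appeal_rate(self) -> float:
        # Share of denials that were appealed at all.
        return self.appeals / self.denials if self.denials else 0.0

    @property
    def reversal_rate(self) -> float:
        # Share of appealed denials that were overturned.
        return self.overturned / self.appeals if self.appeals else 0.0


def needs_review(stats: AppealStats, max_reversal_rate: float = 0.10) -> bool:
    """Flag the system when too many appealed decisions are reversed.

    The 10% default is an arbitrary placeholder, not a NIST value.
    """
    return stats.reversal_rate > max_reversal_rate


# Hypothetical counts chosen only to mirror the rates quoted in the article.
period = AppealStats(denials=100_000, appeals=200, overturned=180)
print(f"appeal rate:   {period.appeal_rate:.1%}")
print(f"reversal rate: {period.reversal_rate:.1%}")
print("needs review: ", needs_review(period))
```

Tracking both rates together matters: a high reversal rate combined with a very low appeal rate suggests that most questionable decisions are never challenged, so the reversal rate alone understates how many people may be affected.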

In February 2025, a federal court allowed breach of contract claims to proceed. DLA Piper’s legal analysis noted that this ruling underscores how AI-based coverage denials can expose insurers to serious legal challenges. One plaintiff’s family paid $12,000 to $14,000 monthly out of pocket for nearly a year. In September 2025, the judge denied UnitedHealth’s attempt to limit discovery. Had MEASURE activities been implemented, the error patterns would have surfaced before families faced $14,000 monthly costs.

Business Impact: Why Executives Should Care

The UnitedHealth case isn’t isolated. Workday faces a collective action over alleged AI hiring discrimination. On May 16, 2025, Judge Rita Lin granted preliminary certification under the Age Discrimination in Employment Act, potentially including millions of job applicants. A 2024 University of Washington study by researchers Kyra Wilson and Aylin Caliskan found that AI resume screening systems preferred white-associated names 85% of the time, and never preferred Black male-associated names over white male-associated names in direct comparisons. The peer-reviewed research was presented at the AAAI/ACM Conference on AI, Ethics, and Society.

Organizations deploying AI without proper measurement face legal exposure (class actions, regulatory investigations), operational costs (system shutdowns, mandatory audits), and reputational damage. MEASURE creates documentation demonstrating due diligence. When regulators ask “how do you know this works?”, MEASURE provides answers.

Understanding NIST AI RMF MEASURE’s Role

The framework comprises four functions: Govern, Map, Measure, Manage. MAP establishes context for individual AI systems. MEASURE takes that context and applies rigorous analysis through Test, Evaluation, Verification, and Validation (TEVV) processes. The outputs feed directly into MANAGE for risk response decisions.

One critical distinction: MEASURE isn’t a one-time gate. AI systems drift over time as real-world data diverges from training data. Continuous measurement catches problems before they become lawsuits.
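
One way to make drift concrete is to compare the distribution of a model input or score at training time with the distribution seen in production. The sketch below uses the Population Stability Index (PSI), a common drift statistic that the NIST framework does not itself prescribe. The `psi` function, the bin count, and the sample data are illustrative assumptions.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample.

    Bins are equal-width over the baseline's range; live values outside
    that range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic data: the live sample is the baseline shifted upward by 0.3.
baseline = [i / 100 for i in range(100)]
live = [0.3 + i / 100 for i in range(100)]

print(f"PSI, baseline vs itself: {psi(baseline, baseline):.3f}")
print(f"PSI, baseline vs live:   {psi(baseline, live):.3f}")
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, but that cutoff is a convention rather than a requirement, and the right threshold depends on the system's risk level.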

The Four Categories of NIST AI RMF MEASURE

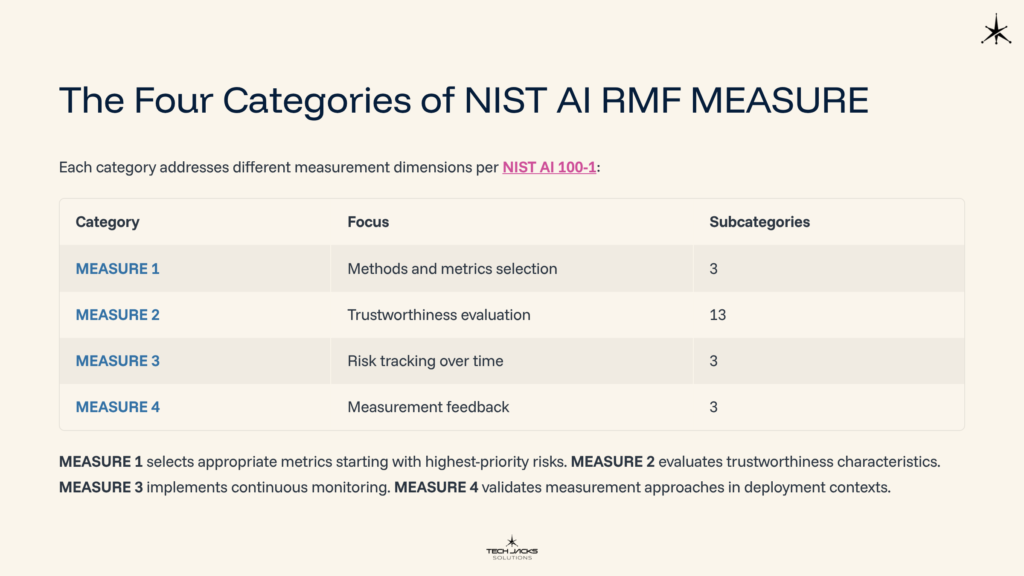

Each category addresses different measurement dimensions per NIST AI 100-1:

| Category | Focus | Subcategories |
| --- | --- | --- |
| MEASURE 1 | Methods and metrics selection | 3 |
| MEASURE 2 | Trustworthiness evaluation | 13 |
| MEASURE 3 | Risk tracking over time | 3 |
| MEASURE 4 | Measurement feedback | 3 |

MEASURE 1 selects appropriate metrics starting with highest-priority risks. MEASURE 2 evaluates trustworthiness characteristics (safety, security, fairness, privacy, explainability). MEASURE 3 implements continuous monitoring. MEASURE 4 validates measurement approaches in deployment contexts.

How to Implement NIST AI RMF MEASURE: Top 5 Starting Points

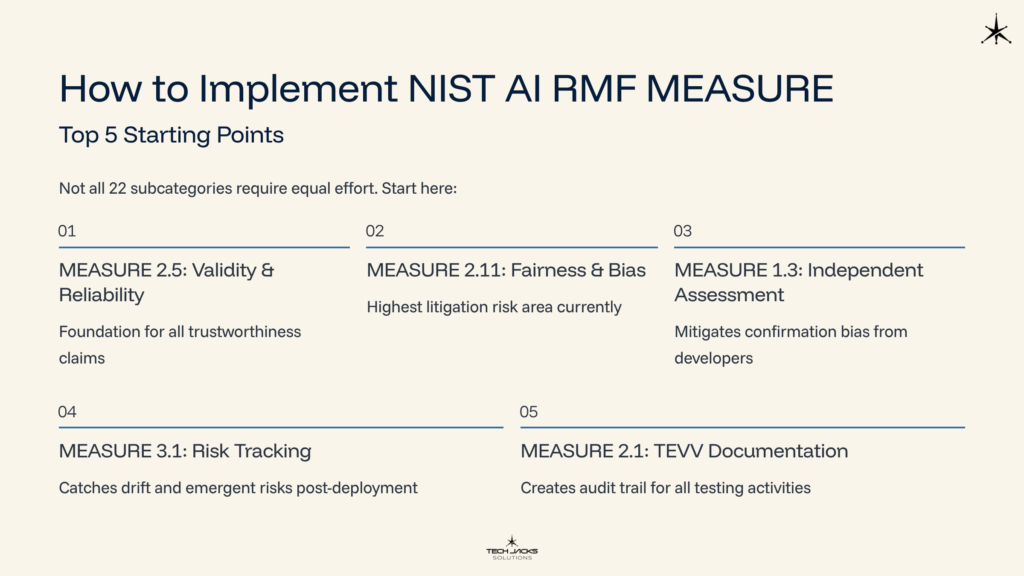

Not all 22 subcategories require equal effort. Start here:

| Priority | Subcategory | Why Start Here |
| --- | --- | --- |
| 1 | MEASURE 2.5 (Validity & Reliability) | Foundation for all trustworthiness claims |
| 2 | MEASURE 2.11 (Fairness & Bias) | Highest litigation risk area currently |
| 3 | MEASURE 1.3 (Independent Assessment) | Mitigates confirmation bias from developers |
| 4 | MEASURE 3.1 (Risk Tracking) | Catches drift and emergent risks post-deployment |
| 5 | MEASURE 2.1 (TEVV Documentation) | Creates audit trail for all testing activities |
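
For the fairness priority in the table above, a first measurement can be as simple as comparing selection rates across groups. The sketch below is one possible starting point, not a method defined by NIST: the function names, group labels, and data are hypothetical, and the 0.8 cutoff is the "four-fifths" rule of thumb from US employment-selection guidance, used here as an assumption rather than a MEASURE 2.11 threshold.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of favorable outcomes per group, from (group, selected) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical screening results; "A" and "B" are placeholder group labels.
results = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)
rates = selection_rates(results)
ratio = impact_ratio(rates)
print(rates, f"impact ratio = {ratio:.2f}")

if ratio < 0.8:  # four-fifths rule of thumb: an assumption, not a NIST threshold
    print("Selection rates differ enough to warrant a closer bias review.")
```

A single ratio like this is only a screening signal. It says nothing about why the rates differ, so a low value should trigger deeper analysis rather than serve as a verdict.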

RACI Matrix for MEASURE Implementation

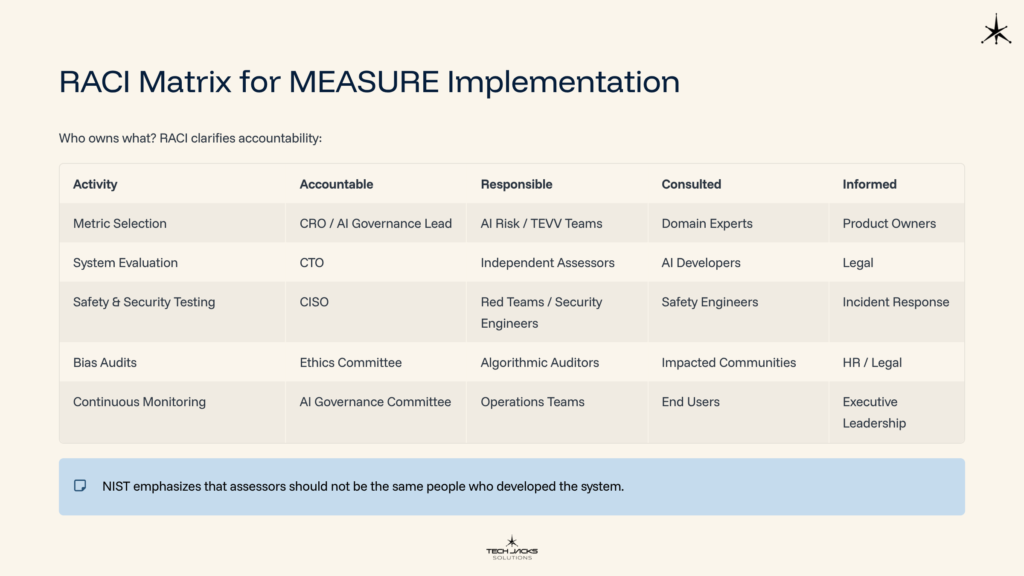

Who owns what? RACI clarifies accountability:

| Activity | Accountable | Responsible | Consulted | Informed |
| --- | --- | --- | --- | --- |
| Metric Selection | CRO / AI Governance Lead | AI Risk / TEVV Teams | Domain Experts | Product Owners |
| System Evaluation | CTO | Independent Assessors | AI Developers | Legal |
| Safety & Security Testing | CISO | Red Teams / Security Engineers | Safety Engineers | Incident Response |
| Bias Audits | Ethics Committee | Algorithmic Auditors | Impacted Communities | HR / Legal |
| Continuous Monitoring | AI Governance Committee | Operations Teams | End Users | Executive Leadership |

NIST emphasizes that assessors should not be the same people who developed the system.

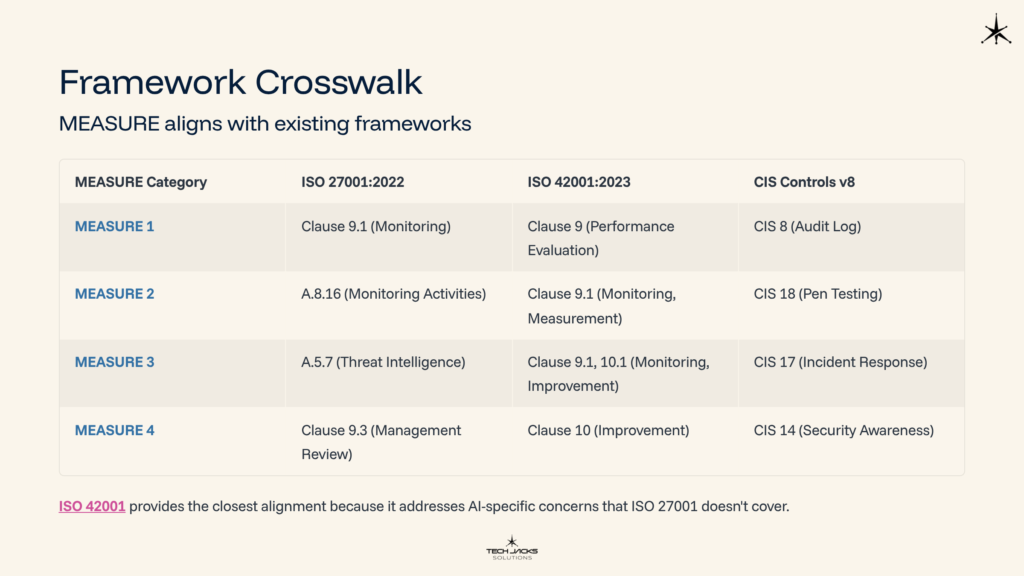

Framework Crosswalk

MEASURE aligns with existing frameworks:

| MEASURE Category | ISO 27001:2022 | ISO 42001:2023 | CIS Controls v8 |
| --- | --- | --- | --- |
| MEASURE 1 | Clause 9.1 (Monitoring) | Clause 9 (Performance Evaluation) | CIS 8 (Audit Log) |
| MEASURE 2 | A.8.16 (Monitoring Activities) | Clause 9.1 (Monitoring, Measurement) | CIS 18 (Pen Testing) |
| MEASURE 3 | A.5.7 (Threat Intelligence) | Clause 9.1, 10.1 (Monitoring, Improvement) | CIS 17 (Incident Response) |
| MEASURE 4 | Clause 9.3 (Management Review) | Clause 10 (Improvement) | CIS 14 (Security Awareness) |

ISO 42001 provides the closest alignment because it addresses AI-specific concerns that ISO 27001 doesn’t cover.

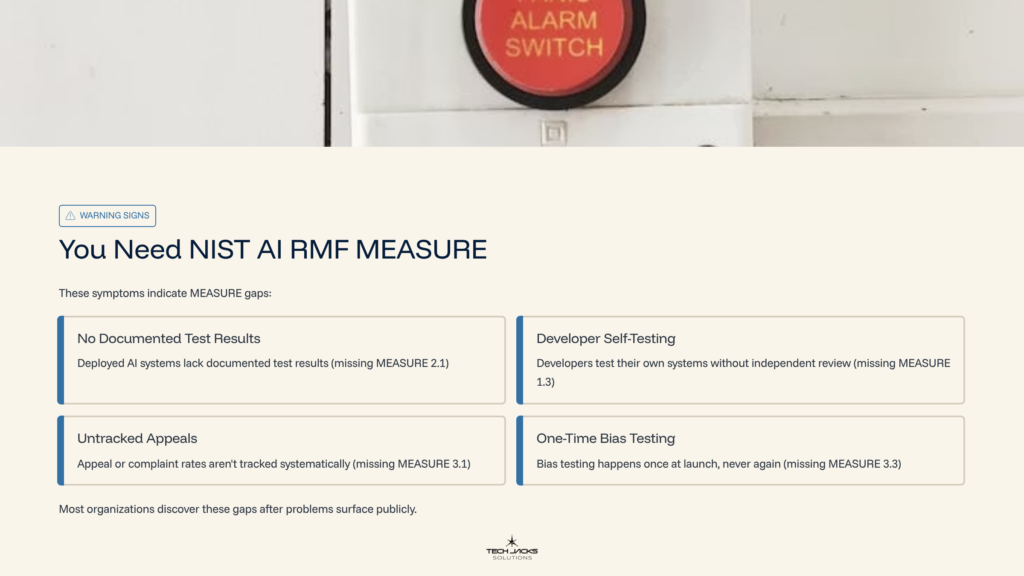

Warning Signs You Need NIST AI RMF MEASURE

These symptoms indicate MEASURE gaps:

- No documented test results for deployed AI systems (missing MEASURE 2.1)

- Developers test their own systems without independent review (missing MEASURE 1.3)

- Appeal or complaint rates aren’t tracked systematically (missing MEASURE 3.1)

- Bias testing happens once at launch, never again (missing MEASURE 3.3)

Most organizations discover these gaps after problems surface publicly.

Frequently Asked Questions

Q: What’s the difference between MAP and MEASURE? MAP identifies risks and establishes context. MEASURE quantifies and tracks those risks through testing and monitoring.

Q: How often should MEASURE activities occur? Initial TEVV before deployment, then continuously during operation. Frequency depends on risk level.

Q: Can MEASURE be outsourced? Testing can be. Accountability cannot. You remain responsible for AI systems you deploy.

Q: What if we can’t measure certain risks? Document them explicitly. MEASURE 1.1 calls for documenting the risks or trustworthiness characteristics that will not, or cannot, be measured.
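
The last answer can be turned into a simple register check. This is a minimal sketch under stated assumptions: the `RiskEntry` structure, its field names, and the sample risks are hypothetical and do not come from NIST AI 100-1. The idea is only that every mapped risk should carry either a metric or a written reason why it is not measured.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RiskEntry:
    """One row in a hypothetical risk register (field names are illustrative)."""
    risk: str
    metric: Optional[str] = None             # None means no metric is in use
    unmeasured_reason: Optional[str] = None  # why the risk is not measured


def undocumented_gaps(register: List[RiskEntry]) -> List[str]:
    """Return risks that have neither a metric nor a recorded reason."""
    return [
        entry.risk
        for entry in register
        if entry.metric is None and not entry.unmeasured_reason
    ]


# Invented example entries.
register = [
    RiskEntry("Premature discharge", metric="appeal reversal rate"),
    RiskEntry("Long-term patient harm", unmeasured_reason="outcome data unavailable"),
    RiskEntry("Clinician over-reliance on the score"),
]
print("Needs a metric or a documented reason:", undocumented_gaps(register))
```

Only the third entry is flagged here, because it has neither a metric nor an explanation. The second entry passes the check even though it is unmeasured, since the gap itself is documented.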

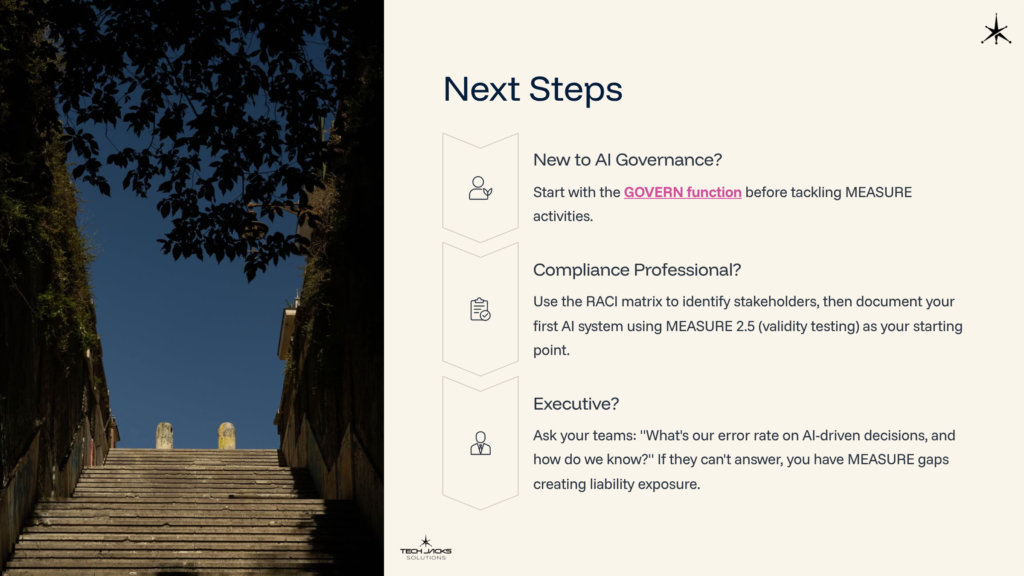

Next Steps

New to AI governance? Start with the GOVERN function before tackling MEASURE activities.

Compliance professional? Use the RACI matrix to identify stakeholders, then document your first AI system using MEASURE 2.5 (validity testing) as your starting point.

Executive? Ask your teams: “What’s our error rate on AI-driven decisions, and how do we know?” If they can’t answer, you have MEASURE gaps creating liability exposure.

Article based on NIST AI 100-1 (Artificial Intelligence Risk Management Framework) and supporting documentation from the NIST Trustworthy and Responsible AI Resource Center.

Case References:

- Estate of Gene B. Lokken v. UnitedHealth Group, Case No. 0:23-cv-03514-JRT-SGE (D. Minn. 2025)

- Mobley v. Workday, Inc., Case No. 23-cv-00770-RFL (N.D. Cal. 2025)

Research Citations:

- Wilson, K. & Caliskan, A. (2024). “Gender, Race, and Intersectional Bias in Resume Screening via Language Model Retrieval.” Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, 7(1), 1578-1590.