Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated: January 2nd, 2026

What is NIST AI RMF MAP?

MAP in 60 Seconds: Before you deploy an AI system, it makes sense to understand what it does, who it affects, and what could go wrong. That’s NIST MAP. It’s the “context setting” function of NIST’s AI Risk Management Framework. Skip it and you’re flying blind. A tenant screening company learned this the hard way when inadequate context mapping contributed to a $2.275 million discrimination settlement. MAP won’t guarantee compliance, but it forces the right questions before problems become lawsuits.

Who This Article Is For

- New to AI governance? Start here. We’ll explain the concepts in plain language.

- Compliance or security professional? You’ll find framework crosswalks and RACI matrices to jumpstart implementation.

- Executive? Focus on the “Business Impact” section and the 60-second summary above.

This article covers NIST MAP at an introductory level. For organizational foundations, see the NIST AI RMF GOVERN guide. Deeper dives into MEASURE and MANAGE functions are coming soon.

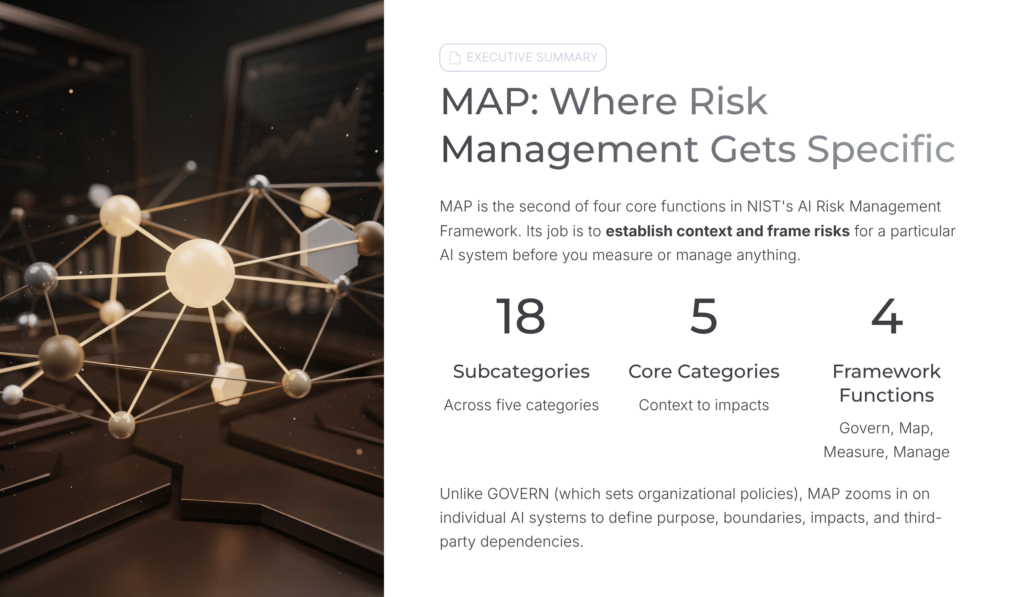

Executive Summary

MAP is where risk management gets specific. It’s the second of four core functions in NIST’s AI Risk Management Framework, and its job is to establish context and frame risks for a particular AI system before you measure or manage anything.

The function includes 18 subcategories across five categories. Unlike GOVERN (which sets organizational policies), NIST MAP zooms in on individual AI systems to define their purpose, boundaries, potential impacts, and third-party dependencies. Skip this step and you’re guessing: you can’t measure risks accurately without first understanding what you’re dealing with.

Why NIST AI RMF MAP Matters: A Real Example

The SafeRent Solutions case illustrates what can happen when the context-mapping work that MAP calls for is skipped.

SafeRent deployed an AI tenant screening algorithm that assigned scores to rental applicants. Based on the allegations, the deployment context was never properly mapped. In November 2024, U.S. District Judge Angel Kelley approved a $2.275 million settlement resolving claims that the algorithm disproportionately harmed Black and Hispanic housing voucher recipients (Louis, et al. v. SafeRent Solutions, D. Mass.).

Specific MAP failures:

- MAP 1.1 unaddressed: Context-specific impacts on protected communities weren’t documented

- MAP 2.2 absent: Knowledge limits weren’t documented (plaintiffs argued that credit history doesn’t reflect whether voucher holders can pay their rent)

- MAP 3.2 missing: Potential costs to individuals weren’t examined against risk tolerance

- MAP 5.1 gap: Likelihood and magnitude of discriminatory impacts were never characterized

Had SafeRent implemented MAP, these subcategories would have required documenting deployment context, system limitations, and impact assessments before deployment.

Business Impact: Why Executives Should Care

The SafeRent case isn’t unique. Organizations deploying AI without proper context mapping face:

- Legal liability — Discrimination claims, regulatory fines, class action settlements

- Reputational damage — Public incidents erode customer trust and brand value

- Operational disruption — Forced system shutdowns, mandated product changes, ongoing monitoring requirements

NIST MAP doesn’t guarantee you’ll avoid these outcomes. But it creates documentation that demonstrates due diligence, surfaces risks before deployment, and establishes the foundation for defensible decisions. When regulators or courts ask “what did you know and when did you know it,” MAP provides answers.

Understanding NIST AI RMF MAP’s Role

The AI RMF Core has four functions: GOVERN, MAP, MEASURE, and MANAGE.

GOVERN establishes organizational policies and accountability. NIST MAP focuses on system-specific context setting. It answers the critical questions: What is this AI system designed to do? Who does it affect? What are its limitations? What could go wrong?

The information gathered during MAP feeds directly into MEASURE (risk assessment) and MANAGE (risk response). Skip MAP and those functions operate blind.

The Five Categories of NIST AI RMF MAP

Each category addresses a different context dimension per NIST AI 100-1:

| Category | Focus | Subcategories |
|---|---|---|
| MAP 1 | Context is established | 6 |
| MAP 2 | System categorization | 3 |
| MAP 3 | Capabilities, benefits, costs | 5 |
| MAP 4 | Third-party component risks | 2 |
| MAP 5 | Impact characterization | 2 |

MAP 1 documents intended purposes, legal requirements, and risk tolerances. MAP 2 defines technical methods, knowledge limits, and TEVV (Test, Evaluation, Verification, and Validation) considerations. MAP 3 examines benefits, costs, and operator proficiency. MAP 4 maps third-party software, data, and IP risks. MAP 5 characterizes impact likelihood/magnitude and establishes feedback mechanisms.
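To make the structure concrete, here is a minimal Python sketch that encodes the five categories and expands them into subcategory IDs, for example to seed a tracking sheet. The variable and function names are hypothetical; only the focus areas and subcategory counts come from the table above.

```python
# Hypothetical encoding of the MAP structure: category -> (focus, subcategory count).
# Counts follow the table above (NIST AI 100-1); the names are illustrative only.
MAP_CATEGORIES: dict[str, tuple[str, int]] = {
    "MAP 1": ("Context is established", 6),
    "MAP 2": ("System categorization", 3),
    "MAP 3": ("Capabilities, benefits, costs", 5),
    "MAP 4": ("Third-party component risks", 2),
    "MAP 5": ("Impact characterization", 2),
}


def subcategory_ids() -> list[str]:
    """Expand each category into IDs such as 'MAP 1.1' through 'MAP 1.6'."""
    ids: list[str] = []
    for category, (_focus, count) in MAP_CATEGORIES.items():
        ids.extend(f"{category}.{n}" for n in range(1, count + 1))
    return ids


if __name__ == "__main__":
    ids = subcategory_ids()
    print(len(ids))   # 18
    print(ids[:3])    # ['MAP 1.1', 'MAP 1.2', 'MAP 1.3']
```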

Top 5 NIST MAP Subcategories by Implementation Ease

Not all subcategories require equal effort. Start here:

| Priority | Subcategory | Why Start Here |
|---|---|---|
| 1 | MAP 1.1 (Intended Purpose & Context) | Foundation for everything else; primarily documentation |
| 2 | MAP 2.1 (Define Tasks & Methods) | Technical teams already know this; they just need to write it down |
| 3 | MAP 1.5 (Risk Tolerance) | Leverages existing GOVERN outputs; enables decisions |
| 4 | MAP 2.2 (Knowledge Limits) | Prevents scope creep and misuse; low technical barrier |
| 5 | MAP 4.1 (Third-Party Risks) | Most organizations use external AI, so early visibility matters |

These five establish minimum viable context for informed risk decisions.
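One way to capture this minimum viable context is a small per-system record that flags which starter subcategories are still undocumented. This is a sketch under assumptions: NIST does not prescribe a schema, so the class, the field names, and the example system below are all hypothetical.

```python
from dataclasses import dataclass, fields

# Which starter subcategory each (hypothetical) field provides evidence for.
FIELD_TO_SUBCATEGORY = {
    "intended_purpose": "MAP 1.1",
    "tasks_and_methods": "MAP 2.1",
    "risk_tolerance": "MAP 1.5",
    "knowledge_limits": "MAP 2.2",
    "third_party_review": "MAP 4.1",
}


@dataclass
class MinimalMapRecord:
    """Illustrative per-system record; NIST does not prescribe a schema."""

    system_name: str
    intended_purpose: str = ""    # MAP 1.1: purpose, users, deployment context
    tasks_and_methods: str = ""   # MAP 2.1: e.g., classifier, recommender
    risk_tolerance: str = ""      # MAP 1.5: confirmed from GOVERN outputs
    knowledge_limits: str = ""    # MAP 2.2: what the system should not be used for
    third_party_review: str = ""  # MAP 4.1: external models, data, and IP reviewed

    def missing_subcategories(self) -> list[str]:
        """Return starter subcategories whose field is still blank."""
        return [
            FIELD_TO_SUBCATEGORY[f.name]
            for f in fields(self)
            if f.name in FIELD_TO_SUBCATEGORY and not getattr(self, f.name).strip()
        ]


record = MinimalMapRecord(
    system_name="ticket-router",  # hypothetical system
    intended_purpose="Route customer support tickets to the right queue",
    tasks_and_methods="Text classifier fine-tuned on historical tickets",
)
print(record.missing_subcategories())  # ['MAP 1.5', 'MAP 2.2', 'MAP 4.1']
```

Treating a blank field as a gap keeps the check simple; a real inventory would likely also track owners, dates, and links to supporting evidence.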

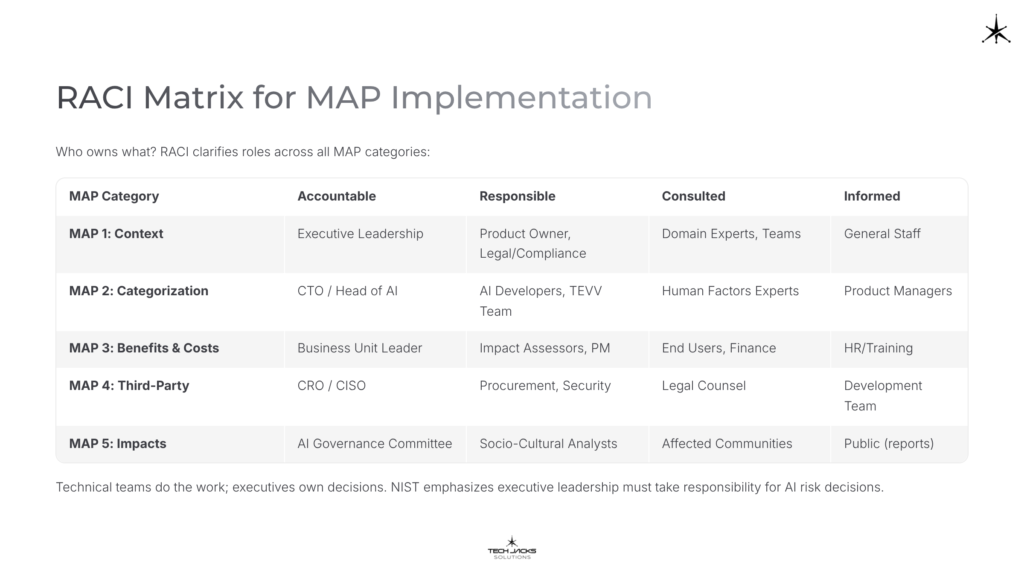

RACI Matrix for MAP Implementation

Who owns what? RACI (Responsible, Accountable, Consulted, Informed) clarifies roles:

| MAP Category | Accountable | Responsible | Consulted | Informed |
|---|---|---|---|---|
| MAP 1: Context | Executive Leadership | Product Owner, Legal/Compliance | Domain Experts, Interdisciplinary Teams | General Staff |
| MAP 2: Categorization | CTO / Head of AI | AI Developers, TEVV Team | Human Factors Experts | Product Managers |
| MAP 3: Benefits & Costs | Business Unit Leader | Impact Assessors, Product Manager | End Users, Finance | HR/Training |
| MAP 4: Third-Party | CRO / CISO | Procurement, Security Lead | Legal Counsel | Development Team |
| MAP 5: Impacts | AI Governance Committee | Socio-Cultural Analysts | Affected Communities | Public (via reports) |

Technical teams do the work; executives own decisions. NIST emphasizes that executive leadership must take responsibility for decisions about AI risks (GOVERN 2.3 in the AI RMF Playbook).
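If you keep the RACI in code or configuration, it becomes easy to sanity-check. The sketch below encodes the RACI table above and tests two common RACI conventions: exactly one Accountable party and at least one Responsible party per activity. These are general RACI practice rather than NIST requirements, and the helper names are hypothetical.

```python
# Hypothetical encoding of the RACI table above: activity -> RACI letter -> roles.
Raci = dict[str, dict[str, list[str]]]

RACI: Raci = {
    "MAP 1: Context": {
        "A": ["Executive Leadership"],
        "R": ["Product Owner", "Legal/Compliance"],
        "C": ["Domain Experts", "Interdisciplinary Teams"],
        "I": ["General Staff"],
    },
    "MAP 2: Categorization": {
        "A": ["CTO / Head of AI"],
        "R": ["AI Developers", "TEVV Team"],
        "C": ["Human Factors Experts"],
        "I": ["Product Managers"],
    },
    "MAP 3: Benefits & Costs": {
        "A": ["Business Unit Leader"],
        "R": ["Impact Assessors", "Product Manager"],
        "C": ["End Users", "Finance"],
        "I": ["HR/Training"],
    },
    "MAP 4: Third-Party": {
        "A": ["CRO / CISO"],
        "R": ["Procurement", "Security Lead"],
        "C": ["Legal Counsel"],
        "I": ["Development Team"],
    },
    "MAP 5: Impacts": {
        "A": ["AI Governance Committee"],
        "R": ["Socio-Cultural Analysts"],
        "C": ["Affected Communities"],
        "I": ["Public (via reports)"],
    },
}


def validate_raci(matrix: Raci) -> list[str]:
    """Flag activities that break two common RACI conventions."""
    problems: list[str] = []
    for activity, roles in matrix.items():
        if len(roles.get("A", [])) != 1:
            problems.append(f"{activity}: needs exactly one Accountable")
        if not roles.get("R"):
            problems.append(f"{activity}: needs at least one Responsible")
    return problems


def roles_for(role: str, matrix: Raci = RACI) -> list[tuple[str, str]]:
    """List (activity, RACI letter) pairs where the given role appears."""
    return [
        (activity, letter)
        for activity, assignments in matrix.items()
        for letter, names in assignments.items()
        if role in names
    ]


if __name__ == "__main__":
    print(validate_raci(RACI))         # []
    print(roles_for("Legal Counsel"))  # [('MAP 4: Third-Party', 'C')]
```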

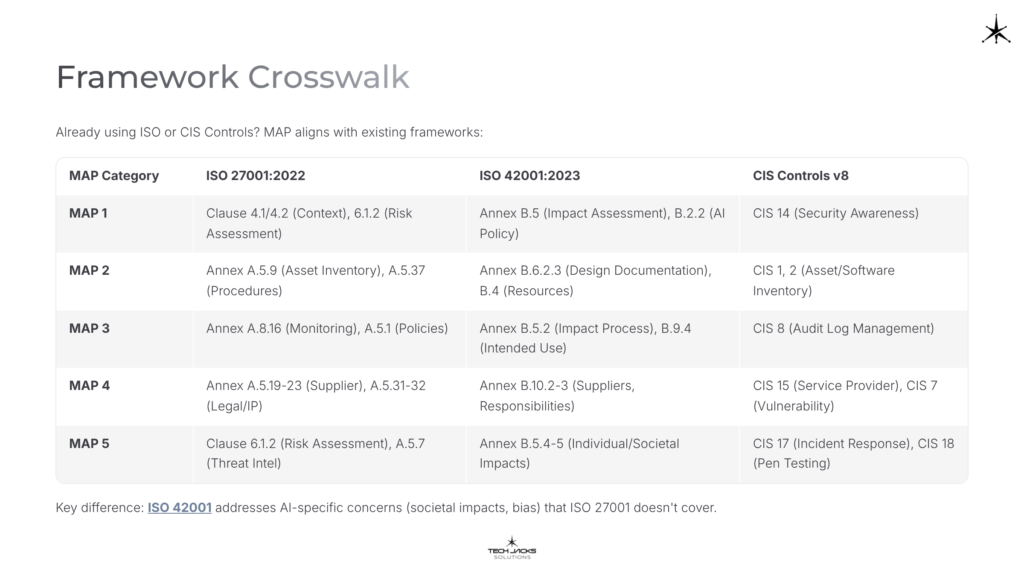

Framework Crosswalk

Already using ISO or CIS Controls? MAP aligns with existing frameworks:

| MAP Category | ISO 27001:2022 | ISO 42001:2023 | CIS Controls v8 |
|---|---|---|---|
| MAP 1 | Clause 4.1/4.2 (Context), 6.1.2 (Risk Assessment) | Annex B.5 (Impact Assessment), B.2.2 (AI Policy) | CIS 14 (Security Awareness) |
| MAP 2 | Annex A.5.9 (Asset Inventory), A.5.37 (Procedures) | Annex B.6.2.3 (Design Documentation), B.4 (Resources) | CIS 1, 2 (Asset/Software Inventory) |
| MAP 3 | Annex A.8.16 (Monitoring), A.5.1 (Policies) | Annex B.5.2 (Impact Process), B.9.4 (Intended Use) | CIS 8 (Audit Log Management) |
| MAP 4 | Annex A.5.19-23 (Supplier), A.5.31-32 (Legal/IP) | Annex B.10.2-3 (Suppliers, Responsibilities) | CIS 15 (Service Provider), CIS 7 (Vulnerability) |
| MAP 5 | Clause 6.1.2 (Risk Assessment), A.5.7 (Threat Intel) | Annex B.5.4-5 (Individual/Societal Impacts) | CIS 17 (Incident Response), CIS 18 (Pen Testing) |

Key difference: ISO 42001 addresses AI-specific concerns (societal impacts, bias) that ISO 27001 doesn’t cover.

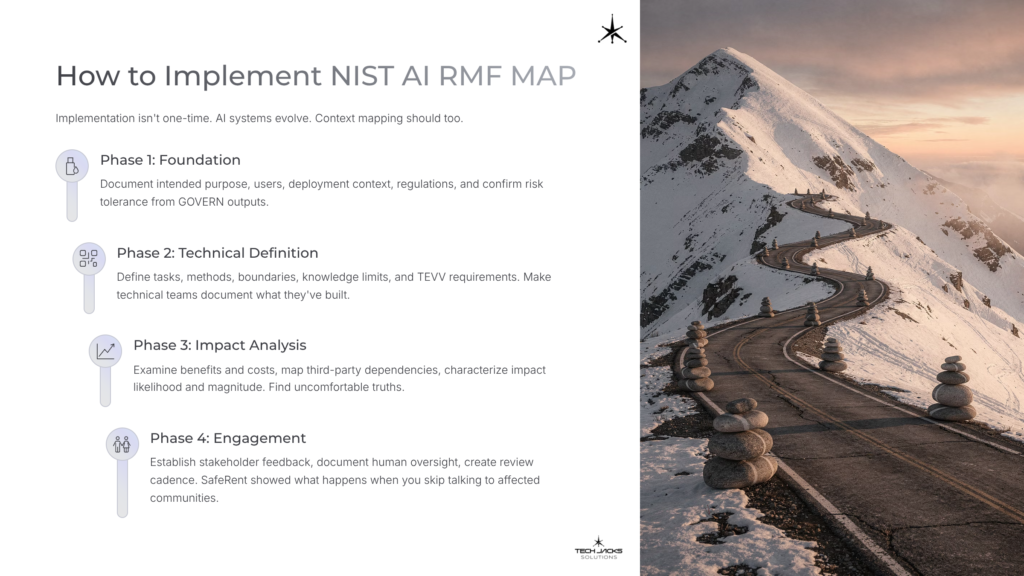

How to Implement NIST AI RMF MAP

Implementation isn’t one-time. AI systems evolve. Context mapping should too.

Phase 1: Foundation — Document intended purpose, users, deployment context, regulations, and confirm risk tolerance from GOVERN outputs.

Phase 2: Technical Definition — Define tasks, methods, boundaries, knowledge limits, and TEVV requirements. Make technical teams document what they’ve built.

Phase 3: Impact Analysis — Examine benefits and costs, map third-party dependencies, characterize impact likelihood and magnitude. This is where you find uncomfortable truths.

Phase 4: Engagement — Establish stakeholder feedback, document human oversight, create review cadence. SafeRent showed what happens when you skip talking to affected communities.
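Because mapping repeats as systems change, it can help to track phase progress and review timing together. This minimal sketch assumes the phases run in order and uses a hypothetical 90-day review cadence; neither the deliverable names nor the cadence come from NIST, and the function names are illustrative.

```python
from datetime import date, timedelta
from typing import Optional

# The four phases described above; deliverable names paraphrase the text and
# are illustrative, not a NIST-defined checklist.
PHASES: list[tuple[str, list[str]]] = [
    ("Foundation", ["intended purpose", "users", "deployment context",
                    "regulations", "risk tolerance"]),
    ("Technical Definition", ["tasks", "methods", "boundaries",
                              "knowledge limits", "TEVV requirements"]),
    ("Impact Analysis", ["benefits and costs", "third-party dependencies",
                         "impact likelihood and magnitude"]),
    ("Engagement", ["stakeholder feedback", "human oversight", "review cadence"]),
]


def next_phase(completed: set[str]) -> Optional[str]:
    """Return the first phase that still has an open deliverable, or None."""
    for phase, deliverables in PHASES:
        if any(item not in completed for item in deliverables):
            return phase
    return None


def review_due(last_review: date, today: date, cadence_days: int = 90) -> bool:
    """True once the MAP documentation is at least `cadence_days` old.

    The 90-day default is a placeholder assumption; take the real cadence
    from your GOVERN policies or the system's risk level.
    """
    return today - last_review >= timedelta(days=cadence_days)


done = {"intended purpose", "users", "deployment context", "regulations", "risk tolerance"}
print(next_phase(done))                                # Technical Definition
print(review_due(date(2026, 1, 1), date(2026, 4, 1)))  # True (90 days elapsed)
```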

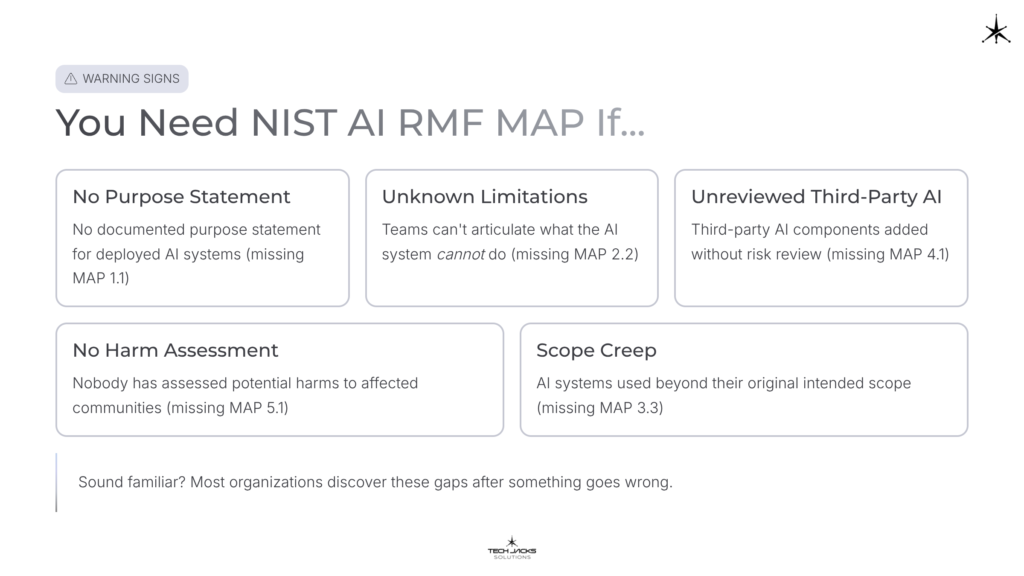

Warning Signs You Need NIST AI RMF MAP

These symptoms indicate MAP gaps:

- No documented purpose statement for deployed AI systems (missing MAP 1.1)

- Teams can’t articulate what the AI system cannot do (missing MAP 2.2)

- Third-party AI components added without risk review (missing MAP 4.1)

- Nobody has assessed potential harms to affected communities (missing MAP 5.1)

- AI systems used beyond their original intended scope (missing MAP 3.3)

Sound familiar? Many organizations discover these gaps only after something goes wrong.
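The warning signs above can double as a quick self-assessment. This sketch, with hypothetical names, turns each sign into a yes/no question paired with the subcategory it points to, then reports gaps from a team’s answers.

```python
# Warning signs from the list above, rephrased as yes/no questions and keyed
# by the subcategory each one points to. Names are hypothetical.
WARNING_SIGNS: dict[str, str] = {
    "MAP 1.1": "Is there a documented purpose statement for this AI system?",
    "MAP 2.2": "Can the team articulate what the system cannot do?",
    "MAP 4.1": "Were third-party AI components risk-reviewed before being added?",
    "MAP 5.1": "Has anyone assessed potential harms to affected communities?",
    "MAP 3.3": "Is the system used only within its documented intended scope?",
}


def map_gaps(answers: dict[str, bool]) -> list[str]:
    """Return subcategories whose question was answered 'no' or left unanswered."""
    return [sub for sub in WARNING_SIGNS if not answers.get(sub, False)]


answers = {"MAP 1.1": True, "MAP 2.2": False, "MAP 4.1": True}
print(map_gaps(answers))  # ['MAP 2.2', 'MAP 5.1', 'MAP 3.3']
```

Unanswered questions count as gaps here, on the reasoning that if nobody can answer, the documentation probably doesn’t exist.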

Frequently Asked Questions

Q: What’s the difference between GOVERN and MAP? GOVERN operates at the organizational level (policies, accountability). MAP operates at the AI system level (context, impacts for a specific system).

Q: Do we need MAP for every AI system? Yes, but depth varies. High-risk systems require comprehensive mapping; low-risk tools need abbreviated documentation.

Q: Can MAP be done after deployment? Yes, but its value is highest during design when findings can influence architecture.

Q: How does MAP relate to MEASURE and MANAGE? MAP provides context for risk assessment (MEASURE) and response (MANAGE). Without MAP, those functions lack essential inputs.

Next Steps

If you’re new to AI governance: Start with the GOVERN function to establish organizational foundations before tackling system-specific MAP activities.

If you’re in compliance or security: Use the RACI matrix above to identify stakeholders, then document your first AI system using MAP 1.1 (intended purpose) as your starting point.

If you’re an executive: Ensure your teams can answer the Warning Signs questions. If they can’t, you have MAP gaps that create liability exposure.

Article based on NIST AI 100-1 (Artificial Intelligence Risk Management Framework) and supporting documentation from the NIST Trustworthy and Responsible AI Resource Center.