Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated: 10/30/2025



Your doctor’s office can now screen for diabetic eye disease without sending you to a specialist. The system (IDx-DR, authorized by the FDA in 2018) catches 87 out of 100 cases that need treatment. It learned by studying thousands of retinal images, not by following a checklist someone programmed.

That’s what makes machine learning different.

A German screening program tested AI on nearly half a million women. Radiologists using the system found 17.6% more breast cancers than those reading mammograms the traditional way. Same screening program, better results. The AI learned patterns from previous scans that even experienced radiologists might miss.

Or look at manufacturing. Factories wire up equipment with vibration sensors and temperature monitors. The data feeds into systems that predict failures days before they happen, cutting maintenance costs by 60% in some plants. Nobody programmed rules for every grinding bearing or overheating motor. The system learned what trouble looks like.

Machine learning matters whether you’re building products, making business decisions, or just trying to understand why technology keeps getting better at things we thought only humans could do.

What is Machine Learning? by Lisa YuWhat Is Machine Learning?

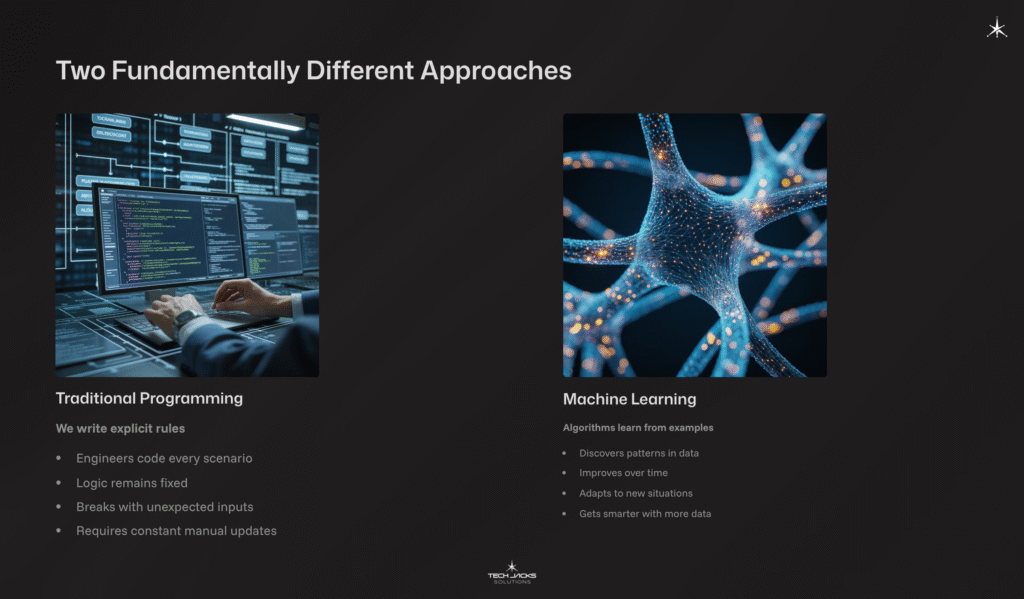

Machine learning is a computational technique that enables systems to learn from data or experience and improve their performance over time. Unlike traditional programming where you explicitly code every rule and decision, ML systems optimize their behavior by identifying patterns in data.

Here’s the crucial difference: In traditional programming, a developer specifies exact computational steps. Medical imaging techniques like CT, MRI, and PET generate vast amounts of data that require efficient analysis and interpretation. Writing rules to identify every possible abnormality in a medical scan? Nearly impossible. Machine learning flips this. Feed the system thousands of labeled images (normal tissue versus tumors), and it learns to distinguish patterns radiologists might spend years learning to recognize.

The system trains on existing data, discovers patterns, then applies those patterns to new situations. The payoff can be measured: research from Deloitte indicates predictive maintenance can decrease maintenance costs by up to 10% while boosting equipment uptime by as much as 20%. Those gains come from patterns learned from data, not from anyone hand-coding new rules.

Why Machine Learning Matters Now

Three converging factors make this the ML era: massive datasets, powerful computing hardware, and refined algorithms.

Modern devices generate vast amounts of data. Companies like Google and Meta process billions of images, videos, and text documents daily. The exponential growth in data generation over the past decades has created the conditions necessary for machine learning to flourish.

Graphics Processing Units (GPUs), originally designed for video games, turned out to be exceptionally efficient at the mathematical operations ML requires. GPU acceleration has dramatically reduced training times for neural networks. Tasks that previously required days or weeks of computation can now complete in hours or minutes, depending on model complexity and dataset size.

Algorithmic breakthroughs, particularly in deep learning since 2012, enabled capabilities that seemed impossible a decade ago. Computer vision systems now identify objects in images with accuracy matching human experts. Language models write coherent text, translate between languages, and answer complex questions.

The Core Concept: Learning Without Explicit Programming

Traditional software follows instructions. ML systems learn from examples.

Consider teaching a child to identify animals. You don’t provide a detailed specification (“four legs, fur, specific ear shape”). Instead, you show them dozens of pictures: “This is a dog. This is a cat.” They learn to distinguish features without you explicitly defining every characteristic.

ML works similarly. The system examines data, identifies patterns, builds an internal model representing those patterns, then applies that model to new data. This process is called training, and the resulting internal representation is the model.

The model isn’t pre-programmed logic. It’s a mathematical construct whose behavior depends entirely on the training data used to create it. Feed it different examples, and you’ll get a different model with different capabilities and blind spots.

Four Fundamental Approaches to Machine Learning

Machine learning splits into four primary paradigms based on how systems learn:

Supervised Learning

The system learns from labeled examples. You provide input-output pairs, and it learns the relationship between them.

Think of it as learning with a teacher who provides correct answers. Show the system thousands of images labeled “cat” or “dog,” and it learns to classify new images correctly. Feed it historical house prices with features like square footage and location, and it learns to predict prices for new listings.
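To make the house-price example concrete, here is a minimal sketch of supervised learning with a single feature. The listings are invented for illustration, and `fit_line` and `predict` are hypothetical helper names, not part of any library. The sketch fits a straight line by ordinary least squares, which is only one of many supervised methods.

```python
# Hypothetical toy data: (square footage, sale price in thousands of dollars).
# These numbers are invented for illustration only.
listings = [(1000, 200.0), (1500, 300.0), (2000, 400.0), (2500, 500.0)]

def fit_line(pairs):
    """Ordinary least squares for one feature: price = slope * sqft + intercept."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, sqft):
    """Apply the learned line to a new, unseen listing."""
    slope, intercept = model
    return slope * sqft + intercept

model = fit_line(listings)        # training: learn parameters from labeled pairs
estimate = predict(model, 1800)   # inference: price estimate for a new listing
```

With this perfectly linear toy data the fitted slope is 0.2 (thousand dollars per square foot), so the estimate for an 1,800-square-foot listing comes out at about 360. Real price data is noisy and needs many more features.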

Supervised learning powers most commercial ML applications because businesses have clear targets they want to predict: Will this customer cancel their subscription? Is this transaction fraudulent? What product should we recommend?

Unsupervised Learning

No labels here. The system explores data looking for inherent patterns and structures.

Customer segmentation exemplifies this approach. Feed purchase history into a clustering algorithm, and it groups similar customers without being told what makes a “good” grouping. Netflix might discover viewer segments you never explicitly defined: binge-watchers who prefer international content, weekend-only viewers who love documentaries, or families sharing accounts with diverse tastes.

Unsupervised learning excels at discovering unknown patterns and reducing complex data to essential features.
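The clustering idea can be sketched with a bare-bones k-means loop. This is an illustrative sketch, not a production implementation: the customer data is invented, the starting centroids are chosen by hand, and real libraries add smarter initialization and convergence checks.

```python
def kmeans(points, centroids, iterations=10):
    """Plain k-means on 2-D points with caller-supplied starting centroids."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its assigned points.
        # An empty cluster keeps its previous centroid.
        centroids = [
            (sum(p[0] for p in cluster) / len(cluster),
             sum(p[1] for p in cluster) / len(cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical customers: (orders per month, average order value in dollars).
customers = [(1, 20), (2, 25), (1, 22), (10, 200), (12, 210), (11, 190)]
centroids, clusters = kmeans(customers, centroids=[(1, 20), (10, 200)])
```

No labels were supplied, yet the loop separates the three occasional low-spend customers from the three frequent high-spend ones. The number of clusters is still a choice the analyst has to make.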

Semi-Supervised Machine Learning

This approach uses both labeled and unlabeled data during training. Why does this matter? Labeling is expensive. Getting doctors to annotate 10,000 medical images costs serious money. But you might have a million unlabeled images sitting around. Semi-supervised learning trains on your small labeled set, then extends that knowledge using patterns it finds in the unlabeled data.
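One simple way to do this is self-training, also called pseudo-labeling. The sketch below assumes a one-dimensional feature and a nearest-mean classifier, both chosen only to keep the example short. The data and the helper names `class_means` and `nearest_label` are hypothetical.

```python
def class_means(examples):
    """Average feature value per label from (value, label) pairs."""
    grouped = {}
    for value, label in examples:
        grouped.setdefault(label, []).append(value)
    return {label: sum(values) / len(values) for label, values in grouped.items()}

def nearest_label(means, value):
    """Predict the label whose mean is closest to `value`."""
    return min(means, key=lambda label: abs(means[label] - value))

# Hypothetical data: two expensive expert labels, five cheap unlabeled readings.
labeled = [(1.0, "normal"), (9.0, "abnormal")]
unlabeled = [2.0, 3.0, 4.0, 8.0, 8.5]

# Step 1: train on the small labeled set only.
initial_means = class_means(labeled)

# Step 2: pseudo-label the unlabeled pool with the initial model.
pseudo_labeled = [(value, nearest_label(initial_means, value)) for value in unlabeled]

# Step 3: retrain on labeled plus pseudo-labeled examples.
refined_means = class_means(labeled + pseudo_labeled)

before = nearest_label(initial_means, 5.3)
after = nearest_label(refined_means, 5.3)
```

Here the borderline reading 5.3 is classified as "abnormal" by the initial model and as "normal" after retraining, because the unlabeled data shifted the class means. Practical self-training usually keeps only high-confidence pseudo-labels, since wrong ones can reinforce the model's own mistakes.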

Reinforcement Learning

The system learns through trial and error, receiving rewards for beneficial actions and penalties for poor ones.

This approach trained the AI systems that beat world champions at Go and top professional players at StarCraft II. The system plays millions of games, learning strategies that maximize winning. It doesn’t need labeled examples of “good moves.” Instead, it explores different strategies and learns from which ones lead to victory.

Reinforcement learning handles sequential decision problems where actions have long-term consequences, making it valuable for robotics, game AI, and optimization problems.
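As a rough illustration of learning from delayed rewards, the sketch below runs tabular Q-learning on a made-up five-state corridor where only the last state pays a reward. The environment, the constants, and the purely random exploration policy are all assumptions chosen for brevity. Systems like the game-playing agents mentioned above are far more elaborate.

```python
import random

N_STATES = 5               # states 0..4 in a row; state 4 is the goal
ACTIONS = (-1, 1)          # move left or move right
ALPHA, GAMMA = 0.5, 0.9    # learning rate and discount factor (arbitrary choices)

def step(state, action):
    """Move within the corridor; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def train(episodes=200, seed=0):
    rng = random.Random(seed)
    # q[state][action_index] estimates the long-term reward of that choice.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            a = rng.randrange(2)   # explore by acting uniformly at random
            next_state, reward = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted best future value.
            target = reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q

q_table = train()
# Greedy policy: in each non-goal state, pick the action with the higher estimate.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
```

After training, the greedy policy moves right in every state, even though only the final step ever produced a reward. The discount factor is what propagates that reward back to earlier decisions.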

Essential Machine Learning Terminology

Understanding these terms helps you navigate ML conversations and literature:

Model: The mathematical construct that makes predictions. After training on data, this is what you deploy to production.

Training: The process of optimizing model parameters using data. During training, the system adjusts internal values to minimize prediction errors.

Parameters: Internal variables the model adjusts during training. In a neural network, these are the weights and biases determining how inputs transform into outputs.

Hyperparameters: Configuration choices you make before training starts, like learning rate or network architecture. Unlike parameters, these aren’t learned from data.

Validation Data: Data held aside during training to tune model choices and detect overfitting. Cross-validation takes this further by splitting training data into multiple folds. You train and validate on different combinations, which gives you more reliable performance estimates than trusting a single split. It is standard practice for serious work.

Test Data: A final holdout set used to evaluate model performance on truly unseen examples.

Overfitting: When a model memorizes training data rather than learning generalizable patterns. It performs brilliantly on training data but fails on new examples.

Generalization: The ability to perform well on new, unseen data. This is what you actually care about in production systems.

Bias-Variance Tradeoff: Every model faces this tension. Simple models miss important patterns (high bias) but make consistent predictions (low variance). Complex models capture intricate patterns (low bias) but memorize noise (high variance). You’re looking for the sweet spot where total error is lowest, not trying to eliminate both.
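The cross-validation idea described under Validation Data can be sketched as a function that produces the index splits. `k_fold_splits` is a hypothetical helper written for this illustration. Libraries such as scikit-learn ship their own, more complete versions.

```python
def k_fold_splits(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder across the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

splits = list(k_fold_splits(10, 3))
```

For 10 samples and 3 folds, the validation sets are indices 0 to 3, 4 to 6, and 7 to 9, and every sample is validated exactly once. In practice you would usually shuffle the data before splitting, unless order matters, as it does with time series.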

The Machine Learning Project Lifecycle

Building a useful ML system involves far more than training a model. The complete workflow includes:

1. Problem Definition

Start by clearly defining the business problem. “Predict customer churn” is vague. “Predict which customers will cancel in the next 30 days with sufficient accuracy to make targeted retention offers profitable” provides clear success criteria.

This step determines whether ML is even appropriate for your problem. Not every prediction problem needs machine learning.

2. Data Collection and Preparation

Data preparation typically represents the majority of project effort. Real-world data is messy, incomplete, and inconsistent. You’ll spend significant effort cleaning errors, handling missing values, and transforming data into formats ML algorithms can process.

Poor data quality guarantees poor model performance. No amount of algorithmic sophistication compensates for fundamentally flawed data.
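As one small example of the cleaning work involved, the sketch below fills missing values in a column with the mean of the observed values. The rows and the `impute_missing` helper are hypothetical, and mean imputation is only one option. Whether it is appropriate depends on why the values are missing.

```python
from statistics import mean

def impute_missing(rows, column):
    """Return copies of `rows` with None in `column` replaced by the observed mean."""
    observed = [row[column] for row in rows if row[column] is not None]
    fill = mean(observed)
    return [
        {**row, column: fill if row[column] is None else row[column]}
        for row in rows
    ]

# Hypothetical listings where one square-footage value was never recorded.
raw_rows = [{"sqft": 1000}, {"sqft": None}, {"sqft": 2000}]
clean_rows = impute_missing(raw_rows, "sqft")
```

The missing entry becomes 1500, the mean of the two recorded values, and the original rows are left unmodified.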

3. Exploratory Data Analysis

Before building models, understand your data. What patterns exist? Are there obvious correlations? Do certain features predict the outcome strongly? Are there outliers or errors that need addressing?

This analysis guides feature engineering and model selection decisions.

4. Model Training and Evaluation

Select appropriate algorithms, train models on your prepared data, and rigorously evaluate performance using held-out test data. Compare multiple approaches to find what works best for your specific problem.

Evaluation metrics matter immensely. Accuracy isn’t always the right measure. Consider fraud detection where 99% of transactions are legitimate. A model that always predicts “not fraud” achieves 99% accuracy while providing zero value.

You need metrics matching your business reality. Precision tells you how many flagged transactions were actually fraud. Recall tells you how many fraudulent transactions you caught. F1-score balances both. AUC-ROC measures separation quality across all thresholds. Choose what aligns with real costs in your domain.
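The fraud example can be worked through in a few lines. The sketch below assumes 100 hypothetical transactions with exactly one fraud, and compares a model that never flags anything with one that catches the fraud at the cost of three false alarms. `classification_metrics` is an illustrative helper, not a library function.

```python
def classification_metrics(actual, predicted):
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = fraud)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)   # fraud correctly flagged
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)   # legitimate but flagged
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)   # fraud that slipped through
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)   # legitimate and not flagged
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

actual = [1] + [0] * 99   # one fraudulent transaction out of 100
always_legit = classification_metrics(actual, [0] * 100)
flagging = classification_metrics(actual, [1, 1, 1, 1] + [0] * 96)
```

The always-"not fraud" model scores 0.99 accuracy but 0.0 recall. The flagging model drops to 0.97 accuracy yet reaches 1.0 recall, 0.25 precision, and an F1 of 0.4. Which trade-off is better depends on what a missed fraud costs relative to a false alarm.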

5. Deployment and Monitoring

Getting a trained model into production systems where it makes real predictions requires engineering work: APIs for serving predictions, infrastructure for handling request load, monitoring for performance degradation.

Models don’t remain accurate forever. Data distributions shift. Customer behavior changes. Competitors adapt. Continuous monitoring detects when retraining is needed.
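A very simple monitoring check compares a feature's live average against its training baseline. The sketch below is an assumption-laden heuristic, not a standard: the function name, the threshold of 3 standard errors, and the sample values are all made up, and production systems typically track many features with more robust statistics.

```python
from statistics import mean, stdev

def mean_shift_alert(training_values, live_values, threshold=3.0):
    """Flag drift when the live mean is more than `threshold` standard errors from the training mean."""
    baseline_mean = mean(training_values)
    # Standard error of a sample mean of this size, using the training spread.
    standard_error = stdev(training_values) / len(live_values) ** 0.5
    z_score = abs(mean(live_values) - baseline_mean) / standard_error
    return z_score > threshold

# Hypothetical average order values seen during training and in two live windows.
training = [48, 52, 50, 49, 51, 50, 47, 53]
stable_window = [49, 51, 50, 50]
shifted_window = [60, 62, 58, 61]

stable_alert = mean_shift_alert(training, stable_window)
shifted_alert = mean_shift_alert(training, shifted_window)
```

The stable window raises no alert, while the shifted window does. An alert like this is a prompt to investigate and possibly retrain, not proof that the model has stopped working.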

Real-World Applications Transforming Industries

ML isn’t theoretical. It drives critical systems across healthcare, finance, transportation, and commerce:

Healthcare: ML analyzes medical images to detect cancers, predicts patient deterioration in ICUs, accelerates drug discovery by identifying promising compounds, and personalizes treatment recommendations based on genetic profiles.

Finance: Algorithmic trading systems execute millions of trades based on ML predictions. Fraud detection prevents billions in losses. Credit scoring evaluates loan applications more accurately than traditional rules-based systems.

Transportation: Self-driving vehicles navigate using computer vision and reinforcement learning. Logistics companies optimize delivery routes. Airlines predict maintenance needs before failures occur.

Digital Services: Recommendation engines drive engagement for Netflix, Spotify, and Amazon. Voice assistants understand natural language. Translation services break language barriers in real-time.

These applications share a common thread: problems too complex for explicit programming where large datasets and clear success metrics make ML the right tool.

Common Misconceptions About Machine Learning

Myth: ML is magic that solves any problem.

Reality: ML is a tool requiring careful problem formulation, quality data, appropriate algorithm selection, and continuous maintenance.

Myth: More data always improves performance.

Reality: More quality data helps. More poor-quality data makes things worse. A small, carefully curated dataset often outperforms a massive messy one.

Myth: Complex models always outperform simple ones.

Reality: Simpler models often generalize better and are easier to debug, maintain, and explain. Start simple.

Myth: Once deployed, ML models run forever without maintenance.

Reality: Model performance degrades over time as data distributions shift. Production ML requires ongoing monitoring and retraining.

The Mathematics Foundation

Machine learning rests on statistical and mathematical foundations you can’t avoid:

Linear Algebra: Data representations, neural network operations, and dimensionality reduction all rely heavily on matrix operations and vector spaces.

Calculus: Optimization algorithms use derivatives to find parameter values minimizing prediction errors. Understanding gradient descent requires calculus fundamentals.

Probability and Statistics: ML is fundamentally about learning probability distributions from data. Bayesian methods, confidence intervals, and hypothesis testing all draw from statistical theory.

Optimization Theory: Training algorithms search parameter spaces for optimal values. Understanding convergence, local minima, and regularization requires optimization concepts.
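To show where the calculus comes in, here is a minimal gradient descent sketch that fits a line by repeatedly stepping against the derivative of the mean squared error. The data, learning rate, and step count are arbitrary choices for illustration. Real training loops use libraries that compute gradients automatically.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by minimizing mean squared error with gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Step downhill: move each parameter against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1, so the true parameters are known.
w, b = gradient_descent([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
```

The loop recovers values very close to w = 2 and b = 1. A learning rate that is too large makes the updates diverge, and one that is too small makes them crawl, which is why it is treated as a hyperparameter.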

You don’t need a PhD in mathematics to start learning ML. But sustained progress requires building these foundations gradually.

Getting Started: Your Learning Path

For beginners, focus on understanding concepts before diving into implementation details:

- Learn Python programming basics. Python dominates ML development due to its simplicity and extensive library ecosystem.

- Build mathematical foundations gradually. Work through practical problems that reinforce linear algebra, calculus, and probability concepts.

- Study classical algorithms before deep learning. Understand decision trees, linear regression, and k-means clustering. These foundational algorithms teach core concepts with less complexity than neural networks.

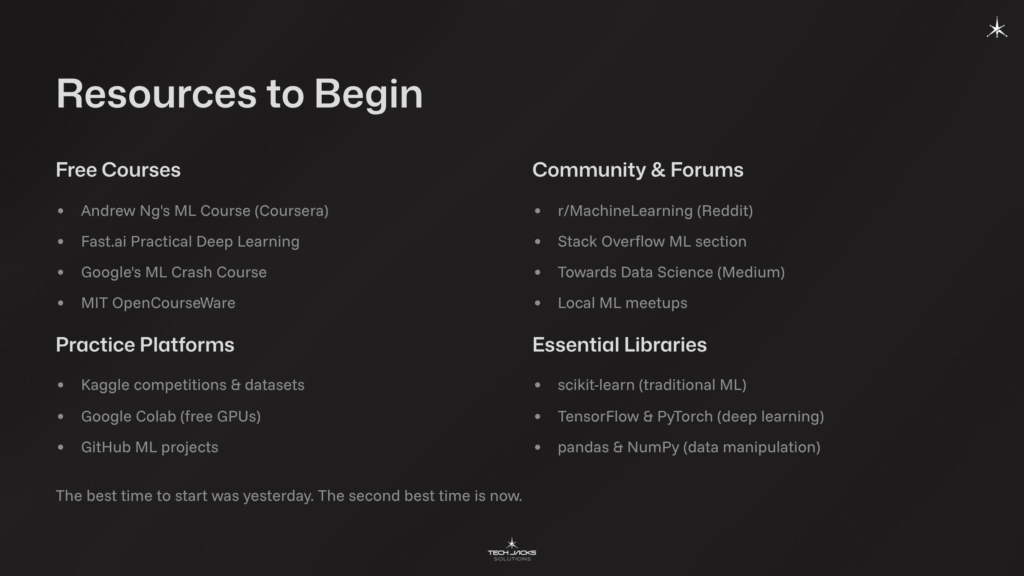

- Complete hands-on projects using real datasets. Kaggle competitions and public datasets let you practice the complete workflow from problem definition through model deployment.

- Engage with the community. Follow ML blogs, attend conferences, participate in online forums. The field evolves rapidly, and community engagement keeps you current.


Free Courses to Start Your Journey

Several world-class ML courses are available at no cost:

Machine Learning Specialization by Andrew Ng (Coursera/DeepLearning.AI): This three-course series provides one of the strongest theoretical foundations available online. Andrew Ng, co-founder of Coursera and former head of Google Brain, teaches supervised learning, unsupervised learning, and best practices. The course uses Python, NumPy, and scikit-learn. Over 4.8 million learners have taken previous versions since the original course launched in 2012. You can audit all course content free; certificates require payment.

Practical Deep Learning for Coders (fast.ai): Jeremy Howard and Rachel Thomas created this free course using a unique “top-down” approach. You build working models from day one, then progressively understand the theory. The course uses PyTorch and the fastai library. Perfect for coders who learn best by doing.

Machine Learning Crash Course (Google): Google’s internal ML training, released publicly for free. Covers fundamental concepts with interactive exercises using TensorFlow. Includes 25 lessons, 40 exercises, and real datasets from Google’s work.

Introduction to Machine Learning (Kaggle Learn): Short, focused micro-courses teaching practical ML skills. Each course takes 1-4 hours and includes hands-on coding in Kaggle’s integrated environment. No setup required.

3Blue1Brown’s Neural Networks Series (YouTube): Grant Sanderson’s visual explanations make complex mathematical concepts intuitive. While not a full course, these videos provide the best available explanations of how neural networks actually learn.

Frequently Asked Questions

Q: How long does it take to learn machine learning?

Building foundational skills in programming, mathematics, and core algorithms requires consistent study over an extended period. The timeline varies significantly based on prior background. Those with programming experience may progress faster than complete beginners. Reaching professional competence typically requires 1-2 years of practical project experience. Mastery is a lifelong pursuit as the field continuously evolves.

Q: Do I need a PhD to work in machine learning?

No. While research roles typically require advanced degrees, most applied ML engineering positions value practical skills and project experience over academic credentials. Many successful ML engineers have bachelor’s degrees or are self-taught.

Q: What programming languages should I learn?

Python is essential. The overwhelming majority of ML development uses Python due to libraries like NumPy, Pandas, scikit-learn, TensorFlow, and PyTorch. R has niche applications in statistics and academia. For production systems, knowledge of Java, C++, or Scala can be valuable for performance-critical components.

Q: Should I learn ML or deep learning first?

Start with traditional ML. Understanding decision trees, linear models, and ensemble methods teaches fundamental concepts applicable across the field. Deep learning is a subset of ML with additional complexity. Build foundations first.

Q: What’s the difference between AI, machine learning, and deep learning?

Artificial Intelligence is the broadest concept: systems exhibiting intelligent behavior. Machine Learning is a subset of AI focused on learning from data. Deep Learning is a subset of ML using neural networks with multiple layers. The terms often blur in casual conversation, but this hierarchy represents the technical relationship.

Q: Can I learn machine learning for free?

Absolutely. Coursera, edX, fast.ai, and university open courseware provide excellent free resources. Kaggle offers free datasets and competitions. The ML community shares knowledge generously through blogs, YouTube channels, and open-source projects.

Q: What industries hire machine learning engineers?

Virtually every industry now employs ML talent. Technology companies are obvious employers, but healthcare, finance, retail, manufacturing, agriculture, energy, and entertainment all leverage ML extensively. The skills transfer across domains.

Q: Is machine learning going to replace programmers?

ML augments rather than replaces human expertise. While ML automates certain coding tasks (like code completion tools), it creates new roles requiring hybrid skills: understanding both domain problems and ML capabilities. The nature of software engineering is evolving, not disappearing.

Next Steps in Your ML Journey

Start with a clear goal. Career change? Specific application? Research contribution? Your goal determines your path.

Practice consistently. Thirty minutes daily beats weekend marathons. ML concepts build progressively.

Build in public. Share projects on GitHub. Write about what you’re learning. Contribute to open-source projects. Public work creates accountability and connects you with the community.

Machine learning is changing how we solve complex problems. Understanding it means understanding the systems running the world.

This article provides foundational knowledge for understanding machine learning concepts, applications, and learning paths. All claims are sourced from authoritative references in machine learning history, education, and industry practice.


References and Further Reading

Historical Sources

- History of Machine Learning – Clickworker

- Timeline of Machine Learning – Wikipedia

- History of AI Timeline – Coursera

Educational Resources

- Machine Learning Specialization – Coursera

- Practical Deep Learning – fast.ai

- ML Crash Course – Google

- Statistical Machine Learning – IBM