Author: Derrick D. Jackson

Title: Founder & Senior Director of Cloud Security Architecture & Risk

Credentials: CISSP, CRISC, CCSP

Last updated: 10/07/2025

5 W’s of AI Governance | What is AI Governance?

AI governance isn’t optional. It’s the foundation that separates organizations using AI successfully from those creating expensive disasters.

Companies deploying AI without proper governance face a brutal reality. When algorithms fail, they don’t just crash. They discriminate, leak data, or make life-changing decisions incorrectly. These failures happen regularly.

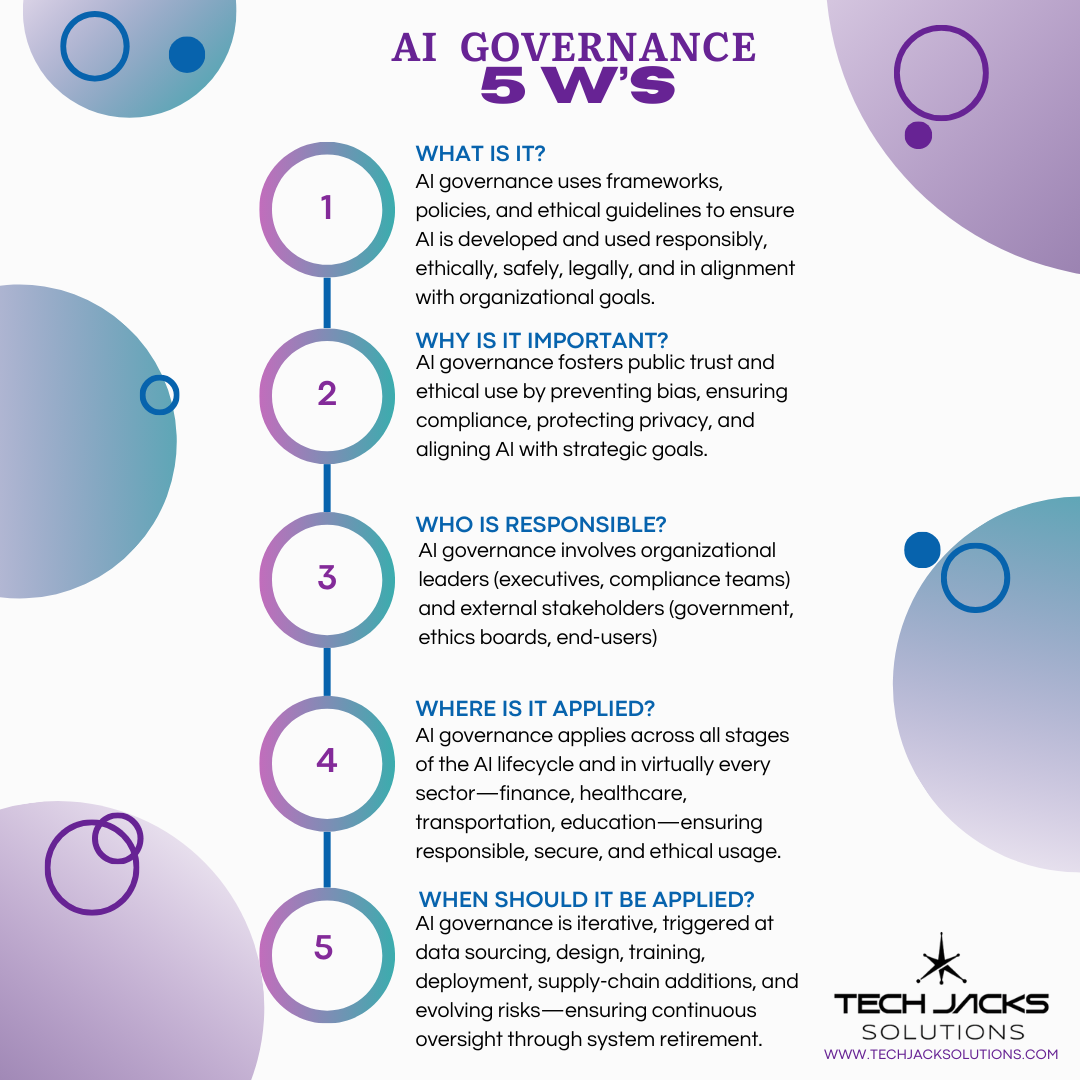

What Is AI Governance?

AI governance is your organization’s system for keeping artificial intelligence safe, legal, ethical, and strategically aligned. It’s the management layer ensuring your AI does what it should (and doesn’t do what it shouldn’t).

The U.S. National Institute of Standards and Technology (NIST) calls governance the “GOVERN” function in its AI Risk Management Framework. GOVERN is the cross-cutting function that informs the framework’s other three (MAP, MEASURE, and MANAGE), acting as the oversight mechanism for every AI decision your organization makes. ISO/IEC 42001 treats governance as a certifiable management system, similar to how ISO/IEC 27001 handles information security.

Organizations with mature governance can implement dozens or even hundreds of specific controls across the areas outlined below. Why? Fixing problems after deployment costs significantly more than preventing them through proactive governance.

What Does AI Governance Actually Cover?

Different frameworks organize governance differently. Here’s how two widely cited U.S. frameworks break it down:

| Traditional Approach (GAO) | What It Means | NIST AI RMF Approach | What It Means |
|---|---|---|---|
| Strategic Direction | Does this AI project serve business goals? | Policies & Processes | Are rules in place for managing AI risks? |
| Structure & Accountability | Who decides and who’s responsible? | Accountability Structures | Are teams trained and empowered to manage risk? |
| Values & Ethics | What principles guide AI decisions? | Workforce Diversity | Do different perspectives inform decisions? |
| Workforce Development | Do people have the right skills? | Risk Culture | Does the organization communicate about AI risks? |
| Stakeholder Engagement | Are affected communities involved? | Stakeholder Engagement | Is external feedback collected and used? |
| Risk Management | How are AI risks identified and handled? | Third-Party Management | Are vendor and supply chain risks addressed? |
| Technical Specifications | Does the system meet requirements? | | |
| Compliance | Does it follow laws and regulations? | | |
| Transparency | Can people understand how it works? | | |

Key Differences

GAO Framework: Built for government accountability and audits. Separates organizational governance (strategy, people, values) from system governance (technical specs, compliance, transparency).

NIST Framework: Built for continuous risk management. Treats governance as a cross-cutting function that runs through every phase of AI development and deployment.

Both frameworks address risk management, accountability, stakeholder involvement, and compliance. They organize these concepts differently but cover similar ground.

Which One Should You Use?

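Neither framework is mandatory for most organizations. NIST’s AI RMF is explicitly voluntary, and GAO designed its framework primarily for federal agencies and the auditors who review them. Many companies reference both.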

GAO’s structure works well for board reporting and annual governance reviews. NIST’s categories work well for day-to-day risk management during system development.

You don’t need to choose one exclusively. Understanding both helps you build governance that’s defensible to regulators and practical for your teams.

Sources: GAO-21-519SP, Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities; NIST AI 100-1, Artificial Intelligence Risk Management Framework (AI RMF 1.0)

NIST VS ISO VS EU by Lisa Yu

Why Is AI Governance Important?

Ungoverned AI creates five categories of damage:

Regulatory penalties. Under the EU AI Act, fines for the most serious violations (engaging in prohibited AI practices) reach up to €35 million or 7% of worldwide annual turnover, whichever is higher. The Act prohibits certain uses entirely (social scoring, subliminal manipulation) and heavily regulates high-risk applications affecting health, safety, employment, or fundamental rights.
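The fine ceiling is simple arithmetic: the fixed amount and the turnover percentage are compared, and the larger one applies. The Python sketch below assumes the prohibited-practices tier only; the function name is hypothetical, and the lower caps for other violations and the separate treatment of SMEs are not modeled.

```python
def max_prohibited_practice_fine(worldwide_turnover_eur: float) -> float:
    """Upper bound on an EU AI Act fine for prohibited AI practices.

    Assumes the general rule for undertakings: the higher of a fixed
    EUR 35 million or 7% of total worldwide annual turnover.
    """
    fixed_cap_eur = 35_000_000
    turnover_cap_eur = 0.07 * worldwide_turnover_eur
    return max(fixed_cap_eur, turnover_cap_eur)


# EUR 2 billion turnover: 7% (EUR 140 million) exceeds the fixed cap.
print(f"{max_prohibited_practice_fine(2_000_000_000):,.0f}")  # 140,000,000
# EUR 100 million turnover: 7% is only EUR 7 million, so the EUR 35 million cap applies.
print(f"{max_prohibited_practice_fine(100_000_000):,.0f}")  # 35,000,000
```

For large companies the percentage dominates, which is why the turnover-based cap is the figure boards tend to focus on.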

Algorithmic bias at scale. Amazon scrapped an experimental AI recruiting tool, as Reuters reported in 2018, after discovering it penalized resumes containing the word “women’s” and downgraded graduates of two all-women’s colleges. The system taught itself that male candidates were preferable because it learned from a decade of resumes submitted mostly by men. A widely used healthcare algorithm studied by UC Berkeley and University of Chicago researchers systematically recommended fewer Black patients for additional care than equally sick white patients; algorithms of this type are applied to roughly 200 million people in the U.S. each year. The algorithm used healthcare spending as a proxy for health needs, but systemic inequalities meant less was spent caring for Black patients.

Security vulnerabilities. NIST’s Adversarial Machine Learning taxonomy (NIST AI 100-2) documents how unprotected models face evasion, data poisoning, model extraction, and prompt injection attacks. Researchers have demonstrated these attacks against deployed systems that lack proper security controls.

Institutional failures. The Dutch childcare benefits scandal saw over 20,000 families falsely accused of fraud between 2013 and 2019. The algorithmic risk scoring system explicitly flagged dual nationality as a fraud indicator. Families were ordered to repay tens of thousands of euros. The Dutch parliament called it “unprecedented injustice.” The entire government resigned in January 2021, and officials acknowledged institutional racism in May 2022.

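Data leakage risks. Companies face exposure when employees share proprietary information with public AI systems. Organizations often impose immediate access restrictions after discovering sensitive data entered into commercial AI tools. In 2023, for example, Samsung restricted employee use of generative AI tools after staff uploaded sensitive internal source code to ChatGPT.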
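Alongside access restrictions, one complementary technical control is to screen prompts before they leave the organization. The sketch below is a minimal, illustrative redaction filter; the rule names, the `PROJ-1234` project-code format, and the API key format are all hypothetical, and real data loss prevention tooling covers far more cases.

```python
import re

# Illustrative patterns only; real DLP rules are broader and tuned per organization.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "API_KEY": re.compile(r"\b(?:sk|key)-[A-Za-z0-9]{16,}\b"),  # hypothetical key format
    "PROJECT_CODE": re.compile(r"\bPROJ-\d{4}\b"),              # hypothetical internal codes
}


def redact_prompt(text: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders and report which rules fired."""
    fired = []
    for label, pattern in REDACTION_PATTERNS.items():
        text, count = pattern.subn(f"[{label} REDACTED]", text)
        if count:
            fired.append(label)
    return text, fired


prompt = "Email jane.doe@example.com about PROJ-1234, key sk-abcdef1234567890XYZ"
clean, rules = redact_prompt(prompt)
print(clean)  # Email [EMAIL REDACTED] about [PROJECT_CODE REDACTED], key [API_KEY REDACTED]
print(rules)  # ['EMAIL', 'API_KEY', 'PROJECT_CODE']
```

Logging which rules fired (rather than the redacted values) gives governance teams a signal about what kinds of data employees are trying to share.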

Mature governance enables teams to deploy AI systems faster and more safely. Clear frameworks reduce deployment uncertainty by establishing pre-approved patterns, standardized review processes, and defined decision authorities. Organizations with documented governance can demonstrate their practices to regulators, customers, and partners, building trust through transparency rather than opacity.

Who Is Responsible for AI Governance?

Governance requires a coalition, not a czar.

The typical AI Governance Committee includes:

- Chief Data/AI Officer (strategy and standards)

- Chief Information Security Officer (security controls)

- General Counsel (legal compliance)

- Chief Risk Officer (risk assessment)

- Domain executives (business alignment)

The Cloud Security Alliance’s AI Organizational Responsibilities framework provides detailed RACI charts showing how different teams collaborate on data security, model monitoring, and fairness. Examples from their framework:

- Data scientists: responsible for bias testing

- MLOps engineers: accountable for model monitoring

- Legal team: consulted on regulatory requirements

- Business owners: informed of deployment decisions
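These assignments can be kept as data and checked automatically. The sketch below encodes the four examples above, one activity each, and applies a common RACI convention: exactly one Accountable party per activity. The convention check and all key names are assumptions for illustration, not part of the CSA framework.

```python
from enum import Enum


class Role(Enum):
    RESPONSIBLE = "R"  # does the work
    ACCOUNTABLE = "A"  # owns the outcome
    CONSULTED = "C"    # provides input
    INFORMED = "I"     # kept updated


# The four example assignments above, one activity each (keys are illustrative).
raci = {
    "bias testing": {"data_scientists": Role.RESPONSIBLE},
    "model monitoring": {"mlops_engineers": Role.ACCOUNTABLE},
    "regulatory requirements": {"legal_team": Role.CONSULTED},
    "deployment decisions": {"business_owners": Role.INFORMED},
}


def missing_accountable_owner(matrix: dict[str, dict[str, Role]]) -> list[str]:
    """List activities that do not have exactly one Accountable party."""
    return [
        activity
        for activity, assignments in matrix.items()
        if list(assignments.values()).count(Role.ACCOUNTABLE) != 1
    ]


print(missing_accountable_owner(raci))
# ['bias testing', 'regulatory requirements', 'deployment decisions']
```

Encoding only these four examples shows why complete charts matter: three of the four activities still lack a single accountable owner.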

Major financial institutions structure governance through dedicated AI divisions with specialists across trading, risk, compliance, and technology. Their governance boards bring together diverse perspectives to address AI challenges.

External stakeholders matter too. Auditors verify controls. Regulators enforce standards. Affected users deserve transparency about AI’s impact on them.

Five interconnected principles form the foundation of effective AI governance. Organizations implementing these principles can deploy AI faster and more safely than those treating governance as compliance paperwork.

Transparency makes everything else possible. You can’t govern what you can’t see. Transparency means documenting what data trains your models, how algorithms make decisions, and what assumptions are baked into the system. NIST’s AI Risk Management Framework identifies transparency as essential to trustworthy AI. This doesn’t mean publishing proprietary algorithms. It means stakeholders understand how the system works at an appropriate level. Technical teams need architectural documentation. Business owners need decision logic. Users need explanations for outcomes affecting them.

Accountability requires clear ownership. Every AI system needs someone accountable for its behavior. Not just during development. In production. When something goes wrong at 2 AM. The Cloud Security Alliance’s AI governance frameworks provide RACI matrices showing who’s responsible (does the work), accountable (owns the outcome), consulted (provides input), and informed (kept updated). Data scientists don’t own production systems. MLOps engineers don’t make ethical decisions. Legal doesn’t deploy models. Governance clarifies these boundaries.

Fairness testing catches bias before deployment. Testing for fairness isn’t optional. Organizations use tools like IBM’s AI Fairness 360 or Microsoft’s Fairlearn to probe models for demographic disparities before launch. Testing compares outcomes across protected groups and the proxies that stand in for them. Does your loan approval algorithm treat applicants differently based on zip code, a common proxy for race? Does your resume screening favor certain universities? These patterns hide in training data. Testing surfaces them.
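The core comparison these tools automate is straightforward. Below is a minimal pure-Python sketch, assuming binary predictions (1 = approved) and a single sensitive attribute. Fairlearn offers a comparable `demographic_parity_difference` metric in `fairlearn.metrics`, but the functions here are hypothetical stand-ins written for illustration. The 0.8 threshold echoes the U.S. “four-fifths” rule of thumb for adverse impact, a screening heuristic rather than a legal safe harbor.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive predictions (1 = approved) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def fairness_report(predictions, groups, min_impact_ratio=0.8):
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    impact_ratio = lowest / highest if highest else 1.0
    return {
        "selection_rates": rates,
        # Demographic parity difference: gap between the highest and lowest selection rate.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: lowest rate divided by highest rate.
        "impact_ratio": impact_ratio,
        "flag_for_review": impact_ratio < min_impact_ratio,
    }


# Toy loan-approval predictions for two hypothetical applicant groups.
preds = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
report = fairness_report(preds, groups)
print(report["selection_rates"])  # {'A': 0.8, 'B': 0.4}
print(report["impact_ratio"], report["flag_for_review"])  # 0.5 True
```

Running the same report with zip-code-derived groups instead of protected attributes is one way to check for proxy discrimination.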

Safety controls prevent harm. AI safety means asking “what could go wrong?” before it does. This includes technical safety (model robustness, adversarial testing) and operational safety (human oversight, rollback capabilities, monitoring alerts). Organizations deploy gradual rollouts, starting with small user percentages. Automatic rollback triggers when metrics decline. Human review remains available for high-stakes decisions.
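A gradual rollout with automatic rollback can be reduced to a small decision function. This sketch assumes a hypothetical stage schedule (1%, 5%, 25%, 50%, 100% of traffic) and illustrative thresholds for accuracy drop and error rate; real systems would choose their own metrics and wire the decision into their deployment tooling.

```python
from dataclasses import dataclass

# Hypothetical rollout schedule: share of traffic (%) served by the new model at each stage.
ROLLOUT_STAGES = [1, 5, 25, 50, 100]


@dataclass
class Metrics:
    accuracy: float    # e.g., agreement with labeled outcomes during the stage
    error_rate: float  # e.g., fraction of failed or flagged responses


def next_action(stage_index: int, baseline: Metrics, candidate: Metrics,
                max_accuracy_drop: float = 0.02, max_error_rate: float = 0.05) -> str:
    """Promote the candidate to the next stage, finish, or roll back if metrics decline."""
    if (baseline.accuracy - candidate.accuracy > max_accuracy_drop
            or candidate.error_rate > max_error_rate):
        return "rollback"  # route all traffic back to the baseline model
    if stage_index + 1 < len(ROLLOUT_STAGES):
        return f"promote to {ROLLOUT_STAGES[stage_index + 1]}%"
    return "complete"


baseline = Metrics(accuracy=0.91, error_rate=0.02)
print(next_action(0, baseline, Metrics(accuracy=0.90, error_rate=0.02)))  # promote to 5%
print(next_action(1, baseline, Metrics(accuracy=0.86, error_rate=0.02)))  # rollback
print(next_action(4, baseline, Metrics(accuracy=0.92, error_rate=0.01)))  # complete
```

Keeping the thresholds as explicit parameters makes them reviewable governance decisions rather than values buried in pipeline code.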

Human oversight maintains ultimate authority. Algorithms recommend. Humans decide. This principle applies most strictly to high-stakes domains like healthcare, employment, criminal justice, and lending. ISO/IEC 42001 specifies that organizations define when human review is mandatory and what authority humans retain to override algorithmic outputs.
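The “algorithms recommend, humans decide” rule can be enforced in code at the point where a model’s output becomes an action. A minimal routing sketch, assuming a hypothetical `ModelOutput` record, the high-stakes domains named above as a policy set, and an illustrative 0.95 confidence threshold for automation elsewhere.

```python
from dataclasses import dataclass

# High-stakes domains named in the text, kept as an editable policy set.
HIGH_STAKES_DOMAINS = {"healthcare", "employment", "criminal_justice", "lending"}


@dataclass
class ModelOutput:
    domain: str
    recommendation: str
    confidence: float  # model-reported score in [0, 1]


def route(output: ModelOutput, min_auto_confidence: float = 0.95) -> str:
    """Decide whether a recommendation may be applied automatically or needs a human decision."""
    if output.domain in HIGH_STAKES_DOMAINS:
        return "human_review"  # algorithm recommends, a human decides
    if output.confidence < min_auto_confidence:
        return "human_review"  # low confidence escalates even in lower-stakes domains
    return "automated"


print(route(ModelOutput("lending", "approve", 0.99)))       # human_review
print(route(ModelOutput("marketing", "send_offer", 0.97)))  # automated
print(route(ModelOutput("marketing", "send_offer", 0.60)))  # human_review
```

Note that high-stakes cases go to a human regardless of model confidence; confidence only governs automation in lower-stakes domains.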

These principles interconnect. Transparency enables accountability. Accountability drives fairness testing. Fairness testing reveals safety gaps. Safety controls require human oversight. Organizations implementing all five create governance that accelerates deployment rather than blocking it.

Where Is AI Governance Applied?

Governance follows AI everywhere. No exceptions.

Healthcare. Clinical decision support and diagnostic AI require governance for validation, performance monitoring, and clinician training. The FDA regulates many AI-based medical devices as Software as a Medical Device (SaMD).

Finance. AI processing loan applications must comply with fair lending laws, monitor for discriminatory patterns, and maintain audit trails for regulators.

Critical infrastructure. Smart grids and autonomous transport systems require governance focused on reliability and safety to prevent service disruptions.

Employment and education. Hiring algorithms and grading systems need oversight to ensure fairness and transparency.

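The EU AI Act classifies many AI uses in these domains as “high-risk,” requiring, among other things:

- Automatic logging of events for traceability

- Human oversight capabilities

- Data governance, including examination of training data for possible biases

- Transparency and instructions for use for deployers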
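The logging requirement in the list above is easier to meet when every decision is written as a structured, append-only record. A minimal sketch using JSON Lines; the field names and the `log_decision` helper are hypothetical, not a schema defined by the EU AI Act.

```python
import io
import json
import uuid
from datetime import datetime, timezone


def log_decision(log_file, model_id, model_version, inputs, output, reviewer=None):
    """Append one AI decision to a JSON Lines log so it can be audited later."""
    record = {
        "decision_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "model_version": model_version,
        "inputs": inputs,            # minimize or pseudonymize personal data before logging
        "output": output,
        "human_reviewer": reviewer,  # None when no human reviewed the decision
    }
    log_file.write(json.dumps(record) + "\n")
    return record


# In production this would be an append-only file or log store; StringIO keeps the demo self-contained.
log = io.StringIO()
log_decision(log, "credit-scorer", "2.3.1",
             {"applicant_ref": "a-102", "income_band": "C"},
             {"decision": "refer"}, reviewer="analyst-17")
entry = json.loads(log.getvalue().splitlines()[0])
print(entry["model_version"], entry["output"], entry["human_reviewer"])
```

Recording the model version and the human reviewer alongside each output lets auditors reconstruct which system, and which person, made a given decision.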

ISO 42001 and NIST AI RMF provide comparable, voluntary safeguards that global organizations can apply across jurisdictions.

Cloud deployments need special attention. Major cloud providers offer built-in governance features for drift detection and explainability, such as Amazon SageMaker Model Monitor and SageMaker Clarify. Organizations still need policies for deployment authorization, testing protocols, and retirement procedures.

What Mature Governance Enables

Organizations treating governance as infrastructure rather than compliance unlock advantages competitors miss.

Speed improves. Teams deploy AI faster when pre-approved patterns exist. Engineers don’t reinvent ethics for every project. Legal doesn’t review every decision from scratch. Clear guardrails enable safe experimentation.

Trust compounds. Customers choose vendors who document their AI practices. Audits go more smoothly when controls are documented and proven. Partners integrate more readily with systems they understand.

Talent stays. Ethical engineers choose employers building responsible systems. Data scientists want their work deployed, not shelved over governance concerns. Operations teams value systems designed for monitoring.

Scale becomes sustainable. Systems built with governance from the start handle growth better than those retrofitted later. The alternative teaches through failure. But AI failures don’t just crash. They discriminate, leak data, or make life-altering mistakes at scale.

Governance prevents your AI from becoming a cautionary tale.

When Should AI Governance Be Applied?

Always. Governance runs continuously through five lifecycle stages:

Plan & Design. Before writing code, governance committees review project proposals against responsible AI principles. Projects failing ethical review don’t proceed.

Build & Validate. Organizations document each model’s intended use, limitations, and test results. Testing tools help teams probe models for bias before deployment.
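The documentation described above (intended use, limitations, test results) can live alongside the model as a structured record, often called a model card, and a review gate can block releases when required fields are empty. A minimal sketch; the `ModelCard` fields and the completeness rule are assumptions, not a standard schema.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal model documentation record (fields are illustrative, not a standard schema)."""
    name: str
    version: str
    owner: str                 # accountable person or team
    intended_use: str
    out_of_scope_uses: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)
    training_data: str = ""    # description of data sources and collection period
    test_results: dict[str, float] = field(default_factory=dict)

    def missing_fields(self) -> list[str]:
        """Names of fields left empty, so a review gate can block incomplete cards."""
        return [key for key, value in asdict(self).items() if value in ("", [], {})]


card = ModelCard(
    name="resume-screener",
    version="1.4.0",
    owner="talent-analytics",
    intended_use="Rank applications for recruiter review; never auto-reject.",
    limitations=["Not validated on non-English resumes"],
    test_results={"impact_ratio_gender": 0.91},
)
print(card.missing_fields())  # ['out_of_scope_uses', 'training_data']
```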

Deploy & Operate. Companies use gradual rollouts for algorithm changes, starting with small user percentages. Automatic rollback capabilities activate if metrics decline. Human oversight remains available.

Monitor & Improve. Fairness toolkits run regular bias checks on production algorithms. When drift is detected, models retrain automatically or alert operators.
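Drift checks usually compare the distribution a model sees in production with the one it was trained on. One common measure is the Population Stability Index (PSI). The sketch below assumes pre-binned proportions, hypothetical income-band data, and the widely used but informal thresholds of 0.1 and 0.25; it is one possible drift signal, not necessarily what any particular toolkit computes.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as per-bin proportions.

    PSI = sum over bins of (actual - expected) * ln(actual / expected).
    eps guards against empty bins (division by zero and log(0)).
    """
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Share of applicants per income band at training time vs. last month (hypothetical data).
training_dist = [0.25, 0.25, 0.25, 0.25]
production_dist = [0.10, 0.20, 0.30, 0.40]

psi = population_stability_index(training_dist, production_dist)
# Informal rule of thumb: < 0.1 stable, 0.1 to 0.25 watch, > 0.25 significant drift.
if psi > 0.25:
    action = "alert operators and open a retraining review"
elif psi > 0.1:
    action = "monitor closely"
else:
    action = "no action"
print(round(psi, 3), action)  # 0.228 monitor closely
```

Routing the highest band to a human-reviewed retraining process, rather than retraining silently, keeps the oversight principle intact during monitoring.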

Retire. Old models require secure deletion, especially those trained on personal data. Teams document lessons learned for future projects.

NIST’s MAP-MEASURE-MANAGE cycle and ISO 42001’s Plan-Do-Check-Act loop reinforce that governance is evergreen, not a one-time checklist.

Building Your Governance Foundation

Start with these five actions based on established frameworks:

Define your program. Document policies aligned with NIST AI RMF, ISO 42001, and relevant regulations. Leading organizations publish governance frameworks to demonstrate transparency.

Align objectives. Connect governance to business goals. Tie each risk control to the business metric it protects, so governance supports those goals while preventing harmful outcomes.

Assign clear roles. Everyone from board to junior engineers needs defined responsibilities. Use RACI charts. Update job descriptions. Create escalation paths.

Apply consistently. Governance covers every AI touchpoint, including third-party models. When using external AI services, organizations must validate them against their governance standards.

Adopt continuous improvement. Schedule monthly governance reviews, quarterly policy updates, and annual external audits. Governance frameworks evolve through multiple iterations as organizations learn.

4 Foundations for AI Governance by Lisa Yu

Implementation Patterns

Leading organizations build governance around existing risk management frameworks:

Financial services typically establish AI Review Boards combining technology and ethics perspectives. Models receive risk tier ratings determining testing requirements. Higher-risk models require more frequent performance reporting.
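Risk tiering works best as an explicit lookup, so that a model’s tier mechanically determines its testing and reporting obligations. A minimal sketch; the tier names, required tests, reporting intervals, and the two-question tiering rule are all hypothetical placeholders for an institution’s own model risk policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierPolicy:
    required_tests: tuple[str, ...]
    reporting_interval_days: int  # how often performance must be reported


# Hypothetical policy table: higher tiers mean more testing and more frequent reporting.
TIER_POLICIES = {
    "high": TierPolicy(("performance", "fairness", "robustness", "independent_validation"), 30),
    "medium": TierPolicy(("performance", "fairness"), 90),
    "low": TierPolicy(("performance",), 365),
}


def assign_tier(affects_individuals: bool, fully_automated: bool) -> str:
    """Toy tiering rule: automated decisions about individuals land in the highest tier."""
    if affects_individuals and fully_automated:
        return "high"
    if affects_individuals or fully_automated:
        return "medium"
    return "low"


tier = assign_tier(affects_individuals=True, fully_automated=True)
policy = TIER_POLICIES[tier]
print(tier, policy.reporting_interval_days, policy.required_tests)
```

Keeping the policy table separate from the tiering rule lets a review board adjust obligations without touching the classification logic.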

Healthcare organizations often create AI Centers of Excellence governing clinical algorithms. These frameworks mandate clinical review for AI touching patient care. Deployment requires appropriate medical oversight.

Manufacturing companies governing AI in autonomous equipment apply safety-critical systems standards. This includes testing protocols, simulations, and operator override capabilities. Human confirmation remains essential.

These patterns show governance accelerates innovation rather than blocking it. Standardized processes reduce deployment times by eliminating uncertainty and rework. Clear rules enable faster decisions.

Companies treating AI governance as overhead miss the point. It’s infrastructure. You wouldn’t run production systems without monitoring, backups, or security. AI needs identical operational discipline.

Organizations embedding governance from day zero transform AI from a compliance headache into a competitive, trustworthy, and scalable advantage.

Learn more about implementing your AI Governance Committee in our article:

AI Governance Committee Implementation: 8 Basic Stages to Mitigate Risks
