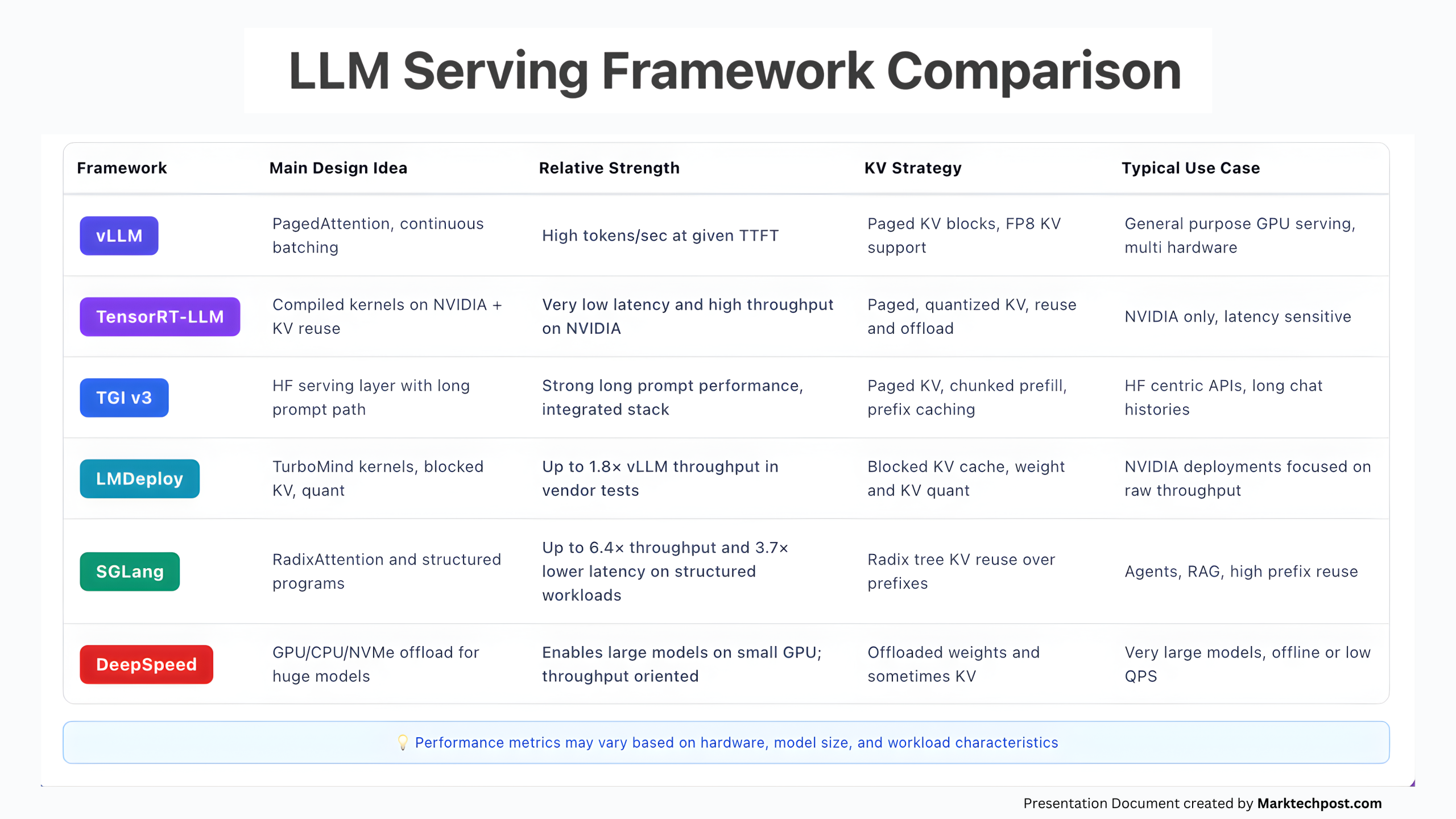

Large language models are now limited less by training and more by how fast and cheaply we can serve tokens under real traffic. That comes down to three implementation details: how the runtime batches requests, how it overlaps prefill and decode, and how it stores and reuses the KV cache. Different engines make different tradeoffs

The post Comparing the Top 6 Inference Runtimes for LLM Serving in 2025 appeared first on MarkTechPost. Read More