NIST Measure Function SOP

A structured procedural framework designed to support systematic assessment, analysis, tracking, and monitoring of AI risks and impacts

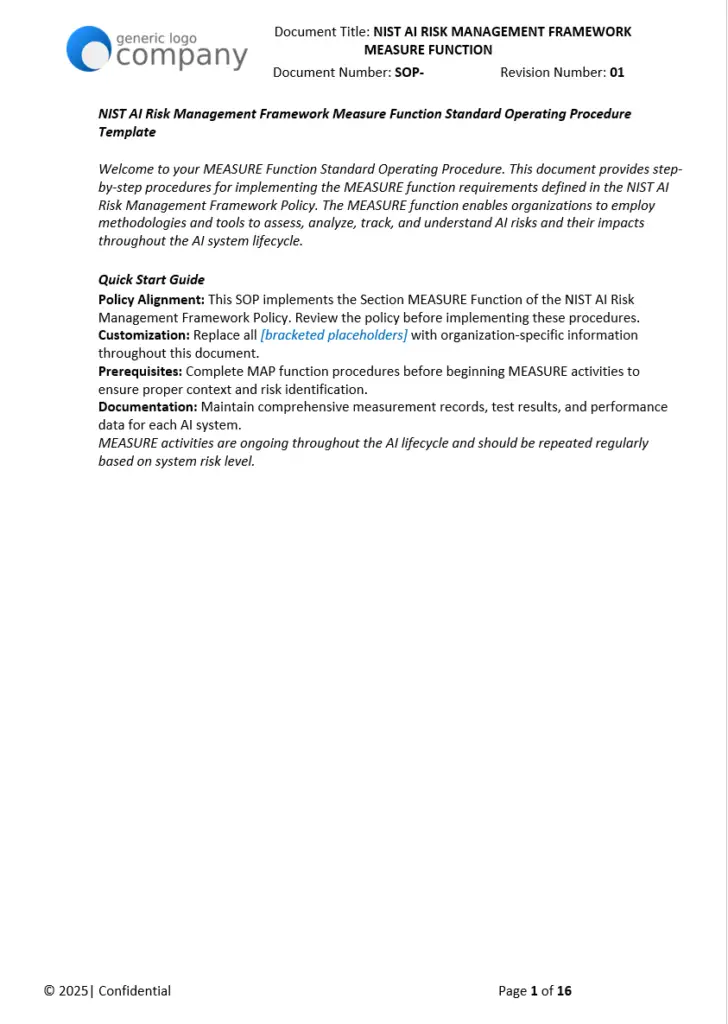

This Standard Operating Procedure (SOP) template provides detailed, step-by-step procedures for implementing the MEASURE function of the NIST AI Risk Management Framework. The MEASURE function enables organizations to employ methodologies and tools to assess, analyze, track, and understand AI risks and their impacts throughout the AI system lifecycle using quantitative, qualitative, or mixed-method approaches.

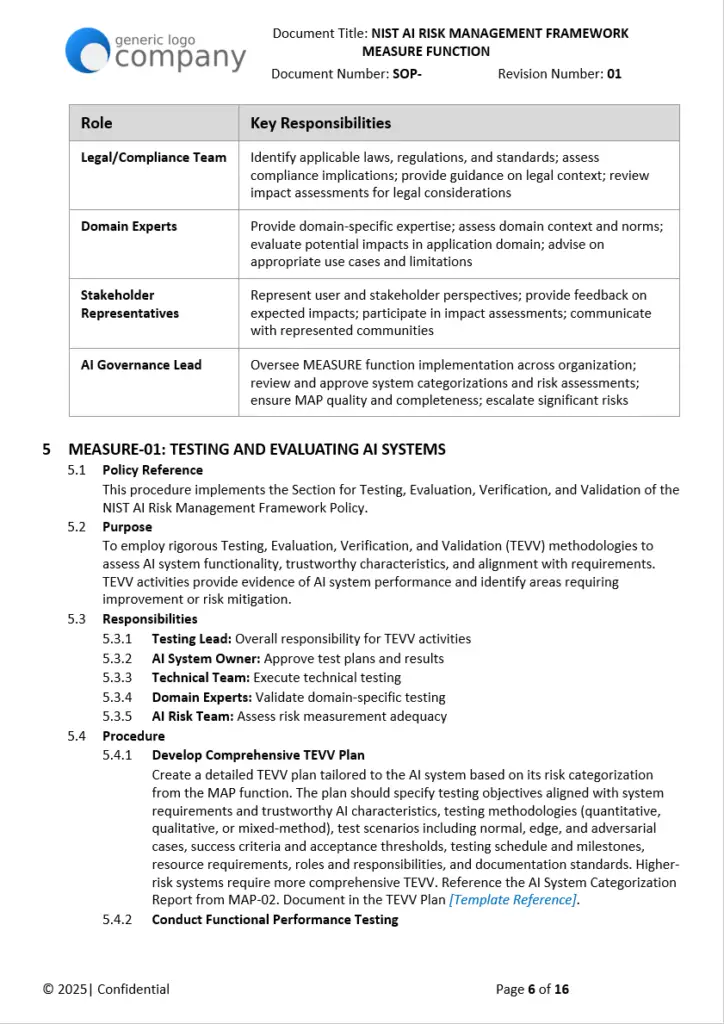

The template must be customized with organization-specific information, testing methodologies, and measurement systems, and every bracketed placeholder must be replaced with your organization's details. It supplies pre-built procedures for TEVV (Testing, Evaluation, Verification, and Validation), metrics tracking, controls validation, and continuous monitoring, so organizations can adapt existing procedures rather than develop measurement documentation from scratch.

Key Benefits

✓ Complete TEVV framework – Detailed procedures for testing AI system functionality, trustworthy characteristics, and performance across diverse conditions

✓ Metrics tracking infrastructure – Comprehensive guidance for defining, collecting, analyzing, and documenting AI system metrics with baseline and threshold specifications

✓ Controls validation procedures – Systematic approach for validating risk management control design effectiveness and operating effectiveness

✓ Performance analysis protocols – Structured processes for analyzing real-world AI system performance, impacts, and fairness outcomes in operational deployment

✓ Continuous monitoring guidance – Complete frameworks for detecting model drift, data drift, performance degradation, and trustworthiness deterioration

✓ Risk-based testing requirements – Procedures scaled to AI system risk categorization with more rigorous requirements for higher-risk systems

✓ Comprehensive deliverable specifications – Each procedure includes specific deliverables with format guidance and frequency requirements

Who Uses This?

This template is designed for:

- AI Testing Leads implementing comprehensive TEVV programs

- Data Scientists establishing metrics tracking and drift detection systems

- Internal Audit teams validating AI risk management controls

- AI System Owners responsible for performance monitoring and impact assessment

- Risk Management teams measuring and tracking AI-specific risks

- Organizations at any maturity level seeking structured AI measurement capabilities

What’s Included

The template contains 13 structured sections covering:

- Complete TEVV procedures for functional performance, trustworthy characteristics, stress testing, fairness, explainability, security, privacy, and human-AI interaction (MEASURE.01)

- Metrics definition, collection infrastructure, automated tracking, baselines, thresholds, and dashboards (MEASURE.02)

- Risk controls inventory, testing procedures, design and operating effectiveness validation, and gap analysis (MEASURE.03)

- Operational performance analysis, real-world impact assessment, fairness outcomes, and incident investigation (MEASURE.04)

- Continuous monitoring systems for model drift, data drift, performance degradation, and trustworthiness (MEASURE.05)

Each procedure section includes: policy reference, purpose statement, role responsibilities, detailed step-by-step procedures, comprehensive deliverable specifications, and documentation requirements.

Why This Matters

The NIST AI Risk Management Framework identifies MEASURE as essential for employing methodologies and tools to assess, analyze, track, and understand AI risks throughout the system lifecycle. Without rigorous measurement practices, organizations cannot validate whether AI systems perform as expected, meet trustworthy AI characteristics, or produce fair and equitable outcomes in real-world deployment.

Testing and evaluation provide empirical evidence of AI system capabilities and limitations. Metrics tracking enables early detection of performance degradation or emerging risks. Controls validation ensures implemented risk mitigations operate effectively. Performance and impact analysis reveals whether predicted risks materialize and identifies unanticipated consequences. Continuous monitoring detects drift and degradation requiring intervention.

Organizations deploying AI systems face growing expectations for transparency about system performance, fairness testing results, and ongoing monitoring practices. Regulators increasingly require evidence of systematic testing and measurement. This template provides structured procedures for generating that evidence while supporting continuous improvement of AI system trustworthiness.

Framework Alignment

This template explicitly aligns with:

- NIST AI Risk Management Framework 1.0 – Covers the MEASURE function through the template's five procedure areas (MEASURE.01 through MEASURE.05)

- NIST AI 600-1 – Addresses trustworthy AI characteristics through systematic testing and validation procedures

- OECD AI Principles – Incorporates transparency, accountability, and robustness testing

- General Quality Management Systems – Procedural structure can support organizations with existing measurement and monitoring frameworks

Key Features

Testing, Evaluation, Verification, and Validation (TEVV)

Comprehensive procedures for:

- Developing TEVV plans tailored to AI system risk categorization

- Conducting functional performance testing with accuracy and precision metrics

- Executing systematic testing of seven trustworthy AI characteristics

- Performing stress and boundary testing under degraded conditions

- Conducting fairness and bias testing across demographic groups

- Validating transparency and explainability with representative users

- Testing security against adversarial attacks

- Assessing privacy preservation and de-identification effectiveness

- Executing human-AI interaction testing for usability and oversight
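
As an illustration of the fairness and bias testing step, the sketch below computes per-group selection rates and the demographic parity difference for a set of test predictions. It is a minimal example, not part of the template: the function names, the group labels, and the sample data are all hypothetical, and demographic parity is only one of several fairness metrics an organization might select.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive predictions for each group.

    `records` is an iterable of (group, prediction) pairs, where
    prediction is 0 or 1. Hypothetical helper, not from the template.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(records):
    """Largest gap in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical test-set output: (group label, model decision)
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]
rates = selection_rates(sample)
gap = demographic_parity_difference(sample)
print(rates, gap)
```

Whether a given gap is acceptable depends on the application domain and the thresholds an organization sets for itself; the code only produces the number to compare.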

Metrics Tracking and Documentation

Detailed guidance for:

- Defining system-specific performance, business value, risk, fairness, trustworthiness, operational, and data quality metrics

- Establishing metrics collection infrastructure with automated pipelines

- Implementing automated metrics tracking with real-time dashboards and alerts

- Defining baseline values and threshold ranges for each metric

- Creating role-specific dashboards for executive, operational, technical, and compliance audiences

- Documenting metrics methodology with calculation formulas and data sources

- Establishing metrics review schedules based on system risk level

- Tracking metrics over time in historical databases
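
To make the idea of baseline values and threshold ranges concrete, here is a small sketch of how a metric definition and an alert check might be represented. The `MetricSpec` class, its field names, and the numeric values are assumptions chosen for illustration, and the check assumes a metric where higher values are better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricSpec:
    """Hypothetical metric definition with a baseline and two thresholds."""
    name: str
    baseline: float        # value recorded at validation time
    warn_below: float      # observed values under this raise a warning
    critical_below: float  # observed values under this raise a critical alert


def classify(spec, observed):
    """Map an observed value to an alert status for a higher-is-better metric."""
    if observed < spec.critical_below:
        return "critical"
    if observed < spec.warn_below:
        return "warning"
    return "ok"


# Illustrative numbers only; real thresholds come from the organization.
accuracy = MetricSpec(name="accuracy", baseline=0.92,
                      warn_below=0.90, critical_below=0.85)
statuses = [classify(accuracy, value) for value in (0.93, 0.88, 0.80)]
print(statuses)
```

Keeping the baseline alongside the thresholds in one record makes it easier to document where each alert level came from.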

Risk Controls Validation

Systematic procedures for:

- Inventorying all technical, process, organizational, and physical risk management controls

- Designing control testing procedures for both design and operating effectiveness

- Testing whether controls are appropriately designed to mitigate target risks

- Validating that controls operate as designed in practice through sampling and evidence review

- Collecting and organizing control evidence for audit purposes

- Interviewing control owners to assess practical execution and challenges

- Identifying control gaps, weaknesses, and circumvention opportunities

- Documenting validation results with severity classifications and remediation recommendations
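
Operating effectiveness testing through sampling can be sketched as follows: draw a reproducible random sample of control executions, review the evidence for each, and report the exception rate. The function names, the population of 200 executions, the sample size of 25, and the review outcome are hypothetical; the template itself does not prescribe a sampling method.

```python
import random


def sample_control_executions(execution_ids, sample_size, seed):
    """Draw a reproducible random sample of control executions to review."""
    population = list(execution_ids)
    rng = random.Random(seed)  # fixed seed so the sample can be re-derived
    return rng.sample(population, min(sample_size, len(population)))


def exception_rate(results):
    """Share of reviewed executions where the control did not operate as designed.

    `results` maps an execution ID to True (operated as designed) or False.
    """
    if not results:
        raise ValueError("no reviewed executions")
    failures = sum(1 for passed in results.values() if not passed)
    return failures / len(results)


population = range(1, 201)  # hypothetical IDs for 200 control executions
sampled = sample_control_executions(population, sample_size=25, seed=7)

# Hypothetical review outcome: one sampled execution lacked evidence.
reviewed = {execution_id: True for execution_id in sampled}
reviewed[sampled[0]] = False
rate = exception_rate(reviewed)
print(len(sampled), rate)
```

Recording the seed with the validation report lets an auditor regenerate the same sample later.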

Performance and Impact Analysis

Structured processes for:

- Collecting operational performance data across diverse conditions and user groups

- Analyzing system performance trends using statistical techniques

- Measuring actual versus expected performance with variance analysis

- Assessing real-world impacts on individuals, organizations, and society

- Analyzing fairness and equity outcomes across demographic groups in deployment

- Investigating incidents and failures with root cause analysis and corrective actions

- Gathering stakeholder feedback through surveys, interviews, and complaint analysis

- Synthesizing comprehensive performance and impact reports

Continuous Monitoring and Measurement Updates

Complete frameworks for:

- Establishing continuous monitoring systems with real-time dashboards and automated alerting

- Monitoring for model drift using baseline predictions and distribution shifts

- Monitoring for data drift with statistical comparisons to training data distributions

- Tracking performance degradation through comparison to historical baselines

- Monitoring trustworthy characteristics including fairness, explainability, reliability, and security metrics

- Detecting anomalies and outliers indicating potential attacks or misuse

- Updating measurement approaches as understanding improves and new risks emerge

- Triggering reassessment based on drift detection, system updates, or identified impacts
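
One common way to compare a live feature distribution with the training distribution is the Population Stability Index (PSI). The sketch below is an illustrative implementation using only the standard library; PSI is a swapped-in example technique rather than something the template specifies, and the bin edges, sample values, and `ALERT_THRESHOLD` are assumptions.

```python
import math


def psi(expected, actual, bin_edges, eps=1e-6):
    """Population Stability Index between two samples over shared bins.

    Values outside the bin edges are ignored. Empty bins are floored at
    `eps` so the logarithm stays defined.
    """
    def proportions(values):
        counts = [0] * (len(bin_edges) - 1)
        for value in values:
            for i in range(len(counts)):
                is_last = i == len(counts) - 1
                in_bin = bin_edges[i] <= value < bin_edges[i + 1]
                if in_bin or (is_last and value == bin_edges[-1]):
                    counts[i] += 1
                    break
        total = sum(counts)
        if total == 0:
            raise ValueError("no values fall inside the bin edges")
        return [max(count / total, eps) for count in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


ALERT_THRESHOLD = 0.25  # illustrative placeholder, set per organization

edges = [0.0, 0.25, 0.5, 0.75, 1.0]
training = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]  # hypothetical feature values
live = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9]      # hypothetical production values

score = psi(training, live, edges)
drift_detected = score > ALERT_THRESHOLD
print(round(score, 3), drift_detected)
```

Identical distributions give a PSI of zero, and the score grows as the live data moves away from the training data, which makes it straightforward to wire into threshold-based alerts.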

Risk-Based Measurement Requirements

Procedures are explicitly scaled to the AI system risk categorization from the MAP function: high-risk systems require weekly detailed reviews and comprehensive TEVV coverage, medium-risk systems require reviews every two weeks with standard testing protocols, and low-risk systems require monthly reviews with focused measurement. Testing depth, frequency, and rigor increase with system risk level.
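
The review cadence described here can be expressed as a simple lookup from risk tier to review interval. This sketch rests on two assumptions: "bi-weekly" is read as every 14 days, and "monthly" is approximated as 30 days. The tier names and function names are hypothetical.

```python
from datetime import date, timedelta

# Assumed intervals: weekly, every two weeks, and roughly monthly.
REVIEW_INTERVAL_DAYS = {"high": 7, "medium": 14, "low": 30}


def next_review(risk_tier, last_review):
    """Return the date the next review is due for a system in `risk_tier`."""
    try:
        interval = REVIEW_INTERVAL_DAYS[risk_tier]
    except KeyError:
        raise ValueError(f"unknown risk tier: {risk_tier!r}") from None
    return last_review + timedelta(days=interval)


def is_overdue(risk_tier, last_review, today):
    """True when `today` is past the due date of the next review."""
    return today > next_review(risk_tier, last_review)


last = date(2026, 2, 2)
schedule = {tier: next_review(tier, last) for tier in REVIEW_INTERVAL_DAYS}
print(schedule)
```

Organizations with a different risk tolerance would change only the interval table, leaving the scheduling logic untouched.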

Evidence Generation and Audit Support

Each procedure specifies deliverables that provide an audit trail of measurement activities, including TEVV plans and comprehensive test results, metrics definitions with baseline and threshold documentation, control validation reports with evidence documentation, performance and impact assessment reports, and monitoring system configurations with drift detection records.

Integration with Other NIST AI RMF Functions

Procedures reference prerequisites from the GOVERN function (governance structures and policies) and the MAP function (system categorization and risk identification), support the MANAGE function through controls validation and performance monitoring, and create feedback loops in which measurement findings inform risk management decisions.

Comparison Table: Ad-Hoc Testing vs. Professional SOP Template

| Aspect | Ad-Hoc Testing Approach | Professional MEASURE SOP Template |
|---|---|---|
| TEVV Coverage | Inconsistent testing focused on basic functionality | Comprehensive framework covering functional performance, seven trustworthy characteristics, stress conditions, fairness, explainability, security, privacy, and human-AI interaction |
| Metrics Program | Reactive metrics defined after issues emerge | Proactive metrics definition across performance, business value, risk, fairness, trustworthiness, operational, and data quality dimensions with automated tracking |
| Controls Validation | Assumed controls work as intended | Systematic validation of both design effectiveness and operating effectiveness with evidence sampling and gap analysis |
| Performance Monitoring | Post-incident analysis when problems occur | Continuous monitoring with real-time dashboards, automated alerting, and statistical trend analysis |
| Drift Detection | Manual observation of degraded performance | Automated detection systems for model drift, data drift, and distribution shifts with threshold-based alerts |
| Fairness Testing | Limited or absent fairness assessment | Comprehensive fairness and bias testing across demographic groups with multiple fairness metrics and root cause investigation |
| Documentation | Sparse documentation requiring reconstruction | Built-in deliverable specifications creating comprehensive audit trail and evidence repository |
| Risk-Based Rigor | Uniform approach regardless of system risk | Scaled procedures with measurement frequency and depth aligned to AI system risk categorization |

FAQ Section

Q: Does this template guarantee AI system compliance or certification?

A: This template provides procedural guidance designed to support NIST AI RMF MEASURE function implementation. Effective measurement depends on proper customization to organizational context, consistent execution of testing and monitoring procedures, and interpretation of results by qualified personnel. The template serves as implementation guidance for establishing measurement capabilities, not a guarantee of compliance outcomes or certification readiness.

Q: What technical expertise is required to implement these procedures?

A: Implementation requires personnel with expertise in AI/ML testing methodologies, statistical analysis, metrics engineering, and the specific AI techniques used in your systems. Fairness testing requires understanding of bias sources and fairness metrics. Security testing requires knowledge of adversarial attacks. Continuous monitoring requires skills in anomaly detection and drift analysis. Organizations should assess whether internal expertise is sufficient or external support is needed.

Q: How does this integrate with existing software testing processes?

A: The template’s TEVV procedures can complement existing software testing by adding AI-specific testing dimensions (fairness, explainability, drift detection) to standard functional and security testing. Organizations with established QA processes can integrate AI-specific test cases into existing test management systems. The metrics tracking and continuous monitoring procedures can extend existing application performance monitoring infrastructure.

Q: Are example test cases or metrics included in the template?

A: The template provides procedural guidance for developing test plans, defining metrics, and establishing monitoring systems specific to each AI system. Organizations need to develop actual test cases based on their system’s functionality, data, and risk profile. The template specifies what should be tested and measured rather than providing pre-defined test cases, as effective testing requires system-specific design.

Q: What file format is the template provided in?

A: Documents are optimized for Microsoft Word to ensure proper formatting and collaborative editing capabilities. The .docx format supports organizational version control, comment tracking, and iterative refinement as procedures are customized and implemented.

Q: How frequently should MEASURE procedures be executed?

A: Frequency depends on AI system risk categorization. The template specifies that high-risk systems require weekly detailed reviews with continuous monitoring, medium-risk systems require reviews every two weeks with ongoing metrics tracking, and low-risk systems require monthly reviews with periodic testing. Initial TEVV occurs before deployment, with reassessment triggered by significant changes, drift detection, incidents, or scheduled intervals.

Ideal For

- Organizations implementing comprehensive AI testing and evaluation programs aligned with NIST AI RMF guidance

- AI/ML teams establishing systematic measurement practices for model performance, fairness, and trustworthiness

- Internal audit and compliance teams responsible for validating AI risk management control effectiveness

- Data science teams building metrics tracking, drift detection, and continuous monitoring capabilities

- AI governance leads requiring evidence-based reporting on AI system performance and impacts

- Organizations preparing for AI governance audits or assessments requiring documented testing procedures

- Enterprises managing multiple AI systems and needing standardized measurement approaches across their portfolio

- Teams transitioning from ad-hoc testing to systematic, risk-based measurement programs

Pricing

Single Template – $5.00

Immediate download access upon purchase. Single-organization license for customization and internal use.

Bundle Option

This MEASURE Function SOP template may be combined with other NIST AI RMF function templates (GOVERN, MAP, MANAGE) and supporting testing documentation depending on organizational AI lifecycle coverage requirements and measurement program maturity.

Enterprise Option

Organizations requiring multiple licenses, technical consultation on testing methodologies, or comprehensive AI measurement program development should contact us for enterprise pricing and implementation support services.

Differentiator

This template provides comprehensive procedural detail for all five MEASURE procedure areas (MEASURE.01 through MEASURE.05), with specific step-by-step guidance for testing, metrics tracking, controls validation, performance analysis, and continuous monitoring. Unlike generic AI testing guides that focus primarily on model accuracy, this SOP template addresses the full spectrum of measurement activities called for by the NIST AI RMF, including systematic testing of trustworthy characteristics, fairness and bias assessment, controls validation, real-world impact analysis, and drift detection.

The template’s risk-based approach scales measurement rigor to system criticality, with detailed specifications for high-risk systems requiring comprehensive TEVV and continuous monitoring, and appropriately lighter procedures for medium- and low-risk systems. Built-in deliverable specifications for each procedure create comprehensive audit trails supporting both internal governance and external compliance requirements. The integration points with the GOVERN, MAP, and MANAGE functions provide a cohesive measurement program aligned with the overall AI risk management strategy.

Implementation Notes

Getting Started

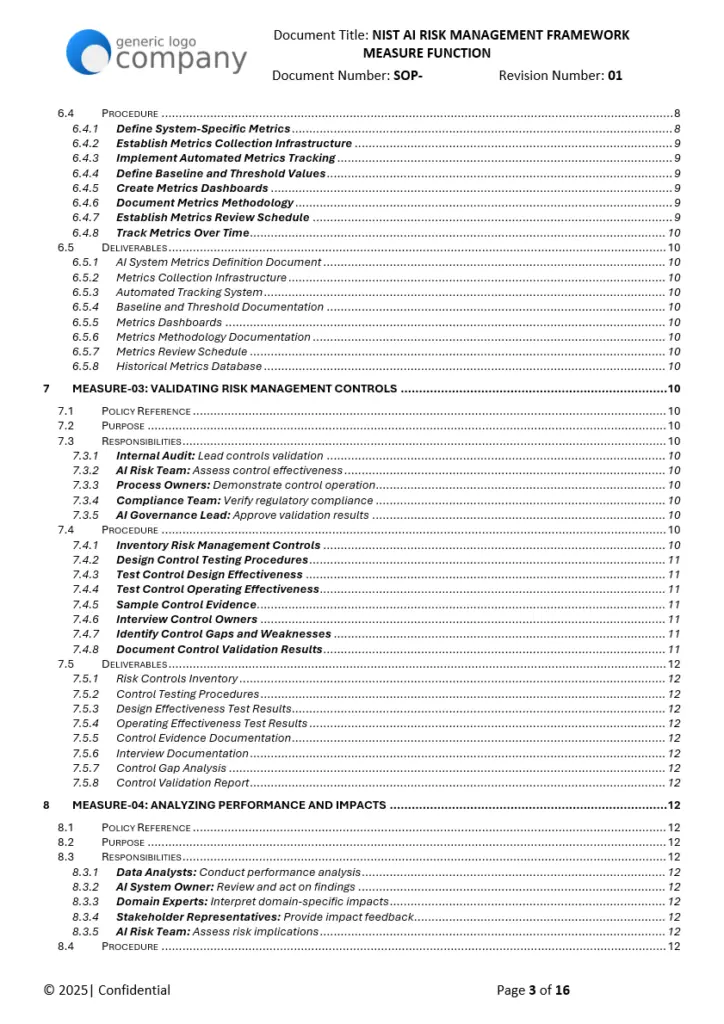

Organizations should complete the MAP function procedures before implementing MEASURE activities, so that AI systems have been properly categorized and their risks identified. The template's Quick Start Guide section recommends an implementation sequence and highlights that measurement rigor depends on risk categorization.

Prerequisites and Dependencies

MEASURE procedures assume that governance structures from the GOVERN function and risk identification from the MAP function already exist. Organizations should establish AI governance policies, accountability structures, and risk categorization before investing in comprehensive measurement infrastructure. The template references specific outputs from the MAP function, including AI System Categorization Reports and Impact Characterization Documents.

Customization Best Practices

- Replace all bracketed placeholders with organization-specific information systematically

- Adapt TEVV procedures to specific AI techniques used (deep learning, traditional ML, rule-based systems)

- Define metrics aligned with organizational KPIs and stakeholder expectations

- Select fairness metrics appropriate for application domain and demographic context

- Integrate monitoring infrastructure with existing observability and APM platforms

- Establish testing frequency based on system risk categorization and organizational risk tolerance

- Pilot procedures with a representative AI system before organization-wide rollout
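
For the first practice in the list above, a short script can help confirm that no bracketed placeholders remain after customization. This sketch assumes the customized document has been exported to plain text; the regular expression, the function name, and the sample placeholders are hypothetical, and legitimate bracketed text (such as citations) would also be reported and need manual review.

```python
import re

# Matches any single-line span wrapped in square brackets, e.g. "[Name]".
PLACEHOLDER = re.compile(r"\[[^\[\]\n]+\]")


def find_placeholders(text):
    """Return (line_number, placeholder) pairs for every bracketed span."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for match in PLACEHOLDER.finditer(line):
            hits.append((number, match.group(0)))
    return hits


# Hypothetical excerpt from a partially customized SOP.
draft = (
    "Owner: [AI Testing Lead Name]\n"
    "Scope: credit scoring model\n"
    "Review board: [Committee Name], chaired by [Chair]"
)
remaining = find_placeholders(draft)
for line_number, placeholder in remaining:
    print(f"line {line_number}: {placeholder}")
```

An empty result is a reasonable gate before a customized SOP goes out for approval.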

Technical Infrastructure Requirements

Effective implementation requires supporting infrastructure including test data management systems for storing diverse test datasets, metrics collection and analytics platforms for automated tracking, model and data drift detection tools with statistical analysis capabilities, monitoring dashboards with real-time visualization and alerting, and evidence management systems for organizing audit documentation.

Document Control

The template includes document control sections for version history tracking, approver signatures, and review schedules. Organizations should establish change management processes for SOP updates as measurement methodologies evolve, new testing techniques emerge, or regulatory requirements change.

Note: This template provides procedural guidance designed to support NIST AI RMF MEASURE function implementation. Effective measurement requires technical expertise in AI/ML testing, statistical analysis, and the specific AI techniques deployed. Organizations should assess whether internal capabilities are sufficient or external expertise is needed for implementing comprehensive measurement programs. The template serves as a structured starting point requiring customization to organizational context, AI system characteristics, and risk management requirements.

Product Type: Digital Download (Microsoft Word .docx format)

Version: 1.0

Last Updated: February 2026

License: Single organization use with rights to customize and adapt for internal AI measurement purposes

Legal Disclaimer

This template is provided for informational and implementation guidance purposes. It does not constitute technical, testing, or professional advice. Organizations should engage qualified AI/ML testing professionals to ensure measurement procedures are appropriate for their specific AI systems and risk profiles. Effectiveness of measurement procedures depends on proper customization, technical implementation, qualified personnel execution, and ongoing monitoring and improvement. Testing and measurement results should be interpreted by individuals with appropriate domain expertise and statistical knowledge.