Free NIST AI RMF 1.0 Self-Assessment Tool

Structured Framework Assessment for AI Risk Management Programs


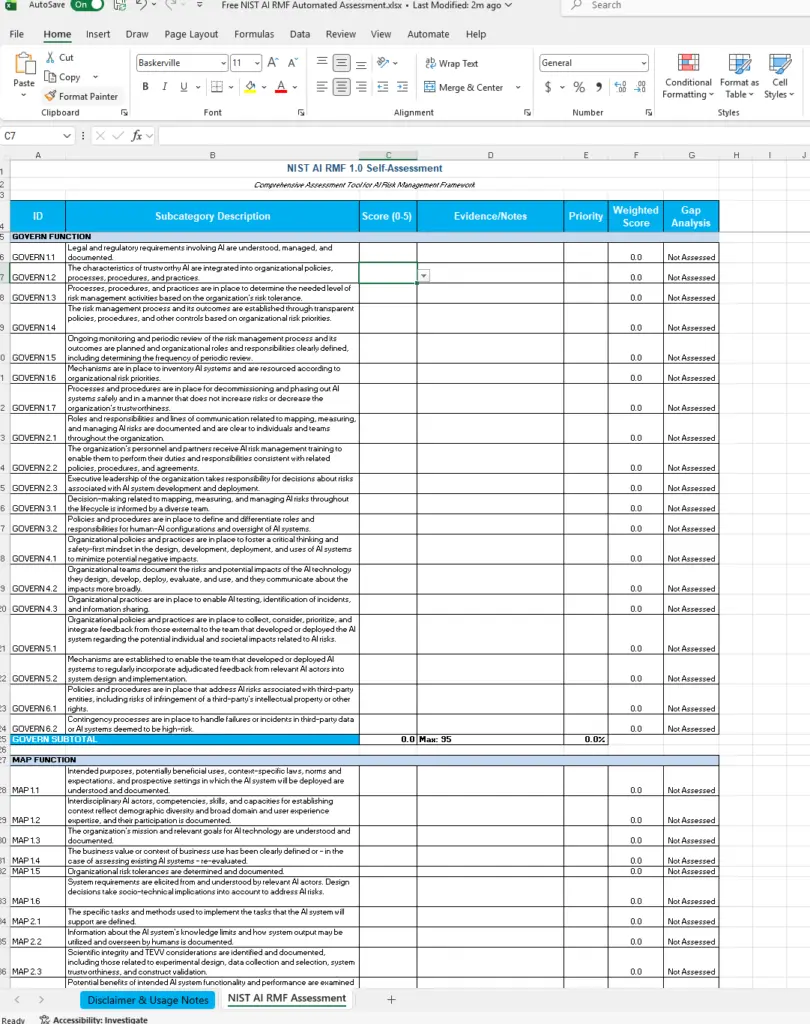

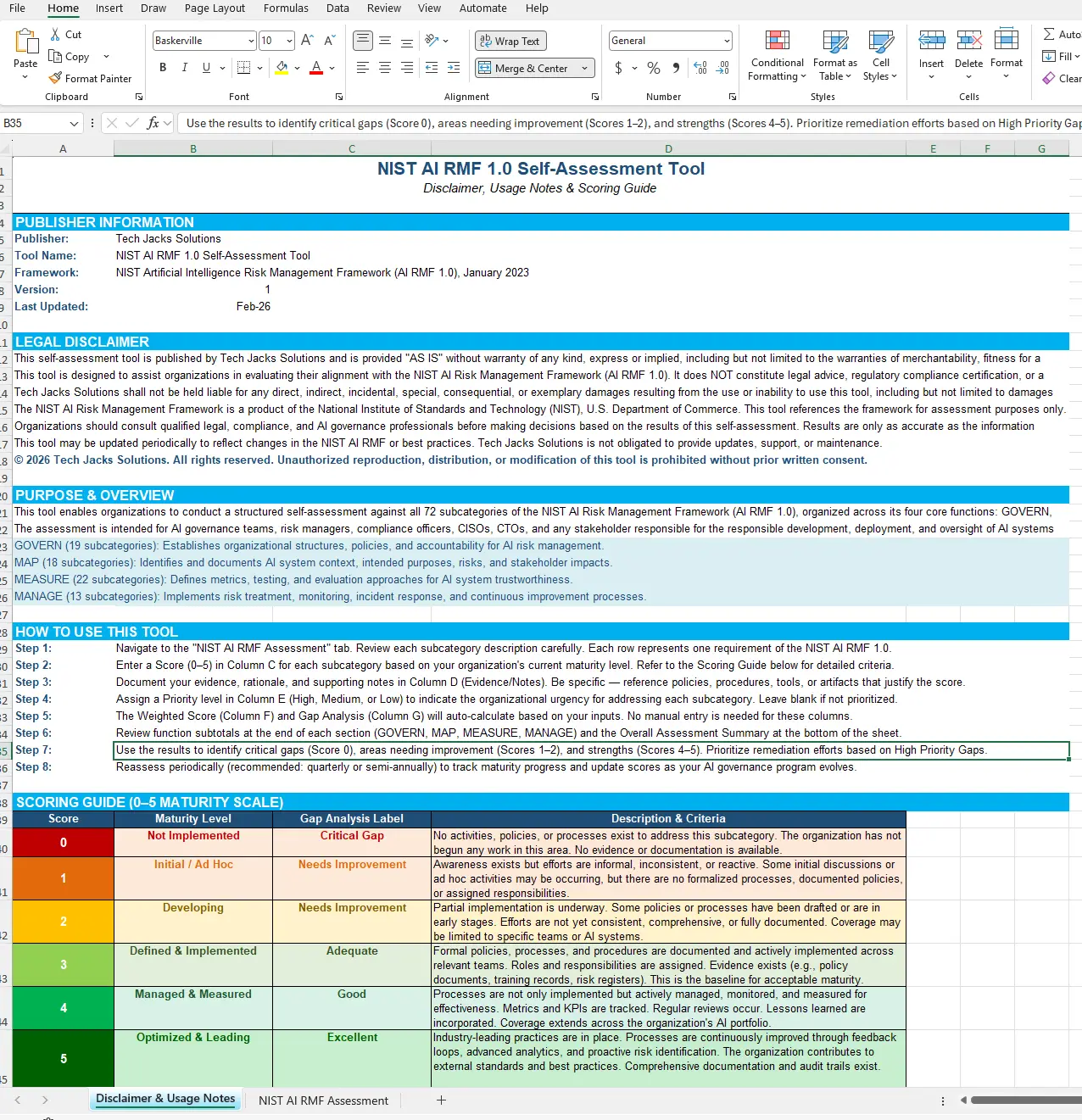

This self-assessment spreadsheet is designed to support organizations in conducting a comprehensive evaluation against the NIST Artificial Intelligence Risk Management Framework (AI RMF 1.0). The tool includes 72 assessment items organized across the framework’s four core functions, with automated scoring calculations, priority weighting, and gap analysis features.

The tool requires customization to your organization’s specific AI systems, risk environment, and governance structure. Organizations should expect to invest time documenting evidence, consulting the official NIST AI RMF 1.0 documentation (NIST AI 100-1), and involving cross-functional stakeholders in the assessment process. Results provide a baseline snapshot of AI governance maturity but do not constitute formal compliance certification or audit readiness.

Key Benefits

✓ Provides a framework for evaluating organizational alignment with all 72 NIST AI RMF 1.0 subcategories

✓ Includes automated weighted scoring formulas based on organizational priority levels

✓ Supports systematic documentation of evidence and assessment rationale

✓ Enables tracking of AI governance maturity progression through periodic reassessment

✓ Designed for cross-functional collaboration across AI governance teams

✓ Includes comprehensive usage guidance with maturity level definitions (0-5 scale)

Who Uses This Tool?

Designed for AI Governance Officers, Risk Managers, Compliance Teams, Chief Information Security Officers (CISOs), Chief Technology Officers (CTOs), Data Scientists, Legal Counsel, Internal Audit Teams, and organizations developing or deploying AI systems requiring structured risk management approaches.

Preview of Included Content

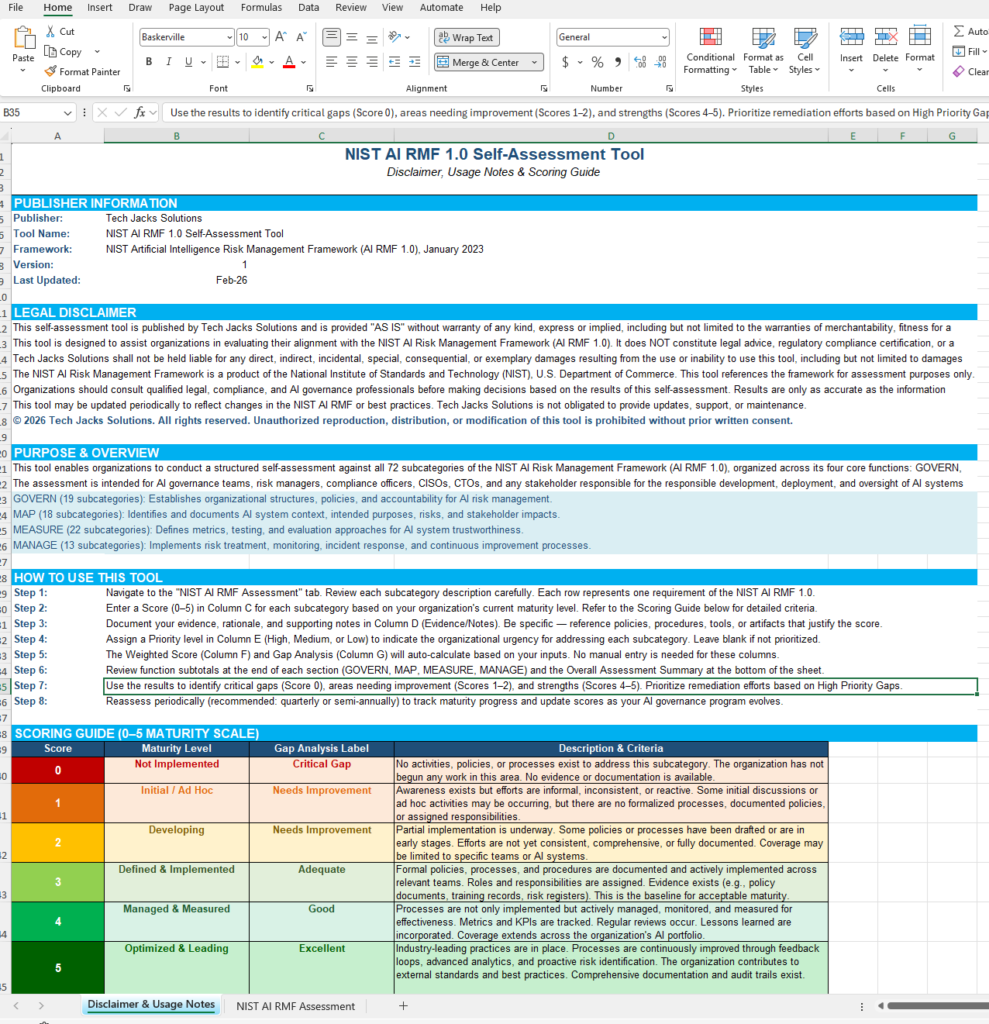

The tool includes two primary worksheets: (1) Assessment Sheet containing all 72 NIST AI RMF subcategories with scoring columns, evidence documentation fields, priority assignment, automated weighted scoring, and gap analysis calculations, and (2) Disclaimer & Usage Notes providing detailed scoring guidance, maturity level definitions, step-by-step instructions, priority weighting methodology, column reference guide, and best practices recommendations.

Why This Matters: The AI Risk Management Challenge

The NIST AI Risk Management Framework (AI RMF 1.0), published in January 2023 by the National Institute of Standards and Technology, provides voluntary guidance for organizations managing risks associated with artificial intelligence systems. The framework addresses challenges including algorithmic bias, transparency limitations, accountability gaps, security vulnerabilities, and trustworthiness concerns across the AI system lifecycle.

Organizations face increasing pressure from stakeholders, regulators, and customers to demonstrate responsible AI development and deployment practices. The NIST AI RMF provides a common language and structured approach for identifying, assessing, and managing AI-related risks. However, translating the framework’s 72 subcategories into actionable organizational assessments requires systematic evaluation tools.

This assessment tool is designed to support organizations in conducting baseline evaluations of their AI governance programs, identifying critical capability gaps, and prioritizing remediation efforts based on organizational risk tolerance and strategic objectives.

Framework Alignment

This tool is based on the NIST Artificial Intelligence Risk Management Framework (AI RMF 1.0), published January 2023 by the National Institute of Standards and Technology, U.S. Department of Commerce. It references framework subcategories for assessment purposes and does not modify or replace the official NIST guidance.

Framework Coverage:

- GOVERN Function (19 subcategories): Organizational structures, policies, roles, responsibilities, and accountability mechanisms for AI risk management

- MAP Function (18 subcategories): Context identification, stakeholder engagement, AI system categorization, impact assessment, and risk documentation

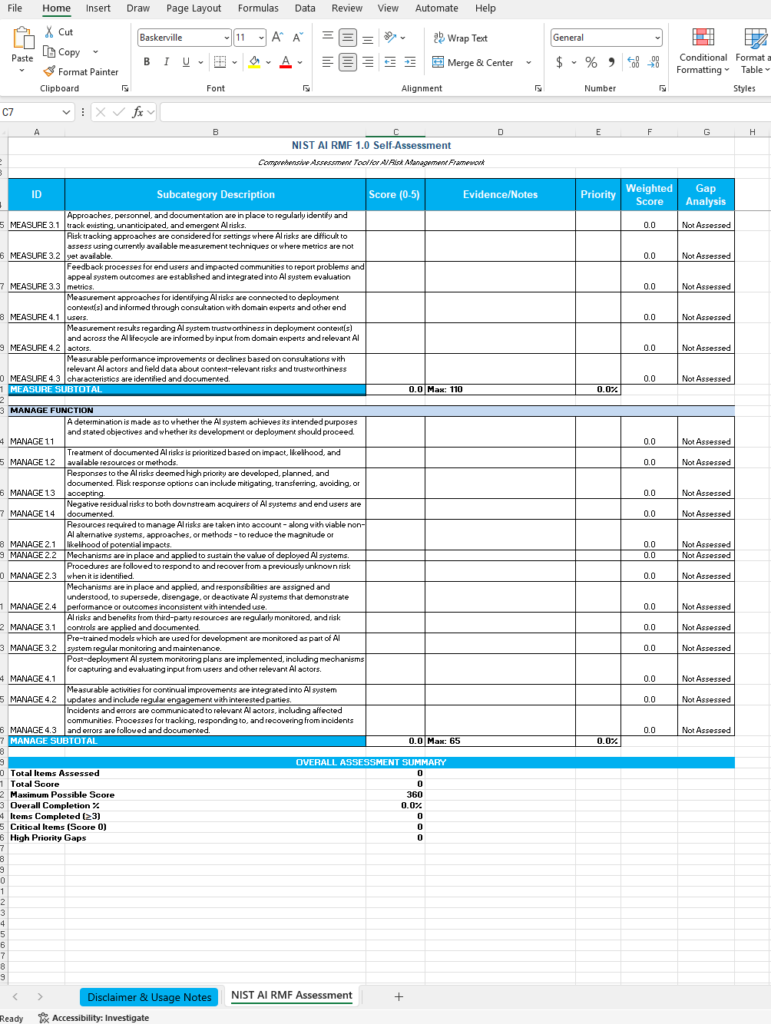

- MEASURE Function (22 subcategories): Metrics definition, testing approaches, evaluation methodologies, and trustworthiness validation

- MANAGE Function (13 subcategories): Risk treatment implementation, monitoring processes, incident response, and continuous improvement mechanisms
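As a quick sanity check, the per-function counts listed above can be summed to confirm they match the 72 assessment items. The snippet below is only an illustrative sketch; the dictionary name is hypothetical and is not part of the spreadsheet.

```python
# Subcategory counts per function, as listed in the Framework Coverage section.
# SUBCATEGORY_COUNTS is a hypothetical name used only for this illustration.
SUBCATEGORY_COUNTS = {
    "GOVERN": 19,
    "MAP": 18,
    "MEASURE": 22,
    "MANAGE": 13,
}

# Sum the four functions to get the total number of assessment items.
total = sum(SUBCATEGORY_COUNTS.values())
print(total)  # 72
```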

Key Features

The features below reflect the spreadsheet’s actual structure and functionality:

Assessment Structure

- 72 individual assessment items mapped to NIST AI RMF 1.0 subcategories

- Four functional sections (GOVERN, MAP, MEASURE, MANAGE) with subtotal calculations

- Official NIST framework language included for each subcategory requirement

Scoring Methodology

- 0-5 maturity scale with defined criteria for each level (Not Implemented to Optimized & Leading)

- Six maturity classifications: Not Implemented (0), Initial/Ad Hoc (1), Developing (2), Defined & Implemented (3), Managed & Measured (4), Optimized & Leading (5)

- Evidence documentation column for supporting rationale and policy references

Automated Calculations

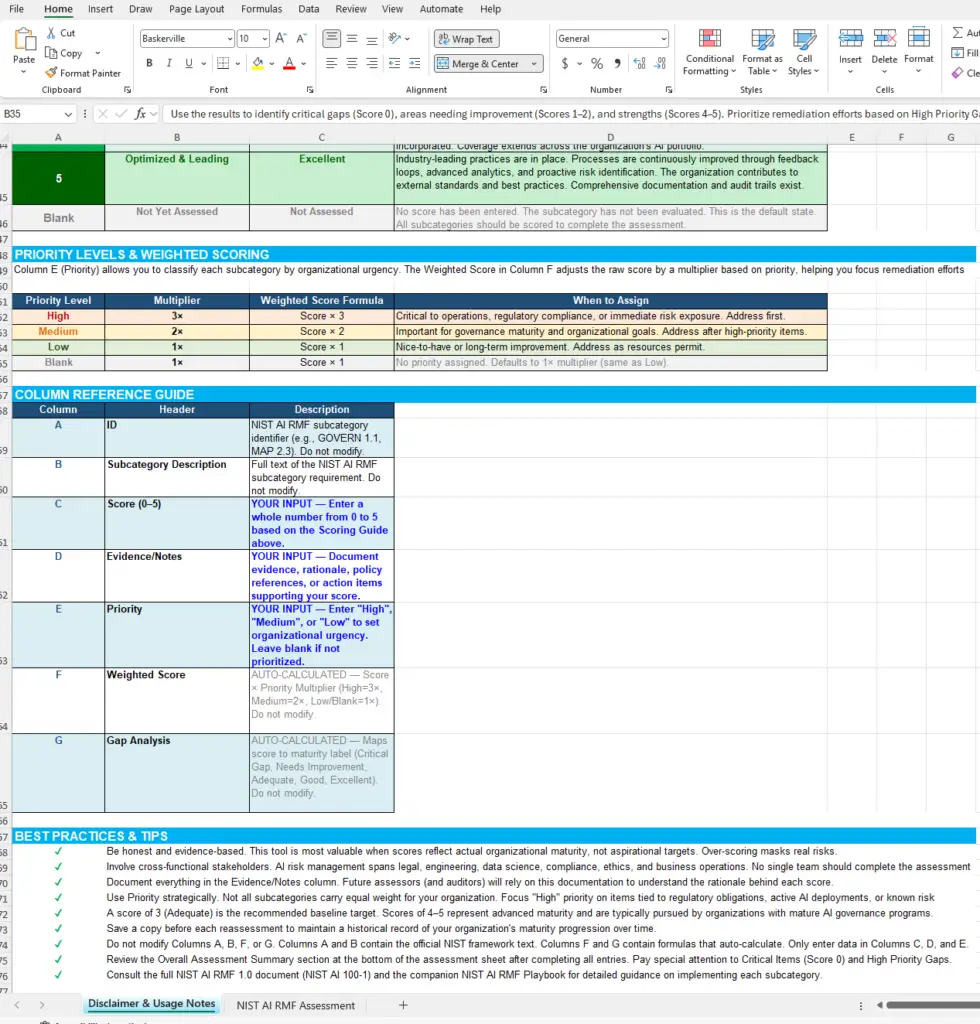

- Weighted scoring formulas applying priority multipliers (High=3×, Medium=2×, Low=1×)

- Automated gap analysis labels based on score values (Critical Gap, Needs Improvement, Adequate, Good, Excellent)

- Function subtotals and overall assessment summary metrics

- Completion percentage tracking and critical item identification
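The calculations above can be sketched in a few lines of Python. This is not the spreadsheet's formula set, which is not reproduced here. The priority multipliers come from the description above, but the score thresholds behind each gap label are an assumption made for illustration, and the function names are hypothetical.

```python
from typing import Optional

# Priority multipliers as described for the tool (High=3x, Medium=2x, Low=1x).
PRIORITY_MULTIPLIER = {"High": 3, "Medium": 2, "Low": 1}


def weighted_score(raw: int, priority: Optional[str]) -> int:
    """Multiply a 0-5 maturity score by its priority multiplier.

    A blank or unrecognized priority falls back to the Low multiplier (1x).
    """
    if not 0 <= raw <= 5:
        raise ValueError("raw score must be between 0 and 5")
    return raw * PRIORITY_MULTIPLIER.get(priority or "Low", 1)


def gap_label(raw: int) -> str:
    """Map a raw score to a gap label.

    ASSUMPTION: these thresholds are illustrative; the tool's actual
    cut-offs for each label are not stated on this page.
    """
    if raw == 0:
        return "Critical Gap"
    if raw <= 2:
        return "Needs Improvement"
    if raw == 3:
        return "Adequate"
    if raw == 4:
        return "Good"
    return "Excellent"
```

For example, under these assumptions a subcategory scored 2 with High priority would have a weighted score of 6 and would be labeled "Needs Improvement".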

Priority Management

- Organizational priority assignment field (High, Medium, Low)

- Weighted score calculations emphasizing high-priority items

- Strategic focus on critical gaps requiring immediate attention

Documentation Support

- Comprehensive disclaimer and legal usage terms

- Eight-step usage instructions with detailed guidance

- Maturity level definitions with specific implementation criteria

- Column reference guide explaining each field’s purpose

- Best practices recommendations for cross-functional assessment

Assessment Tracking

- Overall summary section tracking total items assessed, completion percentage, items meeting baseline maturity (score ≥3), critical items (score 0), and high-priority gaps

- Designed for periodic reassessment to track maturity progression over time
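The summary metrics described above could be computed roughly as follows. This is a minimal sketch rather than the spreadsheet's own logic: the `Item` class and `summarize` function are hypothetical, and the definition of a "high-priority gap" (a High-priority item scored below the baseline of 3) is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Item:
    subcategory: str        # e.g. "GOVERN 1.1"
    score: Optional[int]    # 0-5, or None if not yet assessed
    priority: str = "Low"   # "High", "Medium", or "Low"


def summarize(items: list[Item], baseline: int = 3) -> dict:
    """Compute summary metrics over a list of assessment items."""
    assessed = [i for i in items if i.score is not None]
    return {
        "total_items": len(items),
        "assessed": len(assessed),
        "completion_pct": round(100 * len(assessed) / len(items), 1) if items else 0.0,
        "meeting_baseline": sum(1 for i in assessed if i.score >= baseline),
        "critical_items": sum(1 for i in assessed if i.score == 0),
        # ASSUMPTION: a "high-priority gap" is a High item scored below baseline.
        "high_priority_gaps": sum(
            1 for i in assessed if i.priority == "High" and i.score < baseline
        ),
    }


# Small made-up sample; a full assessment would contain all 72 subcategories.
sample = [
    Item("GOVERN 1.1", 3, "High"),
    Item("MAP 1.1", 0, "High"),
    Item("MEASURE 1.1", 4, "Medium"),
    Item("MANAGE 1.1", None),
]
summary = summarize(sample)
print(summary)
```

Running the same function on successive assessments gives a simple way to compare completion and baseline counts between reassessment cycles.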

Comparison Table: Basic Checklist vs. Professional Assessment Tool

| Feature | Basic NIST AI RMF Checklist | This Structured Assessment Tool |
|---|---|---|
| Framework Coverage | May cover subset of subcategories | All 72 official NIST AI RMF 1.0 subcategories |
| Scoring Method | Simple yes/no or pass/fail | Six-level maturity scale (0-5) with defined criteria |
| Evidence Documentation | Limited or no documentation fields | Dedicated evidence/notes column for each item |
| Priority Weighting | No prioritization mechanism | Organizational priority assignment with weighted scoring |
| Gap Analysis | Manual interpretation required | Automated gap classification based on maturity scores |
| Progress Tracking | No built-in metrics | Overall summary with completion percentage and critical item counts |
| Usage Guidance | Minimal instructions | Comprehensive usage notes, scoring guide, and best practices |
| Formula Automation | Manual calculations required | Automated weighted scoring and gap analysis formulas |

Frequently Asked Questions

Q: Does this tool provide formal NIST AI RMF certification or audit readiness?

A: No. This tool is designed to support internal self-assessment activities. It does not constitute formal compliance certification, legal advice, or regulatory audit preparation. Organizations should consult qualified AI governance and compliance professionals before making decisions based on assessment results.

Q: What is the difference between the raw score and weighted score?

A: The raw score (0-5) reflects your organization’s current maturity level for each subcategory. The weighted score multiplies the raw score by a priority factor (High=3×, Medium=2×, Low/Blank=1×) to emphasize organizationally critical items in gap analysis and remediation planning.

Q: How often should organizations conduct this assessment?

A: The tool usage notes recommend periodic reassessment on a quarterly or semi-annual basis to track AI governance maturity progression. Assessment frequency should align with the organization’s AI system deployment pace, risk profile changes, and regulatory environment.

Q: Can this tool be used for multiple AI systems or must it be completed separately for each system?

A: The NIST AI RMF is designed as an organizational framework addressing enterprise-wide AI risk management capabilities. This tool assesses organizational maturity across all AI systems collectively. Organizations deploying multiple high-risk AI systems may benefit from system-specific risk assessments in addition to this organizational assessment.

Q: What file format is this tool provided in?

A: The assessment tool is provided as an Excel spreadsheet (.xlsx) with automated formulas and comprehensive usage documentation. Documents are optimized for Microsoft Word and Excel to ensure proper formatting and collaborative editing capabilities.

Q: What score should organizations target as a baseline?

A: The tool usage guidance identifies a score of 3 (Defined & Implemented) as the recommended baseline target, representing formal policies, documented processes, assigned responsibilities, and active implementation. Scores of 4-5 represent advanced maturity typically pursued by organizations with established AI governance programs.

Q: Do I need to complete all 72 subcategories?

A: Yes, for a comprehensive assessment. The NIST AI RMF is designed as an integrated framework where capabilities across all four functions (GOVERN, MAP, MEASURE, MANAGE) support trustworthy AI system development and deployment. However, organizations may choose to prioritize specific subcategories based on their AI use cases and risk environment.

Ideal For

- Organizations developing or deploying artificial intelligence systems requiring structured risk management

- AI Governance Officers establishing baseline capability assessments

- Risk Management teams evaluating AI-specific risk treatment approaches

- Compliance teams mapping organizational practices to NIST AI RMF guidance

- CISOs and CTOs building AI security and risk management programs

- Internal audit teams conducting AI governance capability reviews

- Cross-functional AI ethics and responsible AI committees

- Organizations pursuing NIST AI RMF implementation roadmaps

- Consultants supporting clients with AI risk management framework adoption

Pricing Strategy Options

Single Template: Free download available to support democratization of AI governance knowledge and remove barriers to responsible AI implementation.

Bundle Option: May be combined with additional AI governance templates, policy frameworks, or risk assessment tools based on organizational compliance scope and maturity objectives.

Enterprise Option: Organizations requiring customized assessment methodologies, integration with existing governance frameworks, or professional guidance should consult qualified AI governance practitioners.

What Makes This Tool Different

This assessment tool provides a structured, evidence-based approach to evaluating organizational AI risk management capabilities across all 72 NIST AI RMF 1.0 subcategories. Unlike basic checklists that offer binary pass/fail evaluations, this tool includes a six-level maturity scale with specific implementation criteria, automated weighted scoring based on organizational priorities, and comprehensive documentation support.

The tool is designed to support cross-functional collaboration by including evidence documentation fields, priority assignment mechanisms, and detailed usage guidance. Organizations can track AI governance maturity progression through periodic reassessment, focusing remediation efforts on high-priority gaps identified through automated gap analysis calculations.

The approach emphasizes honest, evidence-based self-assessment rather than aspirational scoring, with best practices guidance recommending involvement of stakeholders across legal, engineering, data science, compliance, ethics, and business operations functions.

Legal Notice

This tool is published by Tech Jacks Solutions and is provided “AS IS” without warranty of any kind. It is designed to assist organizations in evaluating their alignment with the NIST AI RMF 1.0 and does NOT constitute legal advice, regulatory compliance certification, or a formal audit. Use of this tool does not guarantee compliance with any law, regulation, or standard.

The NIST AI Risk Management Framework is a product of the National Institute of Standards and Technology (NIST), U.S. Department of Commerce. This tool references the framework for assessment purposes only. NIST does not endorse, certify, or sponsor this tool.

Organizations should consult qualified legal, compliance, and AI governance professionals before making decisions based on assessment results. Results are only as accurate as the information provided by the assessor.

© 2026 Tech Jacks Solutions. For informational purposes only — not legal or compliance advice.