Text-Guided Layer Fusion Mitigates Hallucination in Multimodal LLMs

cs.AI updates on arXiv.org

arXiv:2601.03100v2 Announce Type: replace-cross

Abstract: Multimodal large language models (MLLMs) typically rely on a single late-layer feature from a frozen vision encoder, leaving the encoder’s rich hierarchy of visual cues under-utilized. MLLMs still suffer from visually ungrounded hallucinations, often relying on language priors rather than image evidence. While many prior mitigation strategies operate on the text side, they leave the visual representation unchanged and do not exploit the rich hierarchy of features encoded across vision layers. Existing multi-layer fusion methods partially address this limitation but remain static, applying the same layer mixture regardless of the query. In this work, we introduce TGIF (Text-Guided Inter-layer Fusion), a lightweight module that treats encoder layers as depth-wise “experts” and predicts a prompt-dependent fusion of visual features. TGIF follows the principle of direct external fusion, requires no vision-encoder updates, and adds minimal overhead. Integrated into LLaVA-1.5-7B, TGIF provides consistent improvements across hallucination, OCR, and VQA benchmarks, while preserving or improving performance on ScienceQA, GQA, and MMBench. These results suggest that query-conditioned, hierarchy-aware fusion is an effective way to strengthen visual grounding and reduce hallucination in modern MLLMs.
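The idea of a prompt-dependent mixture over encoder layers can be illustrated with a minimal sketch. This is not the TGIF implementation: the abstract does not describe the router, so the dot-product scoring, the `layer_keys` parameters, and the function name `fuse_layers` are all assumptions made purely for illustration. The sketch scores each vision layer against a text embedding, turns the scores into softmax weights, and returns the weighted sum of per-layer patch features.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def fuse_layers(
    layer_features: List[List[Vector]],  # layer_features[l][p] = patch p at layer l
    text_embedding: Vector,              # pooled embedding of the prompt
    layer_keys: List[Vector],            # hypothetical learned key per layer
) -> Tuple[List[Vector], List[float]]:
    """Return (fused patch features, per-layer weights).

    Assumption: the router is a plain dot product between the text
    embedding and one key per layer. The real module may differ.
    """
    scores = [dot(text_embedding, key) for key in layer_keys]
    weights = softmax(scores)  # one weight per encoder layer, sums to 1

    num_patches = len(layer_features[0])
    dim = len(layer_features[0][0])
    fused = [
        [
            sum(w * layer_features[l][p][d] for l, w in enumerate(weights))
            for d in range(dim)
        ]
        for p in range(num_patches)
    ]
    return fused, weights


if __name__ == "__main__":
    # Two layers, one patch, two feature dimensions (toy values).
    layers = [[[1.0, 0.0]], [[0.0, 1.0]]]
    fused, weights = fuse_layers(layers, [1.0, 0.0], [[5.0, 0.0], [0.0, 0.0]])
    print(weights, fused)
```

Because the weights depend on the text embedding, two different prompts over the same image yield different layer mixtures, which is the contrast with static fusion that the abstract draws. The vision features themselves are only read, never updated, matching the frozen-encoder setting.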

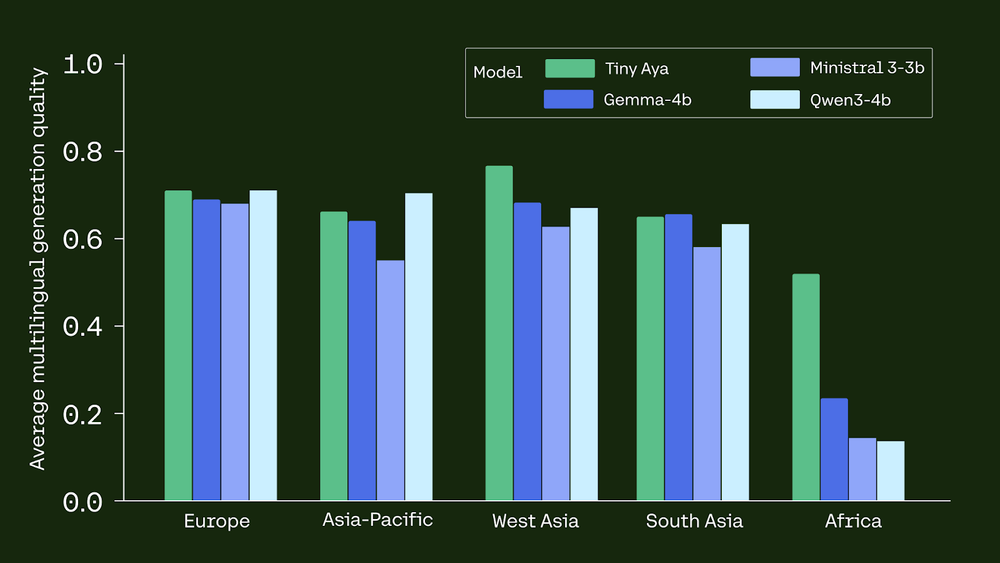

Cohere Releases Tiny Aya: A 3B-Parameter Small Language Model that Supports 70 Languages and Runs Locally Even on a Phone

MarkTechPost

Cohere AI Labs has released Tiny Aya, a family of small language models (SLMs) that redefines multilingual performance. While many models scale by increasing parameters, Tiny Aya uses a 3.35B-parameter architecture to deliver state-of-the-art translation and generation across 70 languages. The release includes 5 models: Tiny Aya Base (pretrained), Tiny Aya Global (balanced instruction-tuned), and

The post Cohere Releases Tiny Aya: A 3B-Parameter Small Language Model that Supports 70 Languages and Runs Locally Even on a Phone appeared first on MarkTechPost.

The Complete Hugging Face Primer for 2026

KDnuggets

Check out this practical 2026 guide to Hugging Face. Explore transformers, datasets, sentiment analysis, APIs, fine-tuning, and deployment with Python.

SS&C Blue Prism: On the journey from RPA to agentic automation

AI News

For organizations that are still wedded to the rules and structures of robotic process automation (RPA), considering agentic AI as the next step for automation may be faintly terrifying. SS&C Blue Prism, however, is here to help, taking customers on the journey from RPA to agentic automation at a pace with which they’re comfortable.

The post SS&C Blue Prism: On the journey from RPA to agentic automation appeared first on AI News.

Insurance giant AIG deploys agentic AI with orchestration layer

AI News

American International Group (AIG) has reported faster-than-expected gains from its use of generative AI, with implications for underwriting capacity, operating cost, and portfolio integration. The company’s recent disclosures at an Investor Day merit attention from AI decision-makers as they contain assertions about measurable throughput and workflow redesign. AIG has outlined potential benefits from

The post Insurance giant AIG deploys agentic AI with orchestration layer appeared first on AI News.

Top 7 Python Libraries for Progress Bars

KDnuggets

This article covers the top seven Python libraries for implementing progress bars, with practical examples to help you quickly add progress tracking to data processing, machine learning, and automation workflows.

Alibaba Qwen is challenging proprietary AI model economics

AI News

The release of Alibaba’s latest Qwen model challenges proprietary AI model economics with comparable performance on commodity hardware. While US-based labs have historically held the performance advantage, open-source alternatives like the Qwen 3.5 series are closing the gap with frontier models. This offers enterprises a potential reduction in inference costs and increased flexibility in deployment

The post Alibaba Qwen is challenging proprietary AI model economics appeared first on AI News.

Goldman Sachs deploys Anthropic systems with success

AI News

Goldman Sachs plans to deploy Anthropic’s Claude model in trade accounting and client onboarding, and, according to an article in American Banker, presents this as part of a broader push among large banks to use generative artificial intelligence to improve efficiency. The focus is on operational processes that sit in the back office and have

The post Goldman Sachs deploys Anthropic systems with success appeared first on AI News.

Beyond Static Snapshots: Dynamic Modeling and Forecasting of Group-Level Value Evolution with Large Language Models

cs.AI updates on arXiv.org

arXiv:2602.14043v1 Announce Type: cross

Abstract: Social simulation is critical for mining complex social dynamics and supporting data-driven decision making. LLM-based methods have emerged as powerful tools for this task by leveraging human-like social questionnaire responses to model group behaviors. Existing LLM-based approaches predominantly focus on group-level values at discrete time points, treating them as static snapshots rather than dynamic processes. However, group-level values are not fixed but shaped by long-term social changes. Modeling their dynamics is thus crucial for accurate social evolution prediction, a key challenge in both data mining and social science. This problem remains underexplored due to limited longitudinal data, group heterogeneity, and intricate historical event impacts.

To bridge this gap, we propose a novel framework for group-level dynamic social simulation by integrating historical value trajectories into LLM-based human response modeling. We select China and the U.S. as representative contexts, conducting stratified simulations across four core sociodemographic dimensions (gender, age, education, income). Using the World Values Survey, we construct a multi-wave, group-level longitudinal dataset to capture historical value evolution, and then propose the first event-based prediction method for this task, unifying social events, current value states, and group attributes into a single framework. Evaluations across five LLM families show substantial gains: a maximum 30.88% improvement on seen questions and 33.97% on unseen questions over the Vanilla baseline. We further find notable cross-group heterogeneity: U.S. groups are more volatile than Chinese groups, and younger groups in both countries are more sensitive to external changes. These findings advance LLM-based social simulation and provide new insights for social scientists to understand and predict social value changes.

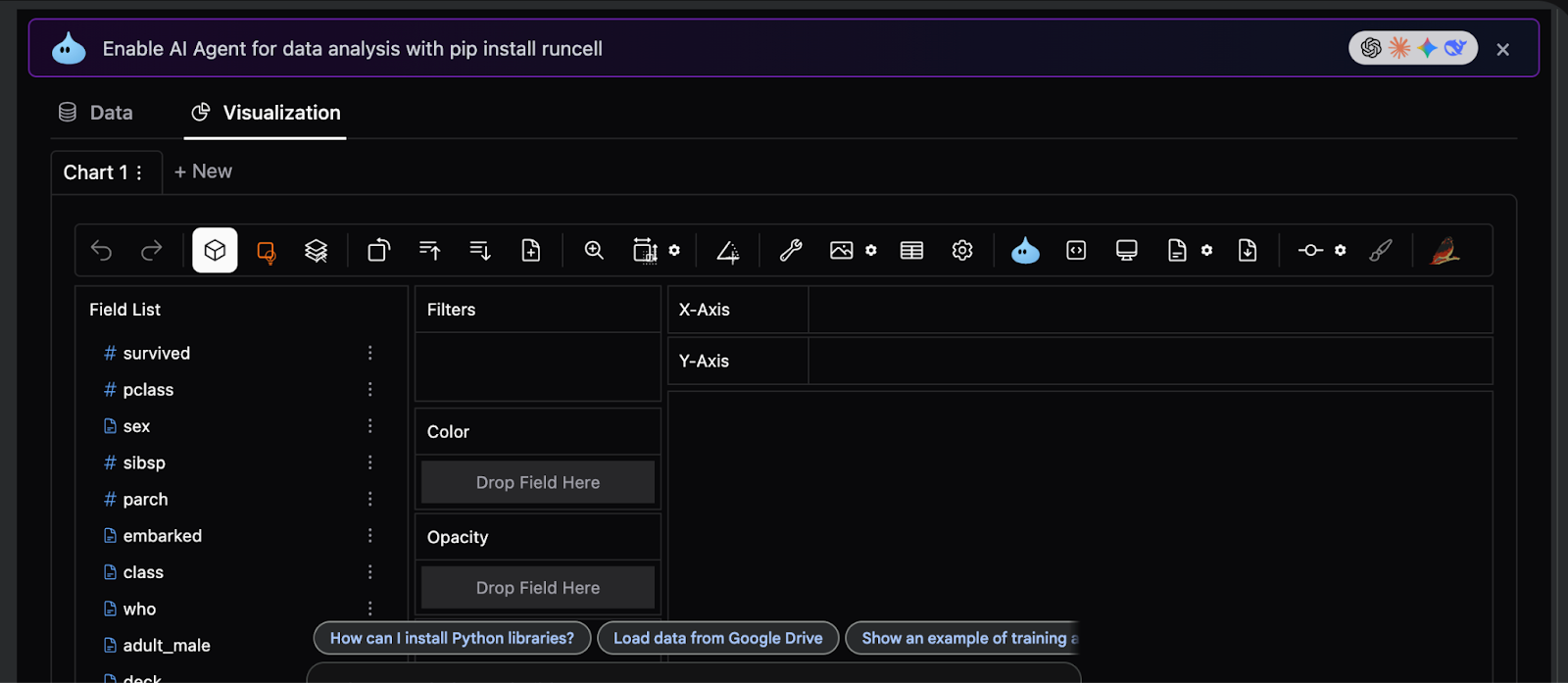

How to Build an Advanced, Interactive Exploratory Data Analysis Workflow Using PyGWalker and Feature-Engineered Data

MarkTechPost

In this tutorial, we demonstrate how to move beyond static, code-heavy charts and build a genuinely interactive exploratory data analysis workflow directly using PyGWalker. We start by preparing the Titanic dataset for large-scale interactive querying. These analysis-ready engineered features reveal the underlying structure of the data while enabling both detailed row-level exploration and high-level aggregated

The post How to Build an Advanced, Interactive Exploratory Data Analysis Workflow Using PyGWalker and Feature-Engineered Data appeared first on MarkTechPost.
