Security professionals are moving up the executive ranks as enterprises face rising regulatory and compliance standards.

The truth left out from Elon Musk’s recent court filing (OpenAI News)

Cutting LLM Memory by 84%: A Deep Dive into Fused Kernels (Towards Data Science)

Why your final LLM layer is OOMing and how to fix it with a custom Triton kernel.

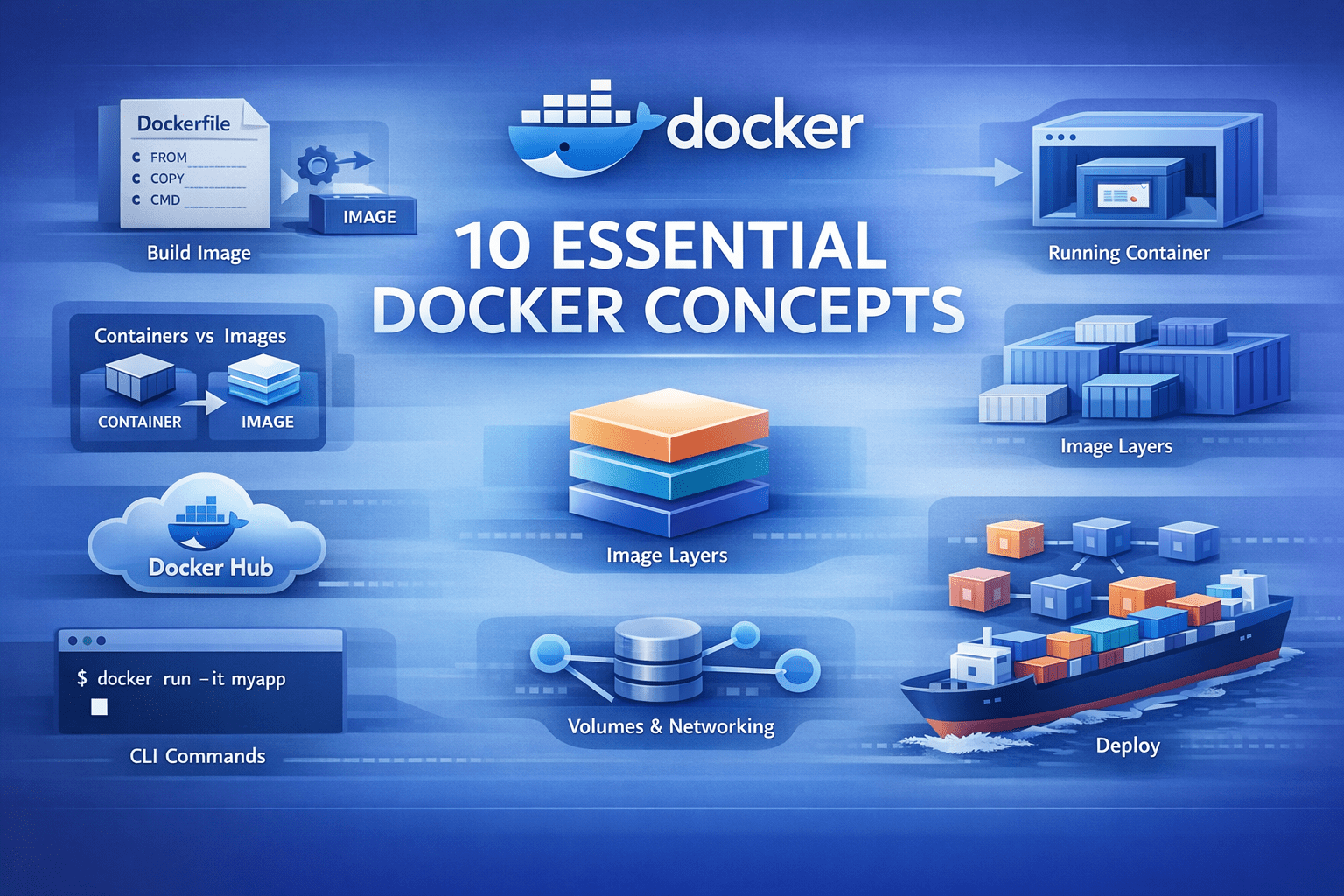

10 Essential Docker Concepts Explained in Under 10 Minutes (KDnuggets)

Images, containers, volumes, and networks… Docker terms often sound complex to beginners. This quick guide explains the Docker essentials you need to get started.

Deploy AI agents on Amazon Bedrock AgentCore using GitHub Actions (Artificial Intelligence)

In this post, we demonstrate how to use a GitHub Actions workflow to automate the deployment of AI agents on AgentCore Runtime. This approach delivers a scalable solution with enterprise-level security controls, providing complete continuous integration and delivery (CI/CD) automation.

From beginner to champion: A student’s journey through the AWS AI League ASEAN finals (Artificial Intelligence)

The AWS AI League, launched by Amazon Web Services (AWS), expanded its reach to the Association of Southeast Asian Nations (ASEAN) last year, welcoming student participants from Singapore, Indonesia, Malaysia, Thailand, Vietnam, and the Philippines. In this blog post, you’ll hear directly from the AWS AI League champion, Blix D. Foryasen, as he reflects on the challenges, breakthroughs, and key lessons from the competition.

How Palo Alto Networks enhanced device security infra log analysis with Amazon Bedrock (Artificial Intelligence)

Palo Alto Networks’ Device Security team wanted to detect early warning signs of potential production issues so that SMEs have more time to react to emerging problems. They partnered with the AWS Generative AI Innovation Center (GenAIIC) to develop an automated log classification pipeline powered by Amazon Bedrock. In this post, we discuss how Amazon Bedrock, through Anthropic’s Claude Haiku model, and Amazon Titan Text Embeddings work together to automatically classify and analyze log data. We explore how this automated pipeline detects critical issues, examine the solution architecture, and share implementation insights that have delivered measurable operational improvements.

OpenAI’s $8 ChatGPT Go subscription, which gives you 10x more messages, is now available in the United States and other regions. […]

Safety Not Found (404): Hidden Risks of LLM-Based Robotics Decision Making (cs.AI updates on arXiv.org)

arXiv:2601.05529v2 Announce Type: replace

Abstract: One mistake by an AI system in a safety-critical setting can cost lives. As Large Language Models (LLMs) become integral to robotics decision-making, the physical dimension of risk grows; a single wrong instruction can directly endanger human safety. This paper addresses the urgent need to systematically evaluate LLM performance in scenarios where even minor errors are catastrophic. Through a qualitative evaluation of a fire evacuation scenario, we identified critical failure cases in LLM-based decision-making. Based on these, we designed seven tasks for quantitative assessment, categorized into: Complete Information, Incomplete Information, and Safety-Oriented Spatial Reasoning (SOSR). Complete information tasks utilize ASCII maps to minimize interpretation ambiguity and isolate spatial reasoning from visual processing. Incomplete information tasks require models to infer missing context, testing for spatial continuity versus hallucinations. SOSR tasks use natural language to evaluate safe decision-making in life-threatening contexts. We benchmark various LLMs and Vision-Language Models (VLMs) across these tasks. Beyond aggregate performance, we analyze the implications of a 1% failure rate, highlighting how “rare” errors escalate into catastrophic outcomes. Results reveal serious vulnerabilities: several models achieved a 0% success rate in ASCII navigation, while in a simulated fire drill, models instructed robots to move toward hazardous areas instead of emergency exits. Our findings lead to a sobering conclusion: current LLMs are not ready for direct deployment in safety-critical systems. A 99% accuracy rate is dangerously misleading in robotics, as it implies one out of every hundred executions could result in catastrophic harm. We demonstrate that even state-of-the-art models cannot guarantee safety, and absolute reliance on them creates unacceptable risks.
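The abstract's point about a 1% failure rate can be illustrated with a short calculation. The sketch below is not from the paper: it assumes each execution fails independently with the same probability (a simplification), and the function name `prob_at_least_one_failure` is hypothetical. Under that assumption, the chance of at least one failure over n executions is 1 - (1 - p)^n.

```python
def prob_at_least_one_failure(per_run_failure_rate: float, runs: int) -> float:
    """Probability of one or more failures in `runs` executions.

    Assumes every execution fails independently with the same rate,
    which is an illustrative simplification, not a claim from the paper.
    """
    if not 0.0 <= per_run_failure_rate <= 1.0:
        raise ValueError("per_run_failure_rate must be between 0 and 1")
    if runs < 0:
        raise ValueError("runs must be non-negative")
    return 1.0 - (1.0 - per_run_failure_rate) ** runs


if __name__ == "__main__":
    # A "99% accurate" system corresponds to a 1% per-run failure rate.
    for n in (1, 10, 100, 500):
        print(n, round(prob_at_least_one_failure(0.01, n), 4))
```

With a 1% per-run failure rate, a single execution is very likely to succeed, but across 100 independent executions the chance of at least one failure is roughly 63%, which is why a 99% accuracy figure can look safer than it is.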

Machine Learning and Theory Ladenness — A Phenomenological Account (cs.AI updates on arXiv.org)

arXiv:2409.11277v2 Announce Type: replace

Abstract: We provide an analysis of theory ladenness in machine learning in science, where “theory”, which we call “domain theory”, refers to the domain knowledge of the scientific discipline where ML is used. By constructing an account of ML models based on a comparison with phenomenological models, we show, against recent trends in philosophy of science, that ML model-building is mostly indifferent to domain theory, even if the model remains theory laden in a weak sense, which we call theory infection. These claims, we argue, have far-reaching consequences for the transferability of ML across scientific disciplines, and shift the priorities of the debate on theory ladenness in ML from descriptive to normative.
