French prosecutors raided X’s offices in Paris on Tuesday as part of a criminal investigation into the platform’s Grok AI tool, widely used to generate sexually explicit images. […]

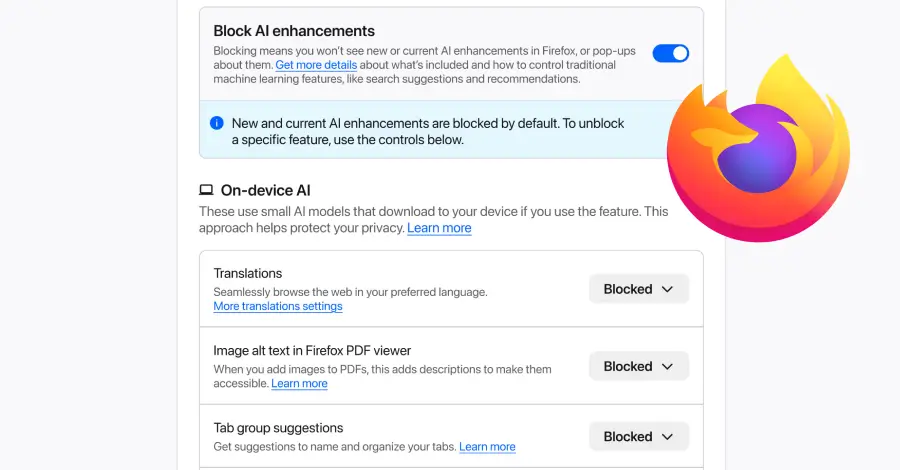

Mozilla on Monday announced a new controls section in its Firefox desktop browser settings that allows users to completely turn off generative artificial intelligence (GenAI) features. “It provides a single place to block current and future generative AI features in Firefox,” Ajit Varma, head of Firefox, said. “You can also review and manage individual AI […]

DIVERGE: Diversity-Enhanced RAG for Open-Ended Information Seeking

cs.AI updates on arXiv.org, arXiv:2602.00238v1, Announce Type: cross

Abstract: Existing retrieval-augmented generation (RAG) systems are primarily designed under the assumption that each query has a single correct answer. This overlooks common information-seeking scenarios with multiple plausible answers, where diversity is essential to avoid collapsing to a single dominant response, thereby constraining creativity and compromising fair and inclusive information access. Our analysis reveals a commonly overlooked limitation of standard RAG systems: they underutilize retrieved context diversity, such that increasing retrieval diversity alone does not yield diverse generations. To address this limitation, we propose DIVERGE, a plug-and-play agentic RAG framework with novel reflection-guided generation and memory-augmented iterative refinement, which promotes diverse viewpoints while preserving answer quality. We introduce novel metrics tailored to evaluating the diversity-quality trade-off in open-ended questions, and show that they correlate well with human judgments. We demonstrate that DIVERGE achieves the best diversity-quality trade-off compared to competitive baselines and previous state-of-the-art methods on the real-world Infinity-Chat dataset, substantially improving diversity while maintaining quality. More broadly, our results reveal a systematic limitation of current LLM-based systems for open-ended information-seeking and show that explicitly modeling diversity can mitigate it. Our code is available at: https://github.com/au-clan/Diverge

F-scheduler: illuminating the free-lunch design space for fast sampling of diffusion models

cs.AI updates on arXiv.org, arXiv:2510.02390v3, Announce Type: replace-cross

Abstract: Diffusion models are the state-of-the-art generative models for high-resolution images, but sampling from pretrained models is computationally expensive, motivating interest in fast sampling. Although Free-U Net is a training-free enhancement for improving image quality, we find it ineffective under few-step ($<10$) sampling. We analyze the discrete diffusion ODE and propose F-scheduler, a scheduler designed for ODE solvers with Free-U Net. Our proposed scheduler consists of a special time schedule that does not fully denoise the feature to enable the use of the KL-term in the $\beta$-VAE decoder, and the schedule of a proper inference stage for modifying the U-Net skip-connection via Free-U Net. Via information theory, we provide insights into how the better scheduled ODE solvers for the diffusion model can outperform the training-based diffusion distillation model. The newly proposed scheduler is compatible with most of the few-step ODE solvers and can sample a 1024 x 1024-resolution image in 6 steps and a 512 x 512-resolution image in 5 steps when it applies to DPM++ 2m and UniPC, with an FID result that outperforms the SOTA distillation models and the 20-step DPM++ 2m solver, respectively. Codebase: https://github.com/TheLovesOfLadyPurple/F-scheduler

TransportAgents: a multi-agents LLM framework for traffic accident severity prediction

cs.AI updates on arXiv.org, arXiv:2601.15519v2, Announce Type: replace

Abstract: Accurate prediction of traffic crash severity is critical for improving emergency response and public safety planning. Although recent large language models (LLMs) exhibit strong reasoning capabilities, their single-agent architectures often struggle with heterogeneous, domain-specific crash data and tend to generate biased or unstable predictions. To address these limitations, this paper proposes TransportAgents, a hybrid multi-agent framework that integrates category-specific LLM reasoning with a multilayer perceptron (MLP) integration module. Each specialized agent focuses on a particular subset of traffic information, such as demographics, environmental context, or incident details, to produce intermediate severity assessments that are subsequently fused into a unified prediction. Extensive experiments on two complementary U.S. datasets, the Consumer Product Safety Risk Management System (CPSRMS) and the National Electronic Injury Surveillance System (NEISS), demonstrate that TransportAgents consistently outperforms both traditional machine learning and advanced LLM-based baselines. Across three representative backbones, including closed-source models such as GPT-3.5 and GPT-4o, as well as open-source models such as LLaMA-3.3, the framework exhibits strong robustness, scalability, and cross-dataset generalizability. A supplementary distributional analysis further shows that TransportAgents produces more balanced and well-calibrated severity predictions than standard single-agent LLM approaches, highlighting its interpretability and reliability for safety-critical decision support applications.
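The fusion step described in this abstract (per-category assessments combined by an MLP into one prediction) can be pictured with a small sketch. The abstract does not say what format the agents' intermediate assessments take or how the MLP is sized, so everything here is an assumption for illustration: the score values, the three severity labels, the function name `mlp_fuse`, and the hand-set weights are all hypothetical, and a real module would learn its weights from data.

```python
import math

# Hypothetical severity scores in [0, 1], standing in for the intermediate
# assessments that category-specific agents might produce (assumed format).
agent_scores = {"demographics": 0.2, "environment": 0.7, "incident": 0.9}
labels = ["minor", "moderate", "severe"]  # assumed label set

def mlp_fuse(scores, w1, b1, w2, b2):
    """Fuse agent scores with a one-hidden-layer MLP; return class probabilities."""
    # Hidden layer: affine map followed by ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, scores)) + b)
              for row, b in zip(w1, b1)]
    # Output layer: affine map to one logit per severity class.
    logits = [sum(w * h for w, h in zip(row, hidden)) + b
              for row, b in zip(w2, b2)]
    # Numerically stable softmax over the logits.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hand-set illustrative weights (not learned, not from the paper).
w1 = [[1.0, 1.0, 1.0], [-1.0, 0.5, 1.0]]
b1 = [0.0, 0.0]
w2 = [[-1.0, 0.0], [0.0, 0.0], [1.0, 0.5]]
b2 = [1.0, 0.5, 0.0]

probs = mlp_fuse(list(agent_scores.values()), w1, b1, w2, b2)
prediction = labels[probs.index(max(probs))]
print(prediction, probs)
```

The point of the sketch is only the shape of the pipeline: several narrow assessments go in, one probability distribution over severity classes comes out.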

SayNext-Bench: Why Do LLMs Struggle with Next-Utterance Prediction?

cs.AI updates on arXiv.org, arXiv:2602.00327v1, Announce Type: new

Abstract: We explore the use of large language models (LLMs) for next-utterance prediction in human dialogue. Despite recent advances in LLMs demonstrating their ability to engage in natural conversations with users, we show that even leading models surprisingly struggle to predict a human speaker’s next utterance. Instead, humans can readily anticipate forthcoming utterances based on multimodal cues, such as gestures, gaze, and emotional tone, from the context. To systematically examine whether LLMs can reproduce this ability, we propose SayNext-Bench, a benchmark that evaluates LLMs and Multimodal LLMs (MLLMs) on anticipating context-conditioned responses from multimodal cues spanning a variety of real-world scenarios. To support this benchmark, we build SayNext-PC, a novel large-scale dataset containing dialogues with rich multimodal cues. Building on this, we further develop a dual-route prediction MLLM, SayNext-Chat, that incorporates cognitively inspired design to emulate predictive processing in conversation. Experimental results demonstrate that our model outperforms state-of-the-art MLLMs in terms of lexical overlap, semantic similarity, and emotion consistency. Our results prove the feasibility of next-utterance prediction with LLMs from multimodal cues and emphasize the (i) indispensable role of multimodal cues and (ii) actively predictive processing as the foundation of natural human interaction, which is missing in current MLLMs. We hope that this exploration offers a new research entry toward more human-like, context-sensitive AI interaction for human-centered AI. Our benchmark and model can be accessed at https://saynext.github.io/.

DuFFin: A Dual-Level Fingerprinting Framework for LLMs IP Protection

cs.AI updates on arXiv.org, arXiv:2505.16530v2, Announce Type: replace-cross

Abstract: Large language models (LLMs) are considered valuable Intellectual Properties (IP) for legitimate owners due to the enormous computational cost of training. It is crucial to protect the IP of LLMs from malicious stealing or unauthorized deployment. Despite existing efforts in watermarking and fingerprinting LLMs, these methods either impact the text generation process or are limited in white-box access to the suspect model, making them impractical. Hence, we propose DuFFin, a novel $\textbf{Du}$al-Level $\textbf{Fin}$gerprinting $\textbf{F}$ramework for black-box setting ownership verification. DuFFin extracts the trigger pattern and the knowledge-level fingerprints to identify the source of a suspect model. We conduct experiments on a variety of models collected from the open-source website, including four popular base models as protected LLMs and their fine-tuning, quantization, and safety alignment versions, which are released by large companies, start-ups, and individual users. Results show that our method can accurately verify the copyright of the base protected LLM on their model variants, achieving the IP-ROC metric greater than 0.95. Our code is available at https://github.com/yuliangyan0807/llm-fingerprint.

Artificial Intelligence and Symmetries: Learning, Encoding, and Discovering Structure in Physical Data

cs.AI updates on arXiv.org, arXiv:2602.02351v1, Announce Type: cross

Abstract: Symmetries play a central role in physics, organizing dynamics, constraining interactions, and determining the effective number of physical degrees of freedom. In parallel, modern artificial intelligence methods have demonstrated a remarkable ability to extract low-dimensional structure from high-dimensional data through representation learning. This review examines the interplay between these two perspectives, focusing on the extent to which symmetry-induced constraints can be identified, encoded, or diagnosed using machine learning techniques.

Rather than emphasizing architectures that enforce known symmetries by construction, we concentrate on data-driven approaches and latent representation learning, with particular attention to variational autoencoders. We discuss how symmetries and conservation laws reduce the intrinsic dimensionality of physical datasets, and how this reduction may manifest itself through self-organization of latent spaces in generative models trained to balance reconstruction and compression. We review recent results, including case studies from simple geometric systems and particle physics processes, and analyze the theoretical and practical limitations of inferring symmetry structure without explicit inductive bias.

PPoGA: Predictive Plan-on-Graph with Action for Knowledge Graph Question Answering

cs.AI updates on arXiv.org, arXiv:2602.00007v1, Announce Type: cross

Abstract: Large Language Models (LLMs) augmented with Knowledge Graphs (KGs) have advanced complex question answering, yet they often remain susceptible to failure when their initial high-level reasoning plan is flawed. This limitation, analogous to cognitive functional fixedness, prevents agents from restructuring their approach, leading them to pursue unworkable solutions. To address this, we propose PPoGA (Predictive Plan-on-Graph with Action), a novel KGQA framework inspired by human cognitive control and problem-solving. PPoGA incorporates a Planner-Executor architecture to separate high-level strategy from low-level execution and leverages a Predictive Processing mechanism to anticipate outcomes. The core innovation of our work is a self-correction mechanism that empowers the agent to perform not only Path Correction for local execution errors but also Plan Correction by identifying, discarding, and reformulating the entire plan when it proves ineffective. We conduct extensive experiments on three challenging multi-hop KGQA benchmarks: GrailQA, CWQ, and WebQSP. The results demonstrate that PPoGA achieves state-of-the-art performance, significantly outperforming existing methods. Our work highlights the critical importance of metacognitive abilities like problem restructuring for building more robust and flexible AI reasoning systems.

LLMs as High-Dimensional Nonlinear Autoregressive Models with Attention: Training, Alignment and Inference

cs.AI updates on arXiv.org, arXiv:2602.00426v1, Announce Type: cross

Abstract: Large language models (LLMs) based on transformer architectures are typically described through collections of architectural components and training procedures, obscuring their underlying computational structure. This review article provides a concise mathematical reference for researchers seeking an explicit, equation-level description of LLM training, alignment, and generation. We formulate LLMs as high-dimensional nonlinear autoregressive models with attention-based dependencies. The framework encompasses pretraining via next-token prediction, alignment methods such as reinforcement learning from human feedback (RLHF), direct preference optimization (DPO), rejection sampling fine-tuning (RSFT), and reinforcement learning from verifiable rewards (RLVR), as well as autoregressive generation during inference. Self-attention emerges naturally as a repeated bilinear–softmax–linear composition, yielding highly expressive sequence models. This formulation enables principled analysis of alignment-induced behaviors (including sycophancy), inference-time phenomena (such as hallucination, in-context learning, chain-of-thought prompting, and retrieval-augmented generation), and extensions like continual learning, while serving as a concise reference for interpretation and further theoretical development.
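The phrase "bilinear–softmax–linear composition" in this abstract can be made concrete with standard single-head scaled dot-product attention. The sketch below is a generic textbook formulation in plain Python, not the paper's notation: the function names, the causal mask, and the identity projection matrices in the usage lines are assumptions chosen to keep the example small.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head causal self-attention on a sequence x (T rows, d columns)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Bilinear step: scores[t][s] = q_t . k_s, bilinear in the inputs (x_t, x_s).
    scores = matmul(q, [list(col) for col in zip(*k)])
    weights = []
    for t, row in enumerate(scores):
        # Causal mask: position t only attends to positions s <= t.
        visible = [s / math.sqrt(d_k) for s in row[: t + 1]]
        # Softmax step: turn the visible scores into weights that sum to 1.
        weights.append(softmax(visible) + [0.0] * (len(row) - t - 1))
    # Linear step: each output row is a weighted sum of value vectors.
    return weights, matmul(weights, v)

# Usage with a 3-token sequence and identity projections (illustrative values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
weights, out = causal_self_attention(x, identity, identity, identity)
print(weights[0], out[0])
```

Stacking this block, with learned projections and multiple heads, gives the repeated composition the abstract refers to; the causal mask is what makes the resulting model autoregressive.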
