Learn from Global Correlations: Enhancing Evolutionary Algorithm via Spectral GNN

cs.AI updates on arXiv.org arXiv:2412.17629v5 Announce Type: replace-cross

Abstract: Evolutionary algorithms (EAs) simulate natural selection but have two main limitations: (1) they rarely update individuals based on global correlations, limiting comprehensive learning; (2) they struggle with balancing exploration and exploitation, where excessive exploitation causes premature convergence, and excessive exploration slows down the search. Moreover, EAs often depend on manual parameter settings, which can disrupt the exploration-exploitation balance. To address these issues, we propose Graph Neural Evolution (GNE), a novel EA framework. GNE represents the population as a graph, where nodes represent individuals, and edges capture their relationships, enabling global information usage. GNE utilizes spectral graph neural networks (GNNs) to decompose evolutionary signals into frequency components, applying a filtering function to fuse these components. High-frequency components capture diverse global information, while low-frequency ones capture more consistent information. This explicit frequency filtering strategy directly controls global-scale features through frequency components, overcoming the limitations of manual parameter settings and making the exploration-exploitation control more interpretable and manageable. Tests on nine benchmark functions (e.g., Sphere, Rastrigin, Rosenbrock) show that GNE outperforms classical (GA, DE, CMA-ES) and advanced algorithms (SDAES, RL-SHADE) under various conditions, including noise-corrupted and optimal solution deviation scenarios. GNE achieves solutions several orders of magnitude better (e.g., 3.07e-20 mean on Sphere vs. 1.51e-07).
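
The frequency-filtering idea in the abstract can be illustrated with a small sketch. This is not the authors' GNE implementation: it assumes a Gaussian-kernel similarity graph, a symmetric normalized Laplacian, and a polynomial filter in place of an explicit eigendecomposition (a polynomial in the Laplacian applies the same polynomial to its eigenvalues). The names `normalized_laplacian`, `polynomial_filter`, `sigma`, and `thetas` are hypothetical.

```python
import math

def normalized_laplacian(pop, sigma=1.0):
    """Build L = I - D^{-1/2} W D^{-1/2} from a Gaussian-kernel graph.

    pop is a list of individuals, each a list of floats. The kernel and
    sigma are illustrative assumptions, not taken from the paper.
    """
    n = len(pop)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2 for a, b in zip(pop[i], pop[j]))
                W[i][j] = math.exp(-d2 / (2 * sigma ** 2))
    deg = [sum(row) for row in W]
    return [
        [(1.0 if i == j else 0.0) - W[i][j] / math.sqrt(deg[i] * deg[j])
         for j in range(n)]
        for i in range(n)
    ]

def matmul(A, X):
    """Plain-Python matrix product of A (n x n) and X (n x d)."""
    return [[sum(a * x for a, x in zip(row, col)) for col in zip(*X)]
            for row in A]

def polynomial_filter(pop, thetas, sigma=1.0):
    """Return sum_k thetas[k] * L^k * pop.

    In the spectral domain this scales each frequency component with
    eigenvalue lam by g(lam) = sum_k thetas[k] * lam**k.
    """
    L = normalized_laplacian(pop, sigma)
    term = [row[:] for row in pop]            # L^0 * pop
    out = [[0.0] * len(pop[0]) for _ in pop]
    for theta in thetas:
        out = [[o + theta * t for o, t in zip(orow, trow)]
               for orow, trow in zip(out, term)]
        term = matmul(L, term)                # next power of L
    return out
```

With two individuals `[[0.0], [2.0]]`, the low-pass choice `thetas=[1.0, -0.5]` (that is, g(λ) = 1 − λ/2) moves both individuals to roughly their mean, while the high-pass choice `thetas=[0.0, 0.5]` returns roughly their opposing deviations. That mirrors the abstract's reading of low frequencies as consistent information and high frequencies as diverse information. The sketch assumes individuals are close enough, relative to `sigma`, that no node degree underflows to zero.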


The Core in Max-Loss Non-Centroid Clustering Can Be Empty

cs.AI updates on arXiv.org arXiv:2511.19107v1 Announce Type: cross

Abstract: We study core stability in non-centroid clustering under the max-loss objective, where each agent’s loss is the maximum distance to other members of their cluster. We prove that for all $k\geq 3$ there exist metric instances with $n\ge 9$ agents, with $n$ divisible by $k$, for which no clustering lies in the $\alpha$-core for any $\alpha<2^{\frac{1}{5}}\sim 1.148$. The bound is tight for our construction. Using a computer-aided proof, we also identify a two-dimensional Euclidean point set whose associated lower bound is slightly smaller than that of our general construction. This is, to our knowledge, the first impossibility result showing that the core can be empty in non-centroid clustering under the max-loss objective.
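
To make the max-loss objective and the $\alpha$-core concrete, here is a brute-force sketch. It assumes the definition commonly used in the proportional-clustering literature, which the abstract does not spell out: a clustering is in the $\alpha$-core if no coalition of at least $\lceil n/k \rceil$ agents exists in which every member's loss, multiplied by $\alpha$, is strictly below that member's current loss. The names `max_loss` and `blocking_coalition` are hypothetical, and the search is exponential, so it only suits tiny instances.

```python
from itertools import combinations
from math import ceil

def max_loss(i, cluster, dist):
    """Max-loss of agent i: the largest distance to another cluster member."""
    return max((dist[i][j] for j in cluster if j != i), default=0.0)

def blocking_coalition(clustering, dist, k, alpha=1.0):
    """Return a coalition that blocks the clustering, or None if none exists.

    clustering is a list of tuples of agent indices, and dist is a
    symmetric n x n distance matrix. The minimum coalition size
    ceil(n / k) is an assumption taken from the usual core definition.
    """
    n = len(dist)
    cluster_of = {i: c for c in clustering for i in c}
    current = {i: max_loss(i, cluster_of[i], dist) for i in range(n)}
    for size in range(ceil(n / k), n + 1):
        for S in combinations(range(n), size):
            # Every member must improve by more than a factor of alpha.
            if all(alpha * max_loss(i, S, dist) < current[i] for i in S):
                return S
    return None

# Four agents on a line at positions 0, 1, 10, 11.
points = [0.0, 1.0, 10.0, 11.0]
dist = [[abs(a - b) for b in points] for a in points]
```

On this toy instance with `k=2`, the clustering `[(0, 2), (1, 3)]` is blocked by agents 0 and 1, whereas `[(0, 1), (2, 3)]` has no blocking coalition. The paper's result is that for some metric instances every clustering is blocked for each $\alpha$ below the stated bound.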


Predicting partially observable dynamical systems via diffusion models with a multiscale inference scheme

cs.AI updates on arXiv.org arXiv:2511.19390v1 Announce Type: cross

Abstract: Conditional diffusion models provide a natural framework for probabilistic prediction of dynamical systems and have been successfully applied to fluid dynamics and weather prediction. However, in many settings, the available information at a given time represents only a small fraction of what is needed to predict future states, either due to measurement uncertainty or because only a small fraction of the state can be observed. This is true for example in solar physics, where we can observe the Sun’s surface and atmosphere, but its evolution is driven by internal processes for which we lack direct measurements. In this paper, we tackle the probabilistic prediction of partially observable, long-memory dynamical systems, with applications to solar dynamics and the evolution of active regions. We show that standard inference schemes, such as autoregressive rollouts, fail to capture long-range dependencies in the data, largely because they do not integrate past information effectively. To overcome this, we propose a multiscale inference scheme for diffusion models, tailored to physical processes. Our method generates trajectories that are temporally fine-grained near the present and coarser as we move farther away, which enables capturing long-range temporal dependencies without increasing computational cost. When integrated into a diffusion model, we show that our inference scheme significantly reduces the bias of the predicted distributions and improves rollout stability.
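
The idea of a trajectory that is fine-grained near the present and coarser farther away can be sketched with a simple time grid. The abstract does not give the spacing rule, so the geometric growth below is an assumption, and `multiscale_offsets`, `num_frames`, and `growth` are hypothetical names.

```python
def multiscale_offsets(num_frames, growth=2):
    """Return time offsets whose spacing grows geometrically.

    The first step has length 1 and each later step is `growth` times
    the previous one, so the grid is dense near the present (offset 0)
    and sparse far from it.
    """
    offsets, step, t = [], 1, 0
    for _ in range(num_frames):
        t += step
        offsets.append(t)
        step *= growth
    return offsets

uniform = list(range(1, 6))          # 5 frames, horizon of 5 steps
multiscale = multiscale_offsets(5)   # 5 frames, horizon of 31 steps
```

Both grids contain five frames, so a model that generates one state per frame does the same amount of work, but the multiscale grid spans a much longer horizon. This is one way to read the abstract's claim of capturing long-range dependencies without increasing computational cost.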


Hybrid Neuro-Symbolic Models for Ethical AI in Risk-Sensitive Domains

cs.AI updates on arXiv.org arXiv:2511.17644v1 Announce Type: new

Abstract: Artificial intelligence deployed in risk-sensitive domains such as healthcare, finance, and security must not only achieve predictive accuracy but also ensure transparency, ethical alignment, and compliance with regulatory expectations. Hybrid neuro-symbolic models combine the pattern-recognition strengths of neural networks with the interpretability and logical rigor of symbolic reasoning, making them well-suited for these contexts. This paper surveys hybrid architectures, ethical design considerations, and deployment patterns that balance accuracy with accountability. We highlight techniques for integrating knowledge graphs with deep inference, embedding fairness-aware rules, and generating human-readable explanations. Through case studies in healthcare decision support, financial risk management, and autonomous infrastructure, we show how hybrid systems can deliver reliable and auditable AI. Finally, we outline evaluation protocols and future directions for scaling neuro-symbolic frameworks in complex, high-stakes environments.


Live-SWE-agent: Can Software Engineering Agents Self-Evolve on the Fly?

cs.AI updates on arXiv.org arXiv:2511.13646v3 Announce Type: replace-cross

Abstract: Large Language Models (LLMs) are reshaping almost all industries, including software engineering. In recent years, a number of LLM agents have been proposed to solve real-world software problems. Such software agents are typically equipped with a suite of coding tools and can autonomously decide the next actions to form complete trajectories to solve end-to-end software tasks. While promising, they typically require dedicated design and may still be suboptimal, since it can be extremely challenging and costly to exhaust the entire agent scaffold design space. Recognizing that software agents are inherently software themselves that can be further refined/modified, researchers have proposed a number of self-improving software agents recently, including the Darwin-Gödel Machine (DGM). Meanwhile, such self-improving agents require costly offline training on specific benchmarks and may not generalize well across different LLMs or benchmarks. In this paper, we propose Live-SWE-agent, the first live software agent that can autonomously and continuously evolve itself on-the-fly during runtime when solving real-world software problems. More specifically, Live-SWE-agent starts with the most basic agent scaffold with only access to bash tools (e.g., mini-SWE-agent), and autonomously evolves its own scaffold implementation while solving real-world software problems. Our evaluation on the widely studied SWE-bench Verified benchmark shows that Live-SWE-agent can achieve an impressive solve rate of 77.4% without test-time scaling, outperforming all existing software agents, including the best proprietary solution. Moreover, Live-SWE-agent outperforms state-of-the-art manually crafted software agents on the recent SWE-Bench Pro benchmark, achieving the best-known solve rate of 45.8%.


Thousands of credentials, authentication keys, and configuration data impacting organizations in sensitive sectors have been sitting in publicly accessible JSON snippets submitted to the JSONFormatter and CodeBeautify online tools that format and structure code. […]

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

2026 will mark a pivotal shift in cybersecurity. Threat actors are moving from experimenting with AI to making it their primary weapon, using it to scale attacks, automate reconnaissance, and craft hyper-realistic social engineering campaigns.

The Storm on the Horizon

Global world instability, coupled with rapid technological advancement, will force security teams to adapt not […]

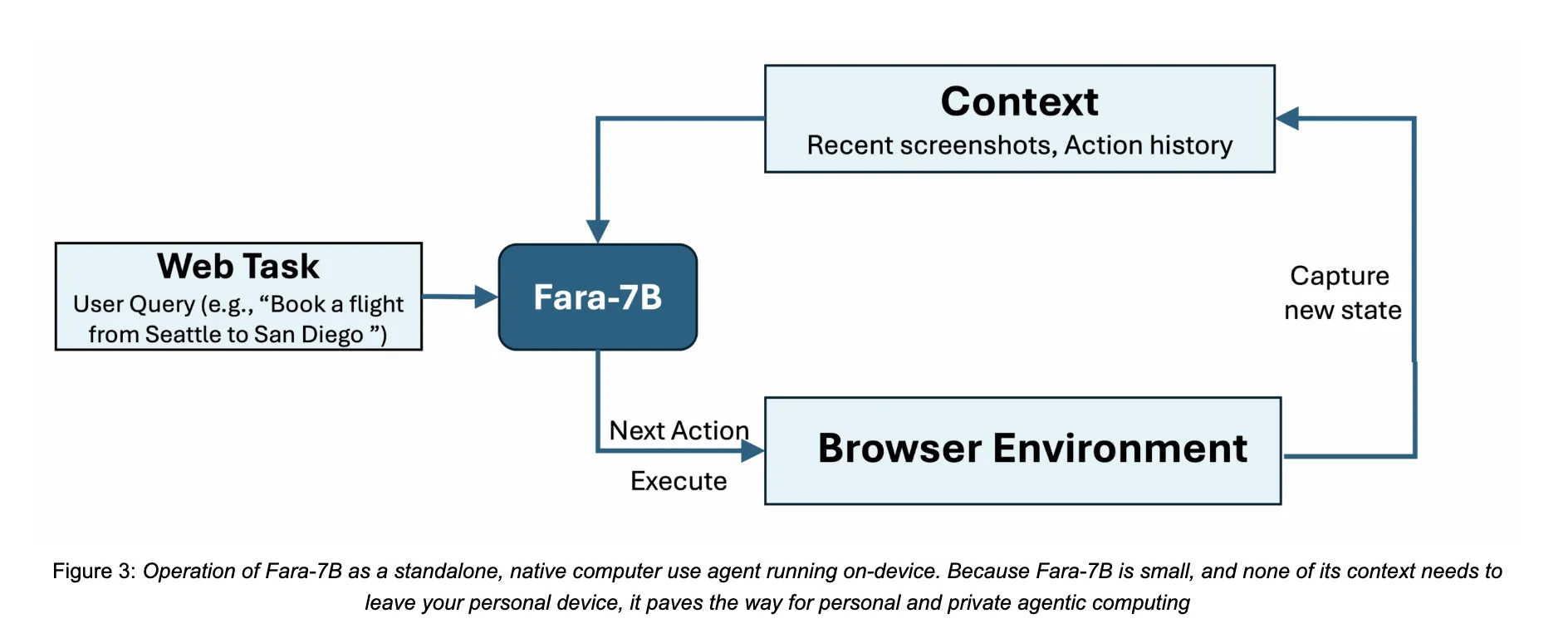

Microsoft AI Releases Fara-7B: An Efficient Agentic Model for Computer Use

MarkTechPost

How do we safely let an AI agent handle real web tasks like booking, searching, and form filling directly on our own devices without sending everything to the cloud? Microsoft Research has released Fara-7B, a 7 billion parameter agentic small language model designed specifically for computer use. It is an open weight Computer Use Agent

The post Microsoft AI Releases Fara-7B: An Efficient Agentic Model for Computer Use appeared first on MarkTechPost.


Expanding data residency access to business customers worldwide

OpenAI News

OpenAI expands data residency for ChatGPT Enterprise, ChatGPT Edu, and the API Platform, enabling eligible customers to store data at rest in-region.
