Although AI may supplant some young analysts, one Gen Z cybersecurity specialist sees it teaching those willing to learn and removing drudge work.

How to Implement Three Use Cases for the New Calendar-Based Time Intelligence (Towards Data Science)

Starting with the September 2025 release of Power BI, Microsoft introduced the new Calendar-based Time Intelligence feature. Let’s see what can be done by implementing three use cases. The future looks very interesting with this new feature.

The post How to Implement Three Use Cases for the New Calendar-Based Time Intelligence appeared first on Towards Data Science.

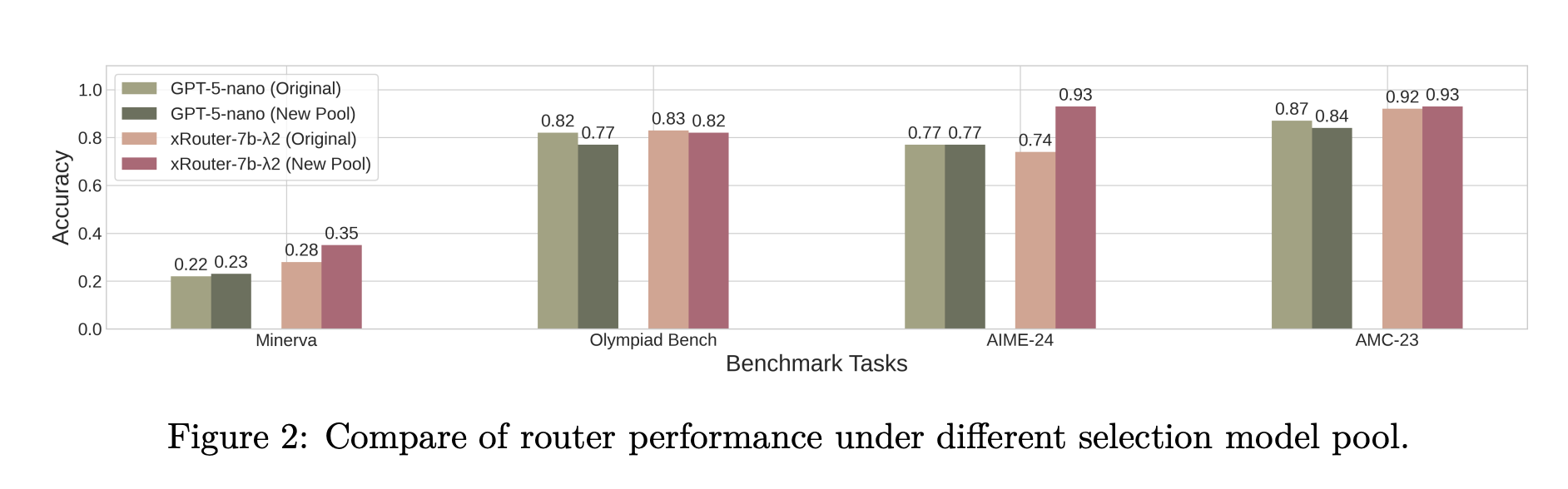

Salesforce AI Research Introduces xRouter: A Reinforcement Learning Router for Cost Aware LLM Orchestration (MarkTechPost)

When your application can call many different LLMs with very different prices and capabilities, who should decide which one answers each request? The Salesforce AI Research team introduces ‘xRouter’, a tool-calling–based routing system that targets this gap with a reinforcement-learning-based router that learns when to answer locally and when to call external models, while […]

The post Salesforce AI Research Introduces xRouter: A Reinforcement Learning Router for Cost Aware LLM Orchestration appeared first on MarkTechPost.

Risk management company Crisis24 has confirmed its OnSolve CodeRED platform suffered a cyberattack that disrupted emergency notification systems used by state and local governments, police departments, and fire agencies across the United States. […]

Train custom computer vision defect detection model using Amazon SageMaker (Artificial Intelligence)

In this post, we demonstrate how to migrate computer vision workloads from Amazon Lookout for Vision to Amazon SageMaker AI by training custom defect detection models using pre-trained models available on AWS Marketplace. We provide step-by-step guidance on labeling datasets with SageMaker Ground Truth, training models with flexible hyperparameter configurations, and deploying them for real-time or batch inference, giving you greater control and flexibility for automated quality inspection use cases.

MIT scientists debut a generative AI model that could create molecules addressing hard-to-treat diseases (MIT News – Machine learning)

BoltzGen generates protein binders for any biological target from scratch, expanding AI’s reach from understanding biology toward engineering it.

Practical implementation considerations to close the AI value gap (Artificial Intelligence)

The AWS Customer Success Center of Excellence (CS COE) helps customers get tangible value from their AWS investments. We’ve seen a pattern: customers who build AI strategies that address people, process, and technology together succeed more often. In this post, we share practical considerations that can help close the AI value gap.

Why CrewAI’s Manager-Worker Architecture Fails — and How to Fix It (Towards Data Science)

A real-world analysis of why CrewAI’s hierarchical orchestration misfires, and a practical fix you can implement today.

The post Why CrewAI’s Manager-Worker Architecture Fails — and How to Fix It appeared first on Towards Data Science.

Our favourite Black Friday deal to Learn SQL, AI, Python, and become a certified data analyst! (KDnuggets)

Don’t Miss DataCamp’s Black Friday Deal: Nov 12-Dec 4

Warner Bros. Discovery achieves 60% cost savings and faster ML inference with AWS Graviton (Artificial Intelligence)

Warner Bros. Discovery (WBD) is a leading global media and entertainment company that creates and distributes the world’s most differentiated and complete portfolio of content and brands across television, film, and streaming. In this post, we describe the scale of our offerings, the artificial intelligence (AI)/machine learning (ML) inference infrastructure requirements for our real-time recommender systems, and how we used AWS Graviton-based Amazon SageMaker AI instances for our ML inference workloads to achieve 60% cost savings and 7% to 60% latency improvements across different models.
