A Coding Guide to Design an Agentic AI System Using a Control-Plane Architecture for Safe, Modular, and Scalable Tool-Driven Reasoning Workflows (MarkTechPost)

In this tutorial, we build an advanced Agentic AI using the control-plane design pattern, and we walk through each component step by step as we implement it. We treat the control plane as the central orchestrator that coordinates tools, manages safety rules, and structures the reasoning loop. Also, we set up a miniature retrieval system, […]

The post A Coding Guide to Design an Agentic AI System Using a Control-Plane Architecture for Safe, Modular, and Scalable Tool-Driven Reasoning Workflows appeared first on MarkTechPost.
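The teaser above only names the control plane's responsibilities. As a rough illustration, and not the tutorial's actual code, here is a minimal Python sketch assuming a hypothetical `ControlPlane` class that keeps a tool registry, routes every call through safety rules, logs each step, and executes a bounded plan. The `retrieve`, `calculator`, and `no_shell` helpers and the toy `DOCS` corpus are illustrative assumptions; the retriever is a simple word-overlap ranker standing in for a "miniature retrieval system".

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tool signature: takes a string argument, returns a string result.
Tool = Callable[[str], str]
# Hypothetical safety rule: returns None if the call is allowed, else a reason string.
SafetyRule = Callable[[str, str], Optional[str]]


@dataclass
class ControlPlane:
    """Central orchestrator: owns the tool registry, safety rules, and an audit log."""
    tools: Dict[str, Tool] = field(default_factory=dict)
    rules: List[SafetyRule] = field(default_factory=list)
    log: List[Tuple[str, str, str]] = field(default_factory=list)

    def register(self, name: str, tool: Tool) -> None:
        self.tools[name] = tool

    def dispatch(self, name: str, arg: str) -> str:
        # Every tool call passes through this single gate, so safety checks live in one place.
        if name not in self.tools:
            result = f"error: unknown tool {name!r}"
        else:
            reasons = [r for r in (rule(name, arg) for rule in self.rules) if r]
            result = f"blocked: {reasons[0]}" if reasons else self.tools[name](arg)
        self.log.append((name, arg, result))
        return result

    def run(self, plan: List[Tuple[str, str]], max_steps: int = 5) -> List[str]:
        # Bounded loop: execute planned steps in order, never more than max_steps.
        return [self.dispatch(name, arg) for name, arg in plan[:max_steps]]


# Toy corpus for the illustrative retriever (assumption, not from the tutorial).
DOCS = [
    "The control plane routes every tool call through safety checks.",
    "Retrieval returns the document with the most overlapping words.",
    "Tools are registered by name and invoked by the orchestrator.",
]


def retrieve(query: str) -> str:
    # Miniature retrieval: return the document sharing the most lowercase words with the query.
    q = set(query.lower().split())
    return max(DOCS, key=lambda d: len(q & set(d.lower().split())))


def calculator(expr: str) -> str:
    # Handles only "a + b" to avoid eval(); a real tool would parse input properly.
    a, b = expr.split("+")
    return str(int(a) + int(b))


def no_shell(name: str, arg: str) -> Optional[str]:
    # Hypothetical safety rule: reject arguments containing shell metacharacters.
    return "shell-like input" if any(c in arg for c in ";|&`$") else None


cp = ControlPlane()
cp.register("retrieve", retrieve)
cp.register("calc", calculator)
cp.rules.append(no_shell)
results = cp.run([
    ("retrieve", "how does the control plane check tool calls"),
    ("calc", "2 + 3"),
    ("calc", "1; rm -rf"),
    ("search_web", "x"),
])
for r in results:
    print(r)
```

Keeping registration, rule checks, and logging inside one `dispatch` method is the point of the pattern in this sketch: tools stay unaware of safety policy, and adding a rule changes behavior for every tool at once.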

How to Scale Your LLM usage (Towards Data Science)

Learn how to increase your LLM usage to boost productivity.

The post How to Scale Your LLM usage appeared first on Towards Data Science.

Scientists uncover the brain’s hidden learning blocks (ScienceDaily, Artificial Intelligence News)

Princeton researchers found that the brain excels at learning because it reuses modular “cognitive blocks” across many tasks. Monkeys switching between visual categorization challenges revealed that the prefrontal cortex assembles these blocks like Legos to create new behaviors. This flexibility explains why humans learn quickly while AI models often forget old skills. The insights may help build better AI and new clinical treatments for impaired cognitive adaptability.

After scanning all 5.6 million public repositories on GitLab Cloud, a security engineer discovered more than 17,000 exposed secrets across over 2,800 unique domains. […]

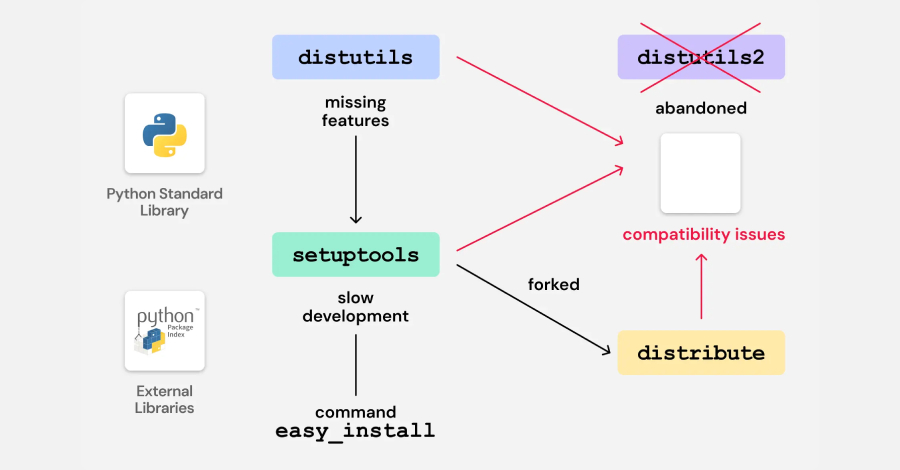

Cybersecurity researchers have discovered vulnerable code in legacy Python packages that could potentially pave the way for a supply chain compromise on the Python Package Index (PyPI) via a domain takeover attack. Software supply chain security company ReversingLabs said it found the “vulnerability” in bootstrap files provided by a build and deployment automation tool named […]

A 44-year-old man was sentenced to seven years and four months in prison for operating an “evil twin” WiFi network to steal the data of unsuspecting travelers at various airports across Australia. […]

The North Korean threat actors behind the Contagious Interview campaign have continued to flood the npm registry with 197 more malicious packages since last month. According to Socket, these packages have been downloaded over 31,000 times and are designed to deliver a variant of OtterCookie that brings together the features of BeaverTail and prior versions […]

The French Football Federation (FFF) disclosed a data breach on Friday after attackers used a compromised account to gain access to administrative management software used by football clubs. […]

Data Science in 2026: Is It Still Worth It? (Towards Data Science)

An honest view from a 10-year AI Engineer

The post Data Science in 2026: Is It Still Worth It? appeared first on Towards Data Science.

Getting Started with the Claude Agent SDK (KDnuggets)

Set up, build, and test agentic apps with Claude Code, powered by your locally installed Claude CLI and Claude Code subscription.