The Machine Learning and Deep Learning “Advent Calendar” Series: The Blueprint (Towards Data Science)

Opening the black box of ML models, step by step, directly in Excel

The post The Machine Learning and Deep Learning “Advent Calendar” Series: The Blueprint appeared first on Towards Data Science.

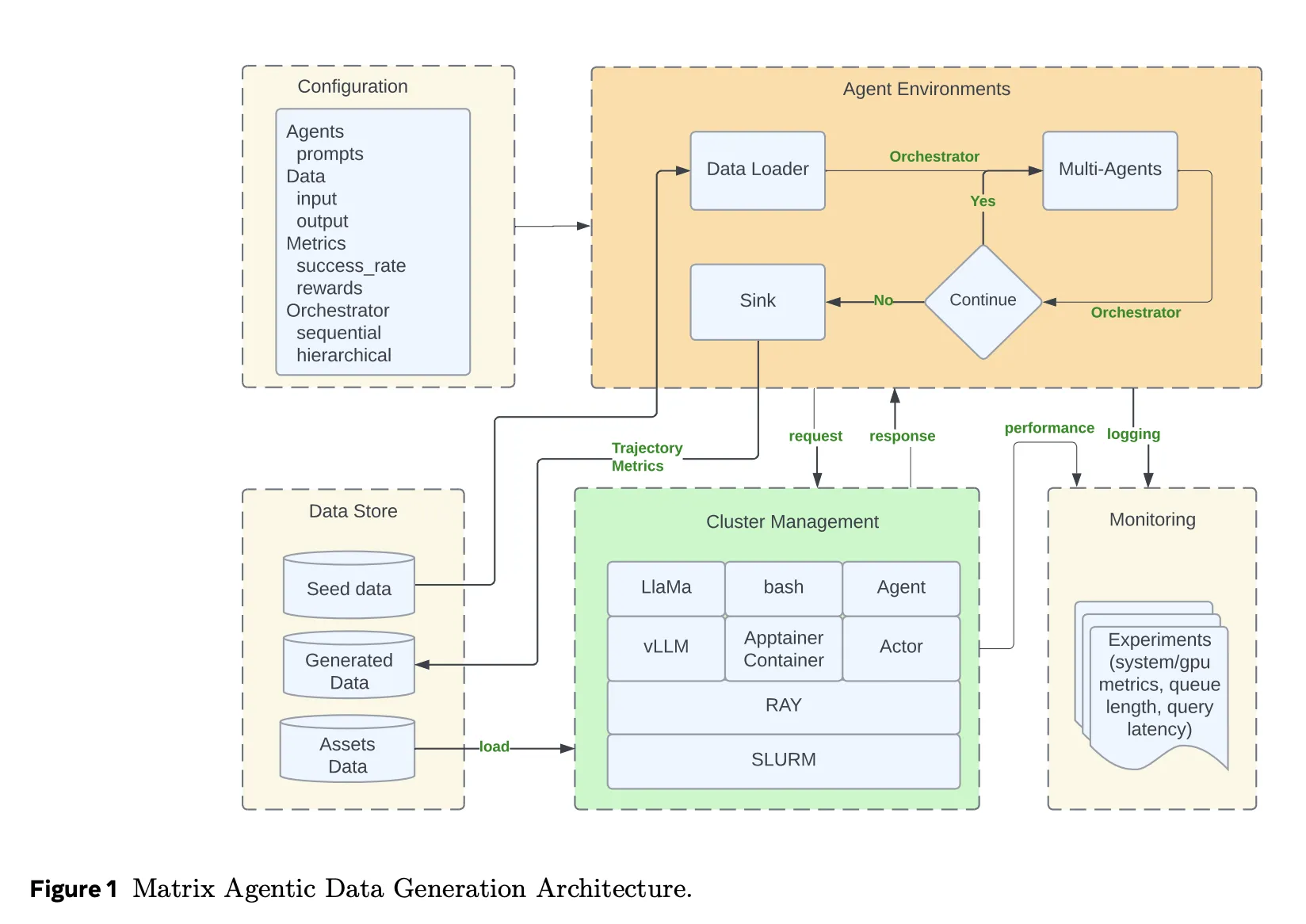

Meta AI Researchers Introduce Matrix: A Ray-Native, Decentralized Framework for Multi-Agent Synthetic Data Generation (MarkTechPost)

How do you keep synthetic data fresh and diverse for modern AI models without turning a single orchestration pipeline into the bottleneck? Meta AI researchers introduce Matrix, a decentralized framework in which both control flow and data flow are serialized into messages that move through distributed queues. As LLM training increasingly relies on synthetic conversations, tool traces […]

The post Meta AI Researchers Introduce Matrix: A Ray-Native, Decentralized Framework for Multi-Agent Synthetic Data Generation appeared first on MarkTechPost.

The Greedy Boruta Algorithm: Faster Feature Selection Without Sacrificing Recall (Towards Data Science)

A modification to the Boruta algorithm that dramatically reduces computation while maintaining high sensitivity

The post The Greedy Boruta Algorithm: Faster Feature Selection Without Sacrificing Recall appeared first on Towards Data Science.

The Best Proxy Providers for Large-Scale Scraping for 2026 (KDnuggets)

Robust proxies allow you to rotate identities, reach any region, and bypass sophisticated anti-bot systems, all while protecting your infrastructure from blocks and blacklisting.

The U.S. Cybersecurity and Infrastructure Security Agency (CISA) has updated its Known Exploited Vulnerabilities (KEV) catalog to include a security flaw impacting OpenPLC ScadaBR, citing evidence of active exploitation. The vulnerability in question is CVE-2021-26829 (CVSS score: 5.4), a cross-site scripting (XSS) flaw that affects Windows and Linux versions of the software via […]

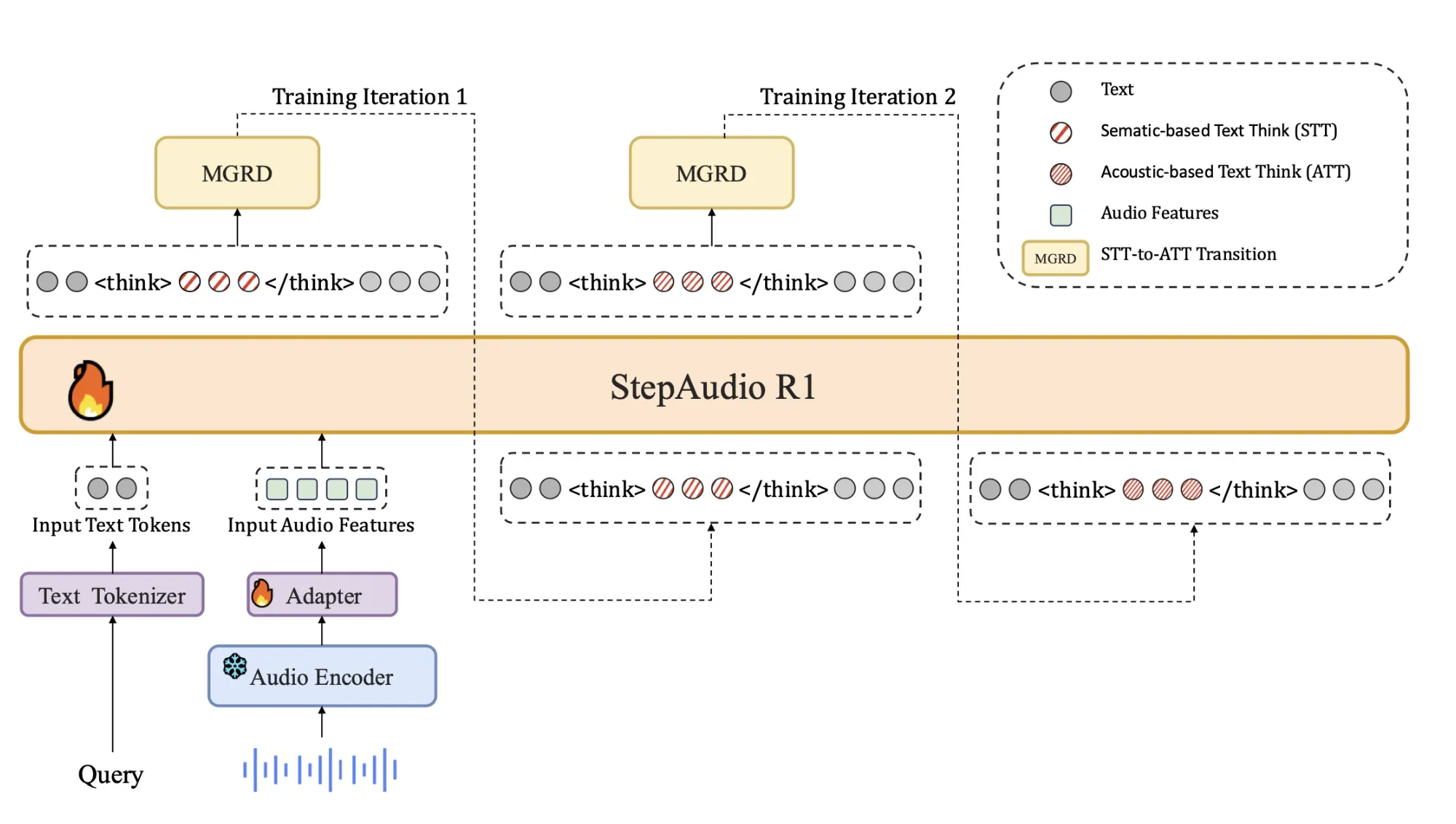

StepFun AI Releases Step-Audio-R1: A New Audio LLM that Finally Benefits from Test Time Compute Scaling (MarkTechPost)

Why do current audio AI models often perform worse when they generate longer reasoning instead of grounding their decisions in the actual sound? The StepFun research team has released Step-Audio-R1, a new audio LLM designed for test-time compute scaling. It addresses this failure mode by showing that the accuracy drop with chain of thought is not an […]

The post StepFun AI Releases Step-Audio-R1: A New Audio LLM that Finally Benefits from Test Time Compute Scaling appeared first on MarkTechPost.

Metric Deception: When Your Best KPIs Hide Your Worst Failures (Towards Data Science)

The most dangerous KPIs aren’t broken; they’re the ones trusted long after they’ve lost their meaning.

The post Metric Deception: When Your Best KPIs Hide Your Worst Failures appeared first on Towards Data Science.

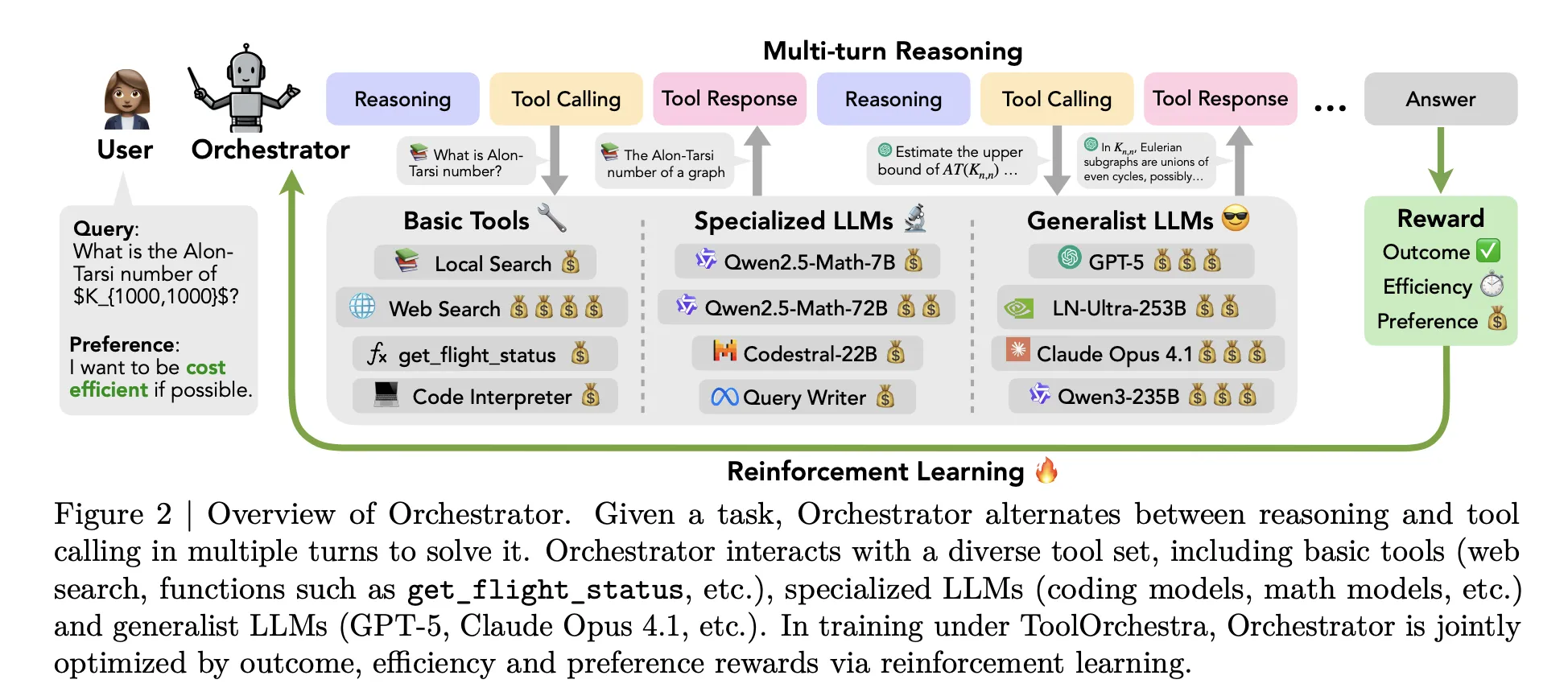

NVIDIA AI Releases Orchestrator-8B: A Reinforcement Learning Trained Controller for Efficient Tool and Model Selection (MarkTechPost)

How can an AI system learn to pick the right model or tool for each step of a task instead of always relying on one large model for everything? NVIDIA researchers release ToolOrchestra, a novel method for training a small language model to act as the orchestrator, the ‘brain’ of a heterogeneous tool-use agent. From […]

The post NVIDIA AI Releases Orchestrator-8B: A Reinforcement Learning Trained Controller for Efficient Tool and Model Selection appeared first on MarkTechPost.

Asahi Group Holdings, Japan’s largest beer producer, has finished its investigation into the September cyberattack and found that the incident impacted up to 1.9 million individuals. […]

OpenAI is now internally testing ‘ads’ inside ChatGPT that could redefine the web economy. […]