A Learning-based Control Methodology for Transitioning VTOL UAVs
cs.AI updates on arXiv.org
arXiv:2512.03548v1 Announce Type: cross

Abstract: Transition control poses a critical challenge in Vertical Take-Off and Landing Unmanned Aerial Vehicle (VTOL UAV) development because the tilting rotor mechanism shifts the center of gravity and thrust direction during transitions. Current control methods decouple altitude and position control, which leads to significant vibration and limits both the consideration of interactions and adaptability. In this study, we propose a novel coupled transition control methodology based on a reinforcement learning (RL) driven controller. In addition, in contrast to the conventional phase-transition approach, the ST3M method offers a new perspective by treating cruise mode as a special case of hover. We validate the feasibility of our method in simulation and real-world environments, demonstrating efficient controller development and migration while accurately controlling UAV position and attitude, with outstanding trajectory tracking and reduced vibrations during the transition process.

The promising potential of vision language models for the generation of textual weather forecasts
cs.AI updates on arXiv.org
arXiv:2512.03623v1 Announce Type: cross

Abstract: Despite the promising capability of multimodal foundation models, their application to the generation of meteorological products and services remains nascent. To accelerate aspiration and adoption, we explore the novel use of a vision language model for writing the iconic Shipping Forecast text directly from video-encoded gridded weather data. These early results demonstrate promising scalable technological opportunities for enhancing production efficiency and service innovation within the weather enterprise and beyond.

A human rights lawyer from Pakistan’s Balochistan province received a suspicious link on WhatsApp from an unknown number, marking the first time a civil society member in the country was targeted by Intellexa’s Predator spyware, Amnesty International said in a report. The link, the non-profit organization said, is a “Predator attack attempt based on the […]

Most MSPs and MSSPs know how to deliver effective security. The challenge is helping prospects understand why it matters in business terms. Too often, sales conversations stall because prospects are overwhelmed, skeptical, or tired of fear-based messaging. That’s why we created “Getting to Yes”: An Anti-Sales Guide for MSPs. This guide helps service providers transform […]

Multiple China-linked threat actors began exploiting the React2Shell vulnerability (CVE-2025-55182) affecting React and Next.js just hours after the max-severity issue was disclosed. […]

A command injection vulnerability in Array Networks AG Series secure access gateways has been exploited in the wild since August 2025, according to an alert issued by JPCERT/CC this week. The vulnerability, which does not have a CVE identifier, was addressed by the company on May 11, 2025. It’s rooted in Array’s DesktopDirect, a remote […]

Introducing OpenAI for Australia
OpenAI News

OpenAI is launching OpenAI for Australia to build sovereign AI infrastructure, upskill more than 1.5 million workers, and accelerate innovation across the country’s growing AI ecosystem.

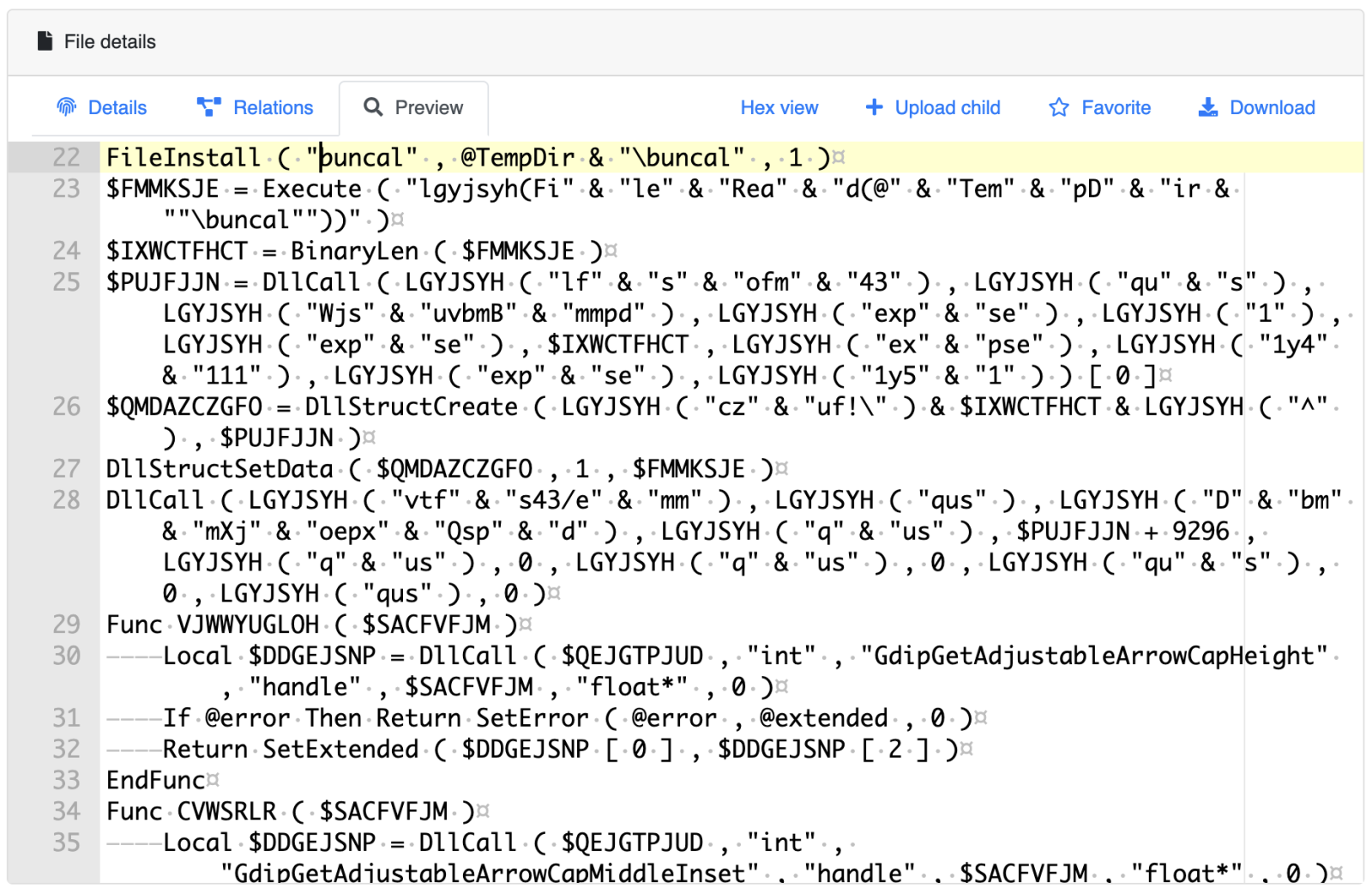

AutoIt3[1] is a powerful language that helps build nice applications for Windows environments, mainly to automate tasks. Although it looks pretty old, the latest version was released last September and it remains popular amongst developers, for good… or for bad! Malware written in AutoIt3 has existed since the late 2000s, when attackers realized […]

Remember when Apple put that U2 album in everyone’s music libraries? India wanted to do that to all of its citizens, but with a cybersecurity app. It wasn’t a good idea.

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.