The Best Web Scraping APIs for AI Models in 2026 (KDnuggets)

For powering next-generation AI models in 2026, Bright Data’s Web Scraper API delivers on all fronts: dynamic site support, anti-bot automation, structured output, and global reach.

Microsoft AI Releases VibeVoice-Realtime: A Lightweight Real‑Time Text-to-Speech Model Supporting Streaming Text Input and Robust Long-Form Speech Generation (MarkTechPost)

Microsoft has released VibeVoice-Realtime-0.5B, a real-time text-to-speech model that works with streaming text input and long-form speech output, aimed at agent-style applications and live data narration. The model can start producing audible speech in about 300 ms, which is critical when a language model is still generating the rest of […]

The post Microsoft AI Releases VibeVoice-Realtime: A Lightweight Real‑Time Text-to-Speech Model Supporting Streaming Text Input and Robust Long-Form Speech Generation appeared first on MarkTechPost.

Over 30 security vulnerabilities have been disclosed in various artificial intelligence (AI)-powered Integrated Development Environments (IDEs) that combine prompt injection primitives with legitimate features to achieve data exfiltration and remote code execution. The security shortcomings have been collectively named IDEsaster by security researcher Ari Marzouk (MaccariTA). They affect popular […]

A campaign has been observed targeting Palo Alto GlobalProtect portals with login attempts and launching scanning activity against SonicWall SonicOS API endpoints. […]

Over 77,000 Internet-exposed IP addresses are vulnerable to the critical React2Shell remote code execution flaw (CVE-2025-55182), with researchers now confirming that attackers have already compromised over 30 organizations across multiple sectors. […]

Reading Research Papers in the Age of LLMs (Towards Data Science)

How I keep up with papers with a mix of manual and AI-assisted reading

The post Reading Research Papers in the Age of LLMs appeared first on Towards Data Science.

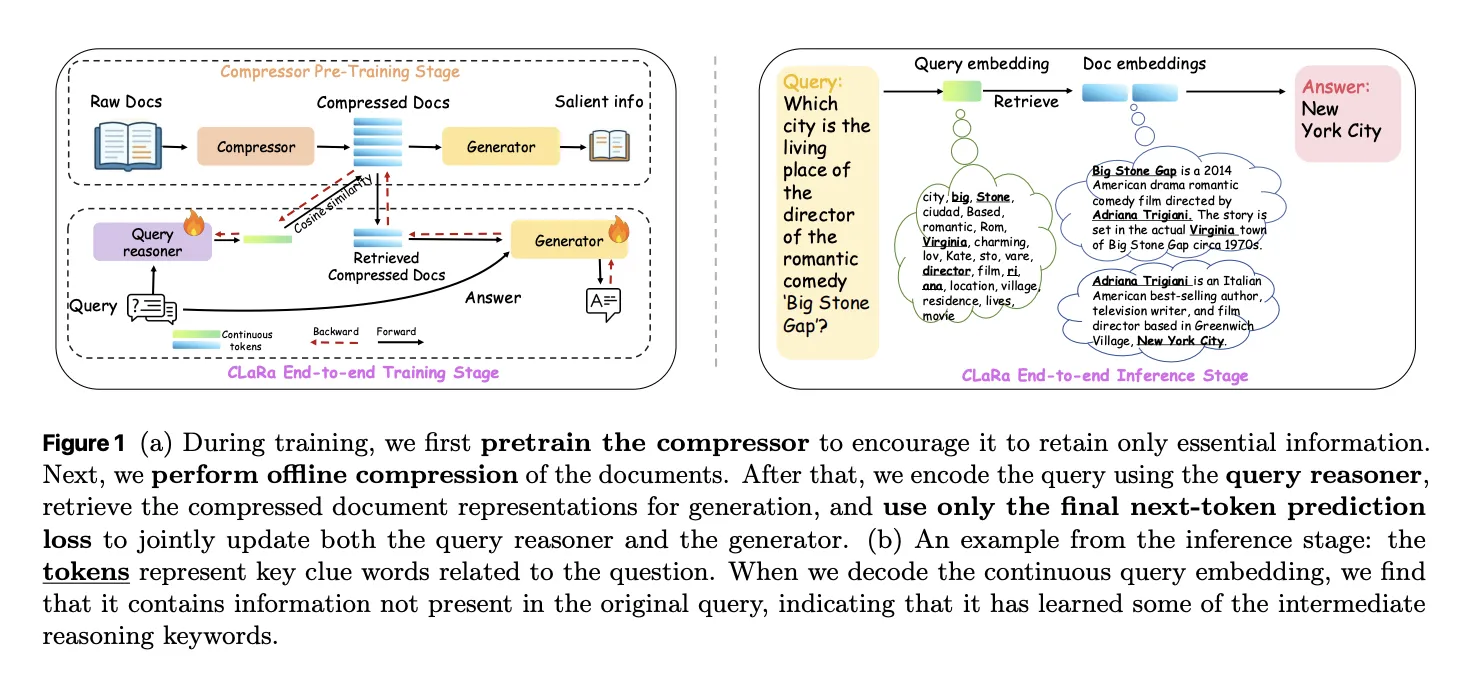

Apple Researchers Release CLaRa: A Continuous Latent Reasoning Framework for Compression‑Native RAG with 16x–128x Semantic Document Compression (MarkTechPost)

How do you keep RAG systems accurate and efficient when every query tries to stuff thousands of tokens into the context window and the retriever and generator are still optimized as two separate, disconnected systems? A team of researchers from Apple and the University of Edinburgh released CLaRa (Continuous Latent Reasoning; CLaRa-7B-Base, CLaRa-7B-Instruct and CLaRa-7B-E2E), a […]

The post Apple Researchers Release CLaRa: A Continuous Latent Reasoning Framework for Compression‑Native RAG with 16x–128x Semantic Document Compression appeared first on MarkTechPost.

A sprawling academic cheating network turbocharged by Google Ads that has generated nearly $25 million in revenue has curious ties to a Kremlin-connected oligarch whose Russian university builds drones for Russia’s war against Ukraine. The link between essay mills and Russian attack drones might seem improbable, but understanding it begins with a […]

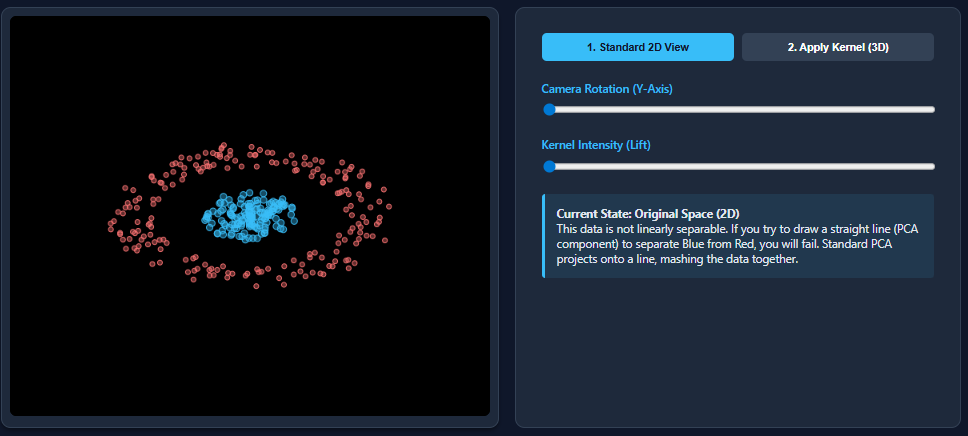

Kernel Principal Component Analysis (PCA): Explained with an Example (MarkTechPost)

Dimensionality reduction techniques like PCA work wonderfully when datasets are linearly separable, but they break down the moment nonlinear patterns appear. That’s exactly what happens with datasets such as two moons: PCA flattens the structure and mixes the classes together. Kernel PCA fixes this limitation by mapping the data into a higher-dimensional feature space where nonlinear […]

The post Kernel Principal Component Analysis (PCA): Explained with an Example appeared first on MarkTechPost.
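
As a rough illustration of the idea in this teaser, here is a minimal NumPy-only sketch that compares linear PCA with an RBF kernel PCA on a two-moons toy dataset. It is not the code from the linked article: the helper names (`make_two_moons`, `linear_pca`, `rbf_kernel_pca`), the RBF kernel choice, and `gamma=15.0` are all assumptions made for this example.

```python
import numpy as np


def make_two_moons(n_per_class=100, noise=0.05, seed=0):
    """Hypothetical helper: two interleaving half-circles with Gaussian noise."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, np.pi, n_per_class)
    upper = np.column_stack([np.cos(t), np.sin(t)])
    lower = np.column_stack([1.0 - np.cos(t), 0.5 - np.sin(t)])
    X = np.vstack([upper, lower])
    X = X + rng.normal(scale=noise, size=X.shape)
    y = np.repeat([0, 1], n_per_class)
    return X, y


def linear_pca(X, n_components=2):
    """Ordinary PCA: project centered data onto the top right singular vectors."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T


def rbf_kernel_pca(X, gamma=15.0, n_components=2):
    """Kernel PCA with an RBF kernel (gamma is an assumed, untuned value)."""
    # Pairwise squared Euclidean distances, clipped to avoid tiny negatives.
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (X @ X.T)
    K = np.exp(-gamma * np.maximum(sq_dists, 0.0))

    # Center the kernel matrix, i.e. center the data in feature space.
    n = K.shape[0]
    one_n = np.full((n, n), 1.0 / n)
    K_centered = K - one_n @ K - K @ one_n + one_n @ K @ one_n

    # eigh returns eigenvalues in ascending order; keep the largest ones.
    eigvals, eigvecs = np.linalg.eigh(K_centered)
    top = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[top], eigvecs[:, top]

    # Training-point projections are eigenvectors scaled by sqrt(eigenvalue).
    return eigvecs * np.sqrt(np.maximum(eigvals, 0.0))


X, y = make_two_moons()
Z_lin = linear_pca(X)       # a rotation of the input; the moons stay interleaved
Z_kpca = rbf_kernel_pca(X)  # nonlinear embedding of the same points
```

Plotting the first column of `Z_lin` and of `Z_kpca` colored by `y` is the usual way to see whether the kernel embedding pulls the two moons apart; how well it does depends on `gamma`, which would need tuning for a real dataset.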

How to Design a Fully Local Multi-Agent Orchestration System Using TinyLlama for Intelligent Task Decomposition and Autonomous Collaboration (MarkTechPost)

In this tutorial, we explore how we can orchestrate a team of specialized AI agents locally using an efficient manager-agent architecture powered by TinyLlama. We walk through how we build structured task decomposition, inter-agent collaboration, and autonomous reasoning loops without relying on any external APIs. By running everything directly through the transformers library, we create […]

The post How to Design a Fully Local Multi-Agent Orchestration System Using TinyLlama for Intelligent Task Decomposition and Autonomous Collaboration appeared first on MarkTechPost.
