Production-Grade Observability for AI Agents: A Minimal-Code, Configuration-First Approach (Towards Data Science)

LLM-as-a-Judge, regression testing, and end-to-end traceability of multi-agent LLM systems

The post Production-Grade Observability for AI Agents: A Minimal-Code, Configuration-First Approach appeared first on Towards Data Science.

5 Data Privacy Stories from 2025 Every Analyst Should Know (KDnuggets)

In this article, we look at 5 specific privacy stories from 2025 that changed how analysts work day to day, from the code they write to the reports they publish.

Gemini 3 Flash: frontier intelligence built for speed (Google DeepMind News)

Gemini 3 Flash offers frontier intelligence built for speed at a fraction of the cost.

Meta AI Releases SAM Audio: A State-of-the-Art Unified Model that Uses Intuitive and Multimodal Prompts for Audio Separation (MarkTechPost)

Meta has released SAM Audio, a prompt-driven audio separation model that targets a common editing bottleneck: isolating one sound from a real-world mix without building a custom model per sound class. Meta released 3 main sizes: sam-audio-small, sam-audio-base, and sam-audio-large. The model is available to download and to try in the Segment Anything […]

The post Meta AI Releases SAM Audio: A State-of-the-Art Unified Model that Uses Intuitive and Multimodal Prompts for Audio Separation appeared first on MarkTechPost.

The Zeroday Cloud hacking competition in London has awarded researchers $320,000 for demonstrating critical remote code execution vulnerabilities in components used in cloud infrastructure. […]

A new way to increase the capabilities of large language models (MIT News – Machine learning)

MIT-IBM Watson AI Lab researchers developed an expressive architecture that provides better state tracking and sequential reasoning in LLMs over long texts.

A “scientific sandbox” lets researchers explore the evolution of vision systems (MIT News – Machine learning)

The AI-powered tool could inform the design of better sensors and cameras for robots or autonomous vehicles.

Tracking and managing assets used in AI development with Amazon SageMaker AI (Artificial Intelligence)

In this post, we’ll explore the new capabilities and core concepts that help organizations track and manage model development and deployment lifecycles. We will show you how the features are configured to train models with automatic end-to-end lineage, from dataset upload and versioning to model fine-tuning, evaluation, and seamless endpoint deployment.

Track machine learning experiments with MLflow on Amazon SageMaker using Snowflake integration (Artificial Intelligence)

In this post, we demonstrate how to integrate Amazon SageMaker managed MLflow as a central repository to log machine learning experiments and provide a unified system for monitoring their progress.

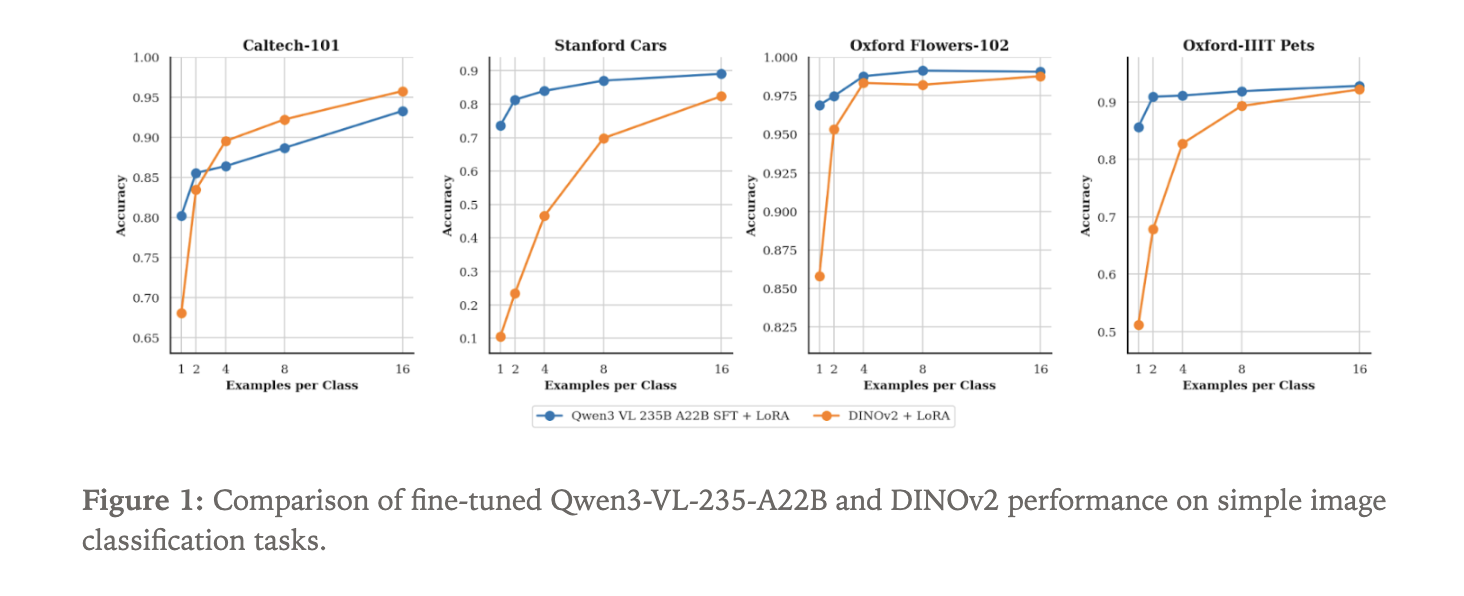

Thinking Machines Lab Makes Tinker Generally Available: Adds Kimi K2 Thinking And Qwen3-VL Vision Input (MarkTechPost)

Thinking Machines Lab has moved its Tinker training API into general availability and added 3 major capabilities: support for the Kimi K2 Thinking reasoning model, OpenAI-compatible sampling, and image input through Qwen3-VL vision-language models. For AI engineers, this turns Tinker into a practical way to fine-tune frontier models without building distributed training […]

The post Thinking Machines Lab Makes Tinker Generally Available: Adds Kimi K2 Thinking And Qwen3-VL Vision Input appeared first on MarkTechPost.
