A typosquatted domain impersonating the Microsoft Activation Scripts (MAS) tool was used to distribute malicious PowerShell scripts that infect Windows systems with the ‘Cosmali Loader’. […]

OpenAI is testing a new ChatGPT feature called “Skills,” which will be similar to Claude’s feature, also called Skills. […]

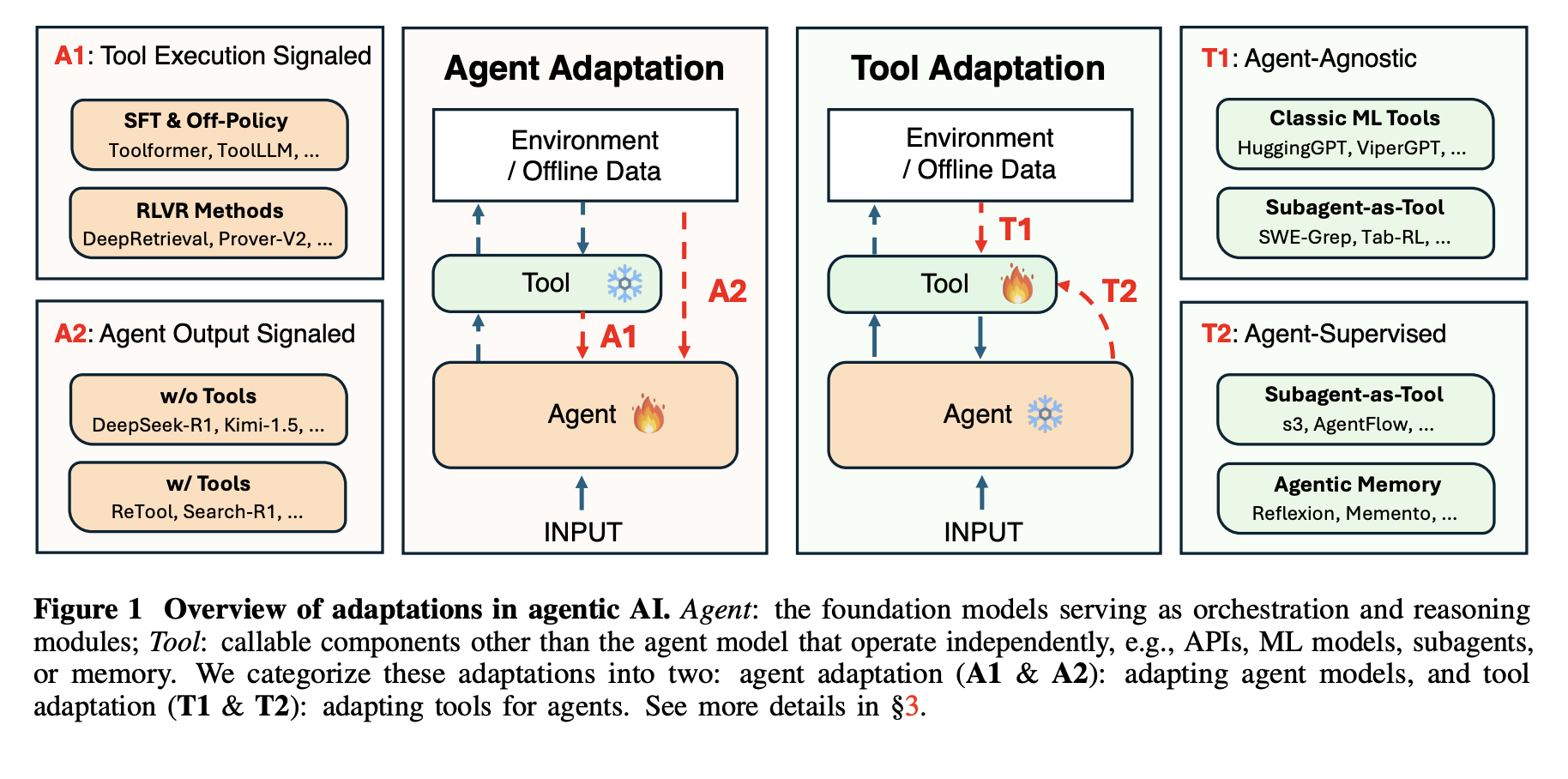

This AI Paper from Stanford and Harvard Explains Why Most ‘Agentic AI’ Systems Feel Impressive in Demos and then Completely Fall Apart in Real Use (MarkTechPost)

Agentic AI systems sit on top of large language models and connect to tools, memory, and external environments. They already support scientific discovery, software development, and clinical research, yet they still struggle with unreliable tool use, weak long-horizon planning, and poor generalization. The latest research paper ‘Adaptation of Agentic AI’ from Stanford, Harvard, UC […]

The post This AI Paper from Stanford and Harvard Explains Why Most ‘Agentic AI’ Systems Feel Impressive in Demos and then Completely Fall Apart in Real Use appeared first on MarkTechPost.

The Machine Learning “Advent Calendar” Day 24: Transformers for Text in Excel (Towards Data Science)

An intuitive, step-by-step look at how Transformers use self-attention to turn static word embeddings into contextual representations, illustrated with simple examples and an Excel-friendly walkthrough.

The post The Machine Learning “Advent Calendar” Day 24: Transformers for Text in Excel appeared first on Towards Data Science.
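
As a rough illustration of the idea in this summary, the sketch below runs single-head self-attention over toy two-dimensional embeddings. It is not taken from the article: the embedding values are made up, and the query, key, and value projections are assumed to be the identity so the arithmetic stays easy to follow by hand (or in a spreadsheet).

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(embeddings):
    """Single-head self-attention with identity projections (Q = K = V).

    Returns (attention weights, contextual vectors), one row per token.
    """
    d = len(embeddings[0])
    weights, outputs = [], []
    for q in embeddings:
        # Scaled dot-product score of this token against every token.
        scores = [dot(q, k) / math.sqrt(d) for k in embeddings]
        w = softmax(scores)
        weights.append(w)
        # Contextual vector = attention-weighted average of the value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, embeddings))
                        for i in range(d)])
    return weights, outputs

# Hypothetical static embeddings: "bank" has the same vector in both sentences.
river, money, bank = [1.0, 0.0], [0.0, 1.0], [0.5, 0.5]

w_river, ctx_river = self_attention([river, bank])  # "river bank"
w_money, ctx_money = self_attention([money, bank])  # "money bank"

print(ctx_river[1])  # contextual vector for "bank" next to "river"
print(ctx_money[1])  # contextual vector for "bank" next to "money"
```

The static vector for "bank" is identical in both inputs, but its output vector is pulled toward whichever neighbour it attends to, which is the sense in which the representation becomes contextual.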

Programmatically creating an IDP solution with Amazon Bedrock Data Automation (Artificial Intelligence)

In this post, we explore how to programmatically create an IDP solution that uses Strands SDK, Amazon Bedrock AgentCore, Amazon Bedrock Knowledge Base, and Bedrock Data Automation (BDA). This solution is provided through a Jupyter notebook that enables users to upload multi-modal business documents and extract insights using BDA as a parser to retrieve relevant chunks and augment a prompt to a foundation model (FM).

AI agent-driven browser automation for enterprise workflow management (Artificial Intelligence)

Enterprise organizations increasingly rely on web-based applications for critical business processes, yet many workflows remain manually intensive, creating operational inefficiencies and compliance risks. Despite significant technology investments, knowledge workers routinely navigate between eight and twelve different web applications during standard workflows, constantly switching contexts and manually transferring information between systems. Data entry and validation tasks […]

Agentic QA automation using Amazon Bedrock AgentCore Browser and Amazon Nova Act (Artificial Intelligence)

In this post, we explore how agentic QA automation addresses these challenges and walk through a practical example using Amazon Bedrock AgentCore Browser and Amazon Nova Act to automate testing for a sample retail application.

Optimizing LLM inference on Amazon SageMaker AI with BentoML’s LLM-Optimizer (Artificial Intelligence)

In this post, we demonstrate how to optimize large language model (LLM) inference on Amazon SageMaker AI using BentoML’s LLM-Optimizer to systematically identify the best serving configurations for your workload.

Schoenfeld’s Anatomy of Mathematical Reasoning by Language Models (cs.AI updates on arXiv.org)

arXiv:2512.19995v1 Announce Type: cross

Abstract: Large language models increasingly expose reasoning traces, yet their underlying cognitive structure and steps remain difficult to identify and analyze beyond surface-level statistics. We adopt Schoenfeld’s Episode Theory as an inductive, intermediate-scale lens and introduce ThinkARM (Anatomy of Reasoning in Models), a scalable framework that explicitly abstracts reasoning traces into functional reasoning steps such as Analysis, Explore, Implement, Verify, etc. When applied to mathematical problem solving by diverse models, this abstraction reveals reproducible thinking dynamics and structural differences between reasoning and non-reasoning models, which are not apparent from token-level views. We further present two diagnostic case studies showing that exploration functions as a critical branching step associated with correctness, and that efficiency-oriented methods selectively suppress evaluative feedback steps rather than uniformly shortening responses. Together, our results demonstrate that episode-level representations make reasoning steps explicit, enabling systematic analysis of how reasoning is structured, stabilized, and altered in modern language models.

Decoupling the “What” and “Where” With Polar Coordinate Positional Embeddings (cs.AI updates on arXiv.org)

arXiv:2509.10534v2 Announce Type: replace-cross

Abstract: The attention mechanism in a Transformer architecture matches key to query based on both content — the what — and position in a sequence — the where. We present an analysis indicating that what and where are entangled in the popular RoPE rotary position embedding. This entanglement can impair performance particularly when decisions require independent matches on these two factors. We propose an improvement to RoPE, which we call Polar Coordinate Position Embeddings or PoPE, that eliminates the what-where confound. PoPE is far superior on a diagnostic task requiring indexing solely by position or by content. On autoregressive sequence modeling in music, genomic, and natural language domains, Transformers using PoPE as the positional encoding scheme outperform baselines using RoPE with respect to evaluation loss (perplexity) and downstream task performance. On language modeling, these gains persist across model scale, from 124M to 774M parameters. Crucially, PoPE shows strong zero-shot length extrapolation capabilities compared not only to RoPE but even a method designed for extrapolation, YaRN, which requires additional fine tuning and frequency interpolation.
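
One way to see the what-where entanglement the abstract attributes to RoPE is to write standard RoPE with complex numbers: each pair of dimensions becomes one complex value, and a position rotates it by a position-dependent angle. The sketch below is an illustration of standard RoPE only, not the paper's analysis and not an implementation of PoPE; the frequencies and vectors are made-up values.

```python
import cmath

def rope(vec, pos, thetas):
    """Apply RoPE: rotate complex component j by the angle pos * thetas[j]."""
    return [z * cmath.exp(1j * pos * t) for z, t in zip(vec, thetas)]

def score(q, k):
    """Attention logit as a real inner product: Re(sum_j q_j * conj(k_j))."""
    return sum(a * b.conjugate() for a, b in zip(q, k)).real

thetas = [1.0, 0.1]                      # hypothetical rotation frequencies
q = [complex(1.0, 0.0), complex(0.5, 0.5)]
k = [complex(0.0, 1.0), complex(0.5, -0.5)]

# Known RoPE property: the logit depends only on the relative offset (3 here).
s_near = score(rope(q, 5, thetas), rope(k, 2, thetas))
s_far = score(rope(q, 13, thetas), rope(k, 10, thetas))

# Entanglement: changing the key's *content* phase by thetas[j] per component
# gives the same logit as moving the unchanged key one *position* later.
k_rephased = [z * cmath.exp(1j * t) for z, t in zip(k, thetas)]
s_content = score(rope(q, 5, thetas), rope(k_rephased, 2, thetas))
s_position = score(rope(q, 5, thetas), rope(k, 3, thetas))

print(abs(s_near - s_far) < 1e-9)
print(abs(s_content - s_position) < 1e-9)
```

Each term of the logit has the form |q_j||k_j| cos((m - n)θ_j + φ_q - φ_k), so the content phases and the positional offset add inside the same angle, and the score alone cannot tell a content change from a position change.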
