Patricia Voight, CISO at Webster Bank, shares her expertise on advancing cybersecurity careers, combating financial crimes, and championing diversity in a rapidly changing industry.

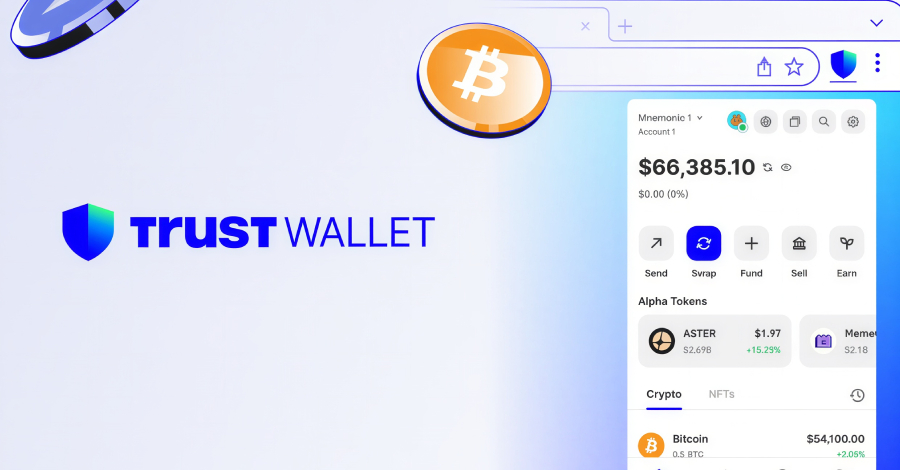

Trust Wallet is urging users to update its Google Chrome extension to the latest version following what it described as a “security incident” that led to the loss of approximately $7 million. The issue, the multi‑chain, non‑custodial cryptocurrency wallet service said, impacts version 2.68. The extension has about one million users, according to the Chrome […]

Grubhub users received fraudulent messages, apparently from a company email address, promising a tenfold bitcoin payout in return for a transfer to a specified wallet. […]

Several users of the Trust Wallet Chrome extension report having their cryptocurrency wallets drained after installing a compromised extension update released on December 24, prompting an urgent response from the company and warnings to affected users. Simultaneously, BleepingComputer observed a phishing domain launched by hackers. […]

From Gemma 3 270M to FunctionGemma, How Google AI Built a Compact Function Calling Specialist for Edge Workloads (MarkTechPost)

Google has released FunctionGemma, a specialized version of the Gemma 3 270M model that is trained specifically for function calling and designed to run as an edge agent that maps natural language to executable API actions. But what is FunctionGemma? FunctionGemma is a 270M-parameter, text-only transformer based on Gemma 3 270M. It keeps […]

This tiny chip could change the future of quantum computing (Artificial Intelligence News, ScienceDaily)

A new microchip-sized device could dramatically accelerate the future of quantum computing. It controls laser frequencies with extreme precision while using far less power than today’s bulky systems. Crucially, it’s made with standard chip manufacturing, meaning it can be mass-produced instead of custom-built. This opens the door to quantum machines far larger and more powerful than anything possible today.

Think Your Python Code Is Slow? Stop Guessing and Start Measuring (Towards Data Science)

A hands-on tour of using cProfile + SnakeViz to find (and fix) the “hot” paths in your code.
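
As a rough illustration of the measuring approach the article describes, the sketch below profiles one function with the standard-library `cProfile` and `pstats` modules. It is not taken from the article: `slow_sum_of_squares` and `profile_call` are hypothetical names invented for this example, and the workload is deliberately naive.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    """Hypothetical workload: builds a full list before summing it."""
    total = 0
    for value in [i * i for i in range(n)]:
        total += value
    return total


def profile_call(func, *args):
    """Run func under cProfile and return (result, text report)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)

    # Render the five most expensive entries, sorted by cumulative time.
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(5)
    return result, buffer.getvalue()


result, report = profile_call(slow_sum_of_squares, 100_000)
print(report)
```

To explore the same data visually, one option is to save it with `profiler.dump_stats("profile.prof")` (the file name is arbitrary) and open that file with the `snakeviz` command-line tool, assuming SnakeViz is installed.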

Agentic AI for Scaling Diagnosis and Care in Neurodegenerative Disease (cs.AI updates on arXiv.org)

arXiv:2502.06842v4 Announce Type: replace-cross

Abstract: United States healthcare systems are struggling to meet the growing demand for neurological care, particularly in Alzheimer’s disease and related dementias (ADRD). Generative AI built on large language models (LLMs) now enables agentic AI systems that can enhance clinician capabilities to approach specialist-level assessment and decision-making in ADRD care at scale. This article presents a comprehensive six-phase roadmap for responsible design and integration of such systems into ADRD care: (1) high-quality standardized data collection across modalities; (2) decision support; (3) clinical integration enhancing workflows; (4) rigorous validation and monitoring protocols; (5) continuous learning through clinical feedback; and (6) robust ethics and risk management frameworks. This human-centered approach optimizes clinicians’ capabilities in comprehensive data collection, interpretation of complex clinical information, and timely application of relevant medical knowledge while prioritizing patient safety, healthcare equity, and transparency. Though focused on ADRD, these principles offer broad applicability across medical specialties facing similar systemic challenges.

Emergent temporal abstractions in autoregressive models enable hierarchical reinforcement learning (cs.AI updates on arXiv.org)

arXiv:2512.20605v2 Announce Type: replace-cross

Abstract: Large-scale autoregressive models pretrained on next-token prediction and finetuned with reinforcement learning (RL) have achieved unprecedented success on many problem domains. During RL, these models explore by generating new outputs, one token at a time. However, sampling actions token-by-token can result in highly inefficient learning, particularly when rewards are sparse. Here, we show that it is possible to overcome this problem by acting and exploring within the internal representations of an autoregressive model. Specifically, to discover temporally abstract actions, we introduce a higher-order, non-causal sequence model whose outputs control the residual stream activations of a base autoregressive model. On grid world and MuJoCo-based tasks with hierarchical structure, we find that the higher-order model learns to compress long activation sequence chunks onto internal controllers. Critically, each controller executes a sequence of behaviorally meaningful actions that unfold over long timescales and are accompanied by a learned termination condition, such that composing multiple controllers over time leads to efficient exploration on novel tasks. We show that direct internal controller reinforcement, a process we term “internal RL”, enables learning from sparse rewards in cases where standard RL finetuning fails. Our results demonstrate the benefits of latent action generation and reinforcement in autoregressive models, suggesting internal RL as a promising avenue for realizing hierarchical RL within foundation models.

WGLE: Backdoor-free and Multi-bit Black-box Watermarking for Graph Neural Networks (cs.AI updates on arXiv.org)

arXiv:2506.08602v2 Announce Type: replace-cross

Abstract: Graph Neural Networks (GNNs) are increasingly deployed in real-world applications, making ownership verification critical to protect their intellectual property against model theft. Fingerprinting and black-box watermarking are two main methods. However, the former relies on determining model similarity, which is computationally expensive and prone to ownership collisions after model post-processing. The latter embeds backdoors, exposing watermarked models to the risk of backdoor attacks. Moreover, both previous methods enable ownership verification but do not convey additional information about the copy model. If the owner has multiple models, each model requires a distinct trigger graph.

To address these challenges, this paper proposes WGLE, a novel black-box watermarking paradigm for GNNs that enables embedding a multi-bit string in GNN models without using backdoors. WGLE builds on a key insight we term Layer-wise Distance Difference on an Edge (LDDE), which quantifies the difference between the feature distance and the prediction distance of two connected nodes in a graph. By assigning unique LDDE values to the edges and employing the LDDE sequence as the watermark, WGLE supports multi-bit capacity without relying on backdoor mechanisms. We evaluate WGLE on six public datasets across six mainstream GNN architectures, and compare WGLE with state-of-the-art GNN watermarking and fingerprinting methods. WGLE achieves 100% ownership verification accuracy, with an average fidelity degradation of only 1.41%. Additionally, WGLE exhibits robust resilience against potential attacks. The code is available in the repository.
