A Theoretical Framework for Environmental Similarity and Vessel Mobility as Coupled Predictors of Marine Invasive Species Pathways

cs.AI updates on arXiv.org

arXiv:2511.03499v1 Announce Type: cross

Abstract: Marine invasive species spread through global shipping and generate substantial ecological and economic impacts. Traditional risk assessments require detailed records of ballast water and traffic patterns, which are often incomplete, limiting global coverage. This work advances a theoretical framework that quantifies invasion risk by combining environmental similarity across ports with observed and forecasted maritime mobility. Climate-based feature representations characterize each port’s marine conditions, while mobility networks derived from Automatic Identification System data capture vessel flows and potential transfer pathways. Clustering and metric learning reveal climate analogues and enable the estimation of species survival likelihood along shipping routes. A temporal link prediction model captures how traffic patterns may change under shifting environmental conditions. The resulting fusion of environmental similarity and predicted mobility provides exposure estimates at the port and voyage levels, supporting targeted monitoring, routing adjustments, and management interventions.
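The abstract does not say how environmental similarity and predicted mobility are fused, so the following is only a minimal sketch under assumed choices: cosine similarity over hypothetical climate feature vectors, multiplied by a hypothetical link-prediction probability for each voyage, and summed over inbound routes for a port-level score. The function names `cosine_similarity`, `voyage_exposure`, and `port_exposure` are illustrative and do not come from the paper.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero vector carries no climate signal
    return dot / (norm_a * norm_b)


def voyage_exposure(src_climate, dst_climate, link_probability):
    """Assumed voyage-level score: climate similarity times predicted traffic.

    link_probability stands in for the output of a temporal link
    prediction model (hypothetical input, in [0, 1]).
    """
    similarity = max(0.0, cosine_similarity(src_climate, dst_climate))
    return similarity * link_probability


def port_exposure(dst_climate, inbound):
    """Assumed port-level score: sum of voyage scores over inbound routes.

    inbound is a list of (source_climate_vector, link_probability) pairs.
    """
    return sum(voyage_exposure(src, dst_climate, p) for src, p in inbound)


if __name__ == "__main__":
    # Toy features, e.g. normalized (temperature, salinity); values are made up.
    port = [0.8, 0.6]
    routes = [([0.7, 0.7], 0.9), ([0.1, 0.9], 0.4)]
    print(port_exposure(port, routes))
```

Under this assumed product form, a route scores high only when the two ports are climatically alike and traffic between them is likely, which matches the coupling the abstract describes in words.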

10 Polars One-Liners for Speeding Up Data Workflows

KDnuggets

Check out these 10 Polars one-liners intended to speed up tasks you normally perform with Pandas.

MIT researchers propose a new model for legible, modular software

MIT News – Machine learning

The coding framework uses modular concepts and simple synchronization rules to make software clearer, safer, and easier for LLMs to generate.

Multi-Agent SQL Assistant, Part 2: Building a RAG Manager

Towards Data Science

A hands-on guide to comparing multiple RAG strategies: Keyword, FAISS, and Chroma

The post Multi-Agent SQL Assistant, Part 2: Building a RAG Manager appeared first on Towards Data Science.

Using Multi-modal Large Language Model to Boost Fireworks Algorithm’s Ability in Settling Challenging Optimization Tasks

cs.AI updates on arXiv.org

arXiv:2511.03137v1 Announce Type: new

Abstract: As optimization problems grow increasingly complex and diverse, advancements in optimization techniques and paradigm innovations hold significant importance. The challenges posed by optimization problems are primarily manifested in their non-convexity, high-dimensionality, black-box nature, and other unfavorable characteristics. Traditional zero-order or first-order methods, which are often characterized by low efficiency, inaccurate gradient information, and insufficient utilization of optimization information, are ill-equipped to address these challenges effectively. In recent years, the rapid development of large language models (LLMs) has led to substantial improvements in their language understanding and code generation capabilities. Consequently, the design of optimization algorithms leveraging large language models has garnered increasing attention from researchers. In this study, we choose the fireworks algorithm (FWA) as the basic optimizer and propose a novel approach to assist the design of the FWA by incorporating a multi-modal large language model (MLLM). To put it simply, we propose the concept of Critical Part (CP), which extends FWA to complex high-dimensional tasks, and further utilizes the information in the optimization process with the help of the multi-modal characteristics of large language models. We focus on two specific tasks: the traveling salesman problem (TSP) and the electronic design automation (EDA) problem. The experimental results show that FWAs generated under our new framework have achieved or surpassed SOTA results on many problem instances.

Traversal Verification for Speculative Tree Decoding

cs.AI updates on arXiv.org

arXiv:2505.12398v2 Announce Type: replace-cross

Abstract: Speculative decoding is a promising approach for accelerating large language models. The primary idea is to use a lightweight draft model to speculate the output of the target model for multiple subsequent timesteps, and then verify them in parallel to determine whether the drafted tokens should be accepted or rejected. To enhance acceptance rates, existing frameworks typically construct token trees containing multiple candidates in each timestep. However, their reliance on token-level verification mechanisms introduces two critical limitations: First, the probability distribution of a sequence differs from that of individual tokens, leading to suboptimal acceptance length. Second, current verification schemes begin from the root node and proceed layer by layer in a top-down manner. Once a parent node is rejected, all its child nodes should be discarded, resulting in inefficient utilization of speculative candidates. This paper introduces Traversal Verification, a novel speculative decoding algorithm that fundamentally rethinks the verification paradigm through leaf-to-root traversal. Our approach considers the acceptance of the entire token sequence from the current node to the root, and preserves potentially valid subsequences that would be prematurely discarded by existing methods. We theoretically prove that the probability distribution obtained through Traversal Verification is identical to that of the target model, guaranteeing lossless inference while achieving substantial acceleration gains. Experimental results across different large language models and multiple tasks show that our method consistently improves acceptance length and throughput over existing methods.
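For context, the token-level verification that the abstract contrasts against is usually the standard speculative sampling rule: accept a drafted token with probability min(1, p/q), and on rejection resample from the normalized residual max(p - q, 0). The sketch below shows that baseline rule for a single token only. It is not Traversal Verification, whose details the abstract does not give, and the name `verify_token` and the toy distributions are illustrative assumptions.

```python
import random


def verify_token(p, q, token, rng):
    """Token-level speculative sampling check for one drafted token.

    p: target-model distribution over the vocabulary (list of floats)
    q: draft-model distribution over the same vocabulary
    token: index that was sampled from q (so q[token] > 0)
    Returns (accepted, output_token).
    """
    accept_prob = min(1.0, p[token] / q[token])
    if rng.random() < accept_prob:
        return True, token
    # Rejected: resample from the residual mass where the target exceeds the draft.
    residual = [max(pi - qi, 0.0) for pi, qi in zip(p, q)]
    replacement = rng.choices(range(len(p)), weights=residual)[0]
    return False, replacement


if __name__ == "__main__":
    rng = random.Random(0)
    p = [0.7, 0.2, 0.1]  # toy target distribution (made up)
    q = [0.3, 0.3, 0.4]  # toy draft distribution (made up)
    counts = [0, 0, 0]
    for _ in range(20000):
        drafted = rng.choices(range(3), weights=q)[0]
        _, out = verify_token(p, q, drafted, rng)
        counts[out] += 1
    # Empirical output frequencies should be close to p.
    print([c / 20000 for c in counts])
```

The output token follows the target distribution p regardless of q, which is the sense in which such schemes are called lossless; the draft quality only affects how often tokens are accepted.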

Inverse Entropic Optimal Transport Solves Semi-supervised Learning via Data Likelihood Maximization

cs.AI updates on arXiv.org

arXiv:2410.02628v4 Announce Type: replace-cross

Abstract: Learning conditional distributions $\pi^*(\cdot|x)$ is a central problem in machine learning, which is typically approached via supervised methods with paired data $(x,y) \sim \pi^*$. However, acquiring paired data samples is often challenging, especially in problems such as domain translation. This necessitates the development of semi-supervised models that utilize both limited paired data and additional unpaired i.i.d. samples $x \sim \pi^*_x$ and $y \sim \pi^*_y$ from the marginal distributions. The usage of such combined data is complex and often relies on heuristic approaches. To tackle this issue, we propose a new learning paradigm that integrates both paired and unpaired data seamlessly using the data likelihood maximization techniques. We demonstrate that our approach also connects intriguingly with inverse entropic optimal transport (OT). This finding allows us to apply recent advances in computational OT to establish an end-to-end learning algorithm to get $\pi^*(\cdot|x)$. In addition, we derive the universal approximation property, demonstrating that our approach can theoretically recover true conditional distributions with arbitrarily small error. Furthermore, we demonstrate through empirical tests that our method effectively learns conditional distributions using paired and unpaired data simultaneously.

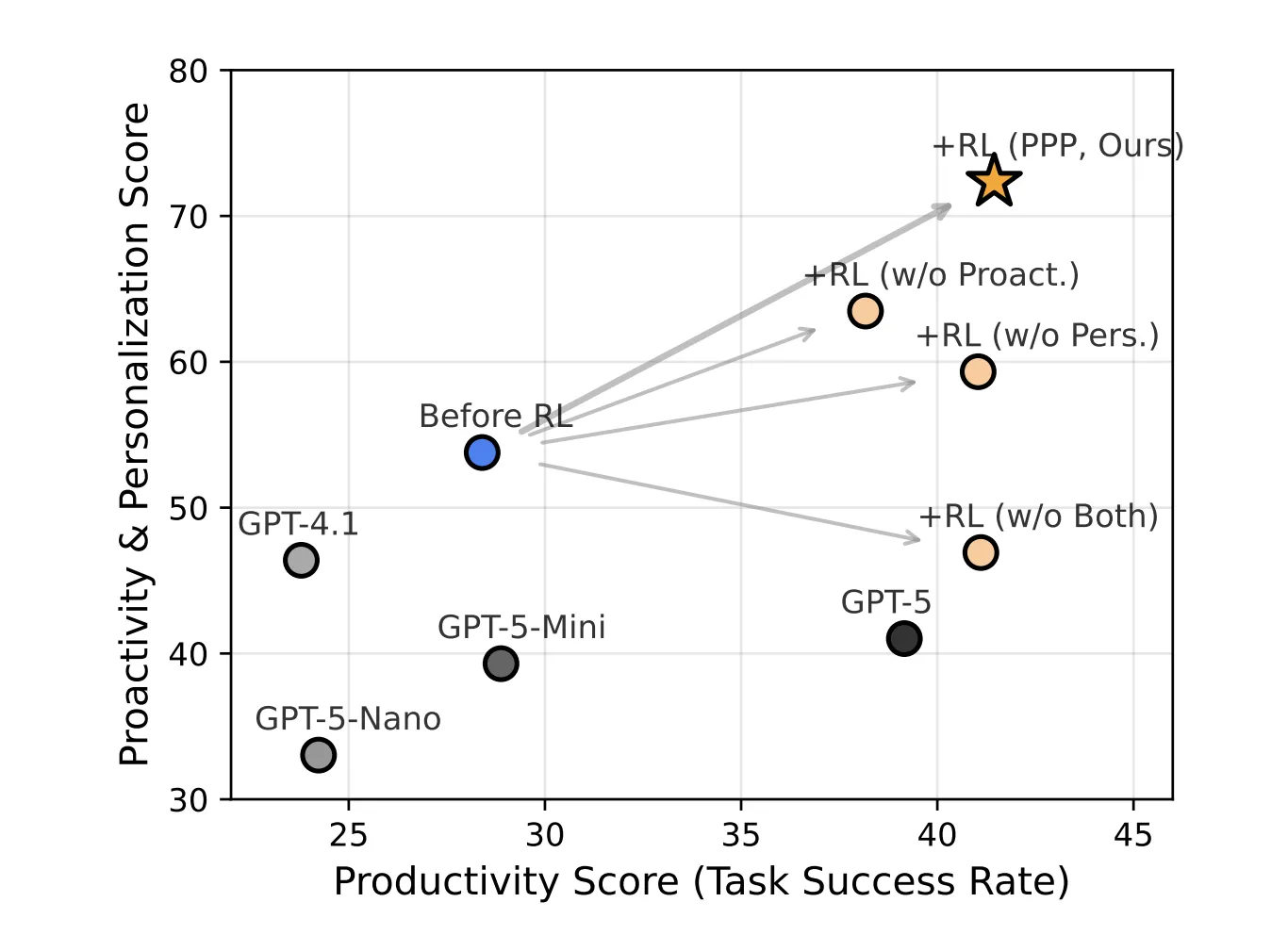

CMU Researchers Introduce PPP and UserVille To Train Proactive And Personalized LLM Agents

MarkTechPost

Most LLM agents are tuned to maximize task success. They resolve GitHub issues or answer deep research queries, but they do not reason carefully about when to ask the user questions or how to respect different interaction preferences. How can we design LLM agents that know when to ask better questions and adapt their behavior

The post CMU Researchers Introduce PPP and UserVille To Train Proactive And Personalized LLM Agents appeared first on MarkTechPost.

Apple plans big Siri update with help from Google AI

AI News

Apple is planning to use a custom version of Google’s Gemini model to support a major upgrade to Siri, according to Bloomberg’s Mark Gurman. The company may pay Google about $1 billion each year for access to technology that can create summaries and handle planning tasks. Bloomberg says Apple will run the custom model on

The post Apple plans big Siri update with help from Google AI appeared first on AI News.

A Proprietary Model-Based Safety Response Framework for AI Agents

cs.AI updates on arXiv.org

arXiv:2511.03138v1 Announce Type: new

Abstract: With the widespread application of Large Language Models (LLMs), their associated security issues have become increasingly prominent, severely constraining their trustworthy deployment in critical domains. This paper proposes a novel safety response framework designed to systematically safeguard LLMs at both the input and output levels. At the input level, the framework employs a supervised fine-tuning-based safety classification model. Through a fine-grained four-tier taxonomy (Safe, Unsafe, Conditionally Safe, Focused Attention), it performs precise risk identification and differentiated handling of user queries, significantly enhancing risk coverage and business scenario adaptability, and achieving a risk recall rate of 99.3%. At the output level, the framework integrates Retrieval-Augmented Generation (RAG) with a specifically fine-tuned interpretation model, ensuring all responses are grounded in a real-time, trustworthy knowledge base. This approach eliminates information fabrication and enables result traceability. Experimental results demonstrate that our proposed safety control model achieves a significantly higher safety score on public safety evaluation benchmarks compared to the baseline model, TinyR1-Safety-8B. Furthermore, on our proprietary high-risk test set, the framework’s components attained a perfect 100% safety score, validating their exceptional protective capabilities in complex risk scenarios. This research provides an effective engineering pathway for building high-security, high-trust LLM applications.
